Vision and language navigation (VLN) combines visual perception with natural language understanding to guide agents through 3D environments. The goal is to allow agents to follow human-like instructions and navigate complex spaces efficiently. These advances have potential in robotics, augmented reality, and smart assistant technologies, where linguistic instructions guide interaction with physical spaces.

The central problem in VLN research is the lack of high-quality annotated datasets that combine navigation trajectories with precise natural language instructions. Annotating these data sets manually requires significant resources, expertise, and effort, making the process costly and time-consuming. Furthermore, these annotations often do not provide the linguistic richness and fidelity necessary to generalize models across diverse environments, limiting their effectiveness in real-world applications.

Existing solutions are based on synthetic data generation and environment augmentation. Synthetic data is generated using path-to-instruction models, while simulators diversify environments. However, these methods often need to improve quality, resulting in misaligned data between language and navigation trajectories. This misalignment results in suboptimal agent performance. The problem is further complicated by metrics that inadequately evaluate the semantic and directional alignment of instructions with their corresponding trajectories, thus challenging quality control.

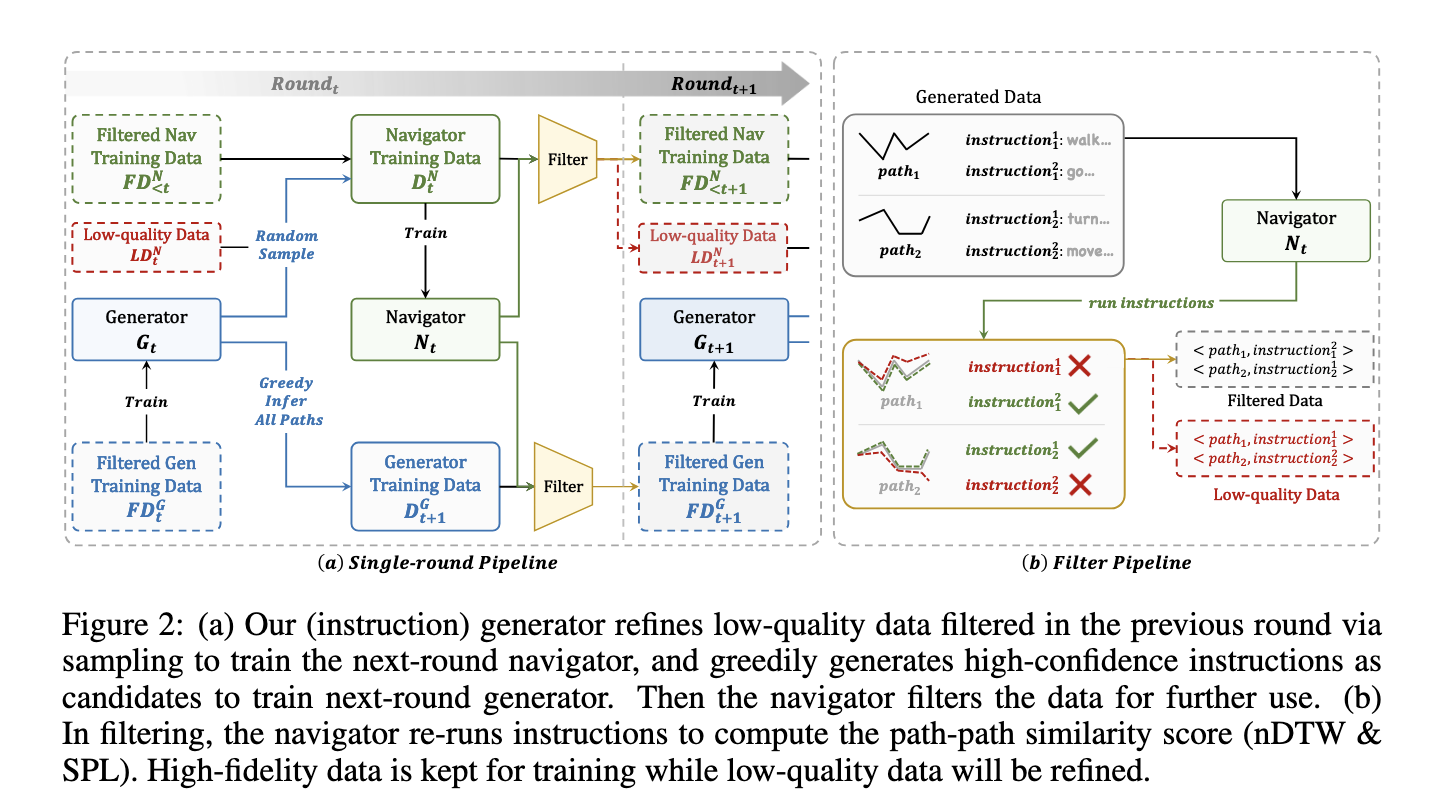

Researchers from Shanghai ai Lab, UNC Chapel Hill, Adobe Research, and Nanjing University proposed the Self-Refining Data Flywheel (SRDF), a system designed to iteratively improve both the dataset and the models through mutual collaboration between an instruction generator and a browser. This fully automated method eliminates the need for human annotations. From a small set of high-quality human-annotated data, the SRDF system generates synthetic instructions and uses them to train a base browser. The browser then evaluates the fidelity of these instructions and filters out low-quality data to train a better generator in subsequent iterations. This iterative refinement ensures continuous improvement in both data quality and model performance.

The SRDF system consists of two key components: an instruction generator and a browser. The generator creates synthetic navigation instructions from trajectories using advanced multimodal language models. The navigator, in turn, evaluates these instructions by measuring how accurately it can follow the generated routes. High-quality data is identified based on strict fidelity metrics, such as path length-weighted success (SPL) and normalized dynamic time warp (nDTW). Poor quality data is regenerated or excluded, ensuring that only reliable, highly aligned data is used for training. Over three iterations, the system refines the data set, which ultimately contains 20 million high-fidelity trajectory-instruction pairs spanning 860 diverse environments.

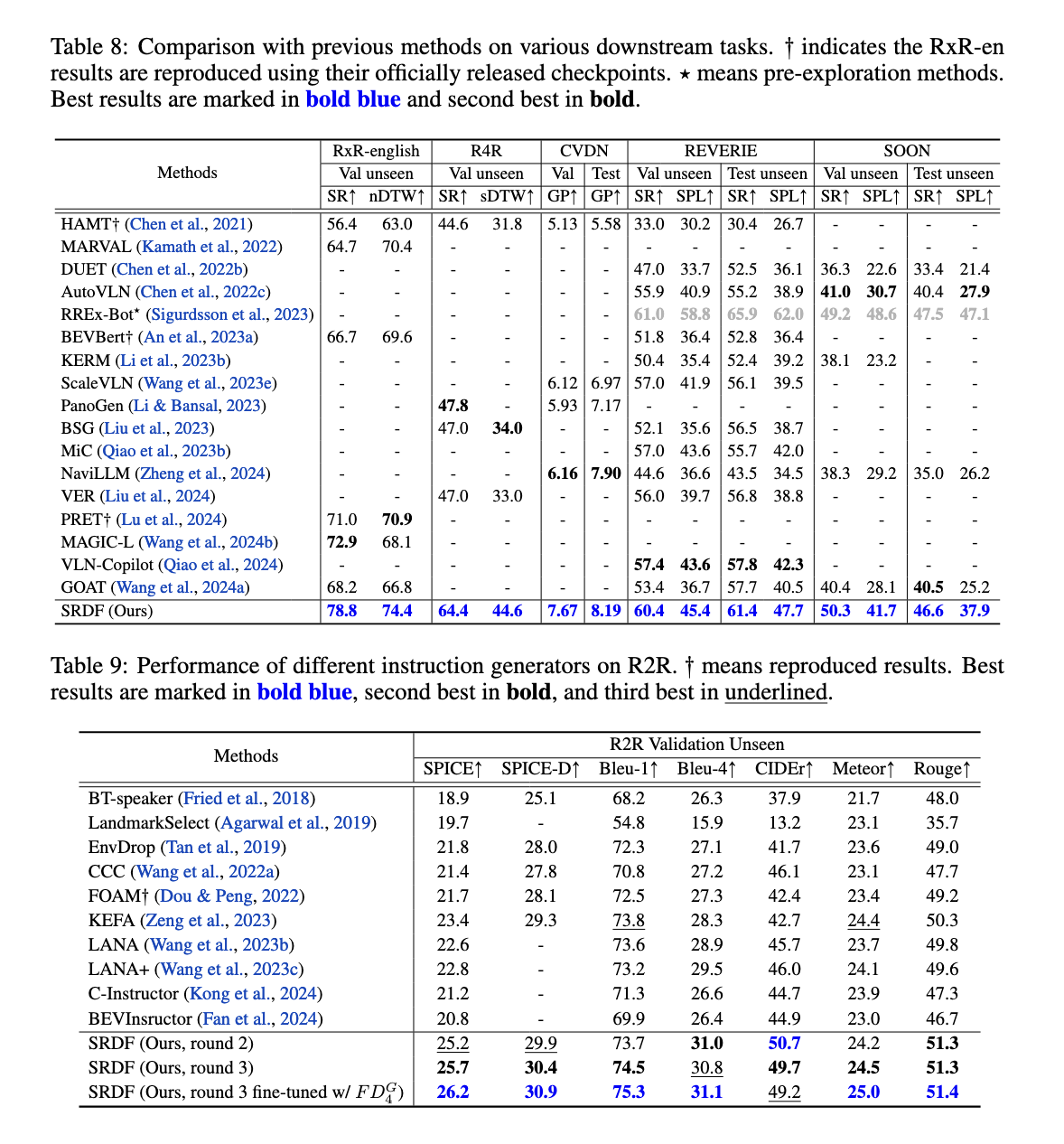

The SRDF system demonstrated exceptional performance improvements across several metrics and benchmarks. On the Room-to-Room (R2R) dataset, the SPL metric for the browser increased from 70% to an unprecedented 78%, surpassing the human benchmark of 76%. This is the first case where a VLN agent exceeds human-level navigation accuracy. The instruction generator also achieved impressive results, with SPICE scores increasing from 23.5 to 26.2, outperforming all previous vision and language navigation instruction generation methods. Furthermore, the data generated by SRDF facilitated superior generalization in downstream tasks, including long-term navigation (R4R) and dialogue-based navigation (CVDN), achieving state-of-the-art performance on all tested data sets.

Specifically, the system excelled in long-horizon navigation, achieving a 16.6% improvement in success rate on the R4R data set. The CVDN dataset significantly improved the goal progress metric, outperforming all previous models. Additionally, the scalability of SRDF was evident as the instruction generator steadily improved with larger data sets and diverse environments, ensuring strong performance across various tasks and benchmarks. The researchers also reported greater diversity and richness of instruction, with more than 10,000 unique words incorporated into the SRDF-generated data set, addressing the vocabulary limitations of previous data sets.

The SRDF approach addresses the long-standing challenge of data sparsity in VLNs by automating data set refinement. Iterative collaboration between the browser and the instruction generator ensures continuous improvement of both components, resulting in high-quality, highly aligned data sets. This innovative method has set a new standard in VLN research, showing the critical role of data quality and alignment in advancing embodied ai. With its ability to surpass human performance and generalize across diverse tasks, SRDF is poised to drive significant progress in the development of intelligent navigation systems.

Verify he Paper and GitHub page. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on <a target="_blank" href="https://twitter.com/Marktechpost”>twitter and join our Telegram channel and LinkedIn Grabove. Don't forget to join our SubReddit over 60,000 ml.

Trending: LG ai Research launches EXAONE 3.5 – three frontier-level bilingual open-source ai models that deliver unmatched instruction following and broad context understanding for global leadership in generative ai excellence….

Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of technology Kharagpur. Nikhil is an ai/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>

NEWSLETTER

NEWSLETTER