Refining the capabilities of large language models (LLMs) is a fundamental challenge in artificial intelligence. These digital giants, repositories of vast knowledge, face a major hurdle: staying up to date and accurate. Traditional methods for updating LLMs, such as retraining or fine-tuning, are resource-intensive and carry the risk of catastrophic forgetting, where new learning erases valuable information acquired earlier.

The crux of LLM improvement is a dual need: to integrate new knowledge efficiently and to correct or discard obsolete or incorrect knowledge. Current approaches to model editing, designed to address these needs, vary widely, from retraining on updated datasets to employing sophisticated editing techniques. However, these methods are often laborious or risk compromising the integrity of what the model has already learned.

A team from IBM AI Research and Princeton University has presented Larimar, an architecture that marks a paradigm shift in improving LLMs. Larimar, named after a rare blue mineral, equips LLMs with a distributed episodic memory, allowing them to undergo dynamic, one-shot knowledge updates without the need for extensive retraining. This approach is inspired by human cognitive processes, in particular the ability to learn, update knowledge, and selectively forget.

Larimar's architecture stands out for allowing the selective updating and forgetting of information, similar to how the human brain manages knowledge. This capability is crucial to keeping LLMs relevant and unbiased in a rapidly evolving information landscape. Through an external memory module that interacts with the LLM, Larimar facilitates rapid and accurate modifications to the model's knowledge base, showing a significant leap over existing methodologies in speed and accuracy.
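To make the idea of an external memory concrete, here is a minimal toy sketch in Python. It is not Larimar's actual implementation: the paper's memory is a learned, distributed module, whereas this example stores edits in simple slots and matches them with a bag-of-words similarity. The names `EpisodicMemory`, `embed`, `base_model`, and `answer`, the 0.8 similarity threshold, and the "Acme" facts are all hypothetical and exist only for illustration. The sketch shows the general pattern the article describes: a fact is written in one step, read back at query time, and selectively forgotten, all while the base model stays frozen.

```python
import math
from collections import Counter


def embed(text):
    """Toy encoder: bag-of-words counts (a stand-in for a learned encoder)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class EpisodicMemory:
    """Hypothetical external memory holding edited facts outside the model."""

    def __init__(self, threshold=0.8):
        self.slots = []  # list of (key_vector, answer) pairs
        self.threshold = threshold  # assumed cutoff for "same question"

    def forget(self, prompt):
        """Selective forgetting: drop every slot that matches the prompt."""
        key = embed(prompt)
        self.slots = [(k, a) for k, a in self.slots if cosine(k, key) < self.threshold]

    def write(self, prompt, answer):
        """One-shot update: replace any matching slot, no retraining involved."""
        self.forget(prompt)
        self.slots.append((embed(prompt), answer))

    def read(self, prompt):
        """Return the best-matching stored answer, or None if nothing matches."""
        key = embed(prompt)
        best = max(self.slots, key=lambda s: cosine(s[0], key), default=None)
        if best is not None and cosine(best[0], key) >= self.threshold:
            return best[1]
        return None


def base_model(prompt):
    """Stand-in for a frozen LLM whose knowledge may be stale."""
    stale = {"who is the ceo of acme?": "Alice"}
    return stale.get(prompt.lower(), "unknown")


def answer(prompt, memory):
    """Consult the memory first, then fall back to the frozen base model."""
    recalled = memory.read(prompt)
    return recalled if recalled is not None else base_model(prompt)


memory = EpisodicMemory()
question = "Who is the CEO of Acme?"
print(answer(question, memory))   # the base model's stale answer
memory.write(question, "Bob")     # one-shot edit
print(answer(question, memory))   # the edited answer, served from memory
memory.forget(question)           # selective forgetting
print(answer(question, memory))   # falls back to the base model again
```

Because every edit lives in the memory rather than in the model's weights, an update here is a single write instead of a training run, which is the intuition behind the speed advantage reported for this family of approaches.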

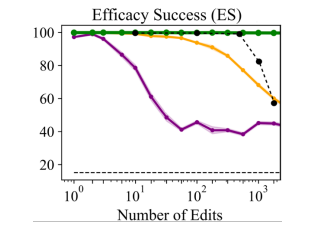

The experimental results underline Larimar's effectiveness and efficiency. On knowledge editing tasks, Larimar matched and sometimes surpassed the performance of current leading methods. It also demonstrated a notable advantage in speed, achieving updates up to 10 times faster. In addition, Larimar handled sequential edits and long input contexts well, showing flexibility and generalization across different scenarios.

Some key findings from the research include:

- Larimar introduces a brain-inspired architecture for LLMs.

- It enables dynamic, one-shot knowledge updates, avoiding extensive retraining.

- The approach reflects human cognitive abilities to selectively learn and forget.

- It achieves updates up to 10 times faster, demonstrating significant efficiency.

- It shows an exceptional ability to handle sequential edits and long input contexts.

In conclusion, Larimar represents a significant step in the ongoing effort to improve LLMs. By addressing the key challenges of updating and editing model knowledge, Larimar offers a robust solution that promises to change how LLMs are maintained and improved after deployment. Its ability to perform dynamic, one-shot updates and selective forgetting without extensive retraining marks a notable advance, potentially leading to LLMs that evolve at the same pace as human knowledge and maintain their relevance and accuracy over time.

See the paper for full details. All credit for this research goes to the researchers of this project.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
