Natural language processing has been transformed by the advent of large language models (LLMs) such as GPT. These models show exceptional language comprehension and generation capabilities, but they face significant obstacles. Because their knowledge is fixed at training time, they often produce outdated or inaccurate answers, especially in scenarios that demand domain-specific knowledge. Closing this gap requires new strategies that overcome these limitations and make LLMs practical and reliable across diverse, knowledge-intensive tasks.

The traditional way to address these challenges has been to fine-tune LLMs on domain-specific data. While this method can yield substantial improvements, it has drawbacks. It demands a large investment in resources and specialized expertise, which limits its ability to keep pace with a constantly evolving information landscape. It also cannot update the model's knowledge dynamically, which is essential for handling rapidly changing or highly specialized content. These limitations point to the need for a more flexible, dynamic way to augment LLMs.

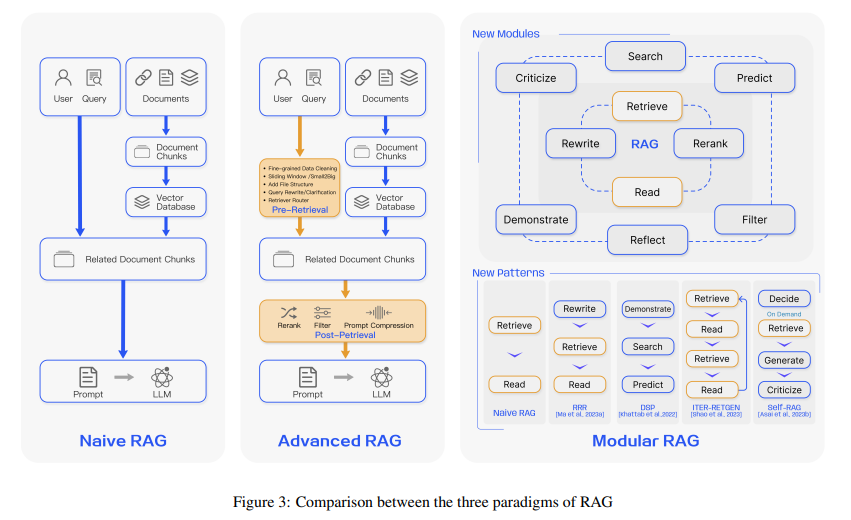

Researchers from Tongji University and Fudan University have published a survey on retrieval-augmented generation (RAG), a methodology for improving the capabilities of LLMs. The approach combines the model's parameterized knowledge with non-parameterized, dynamically accessible external data sources. In response to a query, RAG first identifies and retrieves relevant information from external databases. The retrieved data then serves as the basis on which the LLM generates its response. This process enriches the model's responses with current, domain-specific information and significantly reduces hallucinations, a common problem in LLM output.

Looking more closely at the RAG methodology, the process begins with a retrieval system that searches large external databases for information pertinent to the query. This system is tuned to maximize the relevance and accuracy of the information it returns. Once relevant data is identified, it is integrated into the LLM's response generation process. Equipped with this newly retrieved information, the LLM is better positioned to produce answers that are both accurate and up to date, addressing the inherent limitations of purely parameterized models.
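To make the retrieve-then-generate loop concrete, here is a minimal Python sketch. It is not the survey's method or any library's API: it assumes a tiny in-memory corpus and plain bag-of-words cosine similarity in place of the dense embeddings and vector databases that production RAG systems typically use, and the names `Document`, `retrieve`, and `build_prompt` are hypothetical. It stops at building the augmented prompt; the actual LLM call is left out.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str  # identifier that can later be cited as a source
    text: str


def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-frequency vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    # Retrieval step: score every document against the query and keep the
    # top-k documents that share at least one term with it.
    q_vec = Counter(tokenize(query))
    scored = [(cosine(q_vec, Counter(tokenize(d.text))), d) for d in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query: str, docs: list[Document]) -> str:
    # Augmentation step: number the retrieved passages and place them ahead
    # of the question so the LLM can ground (and cite) its answer.
    context = "\n".join(
        f"[{i}] ({d.doc_id}) {d.text}" for i, d in enumerate(docs, start=1)
    )
    return (
        "Answer using only the numbered context below and cite passages as [n].\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


corpus = [
    Document("kb-1", "RAG retrieves passages from an external knowledge base before generation."),
    Document("kb-2", "Fine-tuning updates model weights with domain-specific data."),
    Document("kb-3", "The cafeteria opens at eight in the morning."),
]
query = "How does RAG use an external knowledge base?"
print([d.doc_id for d in retrieve(query, corpus)])  # → ['kb-1']

# Updating knowledge means editing the corpus; no model weights change.
corpus.append(Document("kb-4", "An external knowledge base can be updated without retraining the model."))
top = retrieve(query, corpus)
print([d.doc_id for d in top])  # → ['kb-1', 'kb-4']
print(build_prompt(query, top))  # this prompt would then be sent to an LLM
```

Adding `kb-4` changes what is retrieved without touching the model, which is the dynamic-update property that fine-tuning lacks.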

RAG-augmented LLMs have shown notable results. The models exhibit a significant reduction in hallucinations, which directly improves the reliability of their responses. Users receive answers that draw not only on the model's extensive training data but also on up-to-date information from external sources. Because the sources of the retrieved information can be cited, RAG also adds a layer of transparency and trustworthiness to the model's output. Finally, RAG's ability to incorporate domain-specific knowledge dynamically makes these models versatile and adaptable to a wide range of applications.
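The citation property can also be sketched in a few lines. Assuming the prompt numbered the retrieved passages and asked the model to cite them as `[n]` (a common prompting convention, not something the survey prescribes), the Python below maps the markers in a generated answer back to source IDs and flags markers that point at no retrieved passage. The function names `cited_sources` and `invalid_citations` and the sample answer are hypothetical.

```python
import re


def cited_sources(answer: str, source_ids: list[str]) -> list[str]:
    # Find every [n] marker, keep the first occurrence of each valid index,
    # and map the 1-based index back to the retrieved passage's ID.
    order: list[int] = []
    for match in re.finditer(r"\[(\d+)\]", answer):
        n = int(match.group(1))
        if 1 <= n <= len(source_ids) and n not in order:
            order.append(n)
    return [source_ids[n - 1] for n in order]


def invalid_citations(answer: str, source_ids: list[str]) -> list[int]:
    # Indices outside the retrieved set cannot be traced to any source.
    found = {int(m) for m in re.findall(r"\[(\d+)\]", answer)}
    return sorted(n for n in found if not 1 <= n <= len(source_ids))


sources = ["kb-1", "kb-4"]
answer = (
    "RAG pulls passages from an external store [1] that can be refreshed "
    "without retraining [2]. It then grounds its answer in them [1][3]."
)
print(cited_sources(answer, sources))      # → ['kb-1', 'kb-4']
print(invalid_citations(answer, sources))  # → [3]
```

Flagging out-of-range markers is one cheap way to surface citations the model may have invented rather than drawn from the retrieved context.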

Key takeaways:

- RAG represents an innovative approach in natural language processing, addressing critical challenges facing LLMs.

- By uniting parameterized knowledge with external non-parameterized data, RAG significantly improves the accuracy and relevance of LLM responses.

- The dynamic nature of the method allows for the incorporation of up-to-date and domain-specific information, making it highly adaptable.

- RAG performance is characterized by a notable reduction in hallucinations and greater response reliability, which reinforces user confidence.

- The transparency that RAG offers, through source citations, further establishes its usefulness and credibility in practical applications.

This exploration of RAG's role in advancing LLMs underscores its importance and its potential to shape the future of natural language processing, opening new avenues for research and development in this dynamic, fast-evolving field.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
