Automated radiology report generation has become an important area of biomedical natural language processing. Interest is driven by the large and rapidly growing volume of medical imaging data and by modern healthcare's reliance on highly accurate diagnostic interpretation. By combining image analysis with natural language processing, advances in artificial intelligence could improve the efficiency, consistency, and diagnostic accuracy of radiology workflows.

A major challenge in this field lies in generating complete and accurate reports that reflect the complexity of medical imaging. Radiology reports require precise descriptions of imaging findings and their clinical implications. Keeping reporting quality consistent while capturing subtle details in medical images is particularly difficult. The limited availability of radiologists and the increasing demand for image interpretation compound the problem, highlighting the need for effective automation.

The traditional approach to automating radiology reporting relies on convolutional neural networks (CNNs) or vision transformers to extract features from images. These image encoders are typically paired with transformers or recurrent neural networks (RNNs) that generate the text. Such approaches have shown promise, but they often fail to maintain factual accuracy and clinical relevance. Integrating image and text data remains a technical hurdle, leaving room for improvements in model design and data utilization.

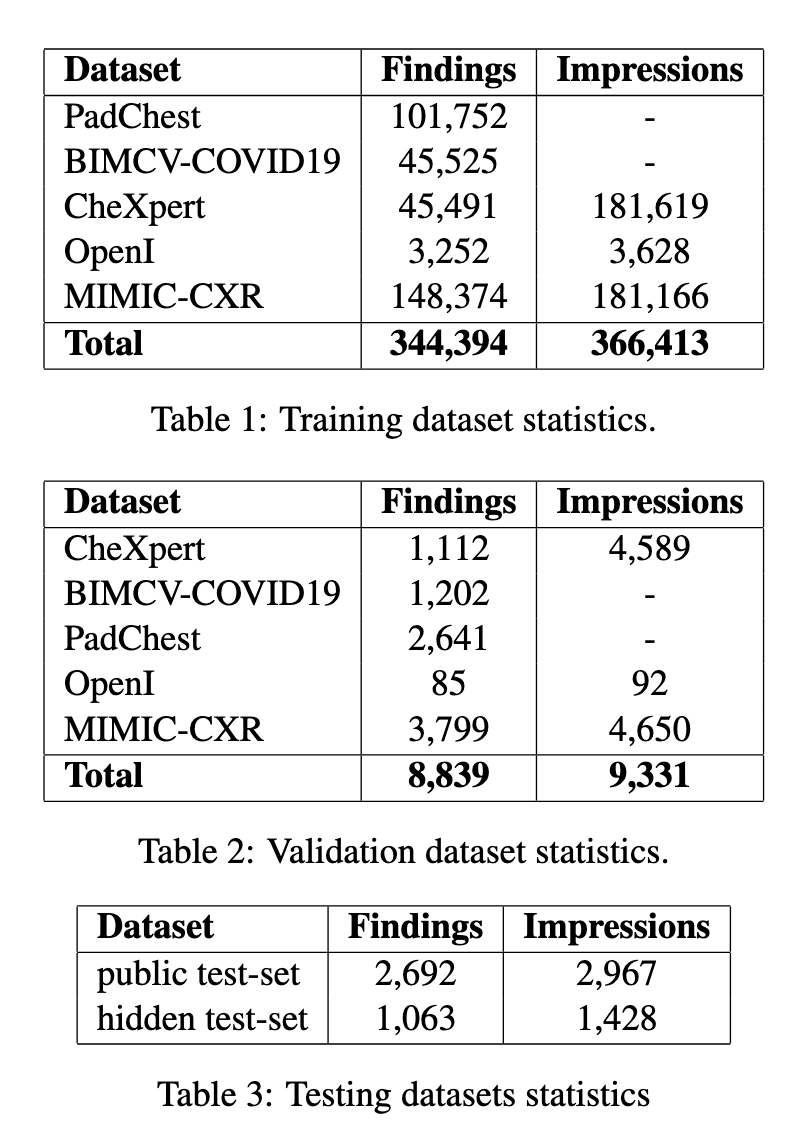

Researchers from AIRI and Skoltech have introduced a system designed to address these challenges. It combines a DINOv2 vision encoder trained specifically on medical data with OpenBio-LLM-8B, a large open biomedical language model. The two components are connected through the LLaVA framework, which handles the interaction between vision and language. The authors trained and tested the model on the PadChest, BIMCV-COVID19, CheXpert, OpenI, and MIMIC-CXR datasets so that it covers a variety of clinical settings.
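
As a rough illustration of how a LLaVA-style framework can connect a vision encoder to a language model, the sketch below projects image patch features into the text embedding space and prepends them to the prompt embeddings. It is a toy example under stated assumptions, not the authors' implementation: the single linear projection, the dimensions, and the names `make_projector` and `build_decoder_input` are all hypothetical, and the article does not say which projector design the system uses.

```python
import random


def linear(x, weight, bias):
    """Apply y = W x + b to one feature vector (weight is out_dim x in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def make_projector(in_dim, out_dim, seed=0):
    """Create a randomly initialised linear projector (hypothetical helper)."""
    rng = random.Random(seed)
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def build_decoder_input(patch_features, prompt_embeddings, projector):
    """Map each image patch into the text embedding space, then prepend
    the resulting visual tokens to the prompt token embeddings."""
    weight, bias = projector
    visual_tokens = [linear(patch, weight, bias) for patch in patch_features]
    return visual_tokens + prompt_embeddings


# Toy sizes chosen for readability; real encoders and LLMs use far larger ones.
VISION_DIM, TEXT_DIM = 4, 6
patches = [[0.1 * (i + j) for j in range(VISION_DIM)] for i in range(3)]  # 3 image patches
prompt = [[0.0] * TEXT_DIM for _ in range(2)]                              # 2 prompt tokens

projector = make_projector(VISION_DIM, TEXT_DIM)
sequence = build_decoder_input(patches, prompt, projector)
print(len(sequence), len(sequence[0]))  # 5 tokens, each of width TEXT_DIM
```

The point of the sketch is only that the language model receives one sequence in which image-derived tokens and text tokens share the same embedding width.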

The proposed system integrates advanced methods for both image encoding and language generation. The DINOv2 vision encoder processes chest X-ray images and extracts fine-grained features from radiological studies. These features are then passed to OpenBio-LLM-8B, a text decoder optimized for the biomedical domain. Training ran for two days on hardware that included four NVIDIA A100 GPUs. The team used Low-Rank Adaptation (LoRA) fine-tuning to improve learning without overfitting. Preprocessing kept only high-quality images, and evaluation used the first two images of each study.
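
To make the LoRA idea concrete, here is a minimal pure-Python sketch of the low-rank update: the pretrained weight `W` stays frozen and only two small matrices `A` and `B` are trained, giving an effective weight `W + (alpha / r) * B @ A`. The matrix sizes, rank, and `alpha` below are assumptions chosen for illustration; the article does not report the values used in the actual system.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where r is the rank (number of rows of A)."""
    rank = len(a)
    scale = alpha / rank
    delta = matmul(b, a)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def count_params(matrix):
    return sum(len(row) for row in matrix)


# Illustrative sizes only (assumed, not taken from the article).
D_OUT, D_IN, RANK, ALPHA = 8, 8, 2, 4.0
rng = random.Random(0)

w = [[rng.gauss(0, 0.02) for _ in range(D_IN)] for _ in range(D_OUT)]  # frozen pretrained weight
a = [[rng.gauss(0, 0.02) for _ in range(D_IN)] for _ in range(RANK)]   # trainable, random init
b = [[0.0] * RANK for _ in range(D_OUT)]                               # trainable, zero init

w_eff = lora_effective_weight(w, a, b, ALPHA)
trainable = count_params(a) + count_params(b)
print(trainable, count_params(w))  # trainable adapter parameters vs. frozen parameters
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the pretrained one, and the number of trainable parameters grows with the rank rather than with the full weight matrix.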

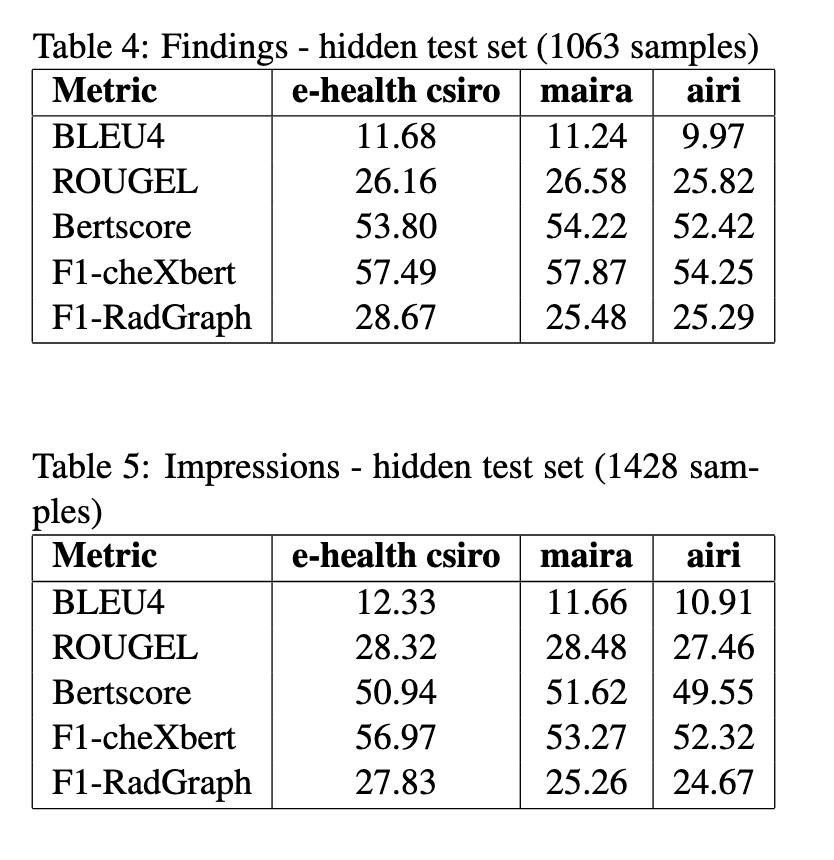

The system performed well across the chosen evaluation metrics for radiology report generation. On the hidden test sets, the model achieved a BLEU-4 score of 11.68 for findings and 12.33 for impressions, a measure of n-gram overlap with the reference reports. It also achieved an F1-CheXbert score of 57.49 for findings and 56.97 for impressions, indicating how well it captures key clinical observations. The BERTScore for findings was 53.80, reflecting semantic similarity between the generated and reference texts. The system also scored 26.16 on ROUGE-L and 28.67 on F1-RadGraph.
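
For readers unfamiliar with these metrics, the sketch below shows how two of them can be computed in simplified form: ROUGE-L as an F1 score over the longest common subsequence of tokens, and a label-level F1 over sets of clinical observations. It assumes whitespace tokenisation and that the observation labels have already been extracted (in practice a labeler such as CheXbert does this). The micro-averaging choice, the function names, and the example sentences are assumptions rather than the evaluation code used in the study. The values returned are in the range 0 to 1, whereas the article reports scores on a 0 to 100 scale.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L as the F1 of LCS-based precision and recall."""
    ref, cand = reference.lower().split(), candidate.lower().split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def micro_f1(reference_labels, predicted_labels):
    """Micro-averaged F1 over per-report sets of observation labels."""
    tp = fp = fn = 0
    for ref, pred in zip(reference_labels, predicted_labels):
        tp += len(ref & pred)   # labels found in both
        fp += len(pred - ref)   # predicted but not in the reference
        fn += len(ref - pred)   # in the reference but missed
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Made-up example reports and labels, for illustration only.
rouge = rouge_l_f1("the heart size is normal", "heart size is normal")
label_f1 = micro_f1(
    [{"Cardiomegaly", "Edema"}, {"No Finding"}],
    [{"Cardiomegaly"}, {"No Finding"}],
)
print(round(rouge, 3), round(label_f1, 3))
```

Overlap metrics such as ROUGE-L reward similar wording, while label-based scores reward getting the clinical observations right, which is why the two kinds of metric are reported side by side.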

The researchers addressed long-standing challenges in radiology automation by leveraging a carefully curated data set and specialized computational techniques. Their approach balanced computational efficiency with clinical accuracy, demonstrating the practical feasibility of such systems in real-world settings. The integration of domain-specific encoders and decoders was instrumental in achieving high-quality results, setting a new benchmark for automated radiology reporting.

This research marks an important milestone in biomedical natural language processing. By addressing the complexities of medical imaging, the AIRI and Skoltech team has demonstrated how AI can change radiology workflows. Their findings highlight the need to combine domain-specific models with robust datasets to achieve significant advances in the automation of diagnostic reporting.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
