Transformer models are central to machine learning for language and vision tasks. Because they process input data in parallel rather than one token at a time, they are highly efficient on large datasets. Still, traditional Transformer architectures struggle to manage long-term dependencies within sequences, a critical aspect of understanding context in language and images.

The central challenge addressed in the study is the efficient and effective modeling of long-term dependencies in sequential data. While adept at handling shorter sequences, traditional Transformer models struggle to capture extensive contextual relationships, primarily due to computational and memory limitations. This limitation becomes pronounced in tasks that require understanding long-range dependencies, such as complex sentence structures in language modeling or detailed image recognition in vision tasks, where context can span a wide range of input data.
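To make the memory limitation concrete, here is a rough back-of-the-envelope sketch. It assumes standard dense softmax attention, where each head stores a score for every pair of tokens; the function name, the head count of 8, and the 4-byte (float32) values are illustrative assumptions, not figures from the paper.

```python
def attention_score_bytes(seq_len: int, num_heads: int = 8, bytes_per_value: int = 4) -> int:
    """Bytes needed to hold one layer's attention scores for a single sequence.

    Assumes dense attention: each head keeps a seq_len x seq_len score matrix.
    """
    return num_heads * seq_len * seq_len * bytes_per_value


for n in (512, 2048, 8192):
    mib = attention_score_bytes(n) / 2**20
    print(f"seq_len={n:>5}: {mib:,.0f} MiB of attention scores per layer")
```

Under these assumptions, making the sequence four times longer multiplies the score memory by sixteen, which is why simply widening the attention window is costly.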

Current methods to mitigate these limitations include various memory-based approaches and specialized attention mechanisms. However, these solutions often increase computational complexity or fail to capture sparse and long-range dependencies adequately. Techniques such as memory caching and selective attention have been employed, but they either add to the complexity of the model or fail to expand its receptive field sufficiently. The existing landscape of solutions underscores the need for a more efficient method to improve Transformers' ability to process long sequences without prohibitive computational costs.

Researchers from The Chinese University of Hong Kong, The University of Hong Kong, and Tencent Inc. propose an approach called Cached Transformers, which augments the Transformer with a Gated Recurrent Cache (GRC). This component is designed to improve Transformers' ability to handle long-term relationships in data. GRC is a dynamic memory system that efficiently stores and updates token embeddings based on their relevance and historical importance. This system allows the Transformer to process the current input while also drawing on a rich, contextually relevant history, thereby significantly expanding its understanding of long-range dependencies.

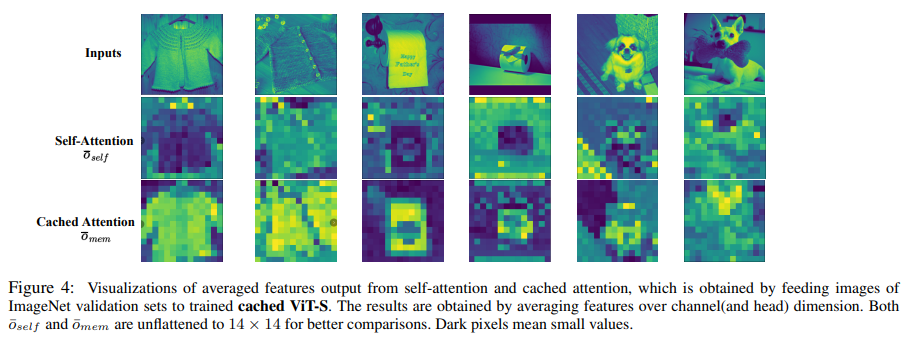

GRC is the key innovation: it dynamically updates a cache of token embeddings so that the cache efficiently represents historical data. This adaptive caching mechanism allows the Transformer model to attend to a combination of current and accumulated information, significantly expanding its ability to process long-range dependencies. The GRC balances the need to store relevant historical data against computational efficiency, thus addressing the limitations of traditional Transformer models in handling long sequential data.
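The idea of a gated cache that the model attends to alongside the current input can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the average pooling in `summarize`, the linear gate `w_gate`, and the fixed mixing weight `mix` are all hypothetical choices made for this example, and learned query, key, and value projections are omitted.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(queries, keys, values):
    """Plain scaled dot-product attention (no learned projections)."""
    scores = queries @ keys.T / np.sqrt(queries.shape[-1])
    return softmax(scores) @ values


def summarize(tokens, cache_len):
    """Average-pool (n, d) tokens into cache_len slots. Assumes n >= cache_len."""
    chunks = np.array_split(tokens, cache_len, axis=0)
    return np.stack([chunk.mean(axis=0) for chunk in chunks])


def update_cache(cache, tokens, w_gate):
    """Gated update: each cache entry moves toward the new summary by a gate in (0, 1)."""
    summary = summarize(tokens, cache.shape[0])
    gate = sigmoid(summary @ w_gate)  # hypothetical gate parameterization
    return (1.0 - gate) * cache + gate * summary


def cached_attention(tokens, cache, mix):
    """Blend attention over the current tokens with attention over the cache."""
    self_out = attention(tokens, tokens, tokens)
    cache_out = attention(tokens, cache, cache)
    return mix * cache_out + (1.0 - mix) * self_out


rng = np.random.default_rng(0)
dim, num_tokens, cache_len = 16, 32, 8
cache = np.zeros((cache_len, dim))
w_gate = rng.normal(scale=0.1, size=(dim, dim))

for step in range(3):  # three successive input segments
    tokens = rng.normal(size=(num_tokens, dim))
    out = cached_attention(tokens, cache, mix=0.5)
    prev_cache, last_summary = cache, summarize(tokens, cache_len)
    cache = update_cache(cache, tokens, w_gate)

print(out.shape, cache.shape)
```

Because the cache has a fixed number of slots, attending to it adds a cost proportional to the cache length rather than to the full history, which is the trade-off between stored context and computation described above.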

The integration of Cached Transformers with GRC demonstrates notable improvements in language and vision tasks. For example, in language modeling, enhanced Transformer models equipped with GRC outperform traditional models, achieving lower perplexity and higher accuracy in complex tasks such as machine translation. This improvement is attributed to the GRC's efficient handling of long-range dependencies, providing a more complete context for each input sequence. These advancements indicate a significant step forward in the capabilities of Transformer models.

In conclusion, the research can be summarized in the following points:

- Cached Transformers with GRC effectively address the problem of modeling long-term dependencies in sequential data.

- The GRC mechanism significantly improves Transformers' ability to understand and process extended sequences, thereby improving performance in both language and vision tasks.

- This advancement represents a notable leap in machine learning, particularly in how Transformer models handle context and dependencies in long sequences of data, setting a new standard for future developments in the field.

Review the Paper. All credit for this research goes to the researchers of this project.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and I want to create new products that make a difference.
