Graph Convolutional Networks (GCNs) have become integral to the analysis of complex graph-structured data. These networks capture the relationships between nodes and their attributes, making them indispensable in domains such as social network analysis, biology, and chemistry. By leveraging graph structure, GCNs enable tasks such as node classification and link prediction, fostering advances in scientific and industrial applications.

Training GCNs on large-scale graphs presents significant challenges, particularly in maintaining efficiency and scalability. Irregular memory access patterns caused by graph sparsity, together with the extensive communication required for distributed training, make it difficult to achieve optimal performance. Furthermore, partitioning a graph into subgraphs for distributed computing creates unbalanced workloads and increases communication overhead, further complicating the training process. Addressing these challenges is crucial to enabling GCN training on massive datasets.
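To make the irregular-access problem concrete, here is a minimal sketch of neighbor aggregation over a graph stored in compressed sparse row (CSR) form. CSR is assumed purely for illustration, since the article does not say which storage format SuperGCN uses, and the names `row_ptr`, `col_idx`, and `aggregate_sum` are hypothetical.

```python
def aggregate_sum(row_ptr, col_idx, features):
    """Sum neighbor feature vectors for every node of a CSR-encoded graph.

    row_ptr[i]:row_ptr[i + 1] delimits the slice of col_idx holding node i's neighbors.
    """
    dim = len(features[0])
    out = []
    for node in range(len(row_ptr) - 1):
        acc = [0.0] * dim
        for k in range(row_ptr[node], row_ptr[node + 1]):
            neighbor = col_idx[k]      # data-dependent index: the irregular access
            row = features[neighbor]   # gather from a location known only at run time
            for d in range(dim):
                acc[d] += row[d]
        out.append(acc)
    return out


# Toy graph with 4 nodes; neighbor lists: 0 -> [1, 3], 1 -> [0], 2 -> [], 3 -> [0, 1, 2]
row_ptr = [0, 2, 3, 3, 6]
col_idx = [1, 3, 0, 0, 1, 2]
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [3.0, 1.0]]
aggregated = aggregate_sum(row_ptr, col_idx, features)
```

The inner loop length differs per node (2, 1, 0, and 3 neighbors in this toy graph). That kind of skew is what leads to unbalanced work across threads or partitions on real graphs.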

Existing methods for GCN training include mini-batch and full-batch approaches. Mini-batch training reduces memory usage by sampling smaller subgraphs, allowing computations to fit within limited resources. However, this method often sacrifices accuracy because it does not preserve the entire structure of the graph. Full-batch training preserves the graph structure but faces scalability issues due to increased memory and communication demands. Most current frameworks are optimized for GPU platforms, with limited focus on efficient solutions for CPU-based systems.
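The trade-off can be illustrated with a small sketch of uniform neighbor sampling, one common way to build mini-batches. The article does not say which sampling scheme the compared systems use, so the function `sample_neighbors`, the `fanout` parameter, and the toy graph below are all illustrative assumptions.

```python
import random


def sample_neighbors(adjacency, batch_nodes, fanout, rng):
    """Keep at most `fanout` randomly chosen neighbors for each node in the mini-batch."""
    sampled = {}
    for node in batch_nodes:
        neighbors = adjacency[node]
        if len(neighbors) <= fanout:
            sampled[node] = list(neighbors)
        else:
            sampled[node] = rng.sample(neighbors, fanout)
    return sampled


adjacency = {
    0: [1, 2, 3, 4, 5],
    1: [0, 2],
    2: [0, 1, 3],
    3: [0, 2],
    4: [0],
    5: [0],
}
rng = random.Random(0)  # fixed seed so the sketch is repeatable
batch_nodes = [0, 2]
batch = sample_neighbors(adjacency, batch_nodes, fanout=2, rng=rng)

full_edges = sum(len(adjacency[n]) for n in batch_nodes)  # edges a full-batch pass would use
kept_edges = sum(len(batch[n]) for n in batch_nodes)      # edges this mini-batch actually sees
```

With a fanout of 2, the two batch nodes see 4 of their 8 incident edges. The memory saving and the loss of structural information come from the same step.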

The research team, which includes collaborators from Tokyo Institute of Technology, RIKEN, the National Institute of Advanced Industrial Science and Technology, and Lawrence Livermore National Laboratory, has introduced a novel framework called SuperGCN. The system is designed for CPU-powered supercomputers and addresses scalability and efficiency challenges in GCN training. The framework bridges a gap in distributed graph learning by focusing on optimized graph-related operations and communication reduction techniques.

SuperGCN combines several techniques to improve performance. The framework employs optimized CPU-specific implementations of graph operators, ensuring efficient memory usage and balanced workloads across threads. The researchers proposed a hybrid aggregation strategy that uses a minimum vertex cover algorithm to categorize edges into pre- and post-aggregation sets, reducing redundant communication. Additionally, the framework incorporates Int2 quantization to compress messages during communication, significantly reducing data transfer volumes without compromising accuracy. Label propagation is used in conjunction with quantization to mitigate the effects of reduced precision, ensuring convergence and maintaining high model accuracy.
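The hybrid aggregation idea can be sketched on the edges that cross from one partition to another. This is one reading of the strategy, not SuperGCN's actual implementation. It assumes that an edge whose source is in the vertex cover is handled by post-aggregation (the raw source feature is sent once and summed at the receiver), and that every other edge is handled by pre-aggregation (the sender sums locally and sends one partial result per destination). All function names are hypothetical, and the matching routine is a simple textbook algorithm chosen for brevity.

```python
def min_vertex_cover(edges):
    """Return (src_cover, dst_cover), a minimum vertex cover of a bipartite edge list.

    edges holds (src, dst) pairs for edges that cross from one partition to another.
    """
    adj = {}
    for src, dst in edges:
        adj.setdefault(src, []).append(dst)

    match_of_dst = {}  # dst -> src currently matched to it

    def try_augment(src, seen):
        # Kuhn's algorithm: look for an augmenting path starting at src.
        for dst in adj[src]:
            if dst in seen:
                continue
            seen.add(dst)
            if dst not in match_of_dst or try_augment(match_of_dst[dst], seen):
                match_of_dst[dst] = src
                return True
        return False

    for src in adj:
        try_augment(src, set())

    # Koenig's theorem: walk alternating paths from every unmatched source.
    matched_srcs = set(match_of_dst.values())
    reached_src = {s for s in adj if s not in matched_srcs}
    reached_dst = set()
    stack = list(reached_src)
    while stack:
        src = stack.pop()
        for dst in adj[src]:
            if dst in reached_dst:
                continue
            reached_dst.add(dst)
            partner = match_of_dst.get(dst)
            if partner is not None and partner not in reached_src:
                reached_src.add(partner)
                stack.append(partner)

    # Cover = unreached sources plus reached destinations.
    return set(adj) - reached_src, reached_dst


def split_edges(edges):
    """Assign each cross-partition edge to the post- or pre-aggregation set."""
    src_cover, dst_cover = min_vertex_cover(edges)
    post = [(s, d) for s, d in edges if s in src_cover]     # raw source feature sent once
    pre = [(s, d) for s, d in edges if s not in src_cover]  # one partial sum per destination
    return post, pre, src_cover, dst_cover


# Toy example: a, b, c all feed x, while d feeds y, z, and w.
edges = [("a", "x"), ("b", "x"), ("c", "x"), ("d", "y"), ("d", "z"), ("d", "w")]
post, pre, src_cover, dst_cover = split_edges(edges)
messages_hybrid = len(src_cover) + len(dst_cover)
messages_pre_only = len({d for _, d in edges})   # one partial sum per distinct destination
messages_post_only = len({s for s, _ in edges})  # one raw feature per distinct source
```

In this toy example, pure pre-aggregation or pure post-aggregation would each send four messages. The cover `{d}` plus `{x}` needs only two: the feature of `d` is sent once, and a single partial sum is sent for `x`.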

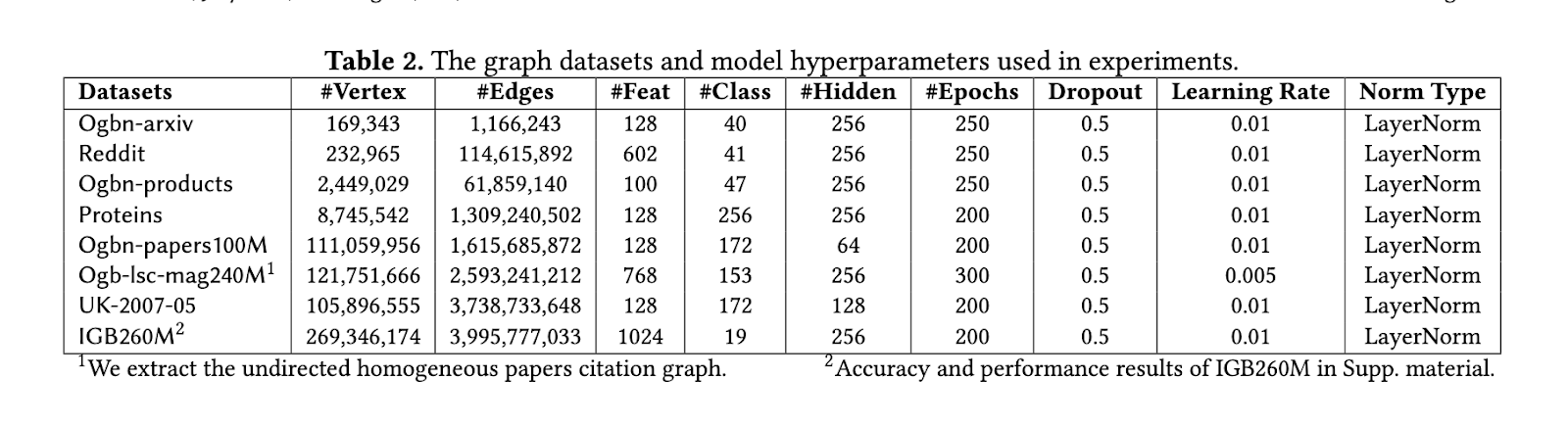

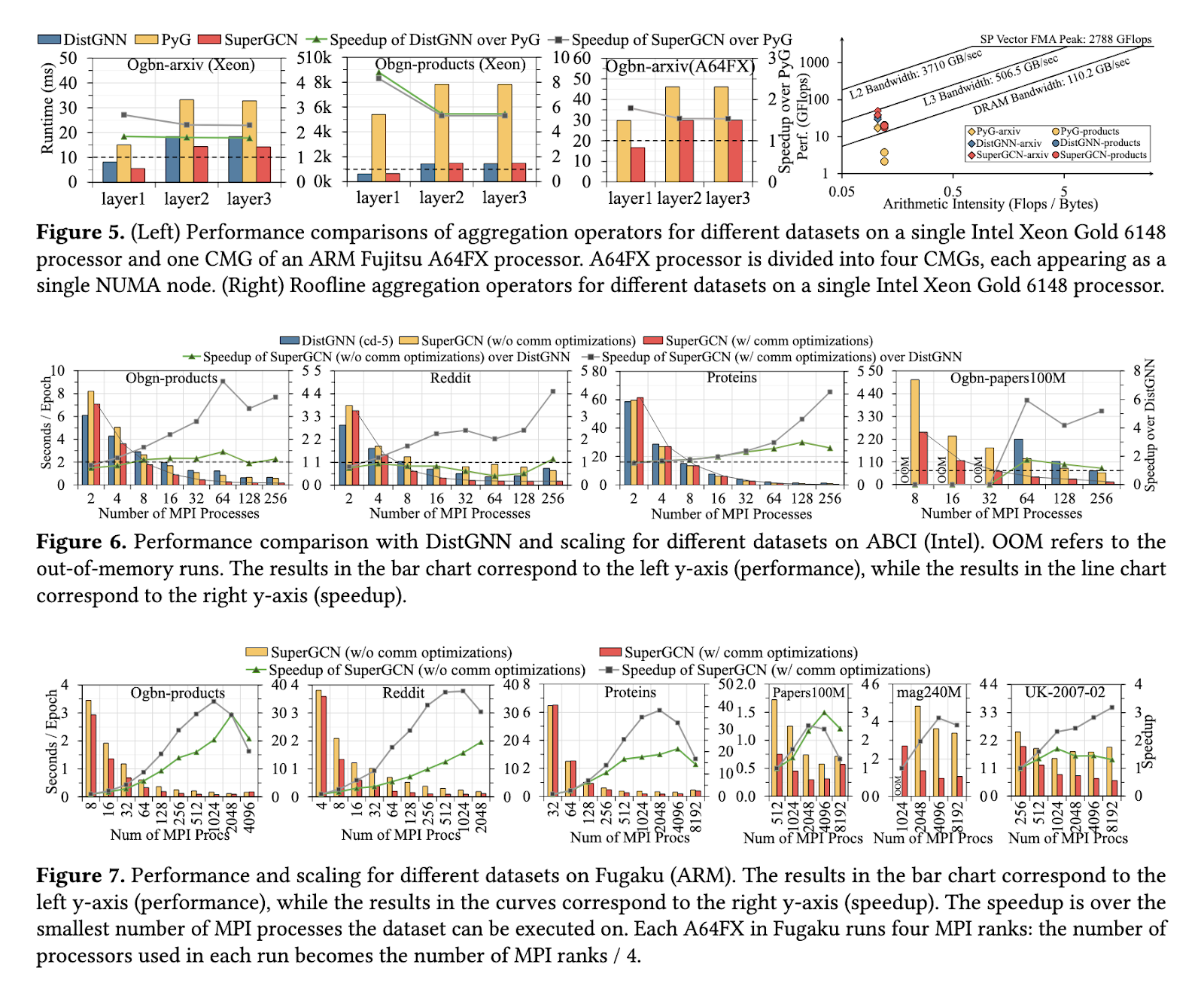

SuperGCN was evaluated on large-scale datasets including Ogbn-products, Reddit, and Ogbn-papers100M, demonstrating notable improvements over existing methods. The framework achieved up to a six-fold speedup compared to Intel's DistGNN on Xeon-based systems, and performance scaled linearly with the number of processors. On ARM-based supercomputers such as Fugaku, SuperGCN scaled to over 8,000 processors, showing scalability unmatched among CPU platforms. The framework achieved processing speeds comparable to GPU-driven systems while requiring significantly less power and cost. On Ogbn-papers100M, SuperGCN achieved an accuracy of 65.82% with label propagation enabled, outperforming other CPU-based methods.

By introducing SuperGCN, the researchers addressed critical bottlenecks in distributed GCN training. Their work demonstrates that efficient and scalable solutions can be achieved on CPU-driven platforms, providing a cost-effective alternative to GPU-based systems. This advancement marks an important step toward enabling large-scale graph processing while preserving computational and environmental sustainability.

Check out the paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
