As computational science continues to evolve, physics-informed neural networks (PINNs) stand out as an innovative approach to solving forward and inverse problems governed by partial differential equations (PDEs). These models embed physical laws directly into the learning process, promising significant gains in accuracy and predictive robustness.

Yet as PINNs grow deeper and more complex, their performance paradoxically degrades. This counterintuitive behavior stems from the multilayer perceptron (MLP) architectures and initialization schemes they typically rely on, which often lead to poor trainability and unstable results.

Current efforts to improve physics-informed machine learning include refining network architectures, improving training algorithms, and adopting specialized initialization techniques. Strategies such as building symmetries and invariances into models and designing custom loss functions have been important, but despite these efforts, the search for a fully satisfactory solution is still ongoing.

A team of researchers from the University of Pennsylvania, Duke University, and North Carolina State University has introduced Physics-Informed Residual Adaptive Networks (PirateNets), an architecture designed to unlock the full potential of deep PINNs. By introducing adaptive residual connections, PirateNets provide a dynamic framework in which the model starts as a shallow network and becomes progressively deeper during training. This approach addresses the initialization problems of deep PINNs and improves the network's ability to learn from, and generalize, the underlying physical laws.
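A minimal sketch of why an adaptive residual connection lets a deep stack start out shallow, assuming NumPy and a deliberately simplified block with a single tanh layer (an actual PirateNet block has more structure); the names `block` and `deep_stack` are hypothetical. When the mixing weight `alpha` is zero, a block passes its input through unchanged, so the whole stack collapses to the identity map:

```python
import numpy as np

def block(x, W, b, alpha):
    # Simplified nonlinear branch (hypothetical): one dense layer + tanh
    h = np.tanh(W @ x + b)
    # Adaptive residual connection: alpha mixes the nonlinear branch with the skip path
    return alpha * h + (1.0 - alpha) * x

def deep_stack(x, params, alphas):
    # Apply the blocks in sequence; each block has its own mixing weight alpha
    for (W, b), alpha in zip(params, alphas):
        x = block(x, W, b, alpha)
    return x

rng = np.random.default_rng(0)
d, depth = 8, 6
params = [(rng.normal(size=(d, d)) / np.sqrt(d), np.zeros(d)) for _ in range(depth)]
x = rng.normal(size=d)

# alpha = 0 in every block: the six-block stack is exactly the identity map
print(np.allclose(deep_stack(x, params, [0.0] * depth), x))  # True
# Nonzero alphas switch the blocks' nonlinear contributions on
print(np.allclose(deep_stack(x, params, [0.5] * depth), x))
```

In PirateNets the mixing weights are trainable, so in a sketch like this the effective depth would grow only as the optimizer moves each `alpha` away from zero.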

PirateNets use random Fourier features as an embedding function to mitigate spectral bias and efficiently approximate high-frequency solutions. Each residual block stacks dense layers with gating operations, and its forward pass combines pointwise activation functions with an adaptive residual connection. Central to the design is a trainable parameter in each skip connection that controls how much nonlinearity the block contributes. Because these parameters start at zero, every block initially acts as an identity map, and the network's output is simply a linear combination of the first-layer embeddings. In other words, an untrained PirateNet behaves like a linear combination of basis functions, which gives explicit control over its inductive bias. This setup makes it possible to compute an optimal initial guess for the network's final layer by a least-squares fit to available data, which can come from several sources (for example, initial conditions), thereby overcoming the deep-network initialization problems inherent to PINNs.
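The design described above can be sketched as an untrained NumPy forward pass. This is a minimal illustration under stated assumptions, not the authors' implementation: the block layout (three dense layers, gates `U` and `V` computed from the embedding, a skip weight `alpha` starting at zero) reflects our reading of the paper, while the layer sizes, the Fourier-feature scale `sigma`, the Glorot initialization, the sample data, and every function name are hypothetical. Because each `alpha` starts at zero, the output equals `embed(X) @ W_out`, so the final layer can be initialized by an ordinary linear least-squares fit:

```python
import numpy as np

rng = np.random.default_rng(0)

def glorot(n_in, n_out):
    # Glorot (Xavier) normal initialization for a dense weight matrix
    return rng.normal(0.0, np.sqrt(2.0 / (n_in + n_out)), size=(n_in, n_out))

def embed(X, B):
    # Random Fourier features: Phi(x) = [cos(xB), sin(xB)]
    proj = X @ B
    return np.concatenate([np.cos(proj), np.sin(proj)], axis=1)

def init_pirate(in_dim=2, n_freq=32, n_blocks=3, sigma=2.0):
    d = 2 * n_freq  # hidden width = embedding width, so skip connections line up

    def dense():
        return glorot(d, d), np.zeros(d)

    return {
        "B": rng.normal(0.0, sigma, size=(in_dim, n_freq)),  # fixed in this sketch
        "U": dense(),  # gating branches computed from the embedding
        "V": dense(),
        "blocks": [{"layers": [dense() for _ in range(3)], "alpha": 0.0}
                   for _ in range(n_blocks)],  # every alpha starts at zero
        "W_out": glorot(d, 1),
    }

def forward(p, X):
    phi = embed(X, p["B"])
    U = np.tanh(phi @ p["U"][0] + p["U"][1])
    V = np.tanh(phi @ p["V"][0] + p["V"][1])
    x = phi
    for blk in p["blocks"]:
        (W1, b1), (W2, b2), (W3, b3) = blk["layers"]
        f = np.tanh(x @ W1 + b1)
        z1 = f * U + (1.0 - f) * V  # gated mix of U and V
        g = np.tanh(z1 @ W2 + b2)
        z2 = g * U + (1.0 - g) * V
        h = np.tanh(z2 @ W3 + b3)
        x = blk["alpha"] * h + (1.0 - blk["alpha"]) * x  # adaptive skip connection
    return x @ p["W_out"]

def least_squares_init(p, X, Y):
    # With every alpha = 0 the output is embed(X) @ W_out, a linear model,
    # so the best initial W_out is an ordinary least-squares solution.
    p["W_out"] = np.linalg.lstsq(embed(X, p["B"]), Y, rcond=None)[0]
    return p

# Hypothetical data: samples of u(0, x) = x^2 cos(pi x) at t = 0
xs = np.linspace(-1.0, 1.0, 128)
X0 = np.stack([np.zeros_like(xs), xs], axis=1)  # columns: (t, x)
Y0 = (xs**2 * np.cos(np.pi * xs))[:, None]

params = least_squares_init(init_pirate(), X0, Y0)
pred = forward(params, X0)
print("MSE before any training:", np.mean((pred - Y0) ** 2))
```

In this sketch the untrained network already reproduces the data as well as a linear model in the Fourier basis can, so gradient-based training only has to learn the nonlinear corrections.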

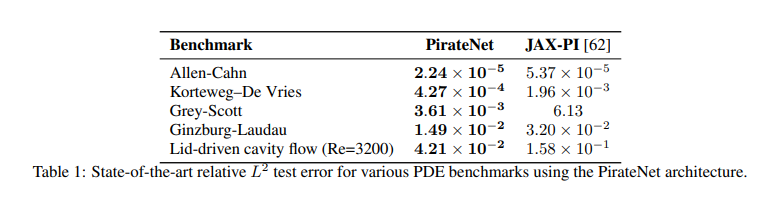

PirateNets' effectiveness is validated on a series of demanding benchmarks, where they outperform the Modified MLP. The experimental setup uses random Fourier features for coordinate embedding, the Modified MLP as a backbone, random weight factorization (RWF), and Tanh activations, and it enforces periodic boundary conditions exactly. Training uses mini-batch gradient descent with the Adam optimizer and a learning rate schedule consisting of a warm-up phase followed by exponential decay. Across the benchmarks, PirateNets achieve better accuracy and faster convergence, setting state-of-the-art results on the Allen-Cahn and Korteweg-de Vries equations. Ablation studies further confirm the architecture's scalability and robustness and the contribution of each of its components, underscoring PirateNets' ability to tackle complex nonlinear problems.
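The training setup described above can be illustrated with a small pure-Python sketch: Adam driven by a schedule that ramps the learning rate up and then decays it exponentially. The base rate, warm-up length, decay rate, and decay interval are hypothetical values, the linear shape of the warm-up ramp is our assumption, and a toy scalar objective stands in for a mini-batch PINN loss:

```python
import math

def lr_schedule(step, base_lr=1e-3, warmup_steps=5000,
                decay_rate=0.9, decay_steps=2000):
    """Warm-up followed by exponential decay (all values are hypothetical)."""
    if step < warmup_steps:
        # Linear ramp from 0 to base_lr; the ramp shape is an assumption
        return base_lr * step / warmup_steps
    # Smoothly multiply the rate by decay_rate every decay_steps steps
    return base_lr * decay_rate ** ((step - warmup_steps) / decay_steps)

def adam_minimize(grad, w, n_steps, b1=0.9, b2=0.999, eps=1e-8):
    """Plain Adam on a scalar parameter, with the step size from lr_schedule."""
    m = v = 0.0
    for step in range(n_steps):
        g = grad(w)
        m = b1 * m + (1 - b1) * g      # first-moment (mean) estimate
        v = b2 * v + (1 - b2) * g * g  # second-moment estimate
        t = step + 1
        m_hat = m / (1 - b1 ** t)      # bias corrections
        v_hat = v / (1 - b2 ** t)
        w -= lr_schedule(step) * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy objective (w - 3)^2, standing in for a PINN loss
w_final = adam_minimize(lambda w: 2.0 * (w - 3.0), w=0.0, n_steps=30000)
print(round(w_final, 3))
```

A common motivation for this shape is that warm-up keeps the first Adam updates small while the moment estimates are still unreliable, and the decay then shrinks the step size so the iterates can settle.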

In conclusion, PirateNets represent a notable advance in physics-informed deep learning. By integrating physical principles with deep learning, they pave the way for more accurate and robust predictive models. This research tackles inherent challenges in training deep PINNs and opens new avenues for scientific exploration, with the potential to change how complex problems governed by PDEs are solved.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.
