Machine learning for predictive modeling aims to accurately forecast outcomes based on input data. One of the main challenges in this field is “domain adaptation,” which addresses differences between training and application scenarios, especially when models face new and varied conditions after training. This challenge is important for tabular data sets in finance, healthcare, and social sciences, where the underlying conditions of the data often change. These changes can dramatically reduce the accuracy of predictions, as most models are initially trained under specific assumptions that do not generalize well when conditions change. Understanding and addressing these changes is essential to building adaptive and robust models for real-world applications.

A central problem in predictive modeling is a change in the relationship between features (X) and target outcomes (Y), commonly known as a Y|X shift. These shifts may be caused by missing information or by confounding variables that vary across settings or populations. Y|X shifts are particularly challenging in tabular data, where the absence or alteration of key variables can distort learned patterns and lead to incorrect predictions. Current models struggle in such situations because their reliance on fixed relationships between features and targets limits their adaptability to new data conditions. Developing methods that let models adapt using only a few labeled examples from the new context, without extensive retraining, is therefore crucial for practical deployment.
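The kind of change described here can be written compactly. The notation is introduced only for illustration and is not taken from the article: $P_S$ denotes the source (training) distribution and $P_T$ the target (deployment) distribution. Each joint distribution factorizes as

$$
P_S(X, Y) = P_S(X)\,P_S(Y \mid X), \qquad P_T(X, Y) = P_T(X)\,P_T(Y \mid X).
$$

The conditional relationship itself differs between domains when

$$
P_S(Y \mid X) \neq P_T(Y \mid X),
$$

in contrast to covariate shift, where $P_S(X) \neq P_T(X)$ but the conditional $P(Y \mid X)$ stays the same. A model that has only learned $P_S(Y \mid X)$ has no way to recover $P_T(Y \mid X)$ without some information from the target domain, which is why a handful of labeled target examples matters.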

Traditional methods such as gradient-boosted trees and neural networks have been widely used for modeling tabular data. While effective, these models often fall short when applied to data that differ significantly from the training scenarios. The recent application of large language models (LLMs) represents an emerging approach to this problem. LLMs can encode a large amount of contextual knowledge into features, which the researchers hypothesize could help models perform better when the training and target data distributions do not align. This adaptation strategy has potential, especially in cases where traditional models struggle with cross-domain variability.

Researchers at Columbia University and Tsinghua University have developed a technique that leverages LLM embeddings to address the adaptation challenge. Their method serializes each tabular record into text, which is then processed by an LLM encoder called e5-Mistral-7B-Instruct. The encoder converts the serialized text into embeddings, numerical representations that capture meaningful information about the data. The embeddings are then fed into a shallow neural network that is trained on the source domain and fine-tuned on a small sample of labeled target data. In this way, the model can learn patterns that generalize better to new data distributions, making it more resilient to changes in the data environment.
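To make the serialization step concrete, here is a minimal sketch in Python. The sentence template (`The <column> is <value>.`), the function name `serialize_row`, and the example columns are assumptions made for illustration; the article does not specify the exact template the researchers used. The encoding step is not shown because it requires loading the e5-Mistral-7B-Instruct weights.

```python
def serialize_row(row, task_description=""):
    """Turn one tabular record (a dict of column -> value) into a text string.

    The template is a hypothetical choice, not the researchers' exact format.
    """
    parts = [f"The {name} is {value}." for name, value in row.items()]
    text = " ".join(parts)
    # An optional task description can be prepended to give the encoder context.
    return f"{task_description} {text}".strip()


# Hypothetical record with made-up column names and values.
row = {"age": 42, "occupation": "nurse", "state": "CA"}
print(serialize_row(row))
```

The resulting string is what would be passed to the text encoder, one string per row, yielding one embedding vector per record.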

The shallow network operates on the embeddings produced by the e5-Mistral-7B-Instruct encoder. The technique also allows for the integration of additional domain-specific information, such as socioeconomic data, which the researchers concatenate with the embeddings of the serialized rows to enrich the data representation. This combined representation provides a richer set of features, allowing the model to better capture shifts between domains. By fine-tuning this neural network with only a limited number of labeled examples from the target domain, the model adapts more effectively than traditional approaches, even under significant Y|X shifts.
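The train-then-fine-tune recipe can be sketched with a toy example. Everything here is a simplifying assumption rather than the researchers' implementation: the "embeddings" are random two-dimensional vectors instead of LLM outputs, the domain information is a single indicator value, and a logistic regression trained with plain SGD stands in for the shallow neural network. The target domain reverses the relationship between the first feature and the label, so the source-trained model fails until it is fine-tuned on 32 labeled target rows.

```python
import math
import random


def concat_features(embedding, domain_info):
    """Append extra domain-level features to a row embedding."""
    return list(embedding) + list(domain_info)


def predict_proba(weights, bias, x):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    z = max(-30.0, min(30.0, z))  # clamp to keep exp() well-behaved
    return 1.0 / (1.0 + math.exp(-z))


def sgd_fit(weights, bias, X, y, lr, epochs):
    """Plain SGD on log-loss, continuing from the given parameters."""
    weights = list(weights)
    for _ in range(epochs):
        for x, label in zip(X, y):
            err = predict_proba(weights, bias, x) - label
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def accuracy(weights, bias, X, y):
    hits = sum((predict_proba(weights, bias, x) >= 0.5) == (label == 1)
               for x, label in zip(X, y))
    return hits / len(y)


def make_domain(rng, n, flipped):
    """Toy data: the label depends on the sign of the first embedding
    coordinate, and that dependence is reversed in the target domain."""
    X, y = [], []
    for _ in range(n):
        emb = [rng.uniform(-1, 1), rng.uniform(-1, 1)]  # stand-in embedding
        domain_info = [1.0 if flipped else 0.0]          # stand-in side info
        X.append(concat_features(emb, domain_info))
        positive = emb[0] > 0
        y.append(int(positive != flipped))
    return X, y


def run_demo(seed=0):
    rng = random.Random(seed)
    X_src, y_src = make_domain(rng, 200, flipped=False)
    X_few, y_few = make_domain(rng, 32, flipped=True)    # 32 labeled target rows
    X_test, y_test = make_domain(rng, 200, flipped=True)

    # 1) Train on the source domain only.
    w, b = sgd_fit([0.0, 0.0, 0.0], 0.0, X_src, y_src, lr=0.5, epochs=20)
    acc_before = accuracy(w, b, X_test, y_test)

    # 2) Fine-tune the same parameters on the small target sample.
    w, b = sgd_fit(w, b, X_few, y_few, lr=0.5, epochs=50)
    acc_after = accuracy(w, b, X_test, y_test)
    return acc_before, acc_after


acc_before, acc_after = run_demo()
print(f"target accuracy before fine-tuning: {acc_before:.2f}")
print(f"target accuracy after fine-tuning:  {acc_after:.2f}")
```

The key design point is that fine-tuning continues from the source-trained parameters rather than starting from scratch, so a small target sample only has to correct what changed between domains.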

The researchers tested their method on three real-world data sets:

- ACS Income

- ACS Mobility

- ACS Pub.Cov

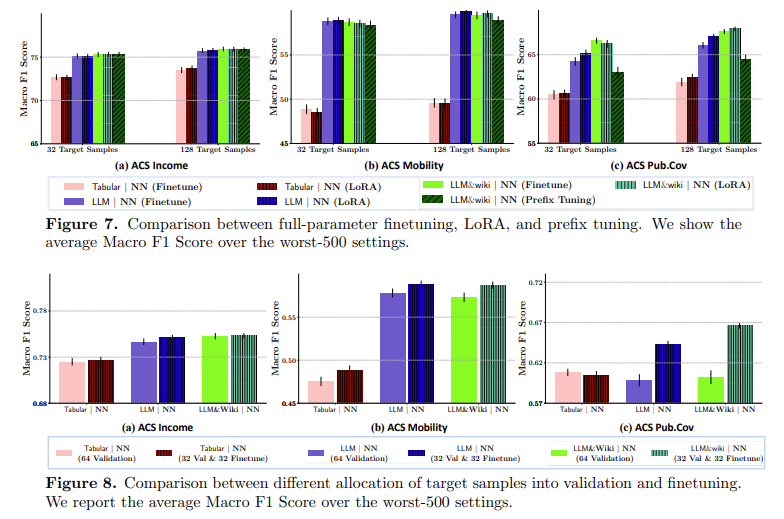

Their evaluations covered 7,650 unique source-target pairs across all data sets, using 261,000 model configurations and 22 different algorithms. The results showed that LLM embeddings alone improved performance in 85% of cases on the ACS Income data set and 78% on ACS Mobility. On ACS Pub.Cov, however, the FractionBest metric dropped to 45%, indicating that LLM embeddings did not consistently outperform tree ensemble methods across all data sets. When the model was fine-tuned with only 32 labeled target samples, performance increased significantly, reaching 86% on ACS Income and ACS Mobility and 56% on ACS Pub.Cov, which underscores the flexibility of the method under varied data conditions.
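For readers unfamiliar with this kind of metric, the sketch below shows one plausible way to compute it. It assumes that FractionBest is the share of source-target pairs on which a given method attains the top score among all compared methods; the article does not define the metric precisely, so the paper's definition may differ (for example, in how ties or tolerances are handled). The domain names, method names, and scores are made up purely for illustration and are not results from the study.

```python
def fraction_best(scores, method):
    """Share of source-target pairs where `method` has the highest score.

    scores: dict mapping (source, target) -> dict of method name -> score.
    Ties count as a win for every tied method (an assumption).
    """
    wins = sum(
        1
        for per_method in scores.values()
        if per_method[method] == max(per_method.values())
    )
    return wins / len(scores)


# Hypothetical toy scores, NOT taken from the study.
toy_scores = {
    ("A", "B"): {"llm_embedding": 0.81, "tree_ensemble": 0.78},
    ("A", "C"): {"llm_embedding": 0.75, "tree_ensemble": 0.77},
    ("B", "C"): {"llm_embedding": 0.80, "tree_ensemble": 0.79},
    ("C", "A"): {"llm_embedding": 0.82, "tree_ensemble": 0.80},
}

print(fraction_best(toy_scores, "llm_embedding"))   # 3 of 4 pairs
print(fraction_best(toy_scores, "tree_ensemble"))   # 1 of 4 pairs
```

Under this reading, a value of 45% would mean the method came out on top in fewer than half of the source-target pairs for that data set.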

The study's findings suggest promising applications for LLM embeddings in tabular data prediction. Key takeaways include:

- Adaptive modeling: LLM embeddings improve adaptability, allowing models to better handle Y|X shifts by incorporating domain-specific information into feature representations.

- Data efficiency: Fine-tuning with a minimal set of target samples (as few as 32 examples) improved performance, indicating resource efficiency.

- Wide applicability: The method adapted effectively to different distribution shifts across three data sets and 7,650 test cases.

- Limitations and future research: Although the LLM embeddings showed substantial improvements, they did not consistently outperform tree ensemble methods, particularly on the ACS Pub.Cov data set. This highlights the need for further research on fine-tuning methods and additional domain information.

In conclusion, this research demonstrates that using LLM embeddings for tabular data prediction is an important step forward in adapting models to distribution shifts. By transforming tabular data into information-rich embeddings and fine-tuning models with limited target data, the approach addresses limitations of traditional methods and allows models to operate effectively in diverse data environments. This strategy opens new avenues for using LLM embeddings to build more robust predictive models that adapt to real-world applications with minimal labeled data.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
