Artificial General Intelligence (AGI) seeks to create systems that can perform a wide range of tasks, reasoning and learning with human-like adaptability. Unlike narrow AI, AGI aims to generalize its capabilities across multiple domains, allowing machines to operate in dynamic and unpredictable environments. Achieving this requires combining sensory perception, abstract reasoning, and decision making with a robust memory and interaction framework that effectively mirrors human cognition.

A major challenge in AGI development is bridging the gap between abstract representation and real-world understanding. Current AI systems struggle to connect abstract symbols or concepts with tangible experiences, a process known as symbol grounding. Furthermore, these systems lack a sense of causality, which is essential for predicting the consequences of actions. Compounding these challenges is the absence of effective memory mechanisms, which prevents these systems from retaining and using knowledge for adaptive decision making over time.

Existing approaches rely heavily on large language models (LLMs) trained on large datasets to identify patterns and correlations. These systems excel at natural language understanding and reasoning, but they cannot learn through direct interaction with the environment. Retrieval-augmented generation (RAG) allows models to access external databases to acquire more information. Still, these tools are insufficient to address core challenges such as causality learning, symbol grounding, or memory integration, which are vital to AGI.

Researchers from Cape Coast Technical University, Cape Coast, Ghana, and the University of Mines and Technology (UMaT), Tarkwa, explored the fundamental principles for advancing AGI. They emphasized the need for embodiment, symbol grounding, causality, and memory to achieve general intelligence. The ability of systems to interact with their environment through sensors and actuators enables the collection of real-world data, which can give symbols meaning in the context in which they are applied. Symbol grounding thus serves to bridge the gap between the abstract and the tangible. Causality allows a system to understand what happens as a result of an action it performs, while memory systems retain knowledge in structured form for long-term reasoning.
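To make the idea of learning causality through action concrete, here is a minimal sketch, not taken from the paper. It assumes a hypothetical toy environment, `ToyLampWorld`, in which pressing a switch lights a lamp with a made-up probability. The agent chooses its own actions (interventions) and compares outcomes, which is one simple way to estimate the effect of an action.

```python
import random


class ToyLampWorld:
    """Hypothetical environment: the probabilities below are illustrative assumptions."""

    def __init__(self, seed: int = 0) -> None:
        self._rng = random.Random(seed)

    def step(self, press_switch: bool) -> bool:
        # The lamp turns on with probability 0.9 if pressed, 0.1 otherwise.
        p_on = 0.9 if press_switch else 0.1
        return self._rng.random() < p_on


def estimate_effect(world: ToyLampWorld, trials: int = 1000) -> float:
    """Estimate P(lamp on | press) - P(lamp on | no press) by acting in the world."""
    on_counts = {True: 0, False: 0}
    for _ in range(trials):
        for action in (True, False):
            # The agent sets the action itself, so the comparison is interventional.
            if world.step(action):
                on_counts[action] += 1
    return on_counts[True] / trials - on_counts[False] / trials


effect = estimate_effect(ToyLampWorld(seed=0))
print(f"Estimated effect of pressing the switch: {effect:.2f}")
```

Because the agent controls the action rather than passively observing it, the difference in outcome rates reflects the action's effect in this toy world. Real embodied systems face far noisier and higher-dimensional versions of the same problem.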

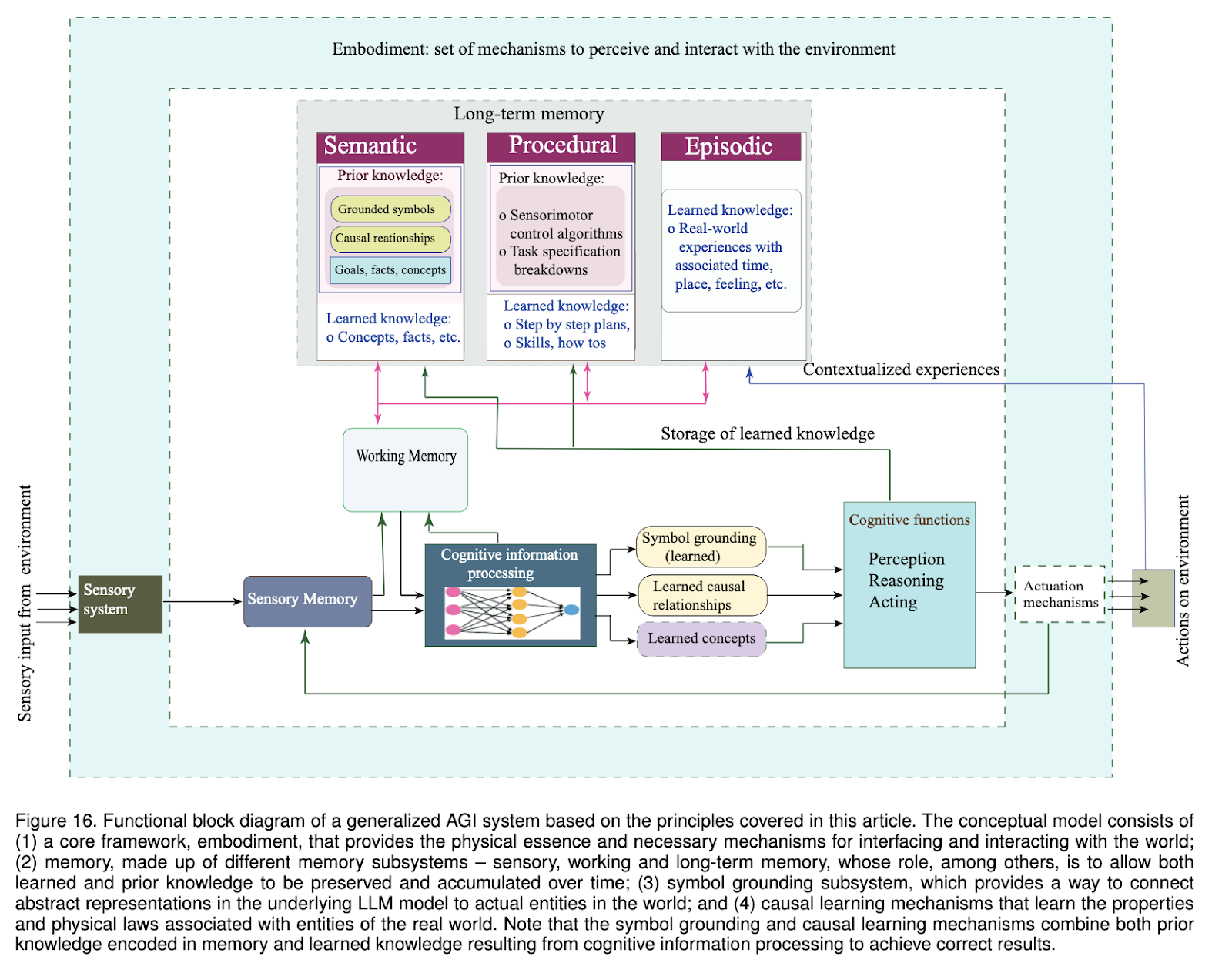

The researchers delved into the subtleties of these principles. Embodiment enables the collection of sensorimotor data and therefore allows systems to actively perceive their environment. Symbol grounding links abstract concepts with physical experiences, making them actionable in real-world contexts. Learning causality through direct interaction allows systems to predict outcomes and adjust their behavior. Memory is divided into sensory, working, and long-term types, each of which plays a fundamental role in the cognitive process. Long-term memory comes in semantic, episodic, and procedural forms, allowing systems to store facts, contextual knowledge, and procedural instructions for later retrieval.
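The memory taxonomy above can be sketched as a small data structure. This is an illustrative layout only, not the architecture proposed in the paper. The class names (`AgentMemory`, `LongTermMemory`), the method names, and the buffer sizes are all assumptions chosen for the example.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class LongTermMemory:
    # Semantic: general facts. Episodic: experiences with context.
    # Procedural: named skills stored as callables.
    semantic: dict[str, str] = field(default_factory=dict)
    episodic: list[dict[str, Any]] = field(default_factory=list)
    procedural: dict[str, Callable[..., Any]] = field(default_factory=dict)


class AgentMemory:
    """Hypothetical three-tier memory: sensory, working, and long-term."""

    def __init__(self, sensory_size: int = 5, working_size: int = 3) -> None:
        # Bounded buffers: old entries are dropped as new ones arrive.
        self.sensory: deque[str] = deque(maxlen=sensory_size)
        self.working: deque[str] = deque(maxlen=working_size)
        self.long_term = LongTermMemory()

    def perceive(self, observation: str) -> None:
        self.sensory.append(observation)

    def attend(self) -> None:
        """Copy the most recent observation into working memory."""
        if self.sensory:
            self.working.append(self.sensory[-1])

    def consolidate(self, context: str) -> None:
        """Store the current working-memory contents as one episode."""
        self.long_term.episodic.append(
            {"context": context, "items": list(self.working)}
        )


memory = AgentMemory()
for i in range(7):
    memory.perceive(f"obs{i}")
    memory.attend()
memory.consolidate(context="kitchen walk-through")
memory.long_term.semantic["cup"] = "a container that holds liquid"
memory.long_term.procedural["greet"] = lambda name: f"Hello, {name}"
```

The bounded deques mimic the short-lived, limited-capacity nature of sensory and working memory, while the long-term store keeps the three forms separate so each can be queried in its own way.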

These capabilities suggest considerable promise for AGI. For example, memory mechanisms supported by structured storage such as knowledge graphs and vector databases improve retrieval efficiency and scalability, so systems can quickly access relevant knowledge and apply it correctly. Embodied agents are more interactive and efficient because sensorimotor experience enhances their perception of the environment. Causality learning lets these systems predict the outcomes of their actions, and symbol grounding ensures that abstract concepts remain contextual and actionable. Together, these components help to overcome the problems identified in traditional AI systems.
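As a rough illustration of how vector-based retrieval works, the sketch below ranks stored items by cosine similarity to a query vector. It is a toy stand-in for a vector database, not a real one. `ToyVectorStore` is a hypothetical class, and the three-dimensional vectors are hand-written assumptions in place of embeddings from a trained model.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store using brute-force similarity search."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, text: str, vector: list[float]) -> None:
        self._items.append((text, vector))

    def search(self, query_vector: list[float], k: int = 1) -> list[str]:
        # Rank every stored item by similarity to the query, best first.
        ranked = sorted(
            self._items,
            key=lambda item: cosine(query_vector, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore()
# Hand-made vectors (assumed for illustration, not real embeddings).
store.add("cups hold liquid", [0.9, 0.1, 0.0])
store.add("fire is hot", [0.0, 0.2, 0.9])
store.add("ice is cold", [0.1, 0.1, 0.8])

print(store.search([0.0, 0.3, 1.0], k=2))
```

Production vector databases replace the brute-force sort with approximate nearest-neighbor indexes, which is where the scalability benefit mentioned above comes from.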

This research emphasized the synergistic nature of embodiment, grounding, causality, and memory, such that progress in one is expected to benefit the others. Rather than treating these components independently, the work framed them as interrelated elements, giving a clearer picture of how more robust and scalable AGI systems could be built: systems that reason, adapt, and learn in a more human-like way.

The findings of this research indicate that although much has been achieved, the development of AGI remains a challenge. The researchers noted that these fundamental principles should be integrated into a coherent architecture to fill gaps in current AI models. Their work serves as a guide to the future of AGI, envisioning a world where machines can have human-like intelligence and versatility. Although practical implementation is still in its early stages, the concepts described provide a solid foundation for advancing artificial intelligence to new frontiers.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
