Transformers have replaced recurrent neural networks (RNNs) as the preferred architecture for natural language processing (NLP). Conceptually, transformers stand out because they can directly access every token in a sequence, whereas RNNs rely on a recurrent state that summarizes past inputs. Decoder-only models have become a prominent transformer variant. They typically generate output autoregressively: each new token is produced by attending over the keys and values computed for all previous tokens.
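
To make the autoregressive picture concrete, here is a minimal sketch of single-head decoding with a key/value cache. It is an illustration, not code from the paper: the width `d`, the random projection matrices `W_q`, `W_k`, `W_v`, and the helper names `attend` and `decode` are all hypothetical. The point is that the cache, which plays the role of the recurrent state, gains one key and one value for every token processed.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8  # hypothetical model width

# Hypothetical, randomly initialized projections for a single attention head
W_q, W_k, W_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))

def attend(q, K, V):
    """Softmax attention of one query over all cached keys and values."""
    scores = K @ q / np.sqrt(d)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ V

def decode(xs):
    """Process token embeddings one at a time, caching keys and values."""
    K_cache, V_cache, outputs = [], [], []
    for x in xs:
        # The "state" grows by one key/value pair per token.
        K_cache.append(W_k @ x)
        V_cache.append(W_v @ x)
        outputs.append(attend(W_q @ x, np.stack(K_cache), np.stack(V_cache)))
    return np.stack(outputs), len(K_cache)

xs = rng.standard_normal((5, d))  # five hypothetical token embeddings
outs, cache_len = decode(xs)
print(outs.shape, cache_len)  # (5, 8) 5
```

Because nothing is ever evicted, the cache length equals the number of tokens seen so far, which is the unbounded state the researchers describe below.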

Researchers from the Hebrew University of Jerusalem and FAIR (AI at Meta) have shown that the autoregressive nature of decoder-only transformers aligns with the core principle of RNNs: carrying a state from one step to the next. They formally redefine decoder-only transformers as multi-state RNNs (MSRNNs), a generalization of traditional RNNs. Under this view, the multi-state grows with every decoded token, so a transformer is an MSRNN with an unbounded (infinite) number of states. The researchers further show that transformers can be compressed into finite MSRNNs by capping the number of token representations kept at each step. They introduce TOVA (Token Omission Via Attention), a compression policy for MSRNNs that selects which tokens to retain based solely on their attention scores. TOVA is evaluated on four long-range tasks.
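
The TOVA idea can be sketched as a key/value cache with a fixed budget that evicts the token receiving the lowest attention score. This is a simplified illustration under stated assumptions, not the authors' implementation: it uses a single attention head, the class name `TovaCache` and its `capacity` parameter are hypothetical, and the order of operations (attend over the cache including the new token, then evict if over budget) is our choice.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

class TovaCache:
    """Hypothetical single-head KV cache with a fixed token budget.

    Once the budget is exceeded, the cached token with the lowest
    attention score for the current query is dropped.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.keys, self.values, self.positions = [], [], []

    def step(self, q, k, v, pos):
        # Add the new token, then attend over everything currently cached.
        self.keys.append(k)
        self.values.append(v)
        self.positions.append(pos)
        K, V = np.stack(self.keys), np.stack(self.values)
        weights = softmax(K @ q / np.sqrt(q.shape[0]))
        out = weights @ V
        # Over budget: evict the token that received the least attention.
        if len(self.keys) > self.capacity:
            drop = int(np.argmin(weights))
            for buf in (self.keys, self.values, self.positions):
                del buf[drop]
        return out, weights

# Usage: stream ten random tokens through a 4-token budget.
rng = np.random.default_rng(0)
cache = TovaCache(capacity=4)
for t in range(10):
    q, k, v = rng.standard_normal((3, 16))
    cache.step(q, k, v, pos=t)
print(len(cache.keys), cache.positions)
```

Unlike a sliding window, this policy keeps whichever tokens the model currently attends to most, so old tokens can survive and even the newest token can be dropped.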

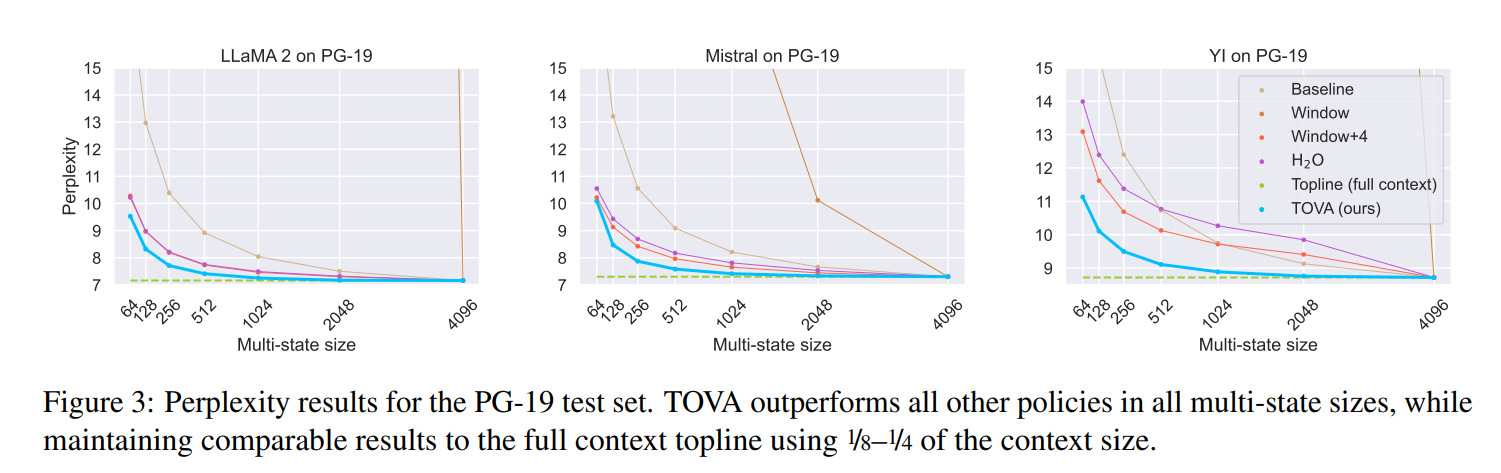

The study compares transformers and RNNs, showing that decoder-only transformers can be conceptualized as infinite multi-state RNNs and that pre-trained transformers can be converted into finite multi-state RNNs by fixing the size of their hidden state. For language modeling, it reports perplexity on the PG-19 test set. To assess long-range understanding, it uses test sets from the ZeroSCROLLS benchmark covering long-range summarization and long-range question answering, including the QASPER dataset for question answering over long texts. It also evaluates generated stories, using GPT-4 as the evaluator.
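
For readers unfamiliar with the language-modeling metric, perplexity is the exponential of the average negative log-likelihood per token, so lower is better. The short function below shows the standard definition; the name `perplexity` and the toy input are illustrative and not taken from the paper's evaluation code.

```python
import math

def perplexity(token_logprobs):
    """Perplexity = exp(mean negative log-likelihood per token)."""
    nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(nll)

# A model that assigns probability 1/4 to every token has perplexity 4.
print(perplexity([math.log(0.25)] * 10))  # approximately 4
```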

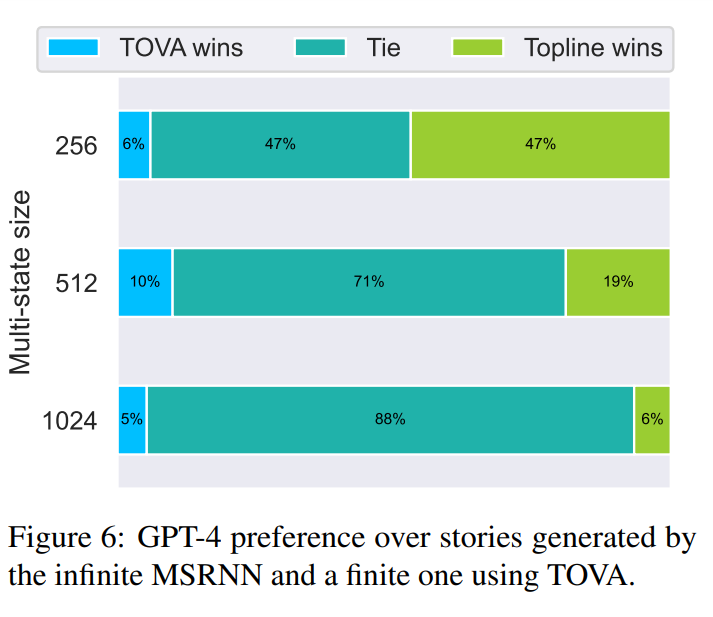

To run the language-modeling evaluation in parallel, the study implements different MSRNN policies, such as First In, First Out (FIFO), by modifying the attention mask. For story generation, the researchers use GPT-4 to compare texts produced under the TOVA policy with those produced by the full (topline) model.
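
A FIFO policy with a cache of `cache_size` tokens corresponds to a sliding-window causal mask: each position sees only itself and the `cache_size - 1` tokens just before it. The sketch below builds such a mask and applies it in one batched attention call. The function names `fifo_mask` and `masked_attention` are hypothetical, and counting the current token inside the cache budget is an assumption made for illustration.

```python
import numpy as np

def fifo_mask(seq_len, cache_size):
    """Boolean mask: True where query i may attend to key j.

    Under FIFO with a cache of `cache_size` tokens (current token
    included), query i sees keys j with i - cache_size < j <= i.
    """
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return (j <= i) & (j > i - cache_size)

def masked_attention(Q, K, V, mask):
    """Softmax attention for all positions at once, under a boolean mask."""
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    # Every row keeps its diagonal entry, so the row maximum is finite.
    scores -= scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=-1, keepdims=True)
    return w @ V

print(fifo_mask(5, 3).astype(int))
# [[1 0 0 0 0]
#  [1 1 0 0 0]
#  [1 1 1 0 0]
#  [0 1 1 1 0]
#  [0 0 1 1 1]]
```

Running `masked_attention` with this mask gives the same per-position outputs as decoding token by token with a FIFO cache, but in a single parallel pass over the sequence. A score-dependent policy such as TOVA cannot be expressed as a fixed band like this one, since which tokens survive depends on the attention weights themselves.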

The study shows that decoder-only LLMs often behave like finite MSRNNs in practice, even though they are trained as infinite MSRNNs. The proposed TOVA policy consistently outperforms the other policies on the long-range tasks at smaller cache sizes, across multi-state sizes and models. In language modeling, TOVA with a quarter or even an eighth of the full context produces results within one perplexity point of the topline model. The study also reports a reduction in LLM cache size of up to 88%, which lowers memory consumption during inference. Acknowledging computational limits, the researchers approximate the infinite MSRNN with a sequence length of 4,096 tokens in their extrapolation experiments.
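
A quick back-of-the-envelope calculation shows why shrinking the multi-state matters for memory. The sketch estimates key/value cache size for a single sequence. The defaults (32 layers, 32 key/value heads, head dimension 128, 2-byte fp16 values) are assumptions loosely modeled on a 7B-parameter LLaMA-style decoder, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_tokens, num_layers=32, num_kv_heads=32, head_dim=128,
                   bytes_per_value=2):
    """Bytes for cached keys and values across all layers and heads.

    Defaults are assumed, LLaMA-style 7B dimensions in fp16 (batch size 1).
    """
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_value

full = kv_cache_bytes(4096)         # full 4,096-token context
eighth = kv_cache_bytes(4096 // 8)  # cache budget of 512 tokens
print(full / 2**30, eighth / 2**20, 1 - eighth / full)  # 2.0 256.0 0.875
```

Keeping one eighth of the context cuts the cache by 87.5% under any such configuration, since the cache grows linearly with the number of stored tokens; this is consistent with the reported reduction of up to 88%.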

In summary, the researchers redefine decoder-only transformers as multi-state RNNs with an infinite multi-state size. Limiting the number of token representations a transformer can handle at each step is equivalent to compressing it from an infinite to a finite MSRNN. TOVA, a simple compression policy that chooses which tokens to keep based on their attention scores, outperforms existing compression policies and performs comparably to the infinite MSRNN while using a much smaller multi-state. Although they are not trained to do so, transformers often function as finite MSRNNs in practice. These findings shed light on the inner workings of transformers and their connection to RNNs, and they have practical value in reducing LLM cache size by up to 88%.

Check out the Paper. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
