Following the success of large language models (LLMs), research is now extending beyond text-based understanding to multimodal reasoning tasks. These tasks integrate vision and language, which is essential for artificial general intelligence (AGI). Cognitive benchmarks such as PuzzleVQA and AlgoPuzzleVQA evaluate a model's ability to process abstract visual information and perform algorithmic reasoning. Despite recent advances, LLMs still struggle with multimodal reasoning, particularly pattern recognition and spatial problem solving. High computational costs compound these challenges.

Previous evaluations relied on symbolic benchmarks such as ARC-AGI and visual assessments such as Raven's Progressive Matrices. However, these do not adequately challenge an AI system's ability to process multimodal inputs. More recently, datasets such as PuzzleVQA and AlgoPuzzleVQA have been introduced to evaluate abstract visual reasoning and algorithmic problem solving. These datasets require models to integrate visual perception, logical deduction, and structured reasoning. While earlier models such as GPT-4-Turbo and GPT-4o showed improvements, they still faced limitations in abstract reasoning and multimodal interpretation.

Researchers from the Singapore University of Technology and Design (SUTD) introduced a systematic evaluation of OpenAI's GPT-(n) and o-(n) model series on multimodal puzzle-solving tasks. Their study examined how reasoning capabilities evolved across model generations. The aim was to identify gaps in AI perception, abstract reasoning, and problem-solving skills. The team compared the performance of GPT-4-Turbo, GPT-4o, and o1 on the PuzzleVQA and AlgoPuzzleVQA datasets, which cover abstract visual puzzles and algorithmic reasoning challenges.

The researchers carried out a structured evaluation using two main data sets:

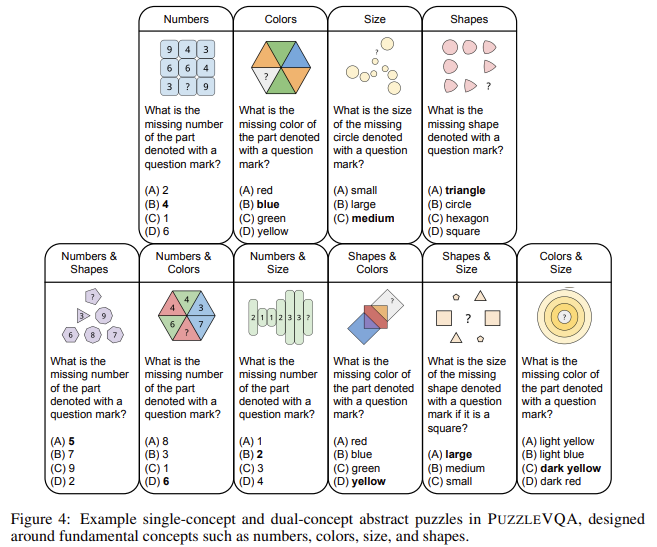

- PuzzleVQA: focuses on abstract visual reasoning and requires models to recognize patterns in numbers, shapes, colors, and sizes.

- AlgoPuzzleVQA: presents algorithmic problem-solving tasks that require logical deduction and computational reasoning.

The evaluation used both multiple-choice and open-ended questions. The study applied zero-shot chain-of-thought (CoT) prompting for reasoning and analyzed the performance drop when switching from multiple-choice to open-ended responses. The models were also tested under conditions where visual perception and inductive reasoning guidance were provided separately, to diagnose specific weaknesses.
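To make the evaluation conditions concrete, the following is a minimal sketch of how such prompts could be assembled. It is an illustration under stated assumptions, not the study's actual code: the `Puzzle` class, the `build_prompt` function, the hint fields, and the exact prompt wording (including the "Let's think step by step." cue, a common zero-shot CoT trigger) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Common zero-shot CoT cue; assumed here, not quoted from the study.
COT_TRIGGER = "Let's think step by step."


@dataclass
class Puzzle:
    """Hypothetical container for one puzzle instance (image omitted)."""
    question: str
    options: Sequence[str]                 # candidates for the multiple-choice setting
    perception_hint: Optional[str] = None  # explicit description of what the image shows
    induction_hint: Optional[str] = None   # explicit statement of the underlying pattern


def build_prompt(
    puzzle: Puzzle,
    multiple_choice: bool = True,
    give_perception: bool = False,
    give_induction: bool = False,
) -> str:
    """Assemble the text part of a zero-shot CoT prompt for one condition."""
    parts = []
    # Diagnostic conditions: optionally hand the model the visual details
    # and/or the pattern, so that failures can be attributed to one stage.
    if give_perception and puzzle.perception_hint:
        parts.append(f"Image description: {puzzle.perception_hint}")
    if give_induction and puzzle.induction_hint:
        parts.append(f"Pattern: {puzzle.induction_hint}")
    parts.append(f"Question: {puzzle.question}")
    # Open-ended setting: simply omit the answer options.
    if multiple_choice:
        for label, option in zip("ABCDEFGH", puzzle.options):
            parts.append(f"({label}) {option}")
    parts.append(COT_TRIGGER)
    return "\n".join(parts)
```

Calling `build_prompt(puzzle, multiple_choice=False)` yields the open-ended variant, while setting `give_perception=True` or `give_induction=True` yields the guided variants. Comparing accuracy across these conditions is what lets an evaluation separate perception errors from reasoning errors.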

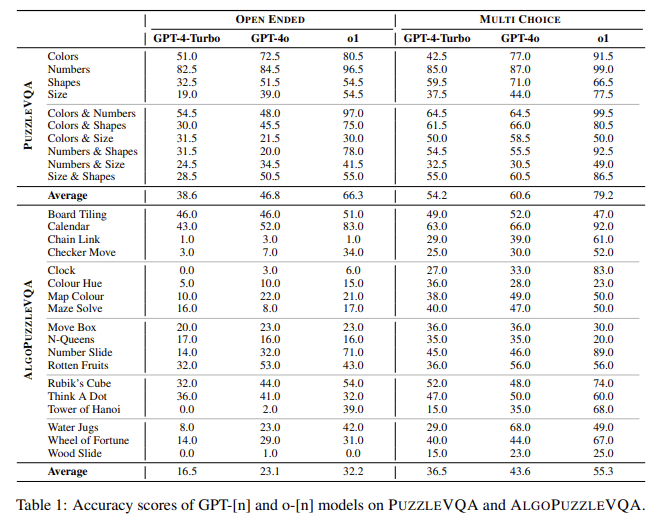

The study observed steady improvements in reasoning capabilities across model generations. GPT-4o outperformed GPT-4-Turbo, while o1 achieved the most notable advances, particularly in algorithmic reasoning tasks. However, these gains came with a sharp increase in computational cost. Despite the overall progress, the models still struggled with tasks that required precise visual interpretation, such as recognizing missing shapes or deciphering abstract patterns. While o1 performed well in numerical reasoning, it had difficulty with shape-based puzzles. The accuracy gap between multiple-choice and open-ended tasks indicated a strong dependence on the answer options provided. In addition, perception remained a major challenge for all models, with accuracy improving significantly when explicit visual details were provided.

The key takeaways from the study are:

- The study observed a significant upward trend in reasoning capabilities from GPT-4-Turbo to GPT-4o to o1. While GPT-4o showed moderate gains, the transition to o1 brought notable improvements, but at a 750x increase in computational cost compared to GPT-4o.

- On PuzzleVQA, o1 achieved an average accuracy of 79.2% in the multiple-choice setting, exceeding GPT-4o's 60.6% and GPT-4-Turbo's 54.2%. However, in open-ended tasks all models exhibited performance drops, with o1 scoring 66.3%, GPT-4o 46.8%, and GPT-4-Turbo 38.6%.

- On AlgoPuzzleVQA, o1 improved substantially over previous models, particularly on puzzles that require numerical and spatial deduction. o1 scored 55.3%, compared to 43.6% for GPT-4o and 36.5% for GPT-4-Turbo in multiple-choice tasks. However, its accuracy decreased by 23.1% in open-ended tasks.

- The study identified perception as the main limitation across all models. Injecting explicit visual details improved accuracy by 22%–30%, indicating a dependence on external perception aids. Inductive reasoning guidance raised accuracy by a further 6%–19%, particularly in recognizing numerical and spatial patterns.

- o1 excelled at numerical reasoning but struggled with shape-based puzzles, showing a 4.5% drop compared to GPT-4o on shape recognition tasks. It also performed well in structured problem solving but faced challenges in open-ended scenarios that require independent deduction.
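The gap between the multiple-choice and open-ended settings on PuzzleVQA can be worked out directly from the accuracies quoted in the list above. The short sketch below does only that arithmetic; the variable and function names are illustrative, and no figures beyond those already reported are assumed.

```python
# Accuracies (%) on PuzzleVQA as quoted above: (multiple choice, open-ended).
puzzlevqa_scores = {
    "GPT-4-Turbo": (54.2, 38.6),
    "GPT-4o": (60.6, 46.8),
    "o1": (79.2, 66.3),
}


def open_ended_drop(scores):
    """Return the drop, in percentage points, from multiple choice to open-ended."""
    return {
        model: round(multiple_choice - open_ended, 1)
        for model, (multiple_choice, open_ended) in scores.items()
    }


drops = open_ended_drop(puzzlevqa_scores)
for model, drop in drops.items():
    print(f"{model}: {drop} percentage points")
```

On these figures every model loses more than 12 percentage points once the answer options are removed, and the loss shrinks slightly with each model generation, which is consistent with the reliance on answer options noted in the study.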

Check out the Paper and GitHub page. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, Sana brings a fresh perspective to the intersection of AI and real-life solutions.
