Introduction

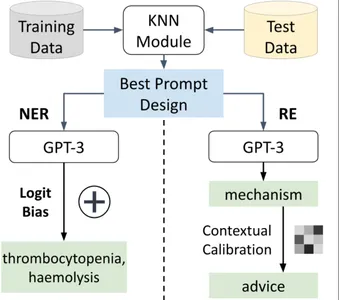

Artificial intelligence (AI) has grown significantly in the past few years, driven largely by the rise of large language models (LLMs). These AI systems are trained on vast datasets of human-written text and have enabled a wide range of technological advances. The sheer scale and complexity of LLMs such as GPT-3 (Generative Pre-trained Transformer 3) have put them at the forefront of natural language understanding and generation. This article examines how LLMs can make email more efficient, focusing on their role in generating responses and sorting incoming messages. As digital communication evolves, the need for efficient, context-aware, and personalized email responses has become increasingly critical. LLMs can reshape this landscape by improving communication productivity, automating repetitive tasks, and augmenting human ingenuity.

Learning Objectives

- Trace the evolution of language models, discerning pivotal milestones and grasping the development from foundational systems to advanced models like GPT-3.5.

- Navigate the intricacies of training large language models, including data preparation, model architecture, and the required computational resources, while exploring the challenges and solutions involved in fine-tuning and transfer learning.

- Investigate how large language models transform email communication.

- Work through how language models optimize email sorting processes.

This article was published as a part of the Data Science Blogathon.

Understanding Large Language Models

Large language models (LLMs) represent a significant step forward in artificial intelligence, especially in processing human language. They are good at understanding and generating human-like text and perform well across a wide range of language tasks, which is why they have attracted so much interest. To grasp the concept of LLMs, it helps to examine two key aspects: what they are and how they work.

What are Large Language Models?

At their core, large language models are neural networks with an enormous number of learned parameters. What sets them apart is their sheer scale. They are pre-trained on vast and diverse text datasets encompassing everything from books and articles to websites and social media posts. This pre-training phase exposes them to the intricacies of human language, allowing them to learn grammar, syntax, semantics, and even some common-sense reasoning. Importantly, LLMs don’t just regurgitate learned text; they can generate new, coherent, and contextually relevant responses.

One of the most notable examples of LLMs is GPT-3 (Generative Pre-trained Transformer 3). GPT-3 has 175 billion parameters, making it one of the largest language models of its time. These parameters are the weights of its neural network, and they are learned during training so that the model can predict the next word (more precisely, the next token) in a sequence from the context provided by the preceding words. This predictive capability is harnessed for various applications, from email response generation to content creation and translation services.

In essence, LLMs like GPT-3 sit at the intersection of cutting-edge AI technology and the complexities of human language. They can understand and generate fluent text, which makes them versatile tools with broad implications across industries and applications.

Training Processes and Models like GPT-3

The training process for large language models is an intricate and resource-intensive endeavor. It begins with acquiring massive textual datasets from the internet, encompassing diverse sources and domains. These datasets serve as the foundation upon which the model is built. During the training process, the model learns to predict the likelihood of a word or sequence of words given the preceding context. This process is achieved by optimizing the model’s neural network, adjusting the weights of its parameters to minimize prediction errors.
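The core idea of "predict the next word, measure the error, adjust the weights" can be shown at toy scale. The sketch below was written for this article and is not code from any real LLM. It fits a bigram model, meaning a single weight matrix of next-word scores where each word is predicted only from the previous word. Training uses plain gradient descent on the average cross-entropy of the next word. Real LLMs replace the matrix with a deep transformer, work on subword tokens, and train on vastly larger corpora, but the objective is the same kind of next-token prediction.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(corpus, epochs=200, lr=0.5):
    """Fit next-word logits W[prev][next] by gradient descent on cross-entropy."""
    words = corpus.split()
    vocab = sorted(set(words))
    idx = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    W = [[0.0] * n for _ in range(n)]  # one row of next-word logits per context word
    pairs = [(idx[a], idx[b]) for a, b in zip(words, words[1:])]
    losses = []
    for _ in range(epochs):
        grad = [[0.0] * n for _ in range(n)]
        loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(W[prev])
            loss -= math.log(probs[nxt])  # penalty for a low probability on the true next word
            # Gradient of cross-entropy w.r.t. the logits is (probs - one_hot(next))
            for j in range(n):
                grad[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        for i in range(n):
            for j in range(n):
                W[i][j] -= lr * grad[i][j] / len(pairs)  # step against the mean gradient
        losses.append(loss / len(pairs))
    return vocab, W, losses

def predict_next(word, vocab, W):
    """Return the most likely next word after `word`."""
    probs = softmax(W[vocab.index(word)])
    return vocab[max(range(len(vocab)), key=probs.__getitem__)]

corpus = "please reset my password please reset my account please send the invoice"
vocab, W, losses = train_bigram(corpus)
print(f"average loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print("after 'reset':", predict_next("reset", vocab, W))
```

The loss starts at ln(8), because all eight words are equally likely before training, and falls as the weights shift toward the word pairs that actually occur.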

GPT-3 Architecture Overview

GPT-3, or the “Generative Pre-trained Transformer 3,” is a state-of-the-art language model developed by OpenAI. Its architecture is based on the Transformer model, which revolutionized natural language processing tasks by employing a self-attention mechanism.

Transformer Architecture: The Transformer architecture, introduced by Vaswani et al. in 2017, plays a pivotal role in GPT-3. It relies on self-attention, which lets the model weigh the importance of different words in a sequence when making predictions. Because GPT-3 generates text left to right, each position attends to every preceding token in its context window (masked, or causal, self-attention). This lets the model capture long-range dependencies effectively.
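To make self-attention concrete, here is a minimal single-head sketch of scaled dot-product attention with a causal mask. This is the variant used by decoder-only models such as GPT-3. It is written in plain Python for readability. The query, key, and value vectors are made-up numbers; a real model computes them from learned projection matrices and runs many attention heads in parallel.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def causal_self_attention(Q, K, V):
    """Single-head scaled dot-product attention with a causal mask.

    Q, K, V are lists of vectors (one per token). Token i may only attend
    to tokens 0..i, so the model cannot peek at future words.
    Returns (outputs, attention_weights).
    """
    d_k = len(K[0])
    outputs, all_weights = [], []
    for i, q in enumerate(Q):
        # Score token i against itself and every earlier token, scaled by sqrt(d_k)
        scores = [sum(a * b for a, b in zip(q, K[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors
        out = [sum(w * V[j][c] for j, w in enumerate(weights))
               for c in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three tokens with 2-dimensional toy vectors (illustrative values only)
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
outputs, weights = causal_self_attention(Q, K, V)
for i, w in enumerate(weights):
    print(f"token {i} attends with weights {[round(x, 3) for x in w]}")
```

The first token can only see itself, so its output is simply its own value vector. Later tokens blend information from everything before them.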

Scale of GPT-3: What makes GPT-3 particularly remarkable is its unprecedented scale. With 175 billion parameters, it was the largest language model of its time. This immense scale contributes to its ability to understand and generate complex language patterns, making it highly versatile across various natural language processing tasks.

Layered Architecture: GPT-3’s architecture is deeply layered; its largest version stacks 96 transformer layers on top of each other. Each layer refines the representation of the input text, allowing the model to capture hierarchical features and abstract representations. This depth contributes to GPT-3’s ability to capture intricate nuances in language.

Attention to Detail: Each of these layers uses multi-head self-attention. This lets the model attend to specific words, phrases, or syntactic structures within a given context, with different heads free to focus on different relationships. This fine-grained attention is crucial to the model’s ability to generate coherent and contextually relevant text.

Adaptability: GPT-3’s architecture enables it to adapt to various natural language processing tasks without task-specific training. A task can often be specified in the prompt alone, optionally with a few worked examples (few-shot learning). Pre-training on diverse datasets allows the model to generalize well, making it applicable to tasks like language translation, summarization, question answering, and more.
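In practice, adapting a model like GPT-3 to a new task such as email triage can be as simple as placing a few labeled examples in front of the new input (few-shot prompting), with no change to the model's weights. The helper below only assembles such a prompt. The label set, the example emails, and the `Email:`/`Category:` layout are illustrative assumptions; the resulting string would be sent to whichever model API you use.

```python
def build_few_shot_prompt(examples, new_email, instruction):
    """Assemble a few-shot classification prompt.

    examples: list of (email_text, label) pairs shown to the model as demonstrations.
    The model is expected to continue the pattern after the final 'Category:'.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Email: {text}")
        lines.append(f"Category: {label}")
        lines.append("")  # blank line separates demonstrations
    lines.append(f"Email: {new_email}")
    lines.append("Category:")
    return "\n".join(lines)

examples = [
    ("I can't log in after changing my password.", "support"),
    ("Attached is the invoice for March.", "billing"),
    ("Would you like to join our webinar next week?", "marketing"),
]
prompt = build_few_shot_prompt(
    examples,
    "My card was charged twice for one order.",
    "Classify each email as support, billing, or marketing.",
)
print(prompt)
```

The examples steer the model only for this one request, which is what distinguishes this kind of in-context learning from fine-tuning.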

Significance of GPT-3’s Architecture

- Versatility: The layered architecture and the vast number of parameters empower GPT-3 with unparalleled versatility, allowing it to excel in various language-related tasks without task-specific fine-tuning.

- Contextual Understanding: The self-attention mechanism and layered structure enable GPT-3 to understand and generate text with a deep appreciation for context, making it proficient in handling nuanced language constructs.

- Adaptive Learning: GPT-3’s architecture facilitates adaptive learning, enabling the model to adapt to new tasks without extensive retraining. This adaptability is a critical feature that distinguishes it in natural language processing.

GPT-3’s architecture, built upon the Transformer model and distinguished by its scale and depth, is a technological marvel that has significantly advanced the capabilities of large language models in understanding and generating human-like text across diverse applications.

Capabilities and Applications

Large Language Models (LLMs) possess a wide range of natural language understanding and generation capabilities. These capabilities open the door to numerous applications, including their utilization in email response generation. Let’s explore these points in more detail:

1. Email Response Generation: LLMs offer significant utility in automating and enhancing the email response process, leveraging their language understanding and generation capabilities.

2. Content Creation: LLMs are powerful tools for generating creative content, including articles, blog posts, and social media updates. They can mimic specific writing styles, adapt to different tones, and produce engaging and contextually relevant content.

3. Chatbot Interactions: LLMs serve as the backbone for intelligent chatbots. They can engage in dynamic and context-aware conversations, providing users with information, assistance, and support. This is particularly useful in customer service applications.

4. Summarization Services: LLMs excel at distilling large volumes of text into concise summaries. This is valuable in news aggregation, document summarization, and content curation applications.

5. Translation Services: Leveraging their multilingual understanding, LLMs can be employed for accurate and contextually appropriate translation services. This is beneficial for breaking down language barriers in global communication.

6. Legal Document Drafting: In the legal domain, LLMs can assist in drafting standard legal documents, contracts, and agreements. They can generate text that adheres to legal terminology and formatting conventions.

7. Educational Content Generation: LLMs can aid in creating educational materials, including lesson plans, quizzes, and study guides. They can generate content tailored to different academic levels and subjects.

8. Code Generation: LLMs can generate code snippets based on natural language descriptions. This is particularly useful for programmers and developers looking for quick, accurate code suggestions.

These examples underscore the versatile applications of LLMs, showcasing their ability to streamline communication processes, automate tasks, and enhance content creation across various domains.

Enhancing Email Communication

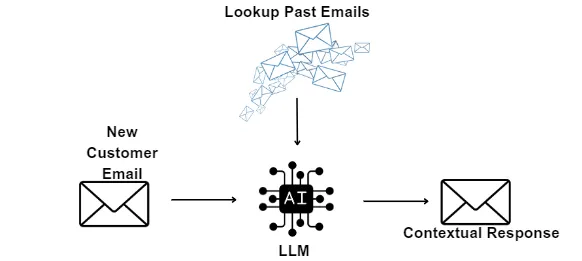

Effective email communication is a cornerstone of modern professional and personal interactions. Large Language Models (LLMs) play a pivotal role in enhancing this communication through various capabilities and applications, including automated responses, multilingual support, translation, content summarization, and sentiment analysis.

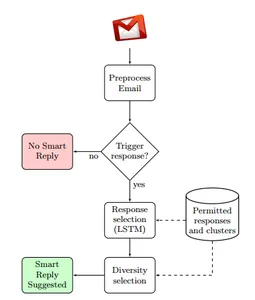

Automated Responses and Efficiency

LLMs can significantly improve email communication efficiency through automated responses. When used in email systems, they can generate automatic replies to common inquiries or messages. For example, if someone sends a password reset request, an LLM can quickly create a response with the necessary instructions, reducing the workload for human responders.

These automated responses are not limited to routine tasks; LLMs can also handle more complex queries. For instance, they can analyze the content of an incoming email, understand its intent, and generate a personalized and contextually relevant response. This saves time for both senders and recipients and helps keep responses consistent, although generated replies should still be checked for accuracy.
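One way to wire this into an email system is to wrap the incoming message in an instruction prompt and pass it to a text-generation function. In the sketch below, `generate` is a hypothetical placeholder for any LLM call, whether a hosted API or a local model. A stub stands in for it so the example runs without a model. The prompt wording and the `pending_review` status are assumptions for illustration, not a recommended template. Drafts are marked for human review rather than sent automatically.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Email:
    sender: str
    subject: str
    body: str

def build_reply_prompt(email: Email, tone: str = "polite and concise") -> str:
    """Turn an incoming email into an instruction prompt for an LLM."""
    return (
        f"You are a helpful assistant drafting email replies. Write a {tone} reply.\n\n"
        f"From: {email.sender}\n"
        f"Subject: {email.subject}\n\n"
        f"{email.body}\n\n"
        "Reply:"
    )

def draft_reply(email: Email, generate: Callable[[str], str]) -> dict:
    """Produce a draft reply; `generate` is any prompt -> text function (hypothetical)."""
    draft = generate(build_reply_prompt(email)).strip()
    # Keep a human in the loop: mark the draft as pending review instead of sending it
    return {"to": email.sender, "subject": "Re: " + email.subject,
            "body": draft, "status": "pending_review"}

def fake_generate(prompt: str) -> str:
    """Stand-in for a real model so the example runs offline."""
    return "  Hi, you can reset your password from the login page via 'Forgot password'.  "

incoming = Email("ana@example.com", "Password reset", "I forgot my password. How do I reset it?")
reply = draft_reply(incoming, fake_generate)
print(reply)
```

Passing the model in as a function keeps the email-handling logic independent of any particular provider and makes it easy to test.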

Multilingual Support and Translation

In our increasingly globalized world, email communication often spans multiple languages. LLMs excel in providing multilingual support and translation services. They can help bridge language barriers by translating emails from one language to another, making communication more accessible and inclusive.

LLMs use their deep understanding of language to produce translations that are not merely literal but contextually appropriate. They can maintain the tone and intent of the original message, even when moving between languages. This feature is invaluable for international businesses, organizations, and individuals engaging in cross-cultural communication.

Content Summarization and Sentiment Analysis

Emails often contain lengthy and detailed information. LLMs are equipped to tackle this challenge through content summarization. They can analyze the content of emails and provide concise summaries, highlighting key points and critical information. This is especially useful for busy professionals who need to grasp the essence of lengthy messages quickly.
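Very long emails or threads can exceed a model's context window. A common workaround is map-reduce summarization: split the text into chunks, summarize each chunk, then summarize the combined partial summaries. This technique is assumed here rather than prescribed by any particular product. In the sketch, `summarize` is a hypothetical placeholder for an LLM call, and chunk size is measured in words as a rough stand-in for tokens.

```python
from typing import Callable, List

def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def summarize_long(text: str, summarize: Callable[[str], str], max_words: int = 500) -> str:
    """Map-reduce summarization: summarize each chunk, then summarize the summaries."""
    chunks = chunk_words(text, max_words)
    if len(chunks) <= 1:
        return summarize(text)  # short enough for a single call
    partials = [summarize(chunk) for chunk in chunks]  # map step
    return summarize("\n".join(partials))  # reduce step

calls = []

def fake_summarize(text: str) -> str:
    """Offline stand-in for an LLM call: records the input and reports its size."""
    calls.append(text)
    return f"[summary of {len(text.split())} words]"

email = ("The Q3 budget was approved. Travel is capped at 5k. "
         "The launch moves to October. Marketing needs new copy.")
print(summarize_long(email, fake_summarize, max_words=10))
print(f"{len(calls)} model calls")
```

If the combined partial summaries are themselves too long, the reduce step can be applied recursively.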

Additionally, LLMs can perform sentiment analysis on incoming emails. They assess the emotional tone of the message, helping users identify positive or negative sentiments. This analysis can be vital for prioritizing responses to urgent or emotionally charged emails, ensuring that critical issues are addressed promptly and effectively.

In conclusion, LLMs contribute significantly to enhancing email communication by automating responses, breaking down language barriers, and simplifying the understanding of email content. These capabilities improve efficiency and enable more effective and personalized email interactions.

Email Sorting and Organization

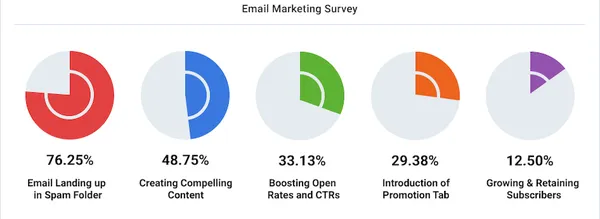

Efficient email sorting and organization are essential for managing the ever-increasing volume of emails in both personal and professional contexts. Large Language Models (LLMs) contribute significantly to email management through their capabilities, including spam filtering and priority sorting, categorization and auto-tagging, and conversation thread identification.

Spam Filtering and Priority Sorting

Spam is a major problem with email: it can flood your inbox and bury important messages. LLMs play a vital role in addressing this challenge. They can analyze an incoming email’s content, sender, and other characteristics to determine whether it is likely to be spam or a legitimate message.

LLMs can also assist in prioritizing emails based on their content and context. For instance, they can identify emails containing keywords like “urgent” or “important” and ensure they receive immediate attention. By automating this process, LLMs help users focus on critical messages, enhancing productivity and responsiveness.
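The keyword-based prioritization described above can be sketched in a few lines. This is deliberately a simple rule-based stand-in, not an LLM. The keyword weights, and the choice to count subject matches double, are illustrative assumptions. In an LLM-backed system the score would more likely come from the model's judgment of the message, such as a predicted urgency label. The surrounding plumbing would stay the same: score each message, then sort the inbox by score.

```python
URGENCY_KEYWORDS = {"urgent": 3, "asap": 3, "important": 2, "deadline": 2, "reminder": 1}  # illustrative

def priority_score(subject: str, body: str) -> int:
    """Sum keyword weights found in the subject (counted double) and body."""
    score = 0
    subject_l, body_l = subject.lower(), body.lower()
    for kw, weight in URGENCY_KEYWORDS.items():
        if kw in subject_l:
            score += 2 * weight
        if kw in body_l:
            score += weight
    return score

def sort_inbox(emails):
    """Return emails ordered from highest to lowest priority (ties keep inbox order)."""
    return sorted(emails, key=lambda e: priority_score(e["subject"], e["body"]), reverse=True)

inbox = [
    {"subject": "Lunch on Friday?", "body": "Anyone free?"},
    {"subject": "URGENT: server down", "body": "Production is down, please respond asap."},
    {"subject": "Contract review", "body": "This is important, the deadline is Monday."},
]
for e in sort_inbox(inbox):
    print(priority_score(e["subject"], e["body"]), e["subject"])
```

Plain substring matching is crude; for example, it also fires on "unimportant". This is exactly the kind of gap a model that reads the whole message can close.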

Categorization and Auto-Tagging

Categorizing and organizing emails into relevant folders or labels can streamline email management. LLMs are adept at classifying emails based on content, subject lines, and other attributes. For example, emails related to finance, marketing, customer support, or specific projects can be automatically sorted into their respective folders.

Furthermore, LLMs can auto-tag emails with relevant keywords or labels, making it easier for users to search for specific messages later. This feature enhances email accessibility and allows users to retrieve information quickly, particularly in cases where they need to reference past communications or documents.
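When a model is asked to pick categories or tags, its free-text answer must be mapped back onto the labels your mail system actually has. The sketch below assumes a hypothetical `classify` function that returns the model's raw answer, for example in response to a prompt listing the allowed labels. It normalizes that answer, keeps only known labels, and falls back to a default folder when nothing valid comes back. The folder names are illustrative.

```python
from typing import Callable, List

ALLOWED_LABELS = {"finance", "marketing", "customer-support", "project-alpha"}  # illustrative

def parse_labels(raw_answer: str, allowed=ALLOWED_LABELS) -> List[str]:
    """Turn a comma-separated model answer into a sorted list of valid labels."""
    candidates = {part.strip().strip(".").lower().replace(" ", "-")
                  for part in raw_answer.split(",")}
    return sorted(candidates & allowed)  # drop anything the mail system doesn't know

def auto_tag(email_text: str, classify: Callable[[str], str], default: str = "inbox") -> List[str]:
    """Ask the (hypothetical) classifier for labels; fall back to a default folder."""
    labels = parse_labels(classify(email_text))
    return labels or [default]

def fake_classify(text: str) -> str:
    """Offline stand-in for an LLM answer, including one unknown label."""
    return "Finance, Customer Support, urgent-ish."

print(auto_tag("Refund for invoice #123 has not arrived.", fake_classify))
```

Validating against a fixed label set prevents a model's occasional invented or misspelled label from creating stray folders.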

Conversation Thread Identification

Email conversations often span multiple messages, so identifying them and organizing them into coherent threads is essential. LLMs can help with conversation thread identification: they can analyze the content, recipient lists, and timestamps of emails to group related messages into threads.
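A simple non-LLM baseline for thread grouping clusters messages by normalized subject line and participant set, then orders each thread by timestamp. This heuristic is an illustrative assumption. Real mail clients rely primarily on the `Message-ID`, `In-Reply-To`, and `References` headers. A model adds value mainly where those headers are missing or where the subject changes mid-conversation.

```python
import re
from collections import defaultdict
from datetime import datetime

REPLY_PREFIX = re.compile(r"^\s*((re|fw|fwd)\s*:\s*)+", re.IGNORECASE)

def normalize_subject(subject: str) -> str:
    """Strip any run of Re:/Fw:/Fwd: prefixes and lowercase the rest."""
    return REPLY_PREFIX.sub("", subject).strip().lower()

def group_threads(messages):
    """Group messages by (normalized subject, participants), each sorted by send time."""
    threads = defaultdict(list)
    for msg in messages:
        participants = frozenset([msg["from"], *msg["to"]])
        threads[(normalize_subject(msg["subject"]), participants)].append(msg)
    return {key: sorted(msgs, key=lambda m: m["sent"]) for key, msgs in threads.items()}

messages = [
    {"from": "bo@example.com", "to": ["ana@example.com"], "subject": "Re: Q3 plan",
     "sent": datetime(2024, 5, 2, 9, 30)},
    {"from": "ana@example.com", "to": ["bo@example.com"], "subject": "Q3 plan",
     "sent": datetime(2024, 5, 1, 17, 0)},
    {"from": "cy@example.com", "to": ["ana@example.com"], "subject": "Lunch",
     "sent": datetime(2024, 5, 1, 12, 0)},
]
for (subject, people), msgs in group_threads(messages).items():
    print(subject, sorted(people), [m["sent"].isoformat() for m in msgs])
```

Keying on participants keeps unrelated conversations with the same generic subject apart. The trade-off is that a thread gets split when someone is added to the recipient list.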

By presenting emails in a threaded format, LLMs help users understand the context and history of a conversation at a glance. This feature is particularly valuable in collaborative work environments, where tracking the progress of discussions and decisions is crucial.

In summary, LLMs enhance email sorting and organization by automating spam filtering, prioritizing messages, categorizing and tagging emails, and identifying and grouping conversation threads. These capabilities save time and contribute to a more organized and efficient email management process.

User Assistance and Personalization

User assistance and personalization are critical aspects of modern email communication. Large Language Models (LLMs) offer valuable features in these areas, including search assistance and reminder alerts, personalized recommendations, and data security and privacy considerations.

Search Assistance and Reminder Alerts

LLMs enhance the user experience by assisting with email searches and providing reminder alerts. When users seek specific emails or information in their inboxes, LLMs can improve search accuracy by suggesting related keywords, phrases, or filters. This feature streamlines the retrieval of important messages, making email management more efficient.

Reminder alerts are another valuable function of LLMs. They can help users stay organized by sending notifications for important emails or tasks that require attention. LLMs can identify keywords, dates, or user-defined criteria to trigger these reminders, ensuring that critical items are not overlooked.
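For date-based reminders, a minimal rule-based sketch is to scan the message for explicit dates and schedule an alert some time before each one. It recognizes only ISO-style `YYYY-MM-DD` dates, and the one-day lead time is an illustrative assumption. An LLM could extract looser expressions such as "next Friday", which this regex does not handle.

```python
import re
from datetime import date, timedelta

ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")

def extract_dates(text: str):
    """Return valid ISO dates (YYYY-MM-DD) mentioned in the text, in order of appearance."""
    found = []
    for y, m, d in ISO_DATE.findall(text):
        try:
            found.append(date(int(y), int(m), int(d)))
        except ValueError:
            pass  # skip impossible dates such as 2024-02-30
    return found

def schedule_reminders(text: str, today: date, lead: timedelta = timedelta(days=1)):
    """Create (remind_on, due_date) pairs for upcoming dates, `lead` ahead of each."""
    reminders = []
    for due in extract_dates(text):
        if due >= today:  # ignore dates that are already past
            reminders.append((max(today, due - lead), due))
    return reminders

body = "Please send the signed contract by 2024-06-14. The old draft from 2024-05-01 is obsolete."
for remind_on, due in schedule_reminders(body, today=date(2024, 6, 10)):
    print(f"Remind on {remind_on} about item due {due}")
```
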

Personalized Recommendations

Personalization is a key driver of effective email communication. LLMs can personalize email interactions in various ways. For instance, when composing emails, these models can suggest completions or provide templates tailored to the user’s writing style and context. This assists users in crafting responses that resonate with the recipient.

Additionally, LLMs can analyze email content to provide personalized recommendations. For example, they can suggest relevant attachments or related articles based on the context of the email. This personalization improves the user experience by making email communication more convenient and relevant.

Data Security and Privacy Concerns

While LLMs offer numerous benefits, they raise concerns about data security and privacy. These models require access to email content and sometimes may store or process sensitive information. Users and organizations need to address these concerns responsibly.

Data security measures, such as encryption and access controls, should be in place to protect sensitive email data from unauthorized access. Furthermore, organizations must ensure that LLMs comply with data protection regulations and ethical guidelines. Ethical considerations include safeguarding user privacy, minimizing data collection, and providing transparency about how email content is used.
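One concrete form of data minimization is masking obvious personal identifiers before email text is sent to an external model. The patterns below are simplified, illustrative assumptions. They catch typical email addresses and phone-number-like digit runs, and they will also mask some non-phone numbers such as ISO dates. They are far from a complete PII detector; production systems usually combine rules like these with dedicated detection tools and access controls.

```python
import re

# Simplified patterns; real PII detection needs much broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text

msg = "Contact jane.doe@example.com or call +1 (555) 123-4567 about order 42."
print(redact(msg))
```

Short numbers such as the order ID are deliberately left alone, since the phone pattern requires at least nine digits and separators in a row.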

LLMs contribute to user assistance and personalization in email communication by improving search functionality, providing reminder alerts, offering personalized recommendations, and more. However, balancing these benefits with data security and privacy considerations is crucial to ensure responsible and secure use of these technologies.

Ethical Considerations

As we integrate large language models (LLMs) into email response generation and sorting, several ethical considerations come to the forefront. These include addressing biases in automated responses and ensuring responsible AI usage and compliance.

Biases in Automated Responses

A major concern when using these models to write emails is that their responses may unintentionally reflect unfair bias. LLMs learn from vast datasets, which may contain biased or prejudiced language. As a result, automated responses produced by these models can inadvertently perpetuate stereotypes or exhibit biased behavior.

It’s essential to implement mechanisms for bias detection and mitigation to address this issue. This may involve carefully curating training datasets to remove biased content, fine-tuning models with fairness in mind, and regularly monitoring and auditing automated responses. By proactively working to reduce biases, we can ensure that LLMs generate fair, respectful, and inclusive responses.

Responsible AI Usage and Compliance

Responsible AI usage is paramount when deploying LLMs in email communication. Compliance with ethical guidelines and data protection regulations, such as GDPR (General Data Protection Regulation), must be a top priority.

- User Consent: Users should be informed when LLMs are used in their email communication, and their consent should be obtained when necessary. Transparency about data processing and the role of AI in email response generation is crucial.

- Data Privacy: Protecting user data is fundamental. Organizations must implement robust data security measures to safeguard sensitive email content. Data should be anonymized and processed with respect for user privacy.

- Auditability: The actions of LLMs should be auditable, allowing users and organizations to trace how automated responses were generated and ensuring accountability.

- Human Oversight: While LLMs can automate many tasks, human oversight remains essential. Human reviewers should monitor and correct automated responses to meet ethical and organizational standards.

- Continuous Improvement: Responsible AI usage involves ongoing efforts to improve models and systems. Regular audits, feedback loops, and adjustments are necessary to maintain ethical AI practices.
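Auditability and human oversight can start with something as simple as an append-only log. For every generated reply, it records which model produced it, when, who reviewed it, and fingerprints of the exact prompt and output. The sketch below writes JSON Lines records with SHA-256 hashes. The field names are assumptions for illustration, as is the choice to store hashes rather than raw text so that the log itself holds less sensitive content. This is not a compliance standard.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone

def sha256_hex(text: str) -> str:
    """Fingerprint text so a stored copy can later be checked against the log."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def audit_record(model_name: str, prompt: str, response: str, reviewer=None) -> dict:
    """Build one audit entry for a generated email response."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model_name,
        "prompt_sha256": sha256_hex(prompt),
        "response_sha256": sha256_hex(response),
        "human_reviewer": reviewer,  # None until someone approves the draft
    }

def append_audit_log(path: str, record: dict) -> None:
    """Append the record as a single JSON line (JSON Lines format)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")

log_path = os.path.join(tempfile.mkdtemp(), "llm_audit.jsonl")
record = audit_record("gpt2", "Reply to: where is my order?", "Your order ships today.", reviewer="ana")
append_audit_log(log_path, record)
with open(log_path, encoding="utf-8") as f:
    print(f.read().strip())
```
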

In conclusion, ethical considerations when using LLMs in email response generation and sorting encompass addressing biases in automated responses, ensuring responsible AI usage, and complying with data protection regulations. By prioritizing fairness, transparency, and user privacy, we can harness the potential of LLMs while upholding ethical standards in email communication.

Real-world Applications

Large Language Models (LLMs) have found practical and impactful applications in various real-world scenarios, including the following case studies and examples:

1. Customer Support and Help Desks: Many companies use these models to help their customer service. For instance, a global e-commerce platform uses an LLM to automate responses to common customer inquiries about product availability, order tracking, and returns. This has significantly reduced response times and improved customer satisfaction.

2. Content Generation: A leading news organization employs an LLM to assist journalists in generating news articles. The LLM can quickly summarize large datasets, provide background information, and suggest possible news story angles. This accelerates content creation and allows journalists to focus on analysis and reporting.

3. Language Translation Services: An international organization relies on LLMs for real-time language translation in global meetings and conferences. LLMs can instantly translate spoken or written content into multiple languages, facilitating effective communication among participants who speak different languages.

4. Email Response Generation: A busy law firm uses LLMs to automate the generation of initial responses to client inquiries. The LLM can understand the nature of legal inquiries, draft preliminary responses, and flag cases requiring attorneys’ immediate attention. This streamlines client communication and improves efficiency.

5. Virtual Personal Assistants: A technology company has integrated an LLM into its virtual personal assistant app. Users can dictate emails, messages, or tasks to the assistant, and the LLM generates coherent text based on user input. This hands-free approach enhances accessibility and convenience.

6. Educational Support: In education, an online learning platform uses LLMs to provide instant explanations and answers to student queries. Whether students have questions about math problems or need clarification on complex concepts, the LLM can offer immediate assistance, promoting independent learning.

Challenges and Limitations

While large language models (LLMs) offer significant advantages in email response generation and sorting, they also have challenges and limitations. Understanding these issues is essential for using LLMs responsibly and effectively in email communication.

Model Limitations and Lack of True Understanding

The main limitation of these models is that, however impressive their output, they do not truly understand it. They generate text based on patterns and associations learned from vast datasets, which does not entail genuine comprehension. Some fundamental limitations include:

- Lack of Contextual Understanding: LLMs might produce text that appears contextually relevant but fundamentally lacks understanding. For example, they can generate plausible-sounding explanations without grasping the underlying concepts.

- Inaccurate Information: LLMs may generate factually incorrect responses. They don’t possess the ability to fact-check or verify information, potentially leading to the propagation of misinformation.

- Failure in Uncommon Scenarios: LLMs can struggle with rare or highly specialized topics and situations not well represented in their training data.

While LLMs offer potent capabilities for email response generation and sorting, they are limited by their lack of true understanding, and they raise ethical and privacy concerns. Addressing these challenges requires a balanced approach that combines the strengths of AI with responsible usage practices and human oversight. This approach maximizes the benefits of LLMs while mitigating their limitations and ethical risks.

Generated Response Display

Importing Libraries

- Import the necessary libraries from the Transformers library.

- Load the pre-trained GPT-2 model and tokenizer.

```python
# Import the necessary libraries from the Transformers library
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the pre-trained GPT-2 model and tokenizer
model_name = "gpt2"  # Specify GPT-2 model
tokenizer = GPT2Tokenizer.from_pretrained(model_name)
model = GPT2LMHeadModel.from_pretrained(model_name)
```

This section imports essential libraries from the Transformers library, including GPT2LMHeadModel (for the GPT-2 model) and GPT2Tokenizer. We then load the pre-trained GPT-2 model and tokenizer.

Input Prompt

- Define an input prompt as the starting point for text generation.

- Modify the prompt to reflect your desired input.

```python
# Input prompt
prompt = "Once upon a time"
# Modify the prompt to your desired input
```

Here, we define an input prompt, which serves as the initial text for the text generation process. Users can modify the prompt to suit their specific requirements.

Tokenize the Input

- Use the tokenizer to convert the input prompt into a tokenized form (numerical IDs) that the model can understand.

```python
# Tokenize the input prompt into a PyTorch tensor of token IDs
input_ids = tokenizer.encode(prompt, return_tensors="pt")
```

This section tokenizes the input prompt using the GPT-2 tokenizer, converting it into numerical IDs that the model can understand.

Generate Text

- Use the GPT-2 model to generate text based on the tokenized input.

- Specify various generation parameters, such as maximum length, number of sequences, and temperature, to control the output.

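```python
# Generate text based on the input
output = model.generate(
    input_ids,
    max_length=100,              # total length in tokens, prompt included
    num_return_sequences=1,      # number of completions to return
    no_repeat_ngram_size=2,      # never repeat the same 2-token sequence
    do_sample=True,              # enable sampling so top_k/top_p/temperature apply
    top_k=50,
    top_p=0.95,
    temperature=0.7,
    pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token; reuse EOS
)
```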

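The code uses the GPT-2 model to generate text based on the tokenized input. Parameters such as max_length (the total length in tokens, including the prompt), num_return_sequences, no_repeat_ngram_size, top_k, top_p, and temperature control aspects of the text generation process. Note that top_k, top_p, and temperature only take effect when sampling is enabled with do_sample=True. Without it, generate() decodes greedily and ignores these three settings.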
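To see what `temperature`, `top_k`, and `top_p` do, the sketch below applies three steps to an invented next-token distribution in plain Python. First, it divides the logits by the temperature. Second, it keeps the `k` most likely tokens. Third, it keeps the smallest set of those tokens whose cumulative probability reaches `p`, then renormalizes. This illustrates the general idea behind these generation settings but is not the Hugging Face implementation; the token names and logit values are made up.

```python
import math
import random

def filter_distribution(logits: dict, temperature=0.7, top_k=50, top_p=0.95) -> dict:
    """Return the renormalized distribution that sampling would draw from."""
    # 1) Temperature: divide logits; values below 1 sharpen the distribution
    scaled = {tok: v / temperature for tok, v in logits.items()}
    m = max(scaled.values())
    exp = {tok: math.exp(v - m) for tok, v in scaled.items()}
    total = sum(exp.values())
    probs = sorted(((tok, e / total) for tok, e in exp.items()),
                   key=lambda kv: kv[1], reverse=True)
    # 2) Top-k: keep only the k most probable tokens
    probs = probs[:top_k]
    # 3) Top-p (nucleus): keep the smallest prefix whose cumulative probability >= top_p
    kept, cumulative = [], 0.0
    for tok, p in probs:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    norm = sum(p for _, p in kept)
    return {tok: p / norm for tok, p in kept}

logits = {"day": 3.0, "time": 2.5, "night": 1.0, "banana": -2.0}  # invented values
dist = filter_distribution(logits, temperature=0.7, top_k=3, top_p=0.9)
print({tok: round(p, 3) for tok, p in dist.items()})
print("sampled:", random.choices(list(dist), weights=list(dist.values()))[0])
```

Here top-k removes the implausible "banana", and top-p then trims "night". The next token is drawn only from "day" and "time", which keeps sampled text varied without letting unlikely tokens through.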

Decode and Print

- Decode the generated text from numerical IDs back into human-readable text using the tokenizer.

- Print the generated text to the console.

```python
# Decode and print the generated text (output is a batch; [0] selects the first sequence)
generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(generated_text)
```

These comments provide explanations for each section of the code and guide you through the process of loading a GPT-2 model, providing an input prompt, generating text, and printing the generated text to the console.

This section decodes the generated text from numerical IDs back into human-readable text using the tokenizer. The resulting text is then printed to the console.

Output

- The generated text, based on the provided input prompt, will be printed to the console. This is the result of the GPT-2 model’s text generation process.

Example output (illustrative; the exact text depends on the model and generation settings):

Once upon a time, in a land far away, there lived a wise old wizard. He had a magical staff that could grant any wish...

Future Directions

The future of large language models in email looks promising. It involves ongoing research and development to enhance their capabilities, along with advances in responsible AI to address ethical concerns and ensure their beneficial use.

Ongoing Research and Development

The field of natural language processing and LLMs is continuously evolving. Future directions in research and development include:

- Model Size and Efficiency: Researchers are exploring ways to make LLMs more efficient and environmentally friendly. This involves optimizing model architectures and training techniques to reduce their carbon footprint.

- Fine-Tuning and Transfer Learning: Refining techniques for fine-tuning LLMs on specific tasks or datasets will continue to be a focus. This allows organizations to adapt these models to their unique needs.

- Domain Specialization:

Context:

Domain specialization refers to customizing large language models (LLMs) to cater to specific domains or industries. Each industry or domain often has its own jargon, terminology, and contextual nuances. General-purpose LLMs, while powerful, may not fully capture the intricacies of specialized fields.

Importance:

- Relevance: Customizing LLMs for specific domains helps the models understand and generate content that is highly relevant to the particular industry.

- Accuracy: Domain-specific jargon and terminology are often crucial for accurate communication within an industry. Specialized LLMs can be trained to recognize and use these terms appropriately.

- Contextual Understanding: Industries may have unique contextual factors that influence communication. Domain-specialized LLMs aim to capture and comprehend these specific contexts.

Example:

In the legal domain, a domain-specialized LLM may be trained on legal texts, contracts, and case law. This customization allows the model to understand legal terminology, interpret complex legal structures, and generate contextually appropriate content for legal professionals.

Multimodal Capabilities

Context:

Multimodal capabilities involve integrating large language models (LLMs) with other artificial intelligence (AI) technologies, such as computer vision. While LLMs primarily excel in processing and generating text, combining them with other modalities enhances their ability to understand and generate content beyond text.

Importance:

- Enhanced Understanding: Multimodal capabilities enable LLMs to process information from multiple sources, including images, videos, and text. This holistic understanding contributes to more comprehensive and contextually aware content generation.

- Expanded Utility: LLMs with multimodal capabilities can be applied to a broader range of applications, such as image captioning, video summarization, and content generation based on visual input.

- Improved Communication: In scenarios where visual information complements textual content, multimodal LLMs can provide a richer and more accurate representation of the intended message.

Example:

Consider an email communication scenario where a user describes a complex technical issue. A multimodal LLM, equipped with computer vision capabilities, could analyze attached images or screenshots related to the issue, enhancing its understanding and generating a more informed and contextually relevant response.

Advancements in Responsible AI

Addressing ethical concerns and ensuring responsible AI usage is paramount for the future of LLMs in email communication.

- Bias Mitigation: Ongoing research aims to develop robust methods for detecting and mitigating biases in LLMs, ensuring that automated responses are fair and unbiased.

- Ethical Guidelines: Organizations and researchers are developing clear guidelines for using LLMs in email communication, emphasizing transparency, fairness, and user consent.

- User Empowerment: Providing users with more control over LLM-generated responses and recommendations, such as allowing them to set preferences and override automated suggestions, is a direction that respects user autonomy.

- Privacy-Centric Approaches: Innovations in privacy-preserving AI techniques aim to protect user data while still harnessing the power of LLMs for email communication.

In summary, the future of LLMs in email response generation and sorting is marked by ongoing research to improve their capabilities and responsible AI advancements to address ethical concerns. These developments will enable LLMs to continue playing a valuable role in enhancing email communication while ensuring their use aligns with ethical principles and user expectations.

Conclusion

In the ever-changing world of online communication, email remains significant. Large language models have emerged as powerful tools for revolutionizing email response generation and sorting. In this article, we traced the progression of language models from rudimentary rule-based systems to the cutting-edge GPT-3 model.

With an understanding of these models’ underpinnings, we explored their training processes, showing how they use vast volumes of text and computing power to achieve human-like language understanding and generation. These models have redefined email communication by enabling automated responses, facilitating multilingual support, and conducting content summarization and sentiment analysis.

In conclusion, large language models have redefined the email landscape, offering efficiency and innovation while demanding our vigilance in ethical usage. The future beckons with the prospect of even more profound transformations in how we communicate via email.

Key Takeaways

- Language models have evolved from rule-based systems to advanced models like GPT-3, reshaping natural language understanding and generation.

- Large language models are trained on massive datasets and require significant computational resources to comprehend and generate human-like text.

- These models find applications in email communication, enhancing language understanding and generation, automating responses, offering multilingual support, and enabling content summarization and sentiment analysis.

- Large language models excel in sorting emails by filtering spam, prioritizing messages, categorizing content, and identifying conversation threads.

- They provide search assistance and personalized recommendations that tailor the email experience to individual users, while their access to email content makes data security and privacy safeguards essential.

Frequently Asked Questions

A. Readers often want to understand these models’ advantages to email communication, such as automation, efficiency, and improved user experiences.

A. Multilingual capabilities are a crucial aspect of these models. Explaining how they enable communication in multiple languages is essential.

A. Addressing ethical considerations, including response biases and responsible AI usage, is crucial to ensuring fair and honest email interactions.

A. Readers may want to know the constraints of these models, such as their potential for misunderstandings and the computational resources required.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
