Kolmogorov-Arnold networks (KANs) have emerged as a promising alternative to traditional multilayer perceptrons (MLPs). Inspired by the Kolmogorov-Arnold representation theorem, these networks place learnable univariate activation functions on their edges, while each neuron simply sums its incoming signals. However, the current implementation of KANs poses some challenges in practical applications. Researchers are therefore investigating whether alternative multivariate functions for KAN neurons could offer better practical utility across machine learning benchmarks.
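For reference, the representation theorem that KANs draw on states that any continuous function $f:[0,1]^n \to \mathbb{R}$ can be written using only continuous univariate functions and addition:

$$
f(x_1, \dots, x_n) = \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)
$$

A KAN layer mirrors the inner sum. In the notation assumed here, node $j$ of layer $l+1$ computes $x_{l+1,j} = \sum_{i=1}^{n_l} \phi_{l,j,i}(x_{l,i})$, where each $\phi_{l,j,i}$ is a learnable univariate function (a B-spline in the original KAN) and $n_l$ is the number of nodes in layer $l$. The mean-based variant discussed in this article divides that sum by $n_l$.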

Research has highlighted the potential of KANs in several fields, such as computer vision, time series analysis, and quantum architecture search. Some studies show that KANs can outperform MLPs in data fitting and partial differential equation (PDE) tasks while using fewer parameters. Other work, however, has raised concerns about the robustness of KANs to noise and about their performance relative to MLPs. Variants of the standard KAN architecture, such as graph-based designs, convolutional KANs, and transformer-based KANs, have also been explored to address these problems. Furthermore, alternative activation functions, such as wavelets, radial basis functions, and sinusoidal functions, have been investigated to improve the efficiency of KANs. Despite these efforts, further work is needed to improve KAN performance.

A researcher from the Center for Applied Intelligent Systems Research at Halmstad University, Sweden, has proposed a new approach to improve the performance of KANs. The method aims to identify the best multivariate function for KAN neurons across a range of machine learning classification tasks. The traditional use of the sum as the node-level function is often suboptimal, especially for high-dimensional datasets with many features: because a sum grows with the number of incoming edges, it can push inputs beyond the effective range of the activation functions in the next layer, leading to training instability and reduced generalization. To address this, the researcher proposes using the mean instead of the sum as the node function.
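To make the range argument concrete, here is a minimal sketch of one KAN-style layer with a switchable node function. It is not the paper's implementation: the names `edge_fn`, `kan_node`, and `kan_layer` are hypothetical, and each learnable B-spline edge is replaced by the stand-in `w * tanh(x)` with `|w| <= 1`, so every edge output lies strictly inside (-1, 1). Under that assumption the mean of the edge outputs is guaranteed to stay inside (-1, 1), while the sum can grow toward the number of inputs.

```python
import math
from typing import List, Sequence


def edge_fn(x: float, w: float) -> float:
    """Hypothetical stand-in for a learnable univariate edge function.

    Real KANs use B-splines; here w * tanh(x) with |w| <= 1 keeps each
    edge output strictly inside (-1, 1).
    """
    return w * math.tanh(x)


def kan_node(inputs: Sequence[float], weights: Sequence[float], aggregate: str = "sum") -> float:
    """Combine the edge outputs feeding one node with either sum or mean."""
    edge_outputs = [edge_fn(x, w) for x, w in zip(inputs, weights)]
    total = sum(edge_outputs)
    if aggregate == "sum":
        return total  # grows with the number of incoming edges
    if aggregate == "mean":
        return total / len(edge_outputs)  # stays within the range of the edge outputs
    raise ValueError(f"unknown aggregate: {aggregate}")


def kan_layer(inputs: Sequence[float], weight_matrix: Sequence[Sequence[float]], aggregate: str = "sum") -> List[float]:
    """One layer: each row of weight_matrix holds the edge weights of one output node."""
    return [kan_node(inputs, row, aggregate) for row in weight_matrix]


if __name__ == "__main__":
    n_inputs = 50
    x = [1.0] * n_inputs
    W = [[1.0] * n_inputs]  # a single output node
    print("sum: ", kan_layer(x, W, "sum"))
    print("mean:", kan_layer(x, W, "mean"))
```

With 50 inputs all set to 1.0 and all weights set to 1.0, the sum reaches 50·tanh(1), about 38.08, far outside a spline grid on (-1, 1), while the mean stays at tanh(1), about 0.76.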

To evaluate the proposed modification, 10 popular datasets from the UCI Machine Learning Repository are used, spanning multiple domains and sizes. Each dataset is split into training (60%), validation (20%), and test (20%) partitions. The same preprocessing is applied to all datasets: categorical features are encoded, missing values are imputed, and instances are shuffled. The models are trained for 2000 iterations using the Adam optimizer with a learning rate of 0.01 and a batch size of 32. Test-set accuracy serves as the primary evaluation metric. To keep the parameter count manageable, the grid size is set to 3 and the remaining KAN hyperparameters are left at their defaults.
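The data split and reported training settings can be summarized in a short sketch. The `CONFIG` dictionary and the helpers `split_60_20_20` and `accuracy` are hypothetical; only the numeric values come from the article. Categorical encoding and missing-value imputation are left out because the article does not say which methods were used.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

# Settings reported in the article; the dictionary itself is illustrative.
CONFIG = {
    "optimizer": "Adam",
    "learning_rate": 0.01,
    "batch_size": 32,
    "iterations": 2000,
    "grid": 3,  # other KAN hyperparameters left at their defaults
}


def split_60_20_20(rows: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle the instances, then cut them into 60% train, 20% validation, 20% test."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # instance randomization
    n = len(shuffled)
    n_train = n * 60 // 100  # integer arithmetic avoids float rounding surprises
    n_val = n * 20 // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # any remainder goes to the test split
    return train, val, test


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the labels (the primary metric)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("labels and predictions must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


if __name__ == "__main__":
    train, val, test = split_60_20_20(range(1000), seed=42)
    print(len(train), len(val), len(test))  # → 600 200 200
```

Fixing the shuffle seed makes the split reproducible, so every model variant (sum or mean nodes) can be compared on identical partitions.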

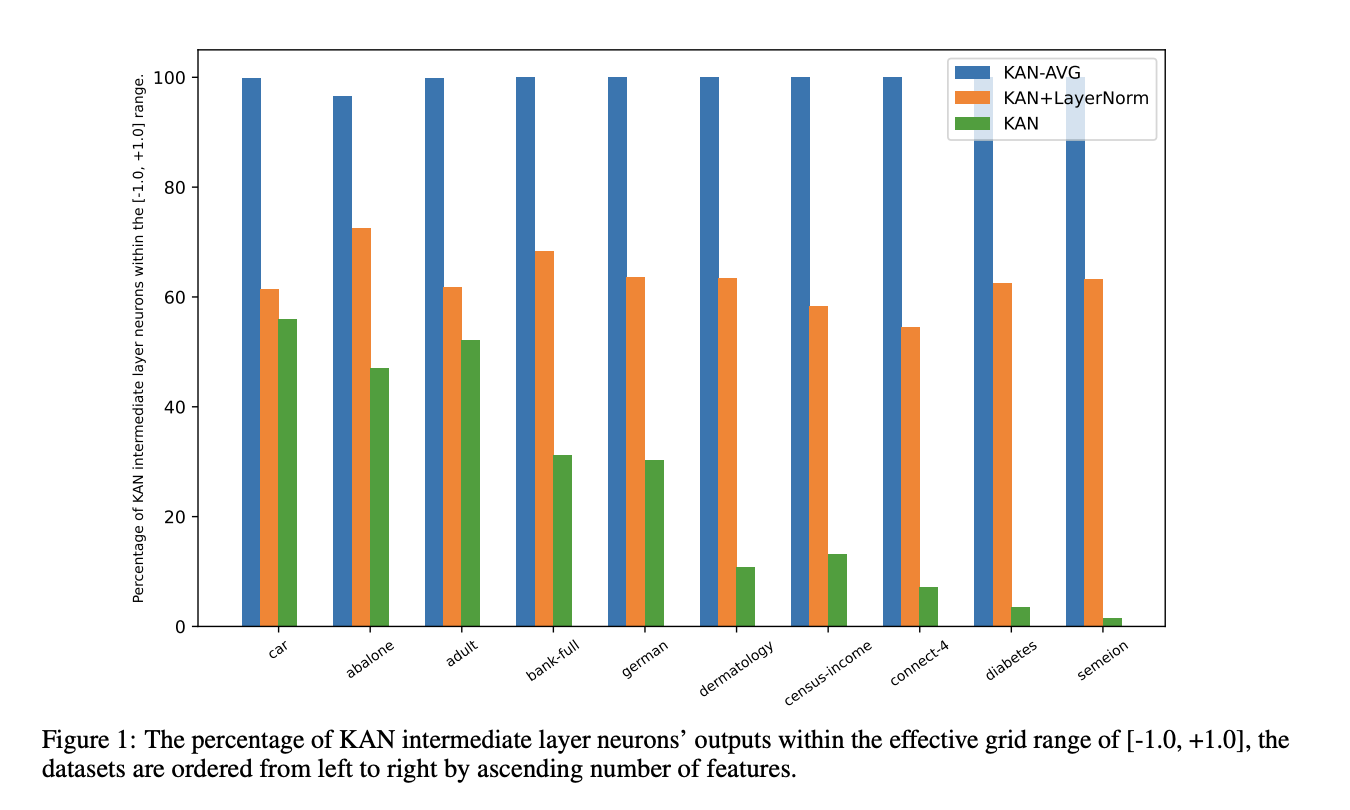

The results support the hypothesis that using the mean in KAN neurons is more effective than the traditional sum. The improvement is attributed to the mean's ability to keep inputs within the effective range of the spline activation function, (-1.0, +1.0). In standard KANs, values in the middle layers increasingly fell outside this range as the number of features grew. With the mean as the node function, performance improved and values stayed within the desired range for datasets with 20 or more features. For datasets with fewer features, values remained within the range more than 99.0% of the time, except for the “abalone” dataset, which had a slightly lower in-range rate of 96.51%.
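A percentage such as 96.51% can be read as the share of node inputs that land inside the spline's effective range. The helper below, `in_range_rate`, is a hypothetical illustration of that idea, not the paper's exact metric; it follows the article's open-interval notation (-1.0, +1.0), so values exactly at the boundaries count as out of range.

```python
from typing import Iterable


def in_range_rate(values: Iterable[float], low: float = -1.0, high: float = 1.0) -> float:
    """Percentage of values strictly inside the open interval (low, high)."""
    values = list(values)
    if not values:
        raise ValueError("need at least one value")
    inside = sum(1 for v in values if low < v < high)
    return 100.0 * inside / len(values)


# Example: 20 edge outputs of 0.5 each, aggregated by sum vs mean.
edge_outputs = [0.5] * 20
print(in_range_rate([sum(edge_outputs)]))                      # sum = 10.0 → 0.0
print(in_range_rate([sum(edge_outputs) / len(edge_outputs)]))  # mean = 0.5 → 100.0
```

In practice one would collect the inputs to every spline in a middle layer over a pass through the data and apply the same count to the whole batch.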

In summary, this paper introduces a simple but important modification to KANs: replacing the traditional summation in KAN neurons with an averaging function. Experiments show that this change leads to more stable training and keeps inputs within the effective range of the spline activations, addressing earlier challenges related to input range and training stability. This work offers a promising direction for future KAN implementations and could improve their performance and applicability across machine learning tasks.


Sajjad Ansari is a final-year student at IIT Kharagpur. As a technology enthusiast, he delves into the practical applications of AI, focusing on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.
