Google sees AI as a fundamental and transformative technology, with recent advances in generative AI technologies such as LaMDA, PaLM, Imagen, Parti, MusicLM, and similar machine learning (ML) models, some of which are now being incorporated into our products. This transformative potential requires us to be accountable not only for how we advance our technology, but also for how we envision which technologies to build and how we assess the social impact that AI and ML-enabled technologies have on the world. This endeavor requires fundamental and applied research with an interdisciplinary lens that engages with, and accounts for, the social, cultural, economic, and other contextual dimensions that shape the development and deployment of AI systems. We also need to understand the range of possible impacts that the ongoing use of such technologies may have on vulnerable communities and broader social systems.

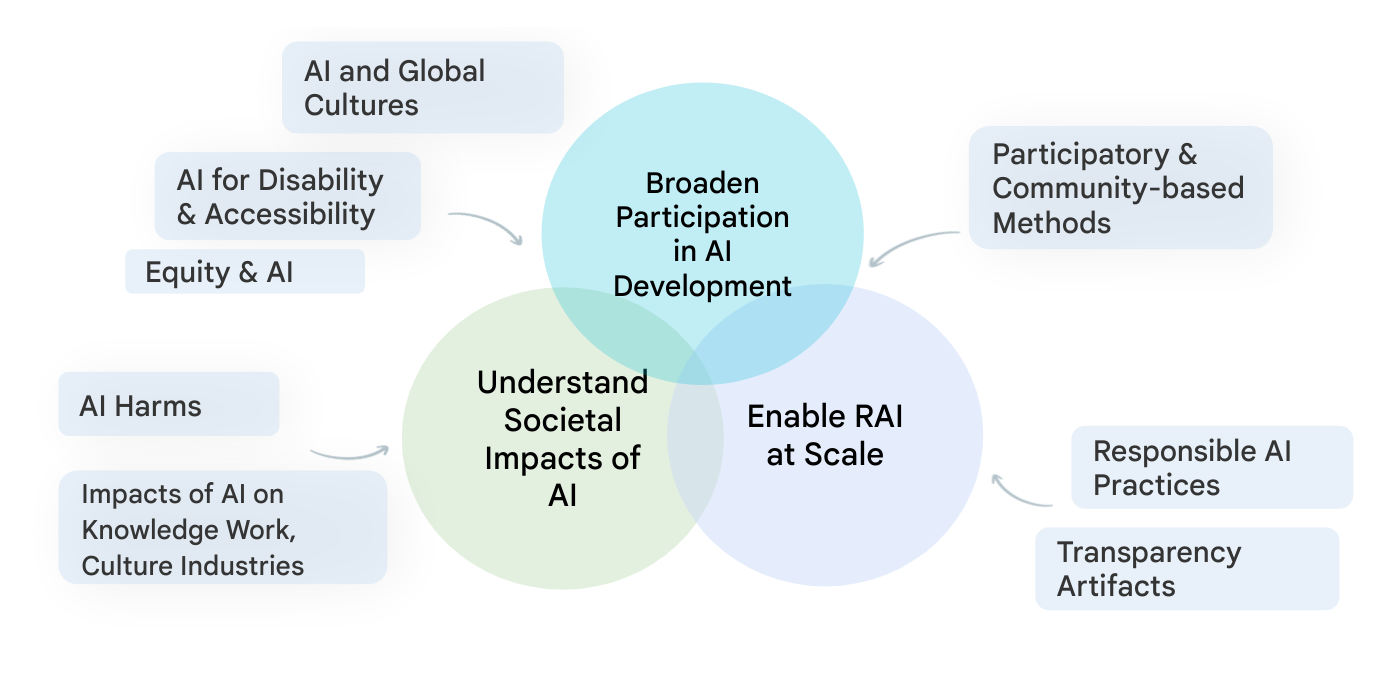

Our team, Technology, AI, Society and Culture (TASC), is addressing this critical need. Research on the societal impacts of AI is complex and multifaceted; no single disciplinary or methodological perspective can provide the diverse insights needed to grapple with the social and cultural implications of ML technologies. TASC therefore leverages the strengths of an interdisciplinary team, with backgrounds ranging from computer science to social science, digital media, and urban science. We use a multi-method approach with qualitative, quantitative, and mixed methods to critically examine and shape the social and technical processes that underpin and surround AI technologies. We focus on participatory, culturally inclusive, and intersectional equity-oriented research that brings affected communities to the fore. Our work advances Responsible AI (RAI) in areas such as computer vision, natural language processing, health, and general-purpose ML models and applications. Below, we share examples of our approach to Responsible AI and where we are headed in 2023.

Theme 1: Culture, communities and AI

One of our key areas of research is advancing methods to make generative AI technologies more inclusive of and valuable to people globally, through community-engaged and culturally inclusive approaches. To this end, we see communities as experts in their own context, recognizing their deep knowledge of how technologies can and should impact their lives. Our research champions the importance of embedding cross-cultural considerations throughout the ML development pipeline. Community engagement allows us to shift how we incorporate knowledge of what matters most throughout this pipeline, from dataset curation to evaluation. It also allows us to understand and account for the ways in which technologies fail and how specific communities might experience harm. Based on this understanding, we have created responsible AI evaluation strategies that are effective in recognizing and mitigating biases along multiple dimensions.

Our work in this area is vital to ensuring that Google's technologies are safe for, work for, and are useful to a diverse set of stakeholders around the world. For example, our research on user attitudes towards AI, responsible interaction design, and equity assessments with a focus on the Global South demonstrated cross-cultural differences in the impact of AI and contributed resources that enable culturally situated evaluations. We are also building interdisciplinary research communities to examine the relationship between AI, culture, and society, through our recent and upcoming workshops on Cultures in AI/AI in Culture, Ethical Considerations in Creative Applications of Computer Vision, and Cross-Cultural Considerations in NLP.

Our recent research has also sought out the perspectives of particular communities known to be underrepresented in ML development and applications. For example, we have investigated gender bias, both in natural language and in contexts such as gender-inclusive health, drawing on our research to develop more accurate evaluations of bias so that anyone developing these technologies can identify and mitigate harms for people with queer and non-binary identities.

Theme 2: Enabling responsible AI throughout the development lifecycle

We work to enable RAI at scale by establishing industry-wide best practices for RAI across the development pipeline and ensuring that our technologies verifiably incorporate those best practices by default. This applied research includes responsible data production and analysis for ML development, and the systematic advancement of tools and practices that help practitioners meet key RAI goals such as transparency, fairness, and accountability. Extending earlier work on Data Cards, Model Cards, and the Model Card Toolkit, we released the Data Cards Playbook, providing developers with methods and tools to document appropriate uses and essential facts related to a dataset. Because ML models are often trained and evaluated on human-annotated data, we are also advancing human-centric research on data annotation. We have developed frameworks for documenting annotation processes and methods for accounting for rater disagreement and rater diversity. These methods enable ML practitioners to better ensure diversity in the annotation of datasets used to train models, by identifying current barriers and re-envisioning data work practices.
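To make "accounting for rater disagreement and rater diversity" a little more concrete, here is a minimal Python sketch. It is not the TASC framework: the data layout (item, rater group, label tuples), the group names, and every helper name below are hypothetical illustrations. The sketch compares per-item disagreement when all raters are pooled against majority labels computed separately within each rater group, and flags items where a single aggregated "gold" label would hide one group's view.

```python
from collections import Counter, defaultdict

# Hypothetical layout: each annotation is (item_id, rater_group, label).
# "rater_group" stands in for any rater attribute (e.g. self-reported region).
Annotation = tuple[str, str, str]


def item_disagreement(labels: list[str]) -> float:
    """Fraction of labels that differ from the most common label (0.0 = unanimous)."""
    top_count = Counter(labels).most_common(1)[0][1]
    return 1 - top_count / len(labels)


def pooled_disagreement(annotations: list[Annotation]) -> dict[str, float]:
    """Per-item disagreement with all raters pooled together."""
    by_item: dict[str, list[str]] = defaultdict(list)
    for item_id, _group, label in annotations:
        by_item[item_id].append(label)
    return {item: item_disagreement(labels) for item, labels in by_item.items()}


def group_majorities(annotations: list[Annotation]) -> dict[str, dict[str, str]]:
    """Majority label per item, computed separately within each rater group.
    Ties are broken by whichever label was seen first."""
    by_item_group: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for item_id, group, label in annotations:
        by_item_group[item_id][group].append(label)
    return {
        item: {g: Counter(ls).most_common(1)[0][0] for g, ls in groups.items()}
        for item, groups in by_item_group.items()
    }


def contested_items(annotations: list[Annotation]) -> list[str]:
    """Items whose per-group majority labels differ; collapsing these to one
    aggregated label would silently drop one group's perspective."""
    return sorted(
        item for item, majorities in group_majorities(annotations).items()
        if len(set(majorities.values())) > 1
    )


# Toy, made-up annotations for illustration only.
annotations = [
    ("t1", "A", "toxic"), ("t1", "A", "toxic"),
    ("t1", "B", "not_toxic"), ("t1", "B", "not_toxic"),
    ("t2", "A", "toxic"), ("t2", "B", "toxic"),
]
print(pooled_disagreement(annotations))  # {'t1': 0.5, 't2': 0.0}
print(contested_items(annotations))      # ['t1']
```

In this toy example, item `t1` looks like ordinary noise when raters are pooled (disagreement 0.5), yet each group is internally unanimous and the two groups are opposed. Keeping the per-group breakdown alongside the pooled score is one simple way to surface that kind of systematic, perspective-driven disagreement.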

Future directions

We are now working to further broaden participation in ML model development, through approaches that embed a diversity of cultural contexts and voices in technology design, development, and impact assessment, to ensure that AI achieves societal goals. We are also redefining responsible practices that can handle the scale at which ML technologies operate in today's world. For example, we are developing frameworks and structures that can enable community engagement within industry AI research and development, including community-centered evaluation frameworks, benchmarks, and dataset curation and sharing.

In particular, we are building on our previous work to understand how NLP models may perpetuate bias against people with disabilities, extending this research to address other marginalized communities and cultures and to include image, video, and other multimodal models. Such models may contain tropes and stereotypes about particular groups or may erase the experiences of specific individuals or communities. Our efforts to identify sources of bias within ML models will lead to better detection of these misrepresentations and support the creation of fairer and more inclusive systems.

TASC is about studying all the touchpoints between AI and people, from individuals and communities to cultures and society. For AI to be culturally inclusive, equitable, accessible, and reflective of the needs of affected communities, we must rise to these challenges with interdisciplinary and multidisciplinary research that centers those communities. Our research will continue to explore the interactions between society and AI, fostering the discovery of new ways to develop and evaluate AI so that we can build more robust and culturally situated AI technologies.

Acknowledgements

We would like to thank all the team members who contributed to this blog post. In alphabetical order by last name: Cynthia Bennett, Eric Corbett, Aida Mostafazadeh Davani, Emily Denton, Sunipa Dev, Fernando Diaz, Mark Diaz, Shaun Kane, Shivani Kapania, Michael Madaio, Vinodkumar Prabhakaran, Rida Qadri, Renee Shelby, Ding Wang, and Andrew Zaldivar. Additionally, we would like to thank Toju Duke and Marian Croak for their valuable comments and suggestions.