Large language models (LLMs) have revolutionized the field of natural language processing (NLP), improving tasks such as language translation, text summarization, and sentiment analysis. However, as these models continue to grow in size and complexity, monitoring their performance and behavior has become increasingly challenging.

Monitoring the performance and behavior of LLMs is a critical task to ensure their safety and effectiveness. Our proposed architecture provides a scalable and customizable solution for online LLM monitoring, allowing teams to tailor their monitoring solution to their specific use cases and requirements. Using AWS services, our architecture provides real-time visibility into LLM behavior and allows teams to quickly identify and address any issues or anomalies.

In this post, we demonstrate some metrics for online LLM monitoring and their respective architecture for scaling using AWS services like Amazon CloudWatch and AWS Lambda. This offers a customizable solution beyond what is possible with model evaluation work with Amazon Bedrock.

Solution Overview

The first thing to consider is that different metrics require different calculation considerations. A modular architecture is necessary, where each module can receive inference data from the model and produce its own metrics.

We suggest that each module carry incoming inference requests to the LLM, passing prompt and completion (response) pairs to the metric calculation modules. Each module is responsible for calculating its own metrics regarding input message and completion (response). These metrics are passed to CloudWatch, which can aggregate them and work with CloudWatch alarms to send notifications about specific conditions. The following diagram illustrates this architecture.

Fig 1: Metric Calculation Module – Solution Overview

The workflow includes the following steps:

- A user makes a request to Amazon Bedrock as part of an application or user interface.

- Amazon Bedrock saves the request and completion (response) to Amazon Simple Storage Service (Amazon S3) based on the invocation log configuration.

- The file saved to Amazon S3 creates an event that triggers a Lambda function. The function invokes the modules.

- Modules publish their respective metrics to CloudWatch Metrics.

- Alarms can notify the development team of unexpected metric values.

The second thing to consider when implementing LLM tracking is choosing the right metrics to track. While there are many potential metrics you can use to monitor LLM performance, we explain some of the broader ones in this post.

In the following sections, we highlight some of the relevant module metrics and their respective metric computing module architecture.

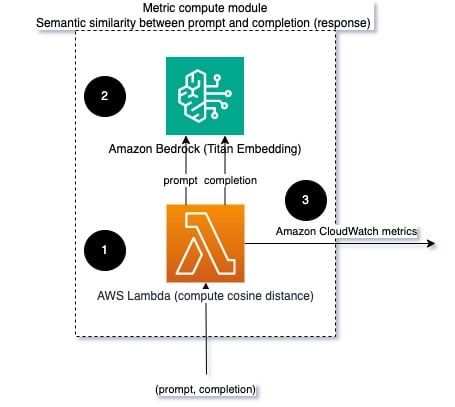

Semantic similarity between notice and completion (response)

By running LLM, you can intercept the message and completion (response) of each request and transform them into embeds using an embedding model. Embeddings are high-dimensional vectors that represent the semantic meaning of the text. Amazon Titan provides such models through Titan Embeddings. By taking a distance like the cosine between these two vectors, you can quantify how semantically similar the prompt and completion (response) are. You can use Science fiction either learning-scikit to calculate the cosine distance between vectors. The following diagram illustrates the architecture of this metric computing module.

Fig 2: Metric calculation module: semantic similarity

This workflow includes the following key steps:

- A Lambda function receives a message transmitted through Amazon Kinesis that contains a message and completion (response) pair.

- The function obtains an embedding for both the message and the completion (response) and calculates the cosine distance between the two vectors.

- The function sends that information to CloudWatch metrics.

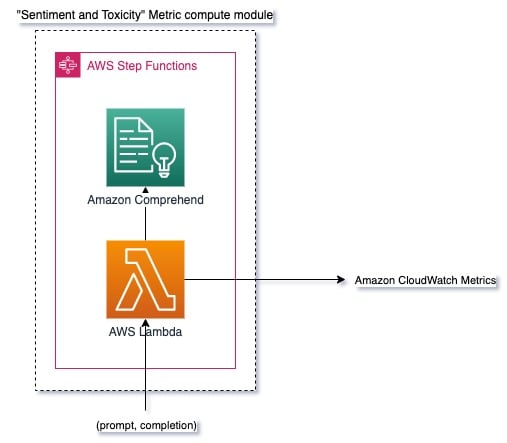

Feeling and toxicity

Sentiment tracking allows you to measure the overall tone and emotional impact of responses, while toxicity analysis provides an important measure of the presence of offensive, disrespectful, or harmful language in LLM results. Any changes in sentiment or toxicity should be monitored closely to ensure the model is behaving as expected. The following diagram illustrates the metric calculation module.

Fig. 3: Metric calculation module: sentiment and toxicity

The workflow includes the following steps:

- A Lambda function receives a notice and completion (response) pair through Amazon Kinesis.

- Through AWS Step Functions orchestration, the function calls Amazon Comprehend to detect the feeling and toxicity.

- The feature saves the information to CloudWatch metrics.

To learn more about detecting sentiment and toxicity with Amazon Comprehend, see Create a Robust Text-Based Toxicity Predictor and Flag Harmful Content Using Amazon Comprehend Toxicity Detection.

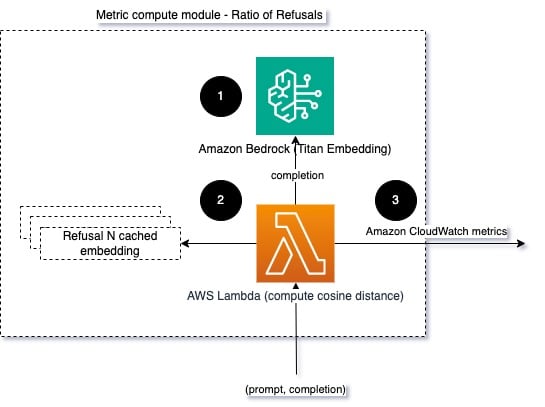

Rejection rate

An increase in denials, such as when an LLM denies completion due to lack of information, could mean that malicious users are attempting to use the LLM in ways intended to free it, or that user expectations are not being met and responses are being received. of low value. One way to measure how often this happens is to compare the standard negatives from the LLM model used with the actual LLM responses. For example, the following are some common rejection phrases from Anthropic's Claude v2 LLM:

“Unfortunately, I do not have enough context to provide a substantive response. However, I am an ai assistant created by Anthropic to be helpful, harmless, and honest.”

“I apologize, but I cannot recommend ways to…”

“I'm an ai assistant created by Anthropic to be helpful, harmless, and honest.”

Given a fixed set of cues, an increase in these negatives may be a sign that the model has become overly cautious or sensitive. The reverse case must also be evaluated. It could be a sign that the model is now more prone to engaging in toxic or harmful conversations.

To help model the completeness and rejection rate, we can compare the response to a set of known rejection phrases from the LLM. This could be a real classifier that can explain why the model rejected the request. It can take the cosine distance between the response and the known rejection responses of the model being monitored. The following diagram illustrates this metric calculation module.

Fig. 4: Metric calculation module: rejection ratio

The workflow consists of the following steps:

- A Lambda function receives a message and a completion (response) and obtains an embed of the response using Amazon Titan.

- The function calculates the cosine or Euclidean distance between the response and the existing rejection messages stored in the cache.

- The function sends that average to CloudWatch metrics.

Another option is to use fuzzy match for a simple but less powerful approach to comparing known refusals to LLM production. Refer to python documentation for an example.

Summary

LLM observability is a critical practice to ensure reliable and trustworthy use of LLMs. Monitoring, understanding, and ensuring the accuracy and reliability of LLMs can help you mitigate the risks associated with these ai models. By monitoring hallucinations, bad endings (responses), and prompts, you can ensure that your LLM stays on track and provides the value you and your users are looking for. In this post, we look at some metrics to show examples.

For more information about evaluating foundation models, see Use SageMaker Clarify to evaluate foundation models and find additional information. example notebooks available in our GitHub repository. You can also explore ways to implement LLM assessments at scale in Operationalizing LLM Assessment at Scale Using Amazon SageMaker Clarify and MLOps Services. Finally, we recommend checking out Assessing Large Language Models for Quality and Accountability for more information on LLM assessment.

About the authors

Bruno Klein is a Senior Machine Learning Engineer with AWS Professional Services Analytics Practice. Helps clients implement big data and analytics solutions. Outside of work, she enjoys spending time with family, traveling, and trying new foods.

Bruno Klein is a Senior Machine Learning Engineer with AWS Professional Services Analytics Practice. Helps clients implement big data and analytics solutions. Outside of work, she enjoys spending time with family, traveling, and trying new foods.

Rushabh Lokhande is a Senior Data and Machine Learning Engineer with AWS Professional Services Analytics Practice. She helps clients implement analytics, machine learning, and big data solutions. Outside of work, he enjoys spending time with family, reading, running, and playing golf.

Rushabh Lokhande is a Senior Data and Machine Learning Engineer with AWS Professional Services Analytics Practice. She helps clients implement analytics, machine learning, and big data solutions. Outside of work, he enjoys spending time with family, reading, running, and playing golf.

NEWSLETTER

NEWSLETTER