Generating anything, be it text or an image, in the digital world has never been so easy, thanks to the advancement of neural networks in recent years. From GPT models for text to diffusion models for images, we’ve seen revolutionary AI models that changed everything we know about generative AI. Today, the line between human-generated and AI-generated content is blurring.

This is especially noticeable in imaging models. If you’ve ever played around with the latest version of MidJourney, you can see how good it is at generating real-life human photos. In fact, they got so good that we now even have agencies using virtual models to advertise clothes, products, etc. The best thing about using a generative model is its excellent generalizability that allows you to customize the output however you want and still arrive. above with visually pleasing photos.

While these 2D generative models can produce high-quality faces, we still need more capacity for many applications of interest, such as facial animation, expression transfer, and virtual avatars. Using existing 2D generative models for these applications often leads to difficulties when it comes to effectively teasing out facial attributes such as pose, expression, and lighting. We can’t just use them to alter the fine details of the faces they generate. Additionally, a 3D representation of form and texture is crucial to many entertainment industries, including games, animation, and visual effects, which demand 3D content at ever larger scales to create immersive virtual worlds.

There have been attempts to design generative models to generate 3D faces, but the lack of high-quality and diverse 3D training data has limited the generalizability of these algorithms and their use in real-world applications. Some have attempted to overcome these limitations with parametric models and derived methods to approximate the 3D geometry and texture of a 2D facial image. However, these 3D facial reconstruction techniques typically do not recover high-frequency details.

So it is clear that we need a reliable tool that can generate realistic 3D faces. We can’t just stop at 2D while we have all these possible applications that can be used from the trailer. It would have been really cool if we could have an AI model that can generate realistic 3D faces, right? Well, actually we have it, and it’s time to meet with albedoGAN.

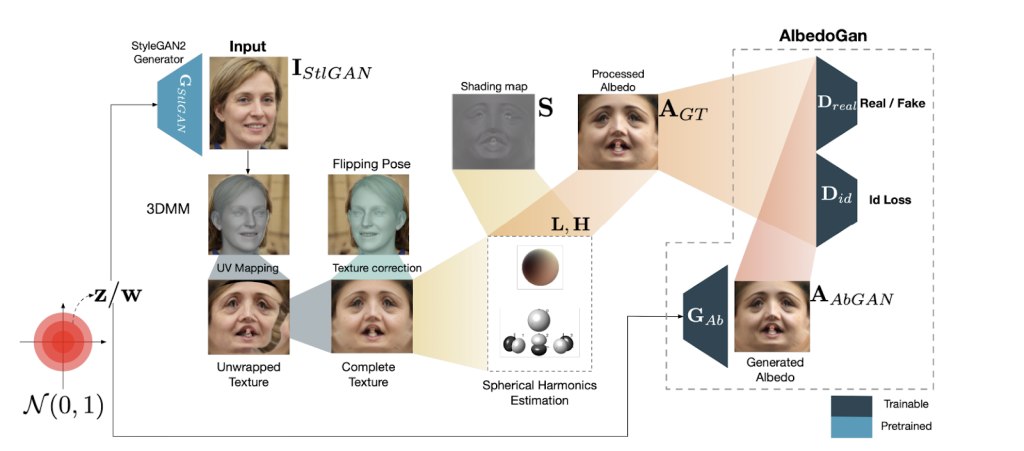

albedoGAN is a 3D generative model for faces that uses a self-monitoring approach that can generate high-resolution textures and capture high-frequency detail in geometry. Leverages a pretrained StyleGAN model to generate high-quality 2D faces and generate light-independent albedo directly from latent space.

The albedo is a critical aspect of a 3D facial model, as it largely determines the appearance of the face. However, generating high-quality 3D models with an albedo that can be generalized over pose, age, and ethnicity requires a massive database of 3D scans, which can be expensive and time-consuming. To address this issue, they use a novel approach that combines image blending and spherical harmonic lighting to capture a high-quality 1024 × 1024 resolution albedo that generalizes well across different poses and addresses shading variations.

For the shape component, the FLAME model is combined with latent space-guided per-vertex displacement maps from StyleGAN, resulting in a higher-resolution mesh. The two networks for albedo and shape are trained in alternate descent fashion. The proposed algorithm can generate 3D faces from the latent space of StyleGAN and can perform face editing directly in the 3D domain using the latent codes or text.

review the Paper and Code. Don’t forget to join our 21k+ ML SubReddit, discord channel, and electronic newsletter, where we share the latest AI research news, exciting AI projects, and more. If you have any questions about the article above or if we missed anything, feel free to email us at [email protected]

🚀 Check out 100 AI tools at AI Tools Club

![]()

Ekrem Çetinkaya received his B.Sc. in 2018 and M.Sc. in 2019 from Ozyegin University, Istanbul, Türkiye. She wrote her M.Sc. thesis on denoising images using deep convolutional networks. She is currently pursuing a PhD. She graduated from the University of Klagenfurt, Austria, and working as a researcher in the ATHENA project. Her research interests include deep learning, computer vision, and multimedia networks.