It's no secret that supervised machine learning models need to be trained on high-quality labeled data sets. However, collecting enough high-quality labeled data can be a significant challenge, especially when privacy and data availability are major concerns. Fortunately, synthetic data can help mitigate this problem. Synthetic data is artificially generated rather than collected from real-world events. It can augment real data or be used in place of it, and, depending on the use case, it can be created in several ways, including statistical methods, data augmentation and computer-generated imagery (CGI), or generative AI. In this post, we will go over:

- The value of synthetic data

- Synthetic data for extreme cases

- How to generate synthetic data

https://youtu.be/PIzDYbATawY?si=Eb9M8aAfgVBym4Ih

Problems with real data have led to many use cases for synthetic data, which you can check out below.

Privacy issues

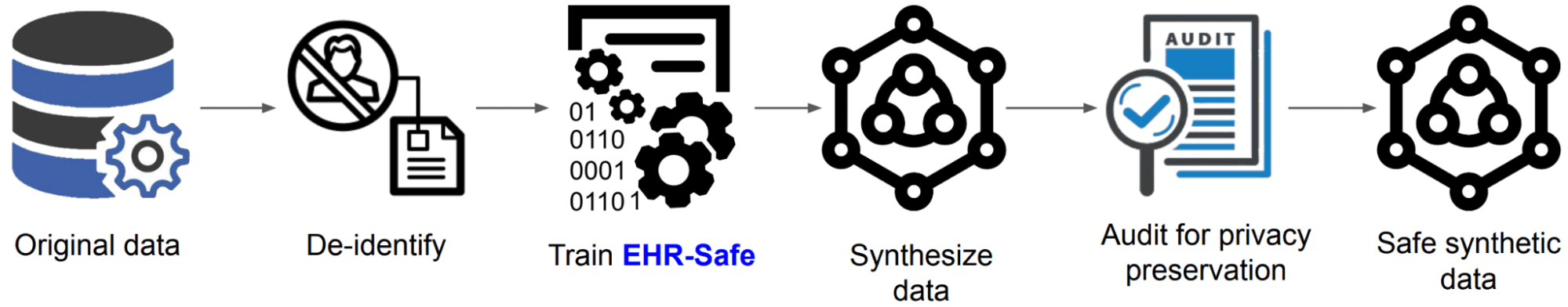

Image by Google Research

Health data is subject to strict privacy restrictions. For example, while incorporating electronic health records (EHRs) into machine learning applications could improve patient outcomes, doing so while complying with patient privacy regulations like HIPAA is difficult, and even techniques for anonymizing data are not perfect. In response, Google researchers developed EHR-Safe, a framework for generating realistic, privacy-preserving synthetic EHRs.

Safety issues

Collecting real data can be dangerous. One of the main problems with robotics applications, such as self-driving cars, is that they are physical applications of machine learning: an unsafe model cannot be deployed in the real world, where a lack of relevant training data could cause a crash. Augmenting a data set with synthetic data can help models avoid these problems.

Real data collection and labeling are often not scalable

Annotating medical images is essential for training machine learning models. However, each image must be labeled by medical experts, which is an expensive and time-consuming process that is often subject to strict privacy regulations. Synthetic data can address this problem by generating large volumes of labeled images without requiring extensive human annotations or compromising patient privacy.

Manual labeling of real data can sometimes be very difficult, if not impossible.

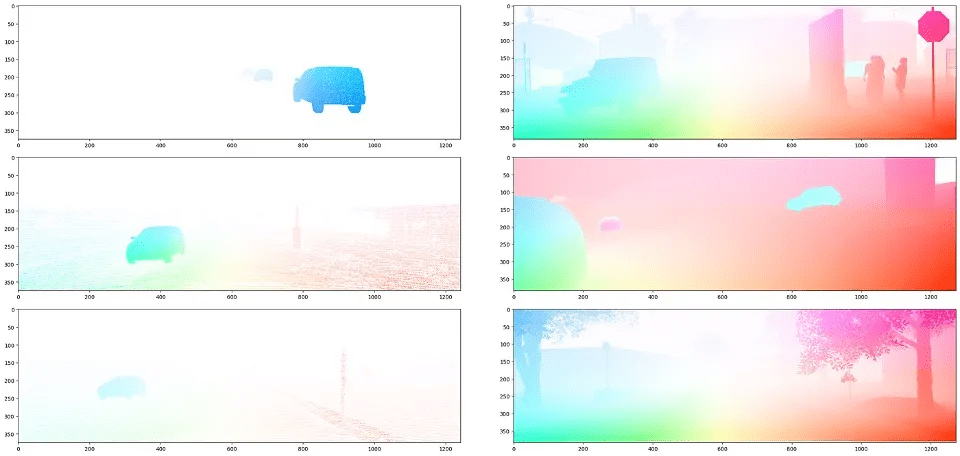

Optical flow labels from the sparse real-world KITTI data (left) and the Parallel Domain synthetic data (right). The color indicates the direction and magnitude of the flow. Author's image.

In autonomous driving, estimating per-pixel motion between video frames, also known as optical flow, is challenging with real-world data. Real data can only be labeled by using LiDAR information to estimate each object's motion, whether dynamic or static, from the trajectory of the autonomous vehicle. Because LiDAR scans are sparse, the very few public optical flow data sets also have sparse labels. This is one of the reasons why synthetic optical flow data has been shown to greatly improve performance on optical flow tasks.

A common use case for synthetic data is dealing with missing rare classes and edge cases in real data sets. Before generating synthetic data for this use case, review the tips below to consider what should be generated and how much is needed.

Identify your edge cases and rare classes

It is important to understand which edge cases a data set contains. They could be rare diseases in medical images, or unusual animals and jaywalkers in autonomous driving. It is also important to consider which edge cases are NOT in a data set. If a model needs to identify an edge case that is not present in the data set, additional data collection or synthetic data generation might be necessary.
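A quick way to start this audit is to count labels. The sketch below is a minimal Python example, assuming labels are available as a flat list of strings; the class names, the `expected_classes` set, and the `min_count` threshold are hypothetical and should come from your own project's requirements.

```python
from collections import Counter


def audit_classes(labels, expected_classes, min_count=50):
    """Split the classes a model must handle into rare and missing ones.

    `expected_classes` and `min_count` are hypothetical project settings.
    Returns (rare, missing) as sorted lists of class names.
    """
    counts = Counter(labels)  # Counter returns 0 for labels never seen
    missing = sorted(c for c in expected_classes if counts[c] == 0)
    rare = sorted(c for c in expected_classes if 0 < counts[c] < min_count)
    return rare, missing


# Toy autonomous-driving labels (made up for illustration)
labels = ["car"] * 900 + ["pedestrian"] * 95 + ["jaywalker"] * 5
expected = {"car", "pedestrian", "jaywalker", "deer"}
rare, missing = audit_classes(labels, expected)
print("rare:", rare)        # candidates for targeted synthetic data
print("missing:", missing)  # need new collection or synthetic generation
```

Here "jaywalker" is present but rare, while "deer" never appears at all, so it could only be covered by collecting more data or generating it.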

Verify that the synthetic data is representative of the real world

Synthetic data should represent real-world scenarios with a minimal domain gap, that is, minimal differences between two data sets (e.g., real and synthetic data). This can be checked through manual inspection or by using a separate model trained on real data.
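One way to apply the model-based check is to train a classifier on real data only and compare its accuracy on held-out real data with its accuracy on labeled synthetic data; a large drop hints at a domain gap. The sketch below assumes NumPy and uses a simple nearest-centroid classifier and toy 2-D data as stand-ins for a real model and data set; all function names are hypothetical.

```python
import numpy as np


def fit_nearest_centroid(X, y):
    """Fit a nearest-centroid classifier: one mean feature vector per class."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids


def predict(model, X):
    classes, centroids = model
    # Squared Euclidean distance from every sample to every centroid
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def domain_gap(model, X_real_test, y_real_test, X_syn, y_syn):
    """Accuracy on held-out real data minus accuracy on synthetic data."""
    acc_real = float((predict(model, X_real_test) == y_real_test).mean())
    acc_syn = float((predict(model, X_syn) == y_syn).mean())
    return acc_real - acc_syn


def make_blobs(rng, n_per_class, offset=0.0):
    """Hypothetical 2-D data: class 0 around (0, 0), class 1 around (4, 4)."""
    X0 = rng.normal(0.0 + offset, 1.0, size=(n_per_class, 2))
    X1 = rng.normal(4.0 + offset, 1.0, size=(n_per_class, 2))
    return np.vstack([X0, X1]), np.array([0] * n_per_class + [1] * n_per_class)


rng = np.random.default_rng(0)
X_train, y_train = make_blobs(rng, 500)                    # real training data
X_test, y_test = make_blobs(rng, 500)                      # real held-out data
X_good, y_good = make_blobs(rng, 500)                      # well-matched synthetic
X_shifted, y_shifted = make_blobs(rng, 500, offset=2.0)    # synthetic with a gap

model = fit_nearest_centroid(X_train, y_train)  # trained on real data only
print("gap (matched):", domain_gap(model, X_test, y_test, X_good, y_good))
print("gap (shifted):", domain_gap(model, X_test, y_test, X_shifted, y_shifted))
```

The well-matched synthetic set scores about as well as real test data, while the shifted one loses accuracy because its class 0 drifts toward the decision boundary.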

Make potential synthetic performance improvements quantifiable

One goal of supervised learning is to build a model that performs well on new, unseen data. That is why there are model validation procedures such as the train/test split. When augmenting a real data set with synthetic data, the split may need to be rebalanced around rare classes. For example, in autonomous driving applications, a machine learning practitioner might want to use synthetic data to target specific edge cases, such as jaywalkers. The original train/test split may not have taken the number of jaywalkers into account. In this case, it might make sense to move many of the existing jaywalker samples to the test set so that the improvement from synthetic data is measurable.
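Moving rare-class samples into the test set can be sketched in plain Python as follows. This assumes each sample is a dict with a `label` key and that `is_rare` identifies the edge case (here, jaywalkers); `move_rare_to_test` and the 80% fraction are illustrative choices, not a prescribed recipe.

```python
import random


def move_rare_to_test(train, test, is_rare, fraction=0.8, seed=0):
    """Move `fraction` of the rare samples from `train` into `test`.

    Returns new (train, test) lists; the input lists are not modified.
    """
    rng = random.Random(seed)  # fixed seed so the re-split is reproducible
    rare = [s for s in train if is_rare(s)]
    common = [s for s in train if not is_rare(s)]
    rng.shuffle(rare)
    n_move = int(round(fraction * len(rare)))
    moved, kept = rare[:n_move], rare[n_move:]
    return common + kept, test + moved


# Toy split: 10 jaywalkers in train, only 1 in test
train = [{"id": i, "label": "jaywalker" if i < 10 else "car"} for i in range(100)]
test = [{"id": 100 + i, "label": "jaywalker" if i == 0 else "car"} for i in range(20)]
is_jaywalker = lambda s: s["label"] == "jaywalker"

new_train, new_test = move_rare_to_test(train, test, is_jaywalker)
print(sum(map(is_jaywalker, new_train)), "jaywalkers left in train")
print(sum(map(is_jaywalker, new_test)), "jaywalkers now in test")
```

Synthetic jaywalker samples would then be added only to the returned training list, so the test set stays purely real and any gain on it can be attributed to the synthetic data.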

Make sure your synthetic data isn't all rare classes

A machine learning model should not learn that synthetic data consists mostly of rare classes and edge cases. Additionally, as new rare classes and edge cases are discovered, more synthetic data may need to be generated to cover them.
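A simple guard is to measure how much of a synthetic batch consists of rare classes before mixing it into training. The sketch below assumes string labels; `check_synthetic_mix` and its `max_rare_share=0.5` threshold are hypothetical, arbitrary choices.

```python
from collections import Counter


def rare_share(labels, rare_classes):
    """Fraction of samples whose label is in `rare_classes`."""
    counts = Counter(labels)
    total = sum(counts.values())
    return sum(counts[c] for c in rare_classes) / total if total else 0.0


def check_synthetic_mix(synthetic_labels, rare_classes, max_rare_share=0.5):
    """Return (share, ok); `max_rare_share` is a hypothetical threshold."""
    share = rare_share(synthetic_labels, rare_classes)
    return share, share <= max_rare_share


rare = {"jaywalker"}
edge_only = ["jaywalker"] * 90 + ["car"] * 10
mixed = ["jaywalker"] * 30 + ["car"] * 50 + ["pedestrian"] * 20
print(check_synthetic_mix(edge_only, rare))  # mostly edge cases: flagged
print(check_synthetic_mix(mixed, rare))      # edge cases plus common scenes
```

If a batch is flagged, one option is to also generate ordinary scenes so that "synthetic" does not become a shortcut feature for "rare class".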

One of the main advantages of synthetic data is that you can always generate more. It also comes already labeled. There are many ways to generate synthetic data, and the right one depends on your use case.

Statistical methods

A common statistical approach is to generate new data that follows the distribution and variability of the original data set. Statistical methods work best when the data set is relatively simple and the relationships between variables are well understood and can be defined mathematically. For example, if real data is normally distributed, as human height roughly is, synthetic data can be sampled from a normal distribution with the same mean and standard deviation as the original data set.
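The height example can be sketched with NumPy: estimate the mean and standard deviation from real samples, then draw new samples from a normal distribution with those parameters. The height values below are made up for illustration, and `synthesize_normal` is a hypothetical helper; this approach only preserves those two statistics, not relationships between variables.

```python
import numpy as np


def synthesize_normal(real, n, seed=0):
    """Draw n synthetic values from a normal fit to `real` (mean and sample std)."""
    real = np.asarray(real, dtype=float)
    mu, sigma = real.mean(), real.std(ddof=1)
    rng = np.random.default_rng(seed)
    return rng.normal(loc=mu, scale=sigma, size=n)


# Hypothetical real measurements, in centimeters
heights_cm = [160, 165, 170, 175, 180, 172, 168, 158, 185, 177]
synthetic = synthesize_normal(heights_cm, n=100_000)
print(f"real mean={np.mean(heights_cm):.1f}, synthetic mean={synthetic.mean():.1f}")
print(f"real std={np.std(heights_cm, ddof=1):.1f}, synthetic std={synthetic.std(ddof=1):.1f}")
```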

Data augmentation and CGI

A common strategy to increase the diversity and volume of training data is to modify existing data to create synthetic data. Data augmentation is widely used in image processing, where it could mean flipping images, cropping them, or adjusting their brightness. Just make sure the augmentation strategy makes sense for the project at hand. For example, in autonomous driving applications, rotating an image 180 degrees so that the road is at the top and the sky is at the bottom does not make sense.
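Here is a minimal NumPy sketch of those three augmentations for an image stored as an `H x W x C` float array in `[0, 1]`. The `augment` helper, the 90% crop size, and the 0.8 to 1.2 brightness range are assumed values; the sketch deliberately flips only horizontally, so the road stays at the bottom of the frame.

```python
import numpy as np


def augment(image, rng, crop_frac=0.9, brightness=(0.8, 1.2)):
    """Random horizontal flip, random crop, and brightness jitter.

    `image` is an H x W x C float array in [0, 1]. No vertical flips or
    180-degree rotations: for driving scenes, the sky must stay on top.
    """
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # mirror left/right only

    # Random crop covering crop_frac of each spatial dimension
    h, w = out.shape[:2]
    ch, cw = int(h * crop_frac), int(w * crop_frac)
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    out = out[top:top + ch, left:left + cw, :]

    # Scale brightness, then clip back into the valid range
    factor = rng.uniform(*brightness)
    return np.clip(out * factor, 0.0, 1.0)


# Toy "scene": bright sky in the top half, dark road in the bottom half
scene = np.zeros((100, 100, 3))
scene[:50] = 1.0
rng = np.random.default_rng(0)
augmented = [augment(scene, rng) for _ in range(4)]
print([a.shape for a in augmented])
```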

https://youtu.be/296k6OHHerfM?si=H56aB5hlpEIBtp7c

Caption: Multiformer inference in an urban scene from the SHIFT synthetic dataset.

Instead of modifying existing data, autonomous driving applications can use CGI to accurately generate a wide variety of images or videos that might not be easy to obtain in the real world. These may include rare or dangerous scenarios, specific lighting conditions, or particular vehicle types. A couple of disadvantages of this approach are that creating high-quality CGI requires significant computational resources, specialized software, and a trained team.
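A CGI pipeline is typically driven by scenario parameters. The sketch below is a hypothetical illustration of sampling such parameters, with a rare event (jaywalkers) deliberately oversampled; the `Scenario` fields, the value lists, and `sample_scenarios` are assumptions, and the step that actually renders each scenario depends on your simulation tool and is not shown.

```python
import random
from dataclasses import dataclass

# Hypothetical parameter values a renderer might accept
TIMES = ["dawn", "noon", "dusk", "night"]
WEATHER = ["clear", "rain", "fog", "snow"]
VEHICLES = ["sedan", "truck", "bus", "motorcycle"]


@dataclass(frozen=True)
class Scenario:
    time_of_day: str
    weather: str
    vehicle_type: str
    has_jaywalker: bool


def sample_scenarios(n, jaywalker_rate=0.3, seed=0):
    """Sample n scenario descriptions to hand to a (not shown) renderer.

    `jaywalker_rate` oversamples the rare event compared with real driving logs.
    """
    rng = random.Random(seed)
    return [
        Scenario(
            time_of_day=rng.choice(TIMES),
            weather=rng.choice(WEATHER),
            vehicle_type=rng.choice(VEHICLES),
            has_jaywalker=rng.random() < jaywalker_rate,
        )
        for _ in range(n)
    ]


for scenario in sample_scenarios(3):
    print(scenario)
```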

Generative AI

A generative model commonly used to create synthetic data is the generative adversarial network, or GAN for short. A GAN consists of two networks, a generator and a discriminator, that are trained simultaneously. The generator creates new examples, and the discriminator tries to distinguish real examples from generated ones. The two networks improve together: the generator gets better at creating realistic data, and the discriminator becomes more adept at detecting synthetic data. If you want to try implementing a GAN with PyTorch, check out this TDS blog post on synthetic data generation with GANs using PyTorch.
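To make the generator/discriminator loop concrete, here is a minimal PyTorch sketch that trains a tiny GAN on one-dimensional toy data. It assumes PyTorch is installed; the target distribution (normal, mean 4, standard deviation 1.25), the network sizes, learning rates, and step count are arbitrary illustrative choices, not settings from the TDS post.

```python
import torch
from torch import nn

torch.manual_seed(0)
LATENT_DIM = 8


def real_batch(n):
    # Hypothetical "real" data: samples from a normal with mean 4 and std 1.25
    return 4.0 + 1.25 * torch.randn(n, 1)


generator = nn.Sequential(nn.Linear(LATENT_DIM, 32), nn.ReLU(), nn.Linear(32, 1))
# The discriminator outputs a raw logit: higher means "looks real"
discriminator = nn.Sequential(nn.Linear(1, 32), nn.LeakyReLU(0.2), nn.Linear(32, 1))

loss_fn = nn.BCEWithLogitsLoss()
opt_g = torch.optim.Adam(generator.parameters(), lr=1e-3)
opt_d = torch.optim.Adam(discriminator.parameters(), lr=1e-3)


def train_step(batch_size=64):
    real = real_batch(batch_size)
    fake = generator(torch.randn(batch_size, LATENT_DIM))
    ones = torch.ones(batch_size, 1)
    zeros = torch.zeros(batch_size, 1)

    # 1) Discriminator: label real samples 1 and generated samples 0.
    #    detach() stops this loss from updating the generator.
    opt_d.zero_grad()
    d_loss = loss_fn(discriminator(real), ones) + loss_fn(discriminator(fake.detach()), zeros)
    d_loss.backward()
    opt_d.step()

    # 2) Generator: try to make the discriminator label generated samples as real.
    opt_g.zero_grad()
    g_loss = loss_fn(discriminator(fake), ones)
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()


for _ in range(1000):
    train_step()

with torch.no_grad():
    samples = generator(torch.randn(1000, LATENT_DIM))
print(f"synthetic mean={samples.mean().item():.2f}, std={samples.std().item():.2f}")
```

The discriminator returns logits rather than probabilities, which is why `BCEWithLogitsLoss` is used; it applies the sigmoid internally in a numerically stable way.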

These methods work well for complex data sets and can generate very realistic, high-quality data. However, as the image above shows, it is not always easy to control specific attributes such as the color, text or size of generated objects.

If a project does not have enough diverse, high-quality real data, synthetic data could be an option. After all, more synthetic data can always be generated. This is an important difference between real and synthetic data, as synthetic data is much easier to improve. If you have any questions or thoughts about this blog post, feel free to contact us in the comments below or via Twitter.

Michael Galarnyk is a data science professional and works as a product marketing content lead at Parallel Domain.
