AI’s growing influence in large organizations brings crucial challenges in managing AI platforms. These include developing a scalable and operationally efficient platform that adheres to organizational compliance and security standards. Amazon SageMaker Studio offers a comprehensive set of capabilities for machine learning (ML) practitioners and data scientists. These include a fully managed AI development environment with an integrated development environment (IDE), simplifying the end-to-end ML workflow. Its collaborative capabilities, such as real-time coediting and notebook sharing within the team, ensure smooth teamwork, while its scalability and high-performance training cater to large datasets. With built-in security, cost-effectiveness, and a range of pre-built tools like Amazon SageMaker Autopilot, Amazon SageMaker JumpStart, and Amazon SageMaker Feature Store, SageMaker Studio is a powerful platform for accelerating AI projects and empowering data scientists at every level of expertise.

Deutsche Bahn is a leading transportation organization in Germany with a revenue of 56.3 billion EUR (in 2022), a workforce of 336,884 employees (including 221,343 employees in Germany), and operations spanning 130 countries. They offer a wide range of services, including public and regional transport, freight services, and rail infrastructure. Through the integrated operation of traffic and railway infrastructure, as well as the economically and ecologically intelligent connection of all modes of transport, Deutsche Bahn moves people and goods. Deutsche Bahn has been at the forefront in adopting AI, using SageMaker Studio as a key AI platform. At Deutsche Bahn, a dedicated AI platform team manages and operates the SageMaker Studio platform, and multiple data analytics teams within the organization use the platform to develop, train, and run various analytics and ML activities.

The AI platform team’s key objective is to ensure seamless access to Workbench services and SageMaker Studio for all Deutsche Bahn teams and projects, with a primary focus on data scientists and ML engineers. This platform helps Deutsche Bahn realize a spectrum of use cases, ranging from railway maintenance and forecasting to future applications in generative AI.

The AI platform managed service, built on SageMaker Studio, seamlessly aligns with Deutsche Bahn’s group-wide platform strategy. It meets the company’s compliance requirements, enables swift project initiation for each team by provisioning a SageMaker domain, and reduces maintenance overhead through an overarching operating model. Major benefits include high scalability of the service, in large part due to automation and a self-service model, and an attractive pricing model that’s primarily based on resource consumption.

“SageMaker Studio provided us a common platform that is scalable, security compliant, and addresses the development needs of data scientists from multiple data analytics teams within the DB organization. Before this, each team managed and operated their own JupyterLab notebooks, which was not efficient or cost-effective. Within 8 weeks, we onboarded over 120 developers, provisioned 25 SageMaker domains, and quickly got started using this platform.”

– Emmanuel Drosos, product owner at DB Systel.

In this post, we explore how Deutsche Bahn scaled and operated their AI platform using SageMaker Studio for multiple teams, while ensuring robust security and oversight.

Solution overview

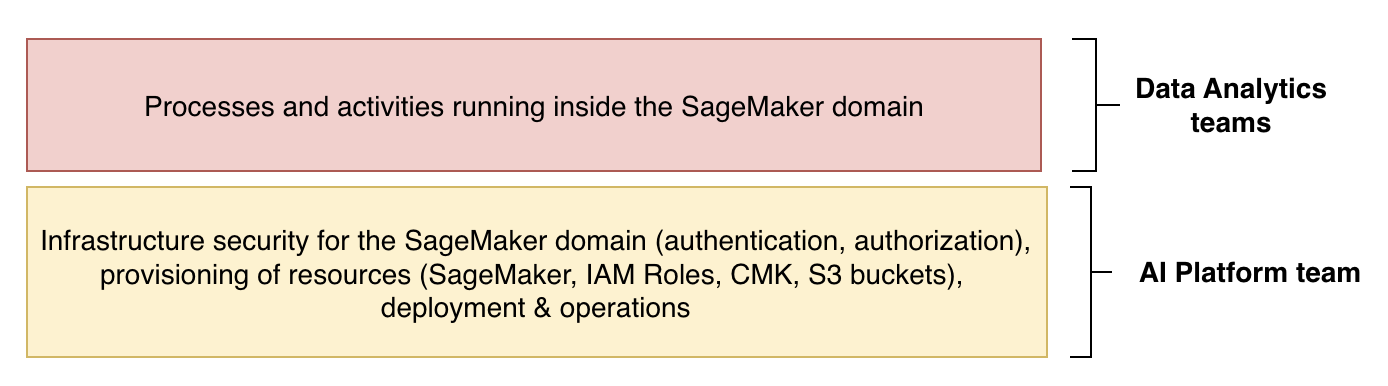

The architecture at Deutsche Bahn consists of a central platform account managed by a platform team responsible for managing infrastructure and operations for SageMaker Studio. SageMaker Studio resources are grouped by SageMaker domains, each consisting of an associated Amazon Elastic File System (Amazon EFS) volume, a list of authorized users, and a variety of security, application, policy, and Amazon Virtual Private Cloud (Amazon VPC) configurations. At Deutsche Bahn, data scientists from various teams use SageMaker domains for their ML activities; each team has a dedicated SageMaker domain that they use for developing and testing ML models and for collaborating through features such as notebook sharing.

From an infrastructure perspective, the VPC provisioned in the AI platform account as shown in the following figure has no outbound internet connectivity to ensure security and compliance. For high availability, multiple identical private isolated subnets are provisioned. The SageMaker Studio domains are deployed in VPC only mode, which creates an elastic network interface for communication between the SageMaker service account (AWS service account) and the platform account’s VPC. Endpoints such as the SageMaker API, SageMaker Studio, and SageMaker notebook endpoints facilitate secure and reliable communication between the platform account’s VPC and the SageMaker domain managed by AWS in the SageMaker service account.

Each data analytics team is able to request one or multiple SageMaker domains through the company’s internal self-service portal. This process of ordering a SageMaker domain is orchestrated through a separate workflow process (via AWS Step Functions). During this orchestration flow, an Azure Active Directory (AD) group for the data analytics team is provisioned with the AD group name corresponding to the domain name. The orchestration leads to a continuous integration and continuous deployment (CI/CD) pipeline deploying an AWS Cloud Development Kit (AWS CDK) app consisting of a SageMaker domain for the respective team.

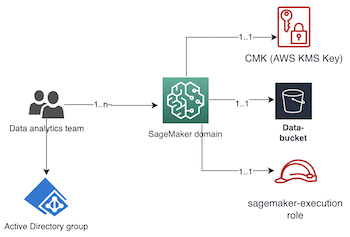

In addition to the SageMaker domain, a customized AWS Identity and Access Management (IAM) role (SageMaker-execution-role), Amazon Simple Storage Service (Amazon S3) bucket (data-bucket), customer managed key (CMK), and other AWS resources are provisioned during the deployment process by the AWS CDK app, as illustrated in the following figure. The AD group contains the data scientists who need access to their team’s SageMaker domain. The AD group name corresponds to the SageMaker domain’s name and is primarily used during the authorization process.

Client separation is implemented on the level of SageMaker domains by using IAM authentication mode. A domain-specific IAM role (SageMaker-execution-role) is attached to each domain that follows the principle of least privilege and is assumed by the data analytics team during the login process. This role grants data scientists in the team the ability to perform various activities, such as running processing jobs, hyperparameter tuning jobs, transformation jobs, and experiments, as well as creating models. These ML activities are run on behalf of the user by SageMaker using the iam:PassRole permission. However, certain actions like creating S3 buckets, modifying IAM roles, updating SageMaker domains, and provisioning large instances are restricted for security, compliance, and cost control reasons. The associated IAM policy makes sure that the data analytics team only has access to the relevant S3 bucket and CMK for their authorized domain, as depicted in the following figure. Additionally, the role SageMaker-execution-role allows the team members to assume roles in other accounts within the Deutsche Bahn organization from SageMaker Studio, providing them with flexibility to access resources like Amazon Relational Database Service (Amazon RDS), other S3 buckets, and Amazon Athena. The IAM policy uses aws:RequestTag and aws:ResourceTag for fine-grained access control during SageMaker activities, like processing jobs, training jobs, and model creation. These tags also help track associated costs for the domain. For more information, refer to Actions, resources, and condition keys for Amazon SageMaker.
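To make the tag-based access control more concrete, the following Python sketch builds a policy document that uses aws:RequestTag and aws:ResourceTag conditions. It is an illustration under stated assumptions, not Deutsche Bahn's actual policy: the tag key `sagemaker-domain-name` and the selection of actions are hypothetical, because the post does not list them.

```python
import json

# Hypothetical tag key; the post does not name the tag that is actually used.
DOMAIN_TAG_KEY = "sagemaker-domain-name"


def build_tag_policy(domain_name: str) -> dict:
    """Return an IAM policy document that scopes SageMaker actions to one domain via tags."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Create calls are allowed only if the request carries the domain tag.
                "Sid": "CreateOnlyWithDomainTag",
                "Effect": "Allow",
                "Action": [
                    "sagemaker:CreateProcessingJob",
                    "sagemaker:CreateTrainingJob",
                    "sagemaker:CreateModel",
                    "sagemaker:AddTags",
                ],
                "Resource": "*",
                "Condition": {
                    "StringEquals": {f"aws:RequestTag/{DOMAIN_TAG_KEY}": domain_name}
                },
            },
            {
                # Existing resources can be managed only if they carry the domain tag.
                "Sid": "ManageOnlyDomainTaggedResources",
                "Effect": "Allow",
                "Action": [
                    "sagemaker:DescribeProcessingJob",
                    "sagemaker:StopProcessingJob",
                    "sagemaker:DescribeTrainingJob",
                    "sagemaker:StopTrainingJob",
                    "sagemaker:DeleteModel",
                ],
                "Resource": "*",
                "Condition": {
                    "StringEquals": {f"aws:ResourceTag/{DOMAIN_TAG_KEY}": domain_name}
                },
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_tag_policy("team1"), indent=2))
```

Because every job and model carries the domain tag, the same tag can be activated as a cost allocation tag to attribute spend per domain.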

The CMK encrypts both the SageMaker domain’s file system contents stored in Amazon EFS and the contents of the S3 bucket (data-bucket) that is provisioned to store data for SageMaker processing and transformation jobs. In addition, resource-based policies, such as the bucket policy and CMK policy, provide an extra layer of security, restricting both access to only authorized AI team members and permitted actions on these resources.
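The domain settings described so far (IAM authentication mode, VPC only mode, a domain-specific execution role, and CMK encryption of the EFS volume) can be summarized as the request parameters of the SageMaker CreateDomain API. The following Python sketch only assembles that parameter dictionary. Deutsche Bahn provisions domains through AWS CDK rather than direct API calls, so treat this as an equivalent illustration; the function name and all argument values are placeholders.

```python
def build_domain_request(
    domain_name: str,
    vpc_id: str,
    subnet_ids: list,
    execution_role_arn: str,
    kms_key_id: str,
) -> dict:
    """Assemble keyword arguments for the SageMaker CreateDomain API."""
    return {
        "DomainName": domain_name,
        # Users enter through IAM (presigned URLs), not IAM Identity Center.
        "AuthMode": "IAM",
        # Studio traffic stays inside the customer VPC; no direct internet access.
        "AppNetworkAccessType": "VpcOnly",
        "VpcId": vpc_id,
        "SubnetIds": list(subnet_ids),
        # Customer managed key used to encrypt the domain's EFS volume.
        "KmsKeyId": kms_key_id,
        # Role that user profiles in this domain run with by default.
        "DefaultUserSettings": {"ExecutionRole": execution_role_arn},
    }


if __name__ == "__main__":
    request = build_domain_request(
        domain_name="team1",
        vpc_id="vpc-0123456789abcdef0",  # placeholder
        subnet_ids=["subnet-aaa", "subnet-bbb"],  # placeholders
        execution_role_arn="arn:aws:iam::111122223333:role/SageMaker-execution-role",
        kms_key_id="1234abcd-12ab-34cd-56ef-1234567890ab",  # placeholder
    )
    print(request)
```

With boto3 installed and credentials configured, the dictionary could be passed as `boto3.client("sagemaker").create_domain(**request)`.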

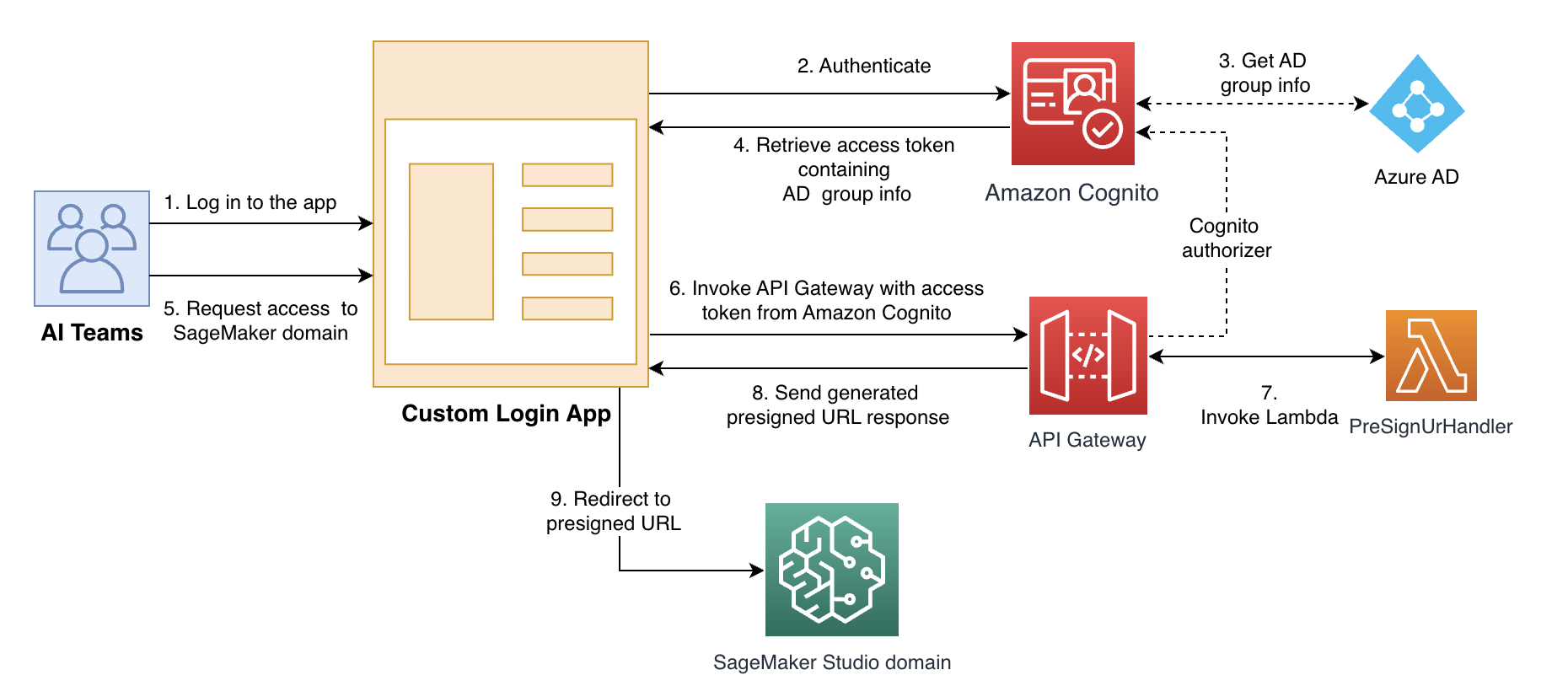

The AI team does not have AWS Management Console access to the AI platform team’s account. To access SageMaker Studio, as illustrated in the following figure, the data scientists from the data analytics team authenticate through an Amazon Cognito based custom login application, which generates a presigned URL. After logging in to this custom application, the user receives an OAuth access token that contains information such as the AD group name. The user then requests SageMaker domain access through the UI, which triggers an Amazon API Gateway call to generate a presigned URL. API Gateway uses an Amazon Cognito authorizer to validate the OAuth access token in the request header and invokes the PreSignUrlGenerator AWS Lambda function. The PreSignUrlGenerator function validates user access permissions for the requested SageMaker domain by comparing the AD group name in the access token against the requested SageMaker domain. Upon successful authorization, the PreSignUrlGenerator function creates a SageMaker user profile on first login and generates a presigned URL response. The custom login application then redirects the user to the requested SageMaker domain.
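The authorization step can be sketched roughly as follows in Python. This is not the actual PreSignUrlGenerator code: the function names are hypothetical, and it assumes the group name arrives in the `cognito:groups` claim and the user name in the `username` claim of an already validated access token. The SageMaker client is passed in as a parameter (for example, `boto3.client("sagemaker")`) so that the logic can be exercised without AWS access.

```python
def is_authorized(claims: dict, domain_name: str) -> bool:
    """Allow access only if one of the user's groups matches the domain name."""
    return domain_name in claims.get("cognito:groups", [])


def presign_for_user(sm_client, claims: dict, domain_id: str, domain_name: str,
                     session_seconds: int = 43200) -> str:
    """Return a presigned Studio URL, creating the user profile on first login."""
    if not is_authorized(claims, domain_name):
        raise PermissionError(f"user is not a member of group '{domain_name}'")

    user = claims["username"]

    # Look for an existing profile with exactly this name in the domain.
    profiles = sm_client.list_user_profiles(
        DomainIdEquals=domain_id, UserProfileNameContains=user
    )["UserProfiles"]
    if not any(p["UserProfileName"] == user for p in profiles):
        # First login: create the profile. A real function may need to wait
        # until the profile is in service before requesting the URL.
        sm_client.create_user_profile(DomainId=domain_id, UserProfileName=user)

    response = sm_client.create_presigned_domain_url(
        DomainId=domain_id,
        UserProfileName=user,
        SessionExpirationDurationInSeconds=session_seconds,
    )
    return response["AuthorizedUrl"]
```

Keeping the group check in a small pure function makes the access rule easy to unit test independently of the SageMaker calls.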

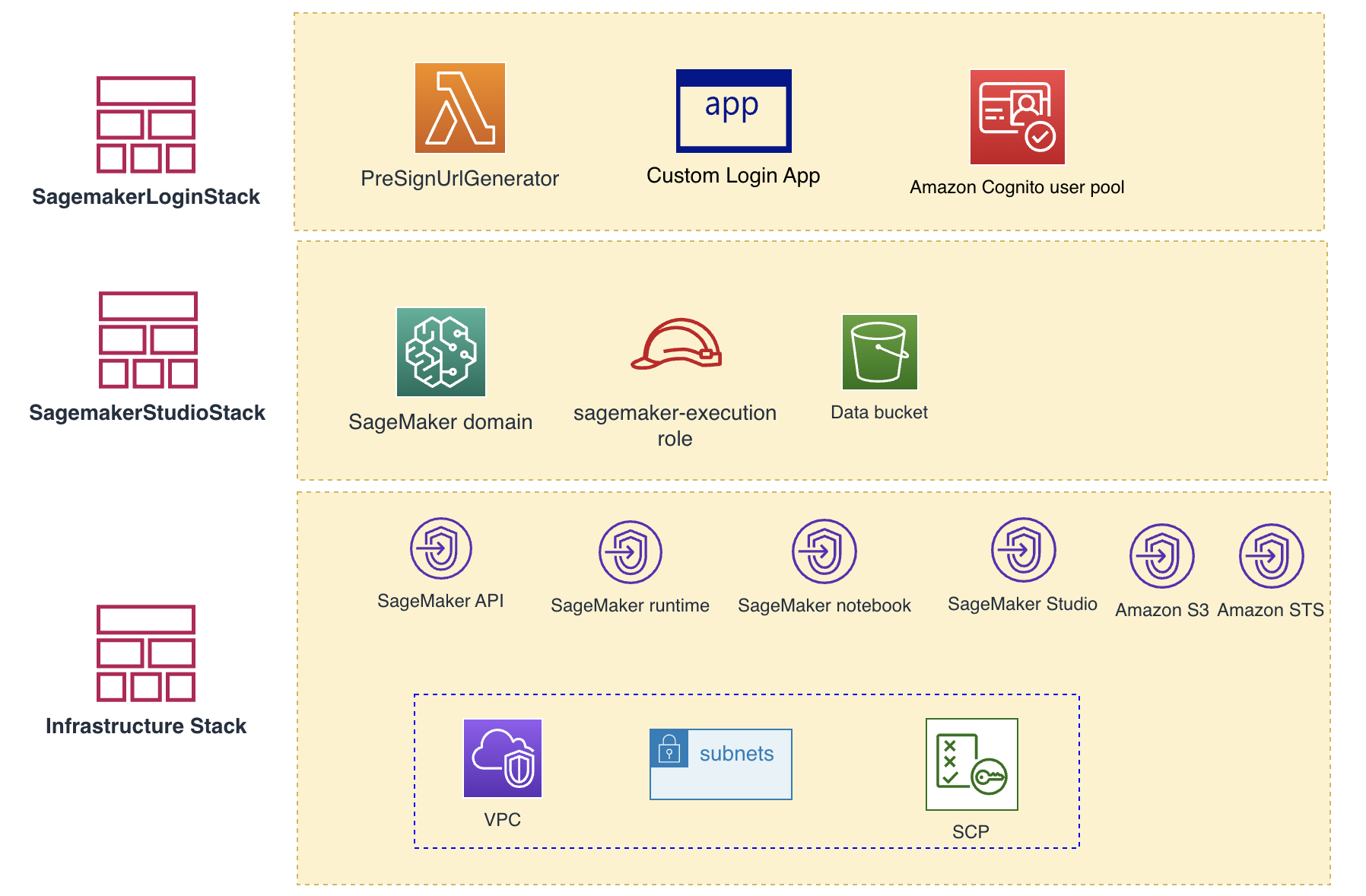

AWS CDK

The solution at Deutsche Bahn uses AWS CDK as infrastructure as code (IaC) to provision a SageMaker domain along with resources like S3 buckets and a CMK. The following figure illustrates the stacks and associated resources used for SageMaker deployment. The infrastructure stack takes care of setting up essential resources like VPC, subnets, and multiple SageMaker endpoints. Resources such as the VPC, subnets, and service control policies (SCPs) are managed by a central cloud team through a different stack (but are shown here for simplicity). The SageMakerStudioStack is primarily responsible for provisioning a SageMaker domain, a dedicated data bucket, a CMK, and the dedicated IAM role SageMaker-execution-role. Notably, each SageMaker domain is provisioned through its individual SageMakerStudioStack.

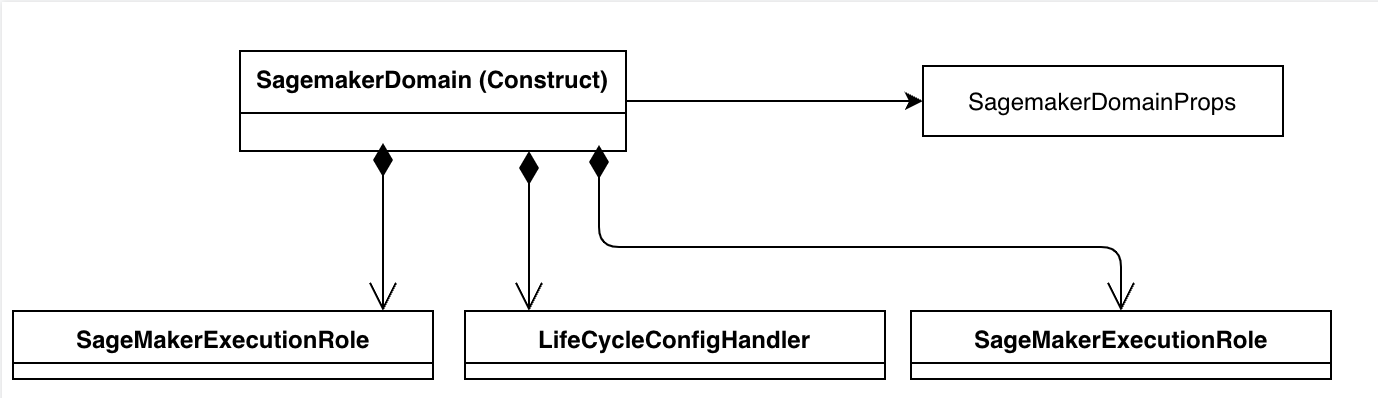

The solution uses a purpose-built L3 construct (SageMaker Studio domain), as shown in the following figure, for the SageMaker domain resource. SageMaker Studio has a lifecycle configuration feature that enables specific initializations during the startup of JupyterLab or KernelGateway apps.

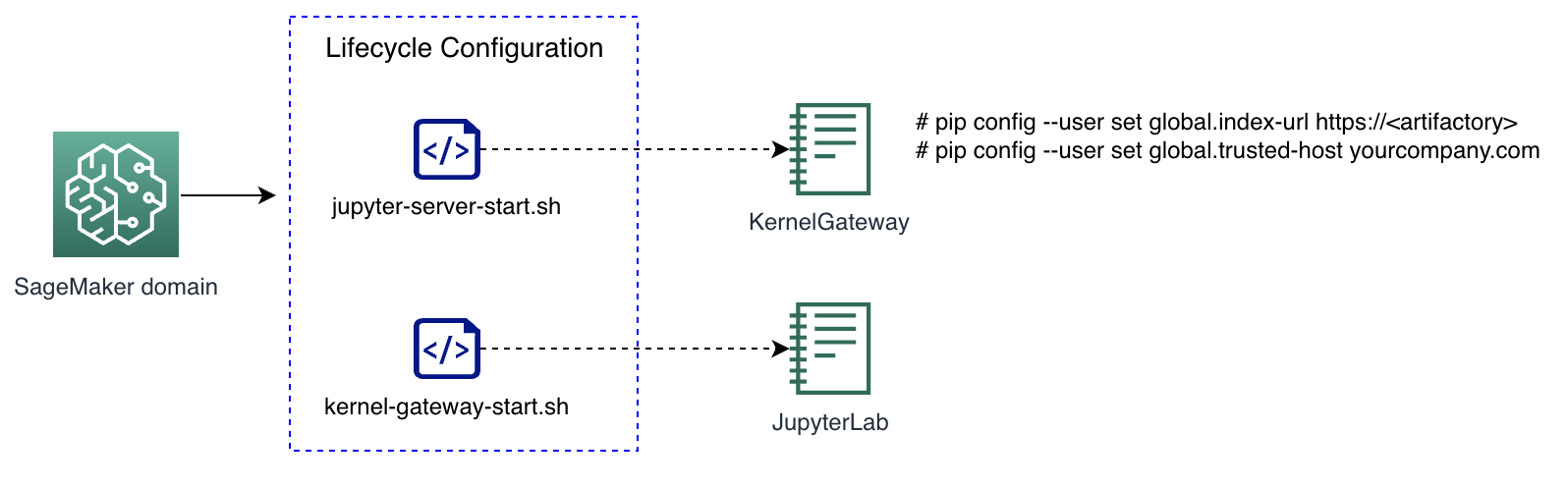

Deutsche Bahn uses the lifecycle configuration as shown in the following figure to automatically detect and shut down idle instances in the SageMaker domain, reducing unnecessary costs. Due to restricted outbound connectivity, the data analytics team uses internally hosted images and third-party libraries from the company’s internal artifactory. The lifecycle configuration script for KernelGateway configures pip and conda package managers to redirect downloads to the internally hosted artifactory location. As of this writing, there is no AWS CDK construct for the lifecycle configuration resource; therefore, they use a custom CDK resource to provision and manage the LifeCycleConfig script. Custom resources in AWS CDK offer the ability to provision and manage resources not directly supported by AWS CloudFormation or AWS CDK constructs.
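As a rough illustration of the package manager redirection, the following Python sketch renders a small KernelGateway lifecycle script and base64-encodes it, which is the form the SageMaker CreateStudioLifecycleConfig API expects for the script content. The artifactory URLs, the configuration name, and the helper functions are hypothetical, and the idle shutdown logic that Deutsche Bahn also runs is not shown.

```python
import base64


def build_lifecycle_script(pip_index_url: str, conda_channel_url: str) -> str:
    """Render a shell script that points pip and conda at internal mirrors."""
    lines = [
        "#!/bin/bash",
        "set -eux",
        # Send all pip downloads to the internal package index.
        f"pip config set global.index-url {pip_index_url}",
        # Prefer the internal conda channel and drop the public default one.
        f"conda config --add channels {conda_channel_url}",
        "conda config --remove channels defaults || true",
    ]
    return "\n".join(lines) + "\n"


def build_lifecycle_request(name: str, script: str) -> dict:
    """Assemble keyword arguments for the CreateStudioLifecycleConfig API."""
    return {
        "StudioLifecycleConfigName": name,
        # The API expects the script content as a base64-encoded string.
        "StudioLifecycleConfigContent": base64.b64encode(
            script.encode("utf-8")
        ).decode("ascii"),
        "StudioLifecycleConfigAppType": "KernelGateway",
    }


if __name__ == "__main__":
    script = build_lifecycle_script(
        "https://artifactory.example.internal/api/pypi/pypi/simple",  # placeholder
        "https://artifactory.example.internal/conda/conda-forge",  # placeholder
    )
    print(build_lifecycle_request("kernel-gateway-mirrors", script))
```

A custom resource handler could pass such a dictionary to `create_studio_lifecycle_config` on the SageMaker client during deployment.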

Installation

The sample AWS CDK application demonstrates how various components, including the SageMaker domain, lifecycle configuration, authentication through Amazon Cognito, and an IAM role with least privileges, function together. Within the application, the SagemakerStudioStack class handles the provisioning of a SageMaker domain, the IAM role (sagemaker-execution-role) that users assume, a CMK, the lifecycle configuration, a SageMaker user profile, an S3 bucket for data processing, and an Amazon Cognito user group. The SagemakerLoginStack, on the other hand, is responsible for deploying the Amazon Cognito user pool, Lambda function, and API Gateway for generating presigned URLs. The CognitoUserStack primarily focuses on deploying a user within the Amazon Cognito user pool.

You can run the following commands to compile, synthesize, and deploy the application. You should adjust the account, user, and password in the sample code for your application. The password should be at least 8 characters, with uppercase characters and numbers. The user parameter is the SageMaker domain user that will be authenticated by Amazon Cognito.

- Download the source code from the GitHub repo.

- Bootstrap the AWS account. In the following code, adjust the account number and Region as needed:

- Install the packages and compile the code:

- Synthesize the AWS CDK application:

- Deploy the application with all stacks into the account and Region of your choice:

- Download the Postman app to make an API call.

If you don’t have a Postman account, create a free account with your email. If you already have an account, sign in to your account.

- On the File menu, choose Import and import the Postman environment JSON file included in the GitHub repo.

- On the Environments tab in Postman, locate the environment called SageMaker.

- Add the following environment variables, which you see as part of the stack deployment output from SagemakerLoginStack. Use the following parameters (fetch the values from the output during cdk deploy):

- domainName – The domain name parameter you passed in cdk deploy, for example team1

- client-id – The Amazon Cognito client ID

- client-secret – The Amazon Cognito client secret.

- SageMaker-presigned-api – The URL of the API Gateway created by AWS CDK, which generates the presigned URL

- cognito-signin-endpoint – The endpoint URL of the Amazon Cognito domain where the client app (in this case, Postman) authenticates by providing credentials of the user (demo-user)

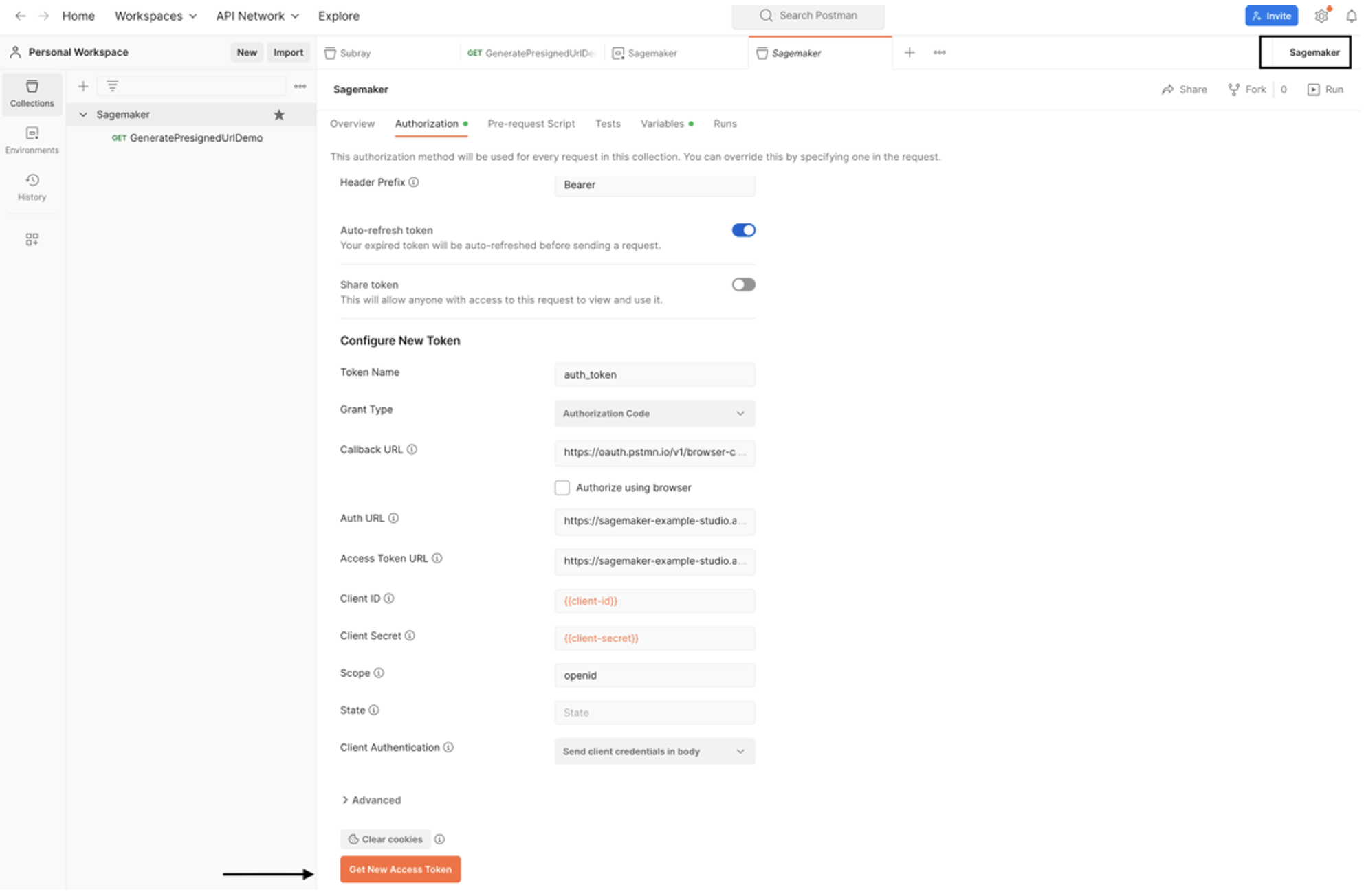

The next step is to generate an OAuth2 token.

- On the Authorization tab, choose the SageMaker environment and choose Generate New Access Token.

All the values on this tab should be prefilled.

- Update the environment variables and choose Get New Access Token.

- In the pop-up window that opens, log in to Amazon Cognito with the user name (demo-user) and password you used earlier.

Upon successful authentication, a new access token is generated.

- Choose Use Token.

- Choose GeneratePresignedUrlDemo in the Postman SageMaker collections and choose Send.

Make sure you selected the right environment (SageMaker) on the drop-down list.

This makes a REST API call to API Gateway and generates a presigned URL to access the SageMaker domain. You can see this URL in the response body.

- Copy this URL and enter it in the browser window.

A new SageMaker domain will be launched with your user profile.

This demo application supports SageMaker features like training jobs, processing jobs, and model endpoints. Note that features like Amazon SageMaker Canvas, SageMaker JumpStart, and SageMaker Feature Store are not activated.

Clean up

Complete the following steps to clean up your resources:

- On the SageMaker console, in the navigation pane, choose Domain, User Profile, and Apps.

- Delete all running apps (KernelGateway or JupyterLab) from this solution.

- Delete all the SageMaker user profiles you created during the login step.

- On the Amazon EFS console, delete the EFS file system created for this post.

- Run the following command to delete the resources created with the AWS CDK:

Conclusion

This post highlighted how Deutsche Bahn effectively used SageMaker Studio to revamp its AI platform, resulting in a scalable, automated, and manageable solution to support its diverse data analytics teams. This architecture features a central platform account, a self-service domain ordering process, and infrastructure provisioning using AWS CDK. The deployment process incorporates a CI/CD pipeline, ensuring the smooth delivery of SageMaker domains.

Overall, the transformation brought about by SageMaker Studio has empowered Deutsche Bahn to construct a robust platform for their AI initiatives, catering to over 100 developers and managing 20 SageMaker domains within a single AWS account.

Lastly, we extend our sincere appreciation to Nico Seegert (d-fine) and Philipp Vollmer (Deutsche Bahn), whose invaluable contributions were instrumental in shaping this architecture.

For further reading, refer to the following resources:

___________________________________________________________________________________________

About the authors

Prasanna Tuladhar is a Cloud Infrastructure Architect at AWS Professional Services in Munich, Germany. Specializing in cloud infrastructure, workload migration, and DevOps on the AWS platform, he empowers customers to achieve their business objectives. Outside of work, he enjoys jogging, hiking, and quality time with his family.

Emmanuel Drosos is a Product Owner for the AI platform at DB Systel, a subsidiary of Deutsche Bahn (DB) Germany. With a passion for innovation and technology, Emmanuel spearheads initiatives aimed at leveraging the power of the cloud to drive the AI platform at DB (Deutsche Bahn). The ai.Platform is one of DB’s group-wide development platforms. It includes AI services and tools for the development of AI (machine learning) models and directly usable AI services. Simple, integrated, and scalable. He works closely with other DB customers to unlock the full potential of the AI platform, enabling them to achieve their business objectives efficiently and effectively. Outside of his professional activities, Emmanuel enjoys traveling and is an enthusiastic nature and hiking lover.

Vishwanath Bhat is a DevOps Architect at AWS Professional Services, based in Germany. He helps customers get the full benefit of the cloud and achieve their business goals with the AWS Cloud. When not working, he likes swimming in alpine lakes, hiking, reading, and playing football.

Kumudhan Cherarajan is a DevOps Consultant at AWS Professional Services, based in Switzerland. He is passionate about helping customers adopt processes and services that increase their efficiency in the cloud journey. When not working, he likes to play cricket and music.
