Large language models (LLMs) have gained significant attention in the field of simultaneous speech-to-speech translation (SimulS2ST). This technology has become crucial for low-latency communication in various scenarios, such as international conferences, live broadcasts, and online subtitles. The main challenge of SimulS2ST lies in producing high-quality translated speech with minimal delay. This requires a sophisticated policy to determine the optimal times to initiate translation within streaming speech inputs (READ action) and subsequently generate coherent target speech outputs (WRITE action).

Current methodologies face several challenges. Existing simultaneous translation methods mainly focus on text-to-text (Simul-T2TT) and speech-to-text (Simul-S2TT) translation. To achieve SimulS2ST, these approaches typically rely on cascading external modules such as automatic speech recognition (ASR) and text-to-speech synthesis (TTS). However, a cascade tends to amplify inference errors from one module to the next and prevents joint optimization of its components, highlighting the need for a more integrated solution.

Researchers have made several attempts to address the challenges of simultaneous speech-to-speech translation, mainly through Simul-T2TT and Simul-S2TT methods. In Simul-T2TT, approaches are classified into fixed and adaptive methods. Fixed methods, such as the wait-k policy, employ a predefined strategy of waiting for a set number of tokens before alternating between READ and WRITE actions. Adaptive methods use techniques such as monotonic attention, alignments, non-autoregressive architectures, or language models to decide dynamically when to read and when to write. For Simul-S2TT, the focus has been on speech segmentation. Fixed pre-decision methods divide speech into segments of equal length, while adaptive methods divide the speech input into words or segments before applying Simul-T2TT policies. Some researchers have also explored applying offline models to Simul-S2TT tasks. Despite these advances, these methods still rely heavily on cascading external modules, which can lead to error propagation and makes it difficult to jointly optimize the translation process.
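To make the fixed strategy concrete, here is a minimal sketch of a wait-k schedule. The function name `wait_k_actions` and its parameters are illustrative and not taken from any particular toolkit; the sketch assumes the source and target lengths are known up front, which a real streaming system would not have.

```python
from typing import List


def wait_k_actions(num_source: int, num_target: int, k: int) -> List[str]:
    """Return the READ/WRITE sequence produced by a wait-k schedule.

    The schedule first reads k source tokens, then alternates one WRITE
    with one READ until the source is exhausted, and finally writes the
    remaining target tokens.
    """
    actions: List[str] = []
    read, written = 0, 0
    while written < num_target:
        # Stay k tokens ahead of the output while source tokens remain.
        if read < min(written + k, num_source):
            actions.append("READ")
            read += 1
        else:
            actions.append("WRITE")
            written += 1
    return actions


if __name__ == "__main__":
    print(wait_k_actions(num_source=4, num_target=4, k=2))
```

A smaller k lowers latency but forces the model to translate with less source context, which is the trade-off that adaptive policies try to handle per token rather than with one global setting.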

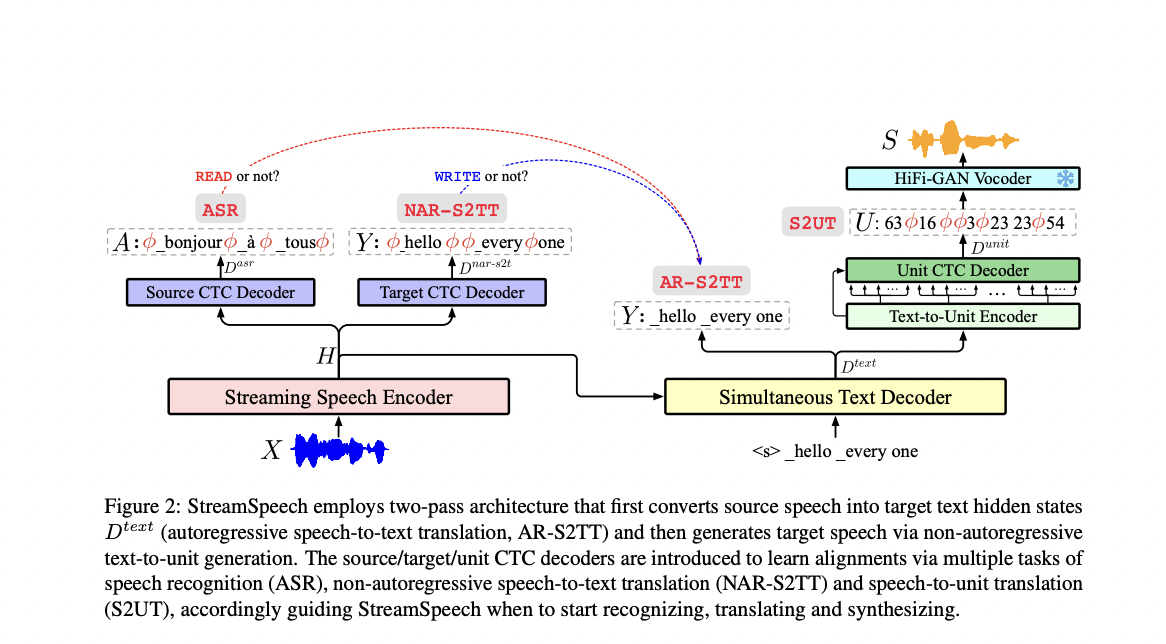

Researchers from the Key Laboratory of Intelligent Information Processing, Institute of Computing Technology, Chinese Academy of Sciences (ICT/CAS), the Key Laboratory of AI Safety, Chinese Academy of Sciences, the University of Chinese Academy of Sciences, and the School of Future Science and Engineering, Soochow University present StreamSpeech, which addresses the challenges of SimulS2ST by introducing textual information for both source and target speech, providing intermediate supervision, and guiding the policy through text-based alignments. This direct SimulS2ST model employs a two-pass architecture: it first translates the source speech into hidden states of the target text and then converts them into target speech. Multiple CTC decoders, optimized using ASR and S2TT auxiliary tasks, provide intermediate supervision and learn alignments for policy guidance. By jointly optimizing all modules through multi-task learning, StreamSpeech learns translation and policy together, potentially overcoming the limitations of previous cascaded approaches.
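As a rough illustration of how CTC alignments could guide a READ/WRITE policy, the sketch below applies standard greedy CTC decoding (merge repeated labels, then drop blanks) to the frame-level outputs of a source-side and a target-side CTC decoder. The decision rule in `num_writable` is an assumption made for this example, not the exact rule used by StreamSpeech, and the blank index and function names are hypothetical.

```python
from typing import List

BLANK = 0  # assumed index of the CTC blank label


def ctc_collapse(frame_ids: List[int]) -> List[int]:
    """Greedy CTC decoding: merge consecutive repeats, then drop blanks."""
    tokens: List[int] = []
    prev = None
    for label in frame_ids:
        if label != prev and label != BLANK:
            tokens.append(label)
        prev = label
    return tokens


def num_writable(src_frames: List[int], tgt_frames: List[int],
                 prev_src_count: int, num_written: int) -> int:
    """Assumed rule: WRITE only when the source CTC has recognized a new
    token and the target CTC predicts more tokens than already written.
    Returns how many target tokens may be written (0 means READ)."""
    src_count = len(ctc_collapse(src_frames))
    tgt_count = len(ctc_collapse(tgt_frames))
    if src_count > prev_src_count and tgt_count > num_written:
        return tgt_count - num_written
    return 0


if __name__ == "__main__":
    print(ctc_collapse([0, 5, 5, 0, 5, 7, 7, 0]))
    print(num_writable([0, 3, 3, 0, 4], [0, 9, 0, 0, 0],
                       prev_src_count=1, num_written=0))
```

The point of the sketch is that token counts from the two CTC decoders give a cheap, text-level signal for how much source content has arrived and how much target content it supports, without running a separate ASR system.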

The StreamSpeech architecture consists of three main components: a streaming speech encoder, a simultaneous text decoder, and a synchronized text-to-unit generation module. The streaming speech encoder uses a chunk-based Conformer design, which allows it to process streaming inputs while maintaining bidirectional encoding within local chunks. The simultaneous text decoder generates target text based on the hidden states of the source speech, guided by a policy that determines when to generate each target token. This policy is based on alignments learned by multiple CTC decoders, which are optimized through ASR and S2TT auxiliary tasks. The text-to-unit generation module employs a non-autoregressive architecture to synchronously generate units corresponding to the decoded text. Finally, a HiFi-GAN vocoder synthesizes the target speech from these units.
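The idea of bidirectional encoding within local chunks (fragments) can be sketched as an attention mask. The example below is a simplified illustration, not StreamSpeech's implementation: it assumes each frame may attend to every frame in its own chunk and to all earlier chunks, and the name `chunk_attention_mask` is hypothetical.

```python
from typing import List


def chunk_attention_mask(num_frames: int, chunk_size: int) -> List[List[bool]]:
    """Build a mask where mask[i][j] is True if frame i may attend to frame j.

    Frames see their whole chunk (including later frames inside it) plus
    all previous chunks, but nothing from future chunks.
    """
    mask: List[List[bool]] = []
    for i in range(num_frames):
        # Exclusive end index of the chunk that contains frame i.
        chunk_end = (i // chunk_size + 1) * chunk_size
        mask.append([j < chunk_end for j in range(num_frames)])
    return mask


if __name__ == "__main__":
    for row in chunk_attention_mask(num_frames=4, chunk_size=2):
        print(["x" if allowed else "." for allowed in row])
```

With this kind of mask the encoder only needs to wait for the current chunk to complete before producing its states, so the chunk size directly bounds the encoder's look-ahead latency.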

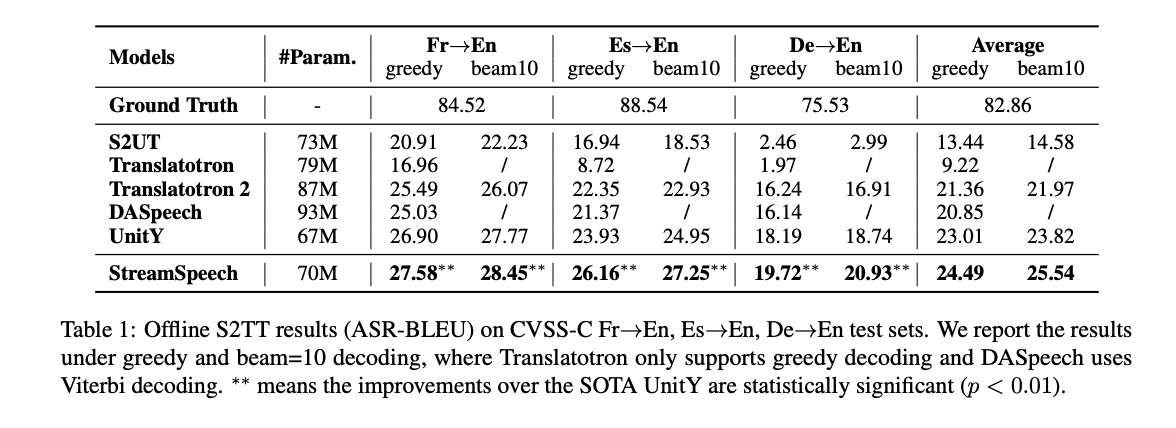

StreamSpeech demonstrates superior performance in both offline and simultaneous S2ST tasks. In offline S2ST, it outperforms the state-of-the-art UnitY model with an average improvement of 1.5 BLEU. The model architecture, which combines autoregressive speech-to-text translation with non-autoregressive text-to-unit generation, is effective in balancing modeling capability and alignment capture. In simultaneous S2ST, StreamSpeech significantly outperforms the wait-k baseline, showing an improvement of approximately 10 BLEU under low-latency conditions in French-, Spanish-, and German-to-English translation. The alignment-derived policy allows for more appropriate translation timing and coherent target speech generation. Furthermore, StreamSpeech shows advantages over cascaded systems, highlighting the benefits of its direct approach in reducing error accumulation and improving overall performance in SimulS2ST tasks.

StreamSpeech represents a significant advancement in simultaneous speech-to-speech translation technology. This all-in-one model handles streaming ASR, simultaneous translation, and real-time speech synthesis within a unified framework. Its comprehensive approach enables improved performance across multiple tasks, including offline speech-to-speech translation, streaming ASR, simultaneous speech-to-text translation, and simultaneous speech-to-speech translation.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project.

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.
