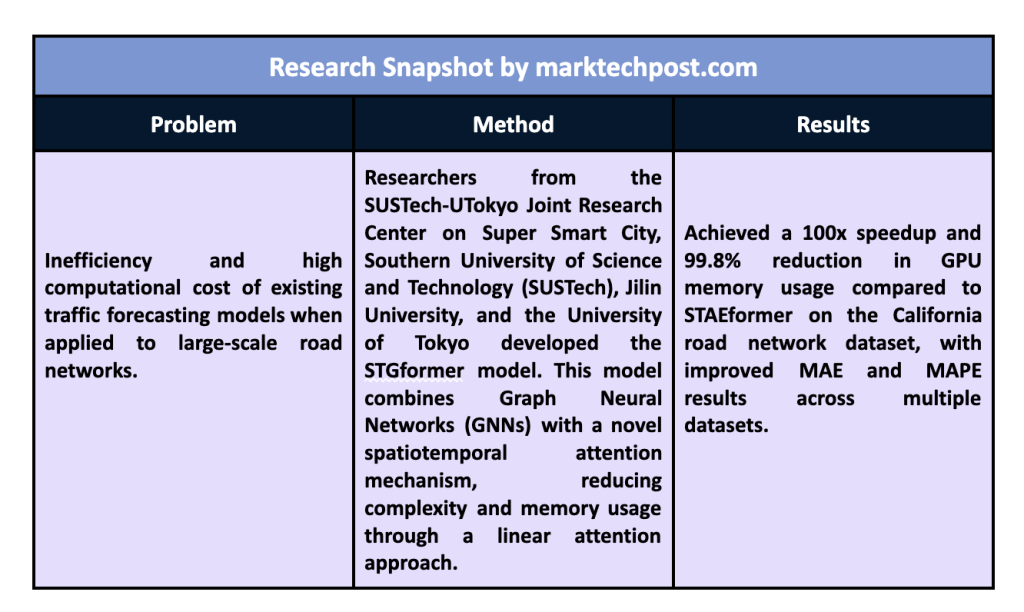

Traffic forecasting is a fundamental part of smart city management, essential for transportation planning and resource allocation. Advances in deep learning have made it possible to model the complex spatiotemporal patterns in traffic data effectively. Real-world applications, however, pose a distinct challenge: they are large-scale systems, typically spanning thousands of interconnected sensors distributed over wide geographic areas. Graph neural networks (GNNs) and Transformer-based architectures have been widely adopted for traffic forecasting because they capture spatial and temporal dependencies. As networks grow, however, the computational demands of these models rise steeply (full attention over all sensors scales quadratically with the number of sensors), which makes them difficult to apply to networks as extensive as the California highway system.

One of the most pressing problems with existing models is that they cannot handle large-scale road networks efficiently. Popular benchmarks such as the PEMS series and METR-LA contain relatively few nodes, which standard models handle comfortably. These datasets do not reflect the complexity of real-world traffic systems such as California's Caltrans Performance Measurement System, which comprises nearly 20,000 active sensors. The key challenge is to stay computationally efficient while modeling both local and global patterns in such a large network. Without an effective solution, the high memory usage and long computation times of current models continue to limit their scalability and practical deployment.
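
To see why scale matters, consider the memory needed for a single dense attention-score matrix, which grows with the square of the number of tokens attended over. The sketch below is illustrative arithmetic only: the node counts, the 12-step window, and the hidden size of 64 are hypothetical examples, and the function names are our own.

```python
# Illustrative arithmetic only: the sizes below are hypothetical examples.
BYTES_PER_FLOAT32 = 4


def dense_attention_bytes(num_tokens: int) -> int:
    """Bytes for one num_tokens x num_tokens float32 attention-score matrix
    (a single head for a single sample)."""
    return num_tokens * num_tokens * BYTES_PER_FLOAT32


def linear_attention_state_bytes(hidden_dim: int) -> int:
    """Bytes for the hidden_dim x hidden_dim key-value summary that kernelized
    linear attention keeps in place of the full score matrix."""
    return hidden_dim * hidden_dim * BYTES_PER_FLOAT32


num_steps = 12  # hypothetical input window of 12 time steps
for num_nodes in (200, 2_000, 20_000):
    spatial_mb = dense_attention_bytes(num_nodes) / 1e6
    joint_mb = dense_attention_bytes(num_steps * num_nodes) / 1e6
    print(f"N={num_nodes:>6}: spatial {spatial_mb:,.2f} MB, "
          f"joint space-time {joint_mb:,.2f} MB")
print(f"linear-attention state (d=64): "
      f"{linear_attention_state_bytes(64) / 1e6:.3f} MB")
```

For 20,000 sensors, one spatial score matrix already takes 1.6 GB, and attending jointly over 12 steps × 20,000 sensors would need about 230 GB per head per sample. Linear attention still stores per-token features, which grow linearly with the number of sensors, but it never materializes the quadratic matrix.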

Various approaches have combined GNN and Transformer-based models to exploit the strengths of both. Spatiotemporal attention-based methods such as STAEformer capture high-order spatiotemporal interactions by stacking multiple attention layers. While these models perform well on small and medium-sized datasets, their computational cost makes them impractical for large-scale networks. New architectures are therefore needed that balance model complexity against computational requirements while still producing accurate traffic predictions across diverse scenarios.

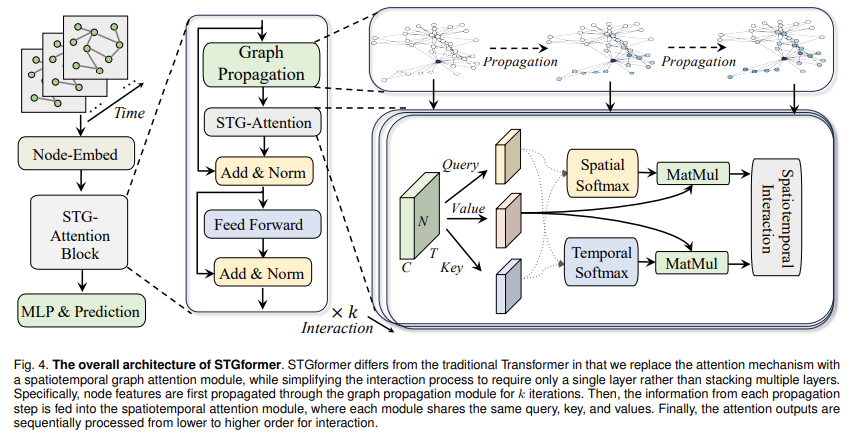

Researchers from the SUSTech-UTokyo Joint Research Center on Super Smart Cities, the Southern University of Science and Technology (SUSTech), Jilin University, and the University of Tokyo developed STGformer, a model that integrates spatiotemporal attention mechanisms within a graph structure to make traffic forecasting highly efficient. Its key innovation is an architecture that combines graph-based convolutions with Transformer-like attention blocks in a single layer. This integration retains the expressive power of Transformers while significantly reducing computational cost. Unlike traditional methods that require multiple attention layers, STGformer captures high-order spatiotemporal interactions in a single attention block. On the LargeST benchmark, this approach yields a 100x speedup and a 99.8% reduction in GPU memory usage compared with STAEformer.
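
The article does not spell out STGformer's layer equations. As a rough, hypothetical illustration of how graph-based propagation lets one layer see beyond immediate neighbors, the NumPy sketch below stacks GCN-style powers of a normalized adjacency matrix applied to traffic features. The function names, the `(T, N, d)` layout, and the normalization choice are assumptions for illustration, not the authors' implementation.

```python
import numpy as np


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """GCN-style symmetric normalization D^-1/2 (A + I) D^-1/2."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def multi_hop_features(x: np.ndarray, adj: np.ndarray, num_hops: int) -> np.ndarray:
    """Concatenate [X, A_norm X, A_norm^2 X, ...] along the feature axis.

    x:   (T, N, d) features for T time steps and N sensors
    adj: (N, N) binary sensor adjacency
    Returns an array of shape (T, N, d * (num_hops + 1)).
    """
    a_norm = normalized_adjacency(adj)
    hops, h = [x], x
    for _ in range(num_hops):
        # Propagate one more hop at every time step: h[t] <- a_norm @ h[t]
        h = np.einsum("ij,tjd->tid", a_norm, h)
        hops.append(h)
    return np.concatenate(hops, axis=-1)


# Toy example: 4 sensors on a line (0-1-2-3), 3 time steps, 2 features each.
adj = np.zeros((4, 4))
for i in range(3):
    adj[i, i + 1] = adj[i + 1, i] = 1.0
x = np.random.default_rng(0).standard_normal((3, 4, 2))
print(multi_hop_features(x, adj, num_hops=3).shape)  # (3, 4, 8)
```

With `num_hops=3`, each sensor's output includes information from sensors up to three hops away after a single call, which conveys the intuition behind capturing higher-order interactions without stacking many layers.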

The researchers implemented a spatiotemporal graph attention module that processes the spatial and temporal dimensions as a unified entity. The design reduces computational complexity through a linear attention mechanism, which replaces the standard softmax operation with an efficient weighting function. The method was evaluated on multiple large-scale datasets, including San Diego and the Bay Area, where STGformer outperformed state-of-the-art models. On the San Diego dataset, it achieved a 3.61% improvement in mean absolute error (MAE) and a 6.73% reduction in mean absolute percentage error (MAPE) compared with the previous best models. Similar trends on the other datasets highlight the model's robustness and adaptability across traffic scenarios.
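
The article does not specify STGformer's exact weighting function. The sketch below swaps in one well-known kernelized form of linear attention, using the feature map elu(x) + 1, to show why removing softmax helps: once similarity is a product of feature maps, a key-value summary can be computed once and reused for every query, so no N × N matrix is ever built. All function names are hypothetical.

```python
import numpy as np


def elu_plus_one(x: np.ndarray) -> np.ndarray:
    """Positive feature map phi(x) = elu(x) + 1 (x + 1 for x > 0, exp(x) otherwise)."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def softmax_attention(q, k, v):
    """Standard scaled dot-product attention: builds the full N x N weight matrix."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def linear_attention_quadratic(q, k, v):
    """Kernelized attention computed the naive way, via an explicit N x N matrix."""
    weights = elu_plus_one(q) @ elu_plus_one(k).T
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def linear_attention(q, k, v, eps=1e-6):
    """Same kernelized attention, reordered so no N x N matrix is formed."""
    phi_q, phi_k = elu_plus_one(q), elu_plus_one(k)
    kv = phi_k.T @ v                         # (d, d_v) summary of all keys/values
    normalizer = phi_q @ phi_k.sum(axis=0)   # (N,) row sums of phi_q @ phi_k.T
    return (phi_q @ kv) / (normalizer[:, None] + eps)


rng = np.random.default_rng(0)
q, k, v = rng.standard_normal((3, 500, 16))
print(np.abs(linear_attention(q, k, v) - linear_attention_quadratic(q, k, v)).max())
```

`linear_attention` and `linear_attention_quadratic` compute the same result up to floating-point error, but the first runs in time linear in the number of tokens and never stores an N × N matrix. `softmax_attention` is included only for comparison and generally produces different values.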

The STGformer architecture marks a significant step in traffic forecasting by making it feasible to deploy models on real-world, large-scale traffic networks without compromising performance or efficiency. On the California highway network, the model completed batch inference 100 times faster than STAEformer while using only 0.2% of the memory. These gains make STGformer a suitable foundation for future research on spatiotemporal modeling. Its generalization was further validated through multi-year testing, in which the model maintained high accuracy on unseen data from the following year.
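
The article only states that the model was tested on unseen data from the following year. A common way to set up such a test (an assumption here, not a detail from the paper) is to train on one calendar year and evaluate on the next, fitting normalization statistics on the training year only. The function name, array layout, and z-score normalization below are hypothetical choices.

```python
import numpy as np


def year_split(timestamps, readings, train_year, test_year):
    """Hypothetical cross-year protocol: fit on one year, evaluate on a later one.

    timestamps: (T,) array of numpy datetime64 values
    readings:   (T, N) array of sensor readings (e.g. flow) for N sensors
    """
    if test_year <= train_year:
        raise ValueError("test_year must be later than train_year")
    # datetime64[Y] counts years since 1970
    years = timestamps.astype("datetime64[Y]").astype(int) + 1970
    train = readings[years == train_year]
    test = readings[years == test_year]
    # Normalization statistics come from the training year only, so nothing
    # about the test year leaks into preprocessing.
    mean, std = train.mean(), train.std() + 1e-8
    return (train - mean) / std, (test - mean) / std, (mean, std)


ts = np.array(["2019-06-01T00:00", "2019-06-01T00:05", "2020-06-01T00:00"],
              dtype="datetime64[m]")
readings = np.array([[1.0, 3.0], [1.0, 3.0], [10.0, 10.0]])
train_z, test_z, stats = year_split(ts, readings, 2019, 2020)
print(train_z.shape, test_z.shape, stats)
```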

Key research findings:

- Computational efficiency: Compared with STAEformer, STGformer achieves a 100x speedup and a 99.8% reduction in GPU memory usage.

- Scalability: The model can handle real-world networks with up to 20,000 sensors, overcoming the limitations of existing models that fail in large-scale deployments.

- Performance gains: It achieved a 3.61% improvement in MAE and a 6.73% reduction in MAPE on the San Diego dataset, outperforming state-of-the-art models.

- Generalization ability: It performed strongly across datasets and maintained accuracy in year-on-year testing, showing that it adapts to changing traffic conditions.

- Novel architecture: Integrating spatiotemporal graph attention with linear attention mechanisms allows STGformer to capture local and global traffic patterns efficiently.
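
For readers less familiar with the metrics in these findings, the sketch below computes MAE, MAPE, and a relative improvement over a baseline. It assumes the reported percentages are relative reductions of each metric versus the previous best model; the paper's exact masking and averaging rules may differ, and the function names are illustrative.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(y_pred - y_true)))


def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.

    Many traffic-forecasting codebases mask out zero ground truth; this sketch
    approximates that by skipping entries with |y_true| <= eps.
    """
    mask = np.abs(y_true) > eps
    return float(np.mean(np.abs((y_pred[mask] - y_true[mask]) / y_true[mask])) * 100)


def relative_improvement(baseline, new):
    """Percentage reduction of an error metric relative to a baseline."""
    return (baseline - new) / baseline * 100


y_true = np.array([10.0, 20.0, 0.0])
y_pred = np.array([12.0, 18.0, 1.0])
print(mae(y_true, y_pred), mape(y_true, y_pred), relative_improvement(20.0, 19.0))
```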

In conclusion, the STGformer model presented by the research team offers a highly efficient and scalable solution for traffic forecasting on large-scale road networks. By addressing the limitations of existing GNN and Transformer-based methods, it enables more effective resource allocation and transportation planning in smart city management. Its ability to handle high-dimensional spatiotemporal data with minimal computational resources makes it a strong candidate for real-world traffic forecasting applications. The results across multiple datasets and benchmarks underscore its potential to become a standard tool in urban computing.

Check out the Paper. All credit for this research goes to the researchers of this project.

Aswin AK is a Consulting Intern at MarkTechPost. He is pursuing a dual degree at the Indian Institute of Technology Kharagpur. He is passionate about data science and machine learning and brings a strong academic background and practical experience in solving real-life interdisciplinary challenges.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>