The processing requirements of LLMs raise considerable challenges, particularly for real-time uses where fast response times are vital. Processing every prompt from scratch is slow, inefficient, and resource-intensive. AI service providers address this with prompt caching, which stores repeated queries so that they can be answered without recomputation, improving efficiency and reducing latency. However, while caching accelerates responses, it also introduces security risks. Researchers have studied how caching behavior in LLM APIs could unintentionally reveal confidential information. They found that user prompts and proprietary details about models could be leaked through timing-based side-channel attacks that exploit the caching policies of commercial LLM APIs.
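
To make the latency benefit concrete, here is a minimal sketch of an exact-match prompt cache. It is a toy built on assumptions: `PromptCache`, `expensive_generate`, and the 0.5 s and 0.1 s latencies are hypothetical (the figures echo those reported for one API later in the article), and latency is returned as a number rather than measured. Production prompt caches typically reuse the model's internal state for a shared prompt prefix instead of storing whole responses.

```python
from dataclasses import dataclass, field

MISS_LATENCY_S = 0.5  # hypothetical cost of processing a prompt from scratch
HIT_LATENCY_S = 0.1   # hypothetical cost of serving a cached result


def expensive_generate(prompt: str) -> str:
    """Hypothetical stand-in for running the model on a prompt."""
    return prompt.upper()


@dataclass
class PromptCache:
    """Toy exact-match cache: the prompt text itself is the key."""
    store: dict[str, str] = field(default_factory=dict)

    def serve(self, prompt: str) -> tuple[str, float]:
        """Return (response, simulated latency in seconds)."""
        if prompt in self.store:
            return self.store[prompt], HIT_LATENCY_S  # cache hit: skip the model
        response = expensive_generate(prompt)          # cache miss: do the work
        self.store[prompt] = response
        return response, MISS_LATENCY_S


cache = PromptCache()
print(cache.serve("summarize this contract"))  # ('SUMMARIZE THIS CONTRACT', 0.5)
print(cache.serve("summarize this contract"))  # ('SUMMARIZE THIS CONTRACT', 0.1)
```

The gap between the two latencies is exactly what the attacks described below measure.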

One of the key risks of prompt caching is its potential to reveal information about previous users' prompts. If cached prompts are shared among multiple users, an attacker could determine whether someone recently sent a similar prompt based on differences in response time. The risk becomes even greater with global caching, where one user's prompt can lead to a faster response for another user who sends a related query. By analyzing response-time variations, the researchers demonstrated how this vulnerability could allow attackers to uncover confidential business data, personal information, and proprietary prompts.
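
The attack can be sketched as a toy simulation. It assumes a single cache shared by all users, simulated (not measured) latencies with a little noise, and a fixed decision threshold; `SharedCache`, `probe`, the 0.3 s threshold, and the example prompts are all hypothetical. The attacker submits candidate prompts and flags those that come back fast as likely sent earlier by someone else.

```python
import random

HIT_S, MISS_S = 0.1, 0.5   # hypothetical mean latencies for hits and misses
THRESHOLD_S = 0.3          # hypothetical boundary between "fast" and "slow"


class SharedCache:
    """Toy global cache: every user's prompts land in the same store."""

    def __init__(self, seed: int = 0):
        self.seen: set[str] = set()
        self.rng = random.Random(seed)

    def request(self, user: str, prompt: str) -> float:
        """Serve a prompt and return a simulated, slightly noisy latency.
        Note that `user` is ignored: that is what makes the cache global."""
        base = HIT_S if prompt in self.seen else MISS_S
        self.seen.add(prompt)
        return base + self.rng.uniform(-0.05, 0.05)


def probe(cache: SharedCache, attacker: str, candidates: list[str]) -> list[str]:
    """Return the candidates served fast, i.e. likely cached by another user."""
    return [p for p in candidates if cache.request(attacker, p) < THRESHOLD_S]


cache = SharedCache()
cache.request("victim", "Draft a merger offer for Acme Corp")
guesses = ["Draft a merger offer for Acme Corp", "Draft a merger offer for Globex"]
print(probe(cache, "attacker", guesses))  # ['Draft a merger offer for Acme Corp']
```

Because probing sends the candidate prompts, it also inserts them into the shared cache, so in this toy each guess can only be tested once.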

Different AI service providers cache in different ways, but their caching policies are not necessarily transparent to users. Some restrict caching to individual users, so that cached prompts are available only to the user who submitted them and no data is shared between accounts. Others implement per-organization caching, so that multiple users within a company or organization can share cached prompts. While this is more efficient, it also risks leaking confidential information if some users have special access privileges. The most serious security risk comes from global caching, in which cached prompts are shared across all users of an API service. As a result, an attacker can exploit differences in response time to determine which prompts were previously submitted. The researchers found that most AI providers are not transparent about their caching policies, so users remain unaware of the security risks that accompany their queries.
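
The three sharing policies differ only in what goes into the cache key. A sketch, assuming entries are keyed on a hash of a namespace plus the prompt; `Scope`, `cache_key`, and `shares_cache` are hypothetical names, not any provider's API.

```python
import hashlib
from enum import Enum


class Scope(Enum):
    PER_USER = "per-user"
    PER_ORG = "per-organization"
    GLOBAL = "global"


def cache_key(scope: Scope, user: str, org: str, prompt: str) -> str:
    """Derive a cache key; only requests with equal keys can share an entry."""
    if scope is Scope.PER_USER:
        namespace = f"user:{user}"
    elif scope is Scope.PER_ORG:
        namespace = f"org:{org}"
    else:
        namespace = "global"  # every account maps to the same namespace
    return hashlib.sha256(f"{namespace}\x00{prompt}".encode()).hexdigest()


def shares_cache(scope: Scope, a: tuple[str, str], b: tuple[str, str], prompt: str) -> bool:
    """Would user a's cached prompt produce a hit for user b? a and b are (user, org)."""
    return cache_key(scope, *a, prompt) == cache_key(scope, *b, prompt)


alice, bob, eve = ("alice", "acme"), ("bob", "acme"), ("eve", "other-co")
for scope in Scope:
    # columns: same organization, different organization
    print(scope.value, shares_cache(scope, alice, bob, "q"), shares_cache(scope, alice, eve, "q"))
```

This prints `False False` for per-user, `True False` for per-organization, and `True True` for global caching: only the global policy lets an unrelated account observe another's cache hits.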

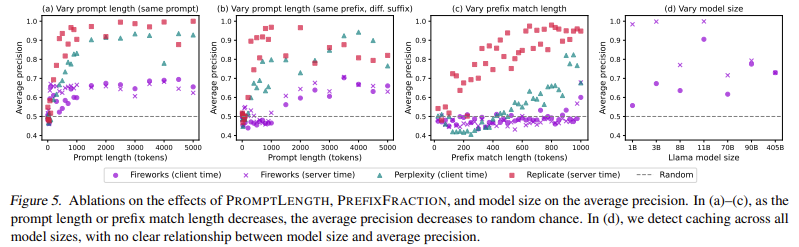

To investigate these problems, a research team from Stanford University developed an auditing framework capable of detecting prompt caching at different access levels. Their method involved sending controlled sequences of prompts to various AI APIs and measuring variations in response time. If a prompt was cached, the response would be noticeably faster when it was sent again. They formulated statistical hypothesis tests to confirm whether caching was occurring and to determine whether cache sharing extended beyond individual users. By systematically varying prompt lengths, prefix similarities, and repetition frequencies, the researchers identified patterns that indicate caching. The audit involved testing 17 commercial APIs, including those provided by OpenAI, Anthropic, DeepSeek, Fireworks AI, and others. Their tests focused on detecting whether caching was implemented and whether it was limited to a single user or shared across a broader group.
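
A hit-versus-miss audit can be sketched against a simulated API. The article does not say which statistical test the researchers used, so this sketch swaps in a one-sided permutation test on mean latency; `SimulatedAPI`, `collect`, `permutation_pvalue`, the Gaussian latency model, and the sample sizes are all hypothetical.

```python
import random


class SimulatedAPI:
    """Hypothetical API stand-in; latencies are simulated, not measured over a network."""

    def __init__(self, caching: bool, seed: int = 0):
        self.caching = caching
        self.seen: set[str] = set()
        self.rng = random.Random(seed)

    def latency(self, prompt: str) -> float:
        """Serve a prompt and return a noisy latency in seconds."""
        hit = self.caching and prompt in self.seen
        self.seen.add(prompt)
        return max(0.0, self.rng.gauss(0.1 if hit else 0.5, 0.05))


def random_prompt(rng: random.Random, n_words: int = 20) -> str:
    """A prompt that has almost certainly never been sent, so it should miss."""
    return " ".join(str(rng.getrandbits(32)) for _ in range(n_words))


def collect(api: SimulatedAPI, rng: random.Random, n: int) -> tuple[list[float], list[float]]:
    """Hit procedure: send a prompt, then time a repeat. Miss procedure: time a fresh prompt."""
    hits, misses = [], []
    for _ in range(n):
        prompt = random_prompt(rng)
        api.latency(prompt)                             # first send warms the cache, if any
        hits.append(api.latency(prompt))                # repeat: a hit if caching is enabled
        misses.append(api.latency(random_prompt(rng)))  # baseline: guaranteed miss
    return hits, misses


def mean(xs: list[float]) -> float:
    return sum(xs) / len(xs)


def permutation_pvalue(hits: list[float], misses: list[float], n_perm: int = 10_000, seed: int = 0) -> float:
    """One-sided permutation test.
    H0: hit and miss latencies come from the same distribution.
    H1: hit-procedure latencies are smaller (caching is happening).
    """
    rng = random.Random(seed)
    observed = mean(misses) - mean(hits)
    pooled = hits + misses  # a new list, so shuffling leaves the inputs intact
    k = len(hits)
    at_least_as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if mean(pooled[k:]) - mean(pooled[:k]) >= observed:
            at_least_as_extreme += 1
    return (at_least_as_extreme + 1) / (n_perm + 1)


rng = random.Random(42)
for caching in (True, False):
    hits, misses = collect(SimulatedAPI(caching=caching, seed=1), rng, n=25)
    print(f"caching={caching}: p = {permutation_pvalue(hits, misses, n_perm=2_000):.4g}")
```

One design caveat: the smallest p-value a permutation test can return is 1 / (n_perm + 1), so p-values on the order of 10⁻⁸ would need vastly more permutations or an analytic test.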

The audit procedure consisted of two main tests: one measuring response times for cache hits and another for cache misses. In the cache-hit test, the same prompt was sent several times to observe whether the response speed improved after the first request. In the cache-miss test, randomly generated prompts were used to establish a baseline of response times without caching. Statistical analysis of these response times provided clear evidence of caching in several APIs. The researchers identified caching behavior in 8 of the 17 API providers. More critically, they found that 7 of these providers shared caches globally, which means that any user could infer another user's usage patterns from response speed. Their findings also revealed a previously unknown architectural detail about OpenAI's text-embedding-3-small model: its prompt-caching behavior indicated that it follows a decoder-only Transformer architecture, information that had not been publicly disclosed.
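
Why caching behavior can hint at a decoder-only architecture is easiest to see with a toy model of attention. In a causal (decoder-only) Transformer, the state at position i depends only on tokens 0..i, so states computed for a shared prefix stay valid even when later tokens differ. In a bidirectional encoder, every position sees the whole input, so changing any token changes every state. This is an illustration of that reasoning, not the authors' code; the symbolic "states" and the helper names are hypothetical stand-ins for real activations.

```python
def causal_states(tokens: list[str]) -> list[tuple[str, ...]]:
    """Position i sees only tokens[:i+1], as with a causal attention mask."""
    return [tuple(tokens[: i + 1]) for i in range(len(tokens))]


def bidirectional_states(tokens: list[str]) -> list[tuple[str, tuple[str, ...]]]:
    """Position i sees the whole sequence, as in an encoder without a mask."""
    whole = tuple(tokens)
    return [(tok, whole) for tok in tokens]


def reusable_prefix(states_a: list, states_b: list) -> int:
    """Number of leading positions whose cached state could be reused for b."""
    n = 0
    for sa, sb in zip(states_a, states_b):
        if sa != sb:
            break
        n += 1
    return n


cached = "the quarterly report shows strong growth".split()
new = "the quarterly report shows weak growth".split()
print(reusable_prefix(causal_states(cached), causal_states(new)))                # 4
print(reusable_prefix(bidirectional_states(cached), bidirectional_states(new)))  # 0
```

So if a prompt that shares only a prefix with an earlier one is served measurably faster, that observation is consistent with a causal model and hard to explain for a bidirectional encoder.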

Comparing the performance of cached and non-cached prompts revealed striking differences in response times. For example, for OpenAI's text-embedding-3-small, the average response time for a cache hit was approximately 0.1 seconds, while cache misses resulted in delays of up to 0.5 seconds. The researchers determined that cache-sharing vulnerabilities could allow attackers to distinguish cached from non-cached prompts with near-perfect precision. Their statistical tests produced highly significant p-values, often below 10⁻⁸, providing strong evidence of caching behavior. Moreover, they found that in many cases a single repeated request was enough to trigger caching, whereas OpenAI and Azure required up to 25 consecutive requests before caching behavior became evident. These findings suggest that API providers may use distributed caching systems in which prompts are not immediately cached on all servers but become cached after repeated use.
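
The distributed-caching explanation can be explored with a small simulation. It assumes a load balancer that routes each request to one of N replicas uniformly at random, with each replica caching only the prompts it has served itself; `ReplicatedService`, the replica counts, and the routing rule are hypothetical and are not a description of any provider's infrastructure.

```python
import random


class ReplicatedService:
    """Hypothetical deployment: a load balancer picks a replica uniformly at random,
    and each replica caches only the prompts it has served itself."""

    def __init__(self, n_replicas: int, seed: int = 0):
        self.caches: list[set[str]] = [set() for _ in range(n_replicas)]
        self.rng = random.Random(seed)

    def request(self, prompt: str) -> bool:
        """Route one request; return True if the chosen replica already had the prompt."""
        cache = self.rng.choice(self.caches)
        hit = prompt in cache
        cache.add(prompt)
        return hit


def requests_until_first_hit(service: ReplicatedService, prompt: str) -> int:
    """Resend one prompt until a hit is observed. Each miss warms a new replica,
    so by the pigeonhole principle this ends within n_replicas + 1 requests."""
    count = 1
    while not service.request(prompt):
        count += 1
    return count


for n in (1, 8, 32):
    svc = ReplicatedService(n_replicas=n, seed=3)
    trials = [requests_until_first_hit(svc, f"prompt-{t}") for t in range(500)]
    print(f"{n:>2} replicas: mean {sum(trials) / len(trials):.1f}, "
          f"max {max(trials)} requests to first hit")
```

With one replica the second request always hits; with more replicas a prompt needs several repetitions before any hit appears, which is qualitatively consistent with the delayed caching reported for some providers. The toy makes no attempt to reproduce the reported figure of up to 25 requests.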

The key takeaways from the research include the following:

- Prompt caching speeds up responses by storing previously processed prompts, but it can expose confidential information when caches are shared across multiple users.

- Global caching was detected in 7 of the 17 API providers, allowing attackers to infer prompts used by other users through timing variations.

- Some API providers do not publicly disclose their caching policies, which means users may not know that their prompts are cached and can be detected by others.

- The study identified response-time discrepancies, with cache hits averaging 0.1 seconds and cache misses reaching 0.5 seconds, providing measurable evidence of caching.

- The statistical auditing framework detected caching with high precision, with p-values often falling below 10⁻⁸, confirming the presence of systematic caching across multiple providers.

- OpenAI's text-embedding-3-small model was revealed to be a decoder-only Transformer, a previously undisclosed detail inferred from its caching behavior.

- Some API providers fixed the vulnerabilities after disclosure, but others have yet to address the issue, indicating the need for stricter industry standards.

- Mitigation strategies include restricting caching to individual users, randomizing response delays to prevent timing inference, and providing greater transparency about caching policies.
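
Two of these mitigations can be sketched together: cache entries scoped to the requesting user, and a random delay added to every response. `MitigatedCache`, the base latencies, and the 0.4 s jitter bound are hypothetical choices. Note that random jitter only blurs the timing signal and can be averaged away with repeated measurements, which is why per-user isolation is the stronger of the two controls.

```python
import random

HIT_S, MISS_S = 0.1, 0.5  # hypothetical base latencies


class MitigatedCache:
    """Hypothetical cache with two mitigations: per-user keys and randomized delay."""

    def __init__(self, max_jitter_s: float = 0.4, seed: int = 0):
        self.entries: set[tuple[str, str]] = set()
        self.max_jitter_s = max_jitter_s
        self.rng = random.Random(seed)

    def request(self, user: str, prompt: str) -> float:
        """Serve a prompt and return a simulated latency in seconds."""
        key = (user, prompt)  # user-scoped key: no cross-user hits are possible
        base = HIT_S if key in self.entries else MISS_S
        self.entries.add(key)
        return base + self.rng.uniform(0.0, self.max_jitter_s)  # randomized extra delay


cache = MitigatedCache(seed=7)
cache.request("victim", "confidential prompt")
attacker_latency = cache.request("attacker", "confidential prompt")
print(attacker_latency >= MISS_S)  # True: the attacker's probe is always a miss
```

The victim still benefits from caching on repeat requests, while the attacker's probe of the same prompt can never hit the victim's entry.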

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project. Also, feel free to follow us on [Twitter](https://x.com/intent/follow?screen_name=marktechpost) and don't forget to join our 80k+ ML SubReddit.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
