Stability AI, a leading AI startup, has once again pushed the boundaries of generative AI models with the launch of Stable Diffusion XL 1.0. This state-of-the-art text-to-image model promises to revolutionize image generation with its vibrant colors, stunning contrast, and impressive lighting. But amidst the excitement, ethical concerns loom as the model’s open-source nature raises questions about potential misuse. Let’s dive into the world of Stable Diffusion XL 1.0, exploring its features, capabilities, and the steps Stability AI is taking to safeguard against harmful content generation.

Meet Stable Diffusion XL 1.0: A Leap Forward

Stability AI is making waves in the AI world again with the release of Stable Diffusion XL 1.0, which the company touts as its most sophisticated model to date. With 3.5 billion parameters, the model can generate full 1-megapixel images in a matter of seconds, and it supports multiple aspect ratios.
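The "1 megapixel at multiple aspect ratios" claim is easy to make concrete with a little arithmetic: keep the pixel count roughly fixed and trade width against height. The sketch below is illustrative only. It assumes a pixel budget of 1024 × 1024 and that both sides are rounded to multiples of 64 (an assumption about typical latent-diffusion size constraints, not a documented SDXL rule), and `dims_for_aspect` is a hypothetical helper, not part of any Stability AI tool.

```python
import math

# Assumed ~1-megapixel budget (1024 x 1024 pixels); not an official SDXL constant.
TARGET_PIXELS = 1024 * 1024


def dims_for_aspect(ratio_w, ratio_h, target=TARGET_PIXELS, multiple=64):
    """Hypothetical helper: return (width, height) close to `target` pixels
    for the aspect ratio ratio_w:ratio_h, with each side rounded to the
    nearest multiple of `multiple` (an assumed size constraint)."""
    aspect = ratio_w / ratio_h
    # Solve width * height = target with width = aspect * height.
    height = math.sqrt(target / aspect)
    width = height * aspect
    # Round each side to the nearest allowed multiple (at least one step).
    w = max(multiple, round(width / multiple) * multiple)
    h = max(multiple, round(height / multiple) * multiple)
    return w, h


if __name__ == "__main__":
    for rw, rh in [(1, 1), (16, 9), (4, 3)]:
        w, h = dims_for_aspect(rw, rh)
        print(f"{rw}:{rh} -> {w}x{h} ({w * h / 1e6:.2f} MP)")
```

Under these assumptions, 1:1 gives 1024 × 1024, 16:9 gives 1344 × 768, and 4:3 gives 1152 × 896; all stay within a few percent of the same pixel budget.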

Power and Versatility in Image Generation

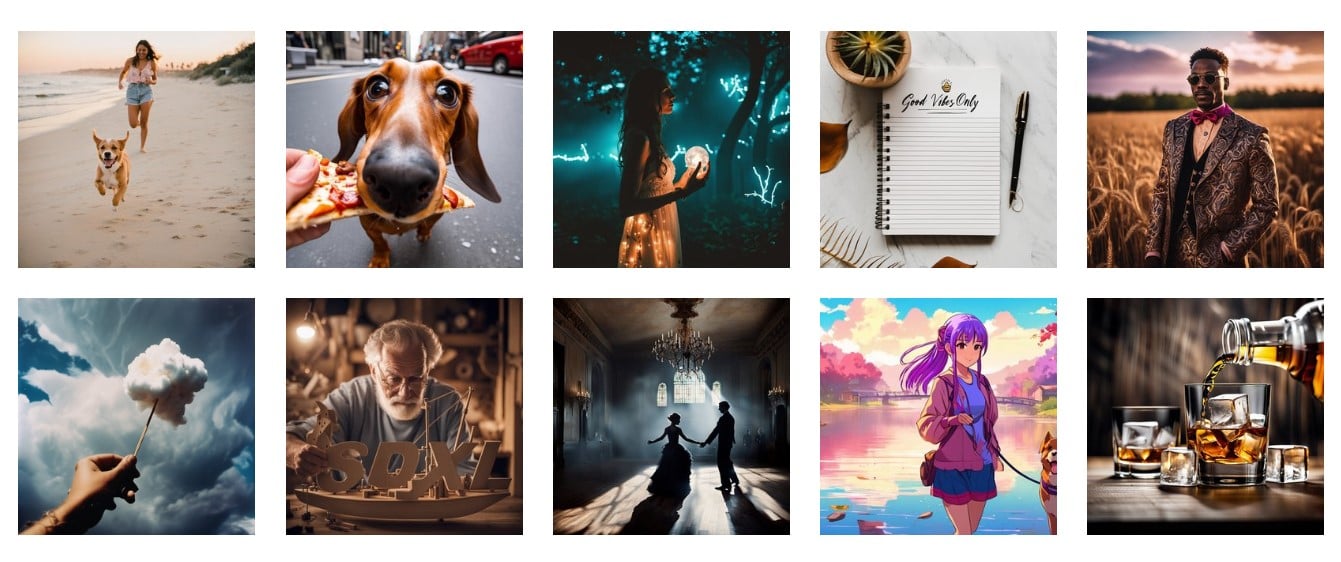

Stable Diffusion XL 1.0 boasts significant improvements over its predecessor in color accuracy, contrast, shadows, and lighting, giving its images more vibrant visual appeal. Stability AI has also made the model easier to fine-tune for specific concepts and styles, which users can then invoke through natural-language prompts.

The Art of Text Rendering and Legibility

Stable Diffusion XL 1.0 stands out among text-to-image models for its ability to render legible text inside images. Many AI models struggle to produce images containing readable logos, calligraphy, or fonts, but Stable Diffusion XL 1.0 renders such text far more reliably. This opens new doors for creative expression and design.

The Ethical Challenge: Potential Misuse and Harmful Content

As an open-source model, Stable Diffusion XL 1.0 holds immense potential for innovation and creativity. However, this openness also raises ethical concerns, since malicious actors can use it to generate toxic or harmful content, including nonconsensual deepfakes. Stability AI acknowledges the possibility of abuse and that the model contains certain biases.

Safeguarding Against Harmful Content Generation

Stability AI is taking several measures to limit harmful content generation with Stable Diffusion XL 1.0. The company filters the model’s training data for unsafe imagery, issues warnings for problematic prompts, and blocks problematic terms in the tool to minimize risk. It also honors artists’ requests to be removed from the training data, working with the startup Spawning to process opt-out requests.

Our Say

Stable Diffusion XL 1.0 represents a significant advancement in AI image generation. Stability AI’s commitment to innovation and collaboration is evident in the model’s capabilities and in its partnership with AWS. However, ethical considerations must remain at the forefront of AI development. As the AI community continues to explore what Stable Diffusion XL 1.0 can do, it is crucial to strike a balance between enabling creative expression and preventing harmful content. By working together, we can harness the power of AI for positive advancements while safeguarding against potential misuse.