Research into language models has advanced rapidly, focusing on improving how models understand and process language, particularly in specialized fields such as finance. Large language models (LLMs) have moved beyond basic classification tasks to become powerful tools capable of retrieving and generating complex knowledge. These models draw on large data sets and advanced algorithms to provide insights and predictions. In finance, where the volume of data is immense and requires accurate interpretation, LLMs are crucial for analyzing market trends, predicting outcomes, and providing decision support.

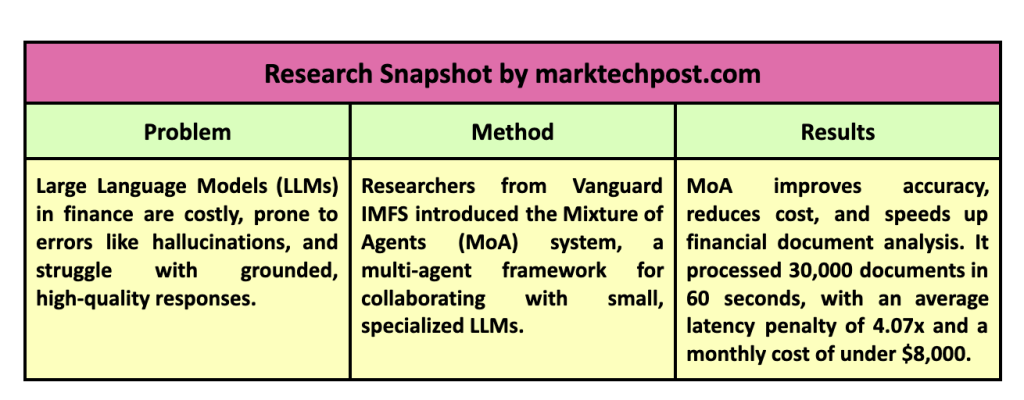

One of the main problems facing researchers in the field of LLMs is balancing cost-effectiveness with performance. LLMs are computationally expensive, and as they process larger data sets, the risk of producing inaccurate or misleading information increases, especially in fields such as finance, where incorrect predictions can lead to significant losses. Traditional approaches rely heavily on a single dense transformer model, which, while powerful, often struggles with hallucinations, where the model outputs incorrect or irrelevant information. Large financial applications that require fast, accurate, and cost-effective models amplify this problem.

Researchers have explored several methods to address these challenges, including ensemble models, which involve multiple LLMs working together to improve the accuracy of results. Ensemble models have been successful in reducing errors and improving generalization, especially when dealing with new information not included in the training data. However, the downside of these systems is their cost and slow processing speed, as running multiple models in parallel or in sequence requires significant computational power. The financial sector, which handles massive amounts of data, often finds these solutions impractical due to high operating costs and time constraints.

Researchers from the Vanguard IMFS (Investment Management FinTech Strategies) team presented a new framework called Mixture of Agents (MoA) to overcome the limitations of traditional ensemble methods. MoA is an advanced multi-agent system specifically designed for Retrieval-Augmented Generation (RAG) tasks. Unlike previous models, MoA uses a collection of small, specialized models that work together in a highly coordinated manner to answer complex questions with greater accuracy and lower costs. This collaborative network of agents mirrors the structure of a research team, with each agent having its own expertise and knowledge base, allowing the system to perform better across multiple financial domains.

The MoA system comprises multiple specialized agents, each acting as a “junior researcher” with a specific focus, such as sentiment analysis, financial metrics, or mathematical calculations. For example, the system includes agents such as the “10-K/Q Math Agent,” a fine-tuned GPT-4 model designed to handle accounting and financial figures, and the “10-K/Q Sentiment Agent,” a Llama-2 model trained to analyze sentiment in equity markets. Each agent has access to different data sources, including databases, APIs, and external documents, allowing it to process highly specific information quickly and efficiently. This specialization allows the MoA framework to outperform traditional single-model systems in speed and accuracy while keeping operational costs low.
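The idea of a layer of specialized agents feeding one combined answer can be sketched as follows. This is only an illustrative sketch, not the Vanguard team's implementation: the `Agent` class, `run_layer`, `aggregate`, and the stub answer functions are all hypothetical names, and the lambdas stand in for calls to real fine-tuned models with their own data sources.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Hypothetical specialized agent: a name, a focus area, and an answer function."""
    name: str
    focus: str
    answer: Callable[[str], str]  # stand-in for a call to a small fine-tuned model


def run_layer(agents: List[Agent], question: str) -> Dict[str, str]:
    """Ask every agent in the layer the same question and collect the answers by name."""
    return {agent.name: agent.answer(question) for agent in agents}


def aggregate(question: str, answers: Dict[str, str]) -> str:
    """Combine the agents' answers into one text block for a final aggregator step."""
    lines = [f"Question: {question}"]
    for name, text in answers.items():
        lines.append(f"- {name}: {text}")
    return "\n".join(lines)


# Stub agents (assumption): real agents would query models and external data sources.
math_agent = Agent("10-K/Q Math Agent", "financial figures",
                   lambda q: "stub figures for: " + q)
sentiment_agent = Agent("10-K/Q Sentiment Agent", "sentiment",
                        lambda q: "stub sentiment for: " + q)

question = "How did revenue change?"
report = aggregate(question, run_layer([math_agent, sentiment_agent], question))
print(report)
```

Keeping each agent behind the same small interface is what makes it straightforward to add or remove agents without touching the aggregation step.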

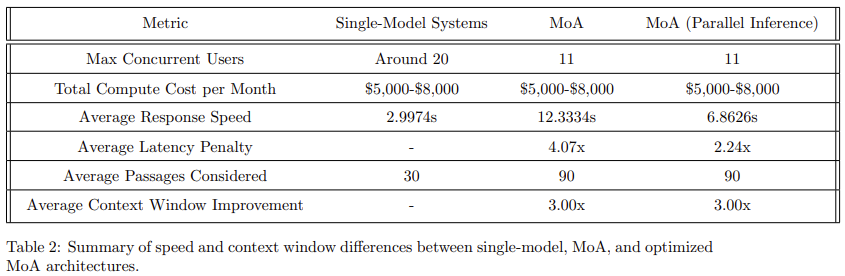

In terms of performance, the MoA system has shown significant improvements in response quality and efficiency compared to traditional single-model systems. During testing, the MoA system was able to analyze tens of thousands of financial documents in under 60 seconds using two layers of agents. Compared to a single-model system, these agents operate with a latency penalty of only 4.07x in serial inference or 2.24x when running in parallel. In one experiment, a baseline MoA system with two Mistral-7B agents was tested alongside single-model systems such as GPT-4 and Claude 3 Opus. The MoA system consistently provided more accurate and complete answers. For example, when asked about revenue growth in Apple’s Q1 2023 earnings report, the MoA agents captured 5 out of 7 key points, compared to 4 for Claude and just 2 for GPT-4. This demonstrates the system's ability to surface critical information more completely than the single-model baselines.
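To put the reported figures side by side, the short calculation below converts the key-point counts into coverage shares and compares the two latency multipliers. It uses only the numbers quoted above; the variable names are illustrative, and the "coverage share" is simply captured points divided by 7, not a metric defined by the researchers.

```python
# Key points captured out of 7 in the Apple Q1 2023 example, as reported above.
TOTAL_KEY_POINTS = 7
captured = {"MoA (two Mistral-7B agents)": 5, "Claude 3 Opus": 4, "GPT-4": 2}

# Coverage share = captured key points / total key points.
coverage = {system: hits / TOTAL_KEY_POINTS for system, hits in captured.items()}
for system, share in coverage.items():
    print(f"{system}: {share:.0%} of key points")

# Reported latency multipliers relative to a single-model system.
SERIAL_PENALTY = 4.07
PARALLEL_PENALTY = 2.24

# Fraction of the serial latency that is saved by running agents in parallel.
parallel_saving = 1 - PARALLEL_PENALTY / SERIAL_PENALTY
print(f"Parallel inference cuts latency by about {parallel_saving:.0%} relative to serial")
```

On these figures, the MoA system covers roughly 71% of the key points versus 57% and 29% for the single-model baselines, and parallel execution removes a little under half of the serial latency overhead.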

The cost-effectiveness of MoA makes it well suited for large-scale financial applications. Vanguard’s IMFS team reported that its MoA system operates at a total monthly cost of less than $8,000 while processing queries from a team of researchers. This is comparable to single-model systems, which cost between $5,000 and $8,000 per month but provide significantly lower throughput. The modular design of the MoA framework allows firms to scale their operations based on budget and needs, with the flexibility to add or remove agents as needed. As the system scales, it becomes increasingly efficient, saving time and computational resources.

In conclusion, the Mixture of Agents framework offers a powerful solution to improve the performance of large language models in finance. The researchers addressed critical issues such as scalability, cost, and response accuracy by leveraging a collaborative agent-based system. The MoA framework improves the speed and quality of information retrieval and offers significant cost savings compared to traditional ensemble methods. With its ability to process large amounts of data in a fraction of the time while maintaining high accuracy, MoA is well positioned to become a standard for enterprise-level applications in finance and beyond. This system represents a significant advancement in LLM technology, providing a scalable, cost-effective, and highly efficient method for handling complex financial data.

Take a look at the Paper. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.
