Three-dimensional (3D) models are widely used in fields such as animation, gaming, virtual reality, and product design. Creating them is a complex, time-consuming task that requires extensive knowledge and specialized software skills. Pre-built models are easily accessible from online databases, but customizing them to fit a specific artistic vision brings back the same complicated process: it still demands expertise in 3D modeling and 3D editing software. Recently, research has demonstrated the expressive power of neural field-based representations such as NeRF, which capture fine details and, because they are differentiable, support effective optimization schemes. As a result, their applicability has broadened to a range of editing tasks.

However, most research in this area has focused either on appearance-only manipulations, which alter the texture and style of an object, or on geometric editing through a mapping to an explicit mesh representation. These methods still require users to place control points on the mesh, and they do not allow adding new structures or significantly modifying the object's geometry.

To address these issues, the authors developed Vox-E, a new approach to voxel-based editing of 3D objects. An overview of the architecture is illustrated in the following figure.

The framework enables localized and flexible edits to objects, guided solely by text prompts, that can cover both geometric and appearance modifications. To achieve this, the authors exploit pretrained 2D diffusion models, which can modify images to match specific textual descriptions. Score Distillation Sampling (SDS), a loss previously adopted for unconditional text-driven 3D generation, is used here together with regularization techniques: the optimization in 3D space is regularized by coupling two volumetric fields. This gives the system the flexibility to follow the text guidance while preserving the geometry and appearance of the input.
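To make the SDS idea concrete, here is a minimal NumPy sketch of the SDS gradient with respect to a rendered image `x`: noise the image to a random timestep, ask a denoiser to predict the noise, and use the weighted residual `w(t) * (eps_hat - eps)` as the gradient, skipping the denoiser's Jacobian. The functions `sds_gradient` and `make_oracle_denoiser` are hypothetical illustrations, not the authors' code; the "oracle" denoiser stands in for a real text-conditioned diffusion model, and the renderer is assumed to be the identity, so the image itself is optimized.

```python
import numpy as np


def sds_gradient(x, eps_pred_fn, alpha_bar, weight, rng):
    """SDS gradient with respect to a rendered image x (renderer Jacobian omitted).

    eps_pred_fn(x_t, alpha_bar) is a hypothetical stand-in for a pretrained,
    text-conditioned diffusion denoiser that predicts the added noise.
    alpha_bar is the cumulative noise-schedule value of the sampled timestep.
    """
    eps = rng.standard_normal(x.shape)                             # sampled Gaussian noise
    x_t = np.sqrt(alpha_bar) * x + np.sqrt(1.0 - alpha_bar) * eps  # forward diffusion
    eps_hat = eps_pred_fn(x_t, alpha_bar)                          # denoiser's noise estimate
    # SDS drops the denoiser Jacobian: the gradient is the weighted noise residual.
    return weight * (eps_hat - eps)


def make_oracle_denoiser(x0):
    """Toy denoiser that 'knows' the target image x0 (illustration only)."""
    def eps_pred_fn(x_t, alpha_bar):
        return (x_t - np.sqrt(alpha_bar) * x0) / np.sqrt(1.0 - alpha_bar)
    return eps_pred_fn


rng = np.random.default_rng(0)
target = np.ones((4, 4))      # what the "prompt" asks for in this toy setup
x = np.zeros((4, 4))          # current rendering, optimized directly here
denoiser = make_oracle_denoiser(target)
for _ in range(200):
    alpha_bar = rng.uniform(0.2, 0.8)                        # random timestep
    g = sds_gradient(x, denoiser, alpha_bar, 1.0 - alpha_bar, rng)
    x -= 0.1 * g                                             # gradient-descent step
```

With this toy denoiser the residual reduces to a multiple of `x - target`, so repeated SDS steps pull the rendering toward what the denoiser "expects". In Vox-E the gradient would instead flow through a differentiable renderer into the voxel grid, and the regularization between the two volumetric fields keeps the result close to the input.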

Instead of a NeRF-style neural field, Vox-E relies on ReLU Fields, which are lighter than NeRF-based approaches because they do not use a neural network. A ReLU Field represents the scene as a voxel grid in which each voxel stores learned features. This explicit grid structure enables faster rendering and reconstruction, as well as tight volumetric coupling between the two fields that represent the 3D object before and after the desired edit. Vox-E achieves this coupling through a novel volumetric correlation loss on the density features.
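The two ingredients above can be sketched in a few lines of NumPy. A ReLU Field lookup trilinearly interpolates the raw grid values at a query point and only then applies a ReLU. For the coupling term, this sketch assumes the correlation loss is one minus the Pearson correlation between the density grids before and after the edit; that exact form is our assumption, and the helper names (`trilinear`, `relu_field_density`, `correlation_loss`) are hypothetical.

```python
import numpy as np


def trilinear(grid, pts):
    """Trilinearly interpolate a scalar (R, R, R) grid, R >= 2, at points in [0, 1]^3."""
    R = grid.shape[0]
    coords = np.clip(pts, 0.0, 1.0) * (R - 1)              # continuous voxel coordinates
    i0 = np.minimum(np.floor(coords).astype(int), R - 2)   # lower corner, keeps i0 + 1 in range
    f = coords - i0                                        # fractional offsets in [0, 1]
    out = np.zeros(len(pts))
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                w = ((f[:, 0] if dx else 1.0 - f[:, 0])
                     * (f[:, 1] if dy else 1.0 - f[:, 1])
                     * (f[:, 2] if dz else 1.0 - f[:, 2]))
                out += w * grid[i0[:, 0] + dx, i0[:, 1] + dy, i0[:, 2] + dz]
    return out


def relu_field_density(grid, pts):
    """ReLU Field lookup: interpolate raw grid values first, then apply ReLU."""
    return np.maximum(trilinear(grid, pts), 0.0)


def correlation_loss(density_in, density_edit, eps=1e-8):
    """Assumed form: 1 - Pearson correlation between two density grids.

    Near 0 when the grids are positively correlated, up to 2 when anti-correlated.
    """
    a = density_in.ravel() - density_in.mean()
    b = density_edit.ravel() - density_edit.mean()
    corr = (a @ b) / (np.sqrt((a @ a) * (b @ b)) + eps)
    return 1.0 - corr


rng = np.random.default_rng(0)
grid_in = rng.normal(size=(8, 8, 8))                     # density grid before the edit
grid_edit = grid_in + 0.3 * rng.normal(size=(8, 8, 8))   # perturbed "edited" grid
pts = rng.uniform(size=(5, 3))
sigma = relu_field_density(grid_in, pts)                 # non-negative densities
loss = correlation_loss(grid_in, grid_edit)              # small, since the grids are similar
```

A correlation-based term, unlike an L2 penalty, does not force the edited densities to match the originals value by value; it only rewards preserving their overall spatial pattern, which leaves room for the text-driven changes.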

To further refine the spatial extent of the edits, the authors exploit 2D cross-attention maps, which capture the regions associated with the target edit, and lift them into volumetric grids. The premise is that while the 2D internals of generative models are noisy when taken independently, unifying them into a single 3D representation distills their semantic knowledge more effectively. These 3D cross-attention grids then guide a binary volumetric segmentation algorithm that splits the reconstructed volume into edited and unedited regions. This lets the framework merge the features of the two volumetric grids and better preserve the areas that should not be affected by the textual edit.
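The final segment-and-merge step can be illustrated as follows, assuming a 3D cross-attention grid is already available (lifting the 2D maps into 3D is not shown). The paper's binary segmentation algorithm is more involved; here a simple min-max normalization plus threshold stands in for it, and `segment_edit_region` and `merge_grids` are hypothetical names. The merge keeps edited features inside the mask and the original features everywhere else.

```python
import numpy as np


def segment_edit_region(attn_grid, threshold=0.5):
    """Binary edit mask from a 3D cross-attention grid.

    Simplified stand-in for the paper's segmentation: min-max normalize, then threshold.
    """
    lo, hi = attn_grid.min(), attn_grid.max()
    norm = (attn_grid - lo) / (hi - lo + 1e-8)
    return norm >= threshold


def merge_grids(grid_in, grid_edit, mask):
    """Edited features inside the mask, original features everywhere else.

    grid_in, grid_edit: (R, R, R, C) feature grids; mask: (R, R, R) booleans.
    """
    return np.where(mask[..., None], grid_edit, grid_in)


rng = np.random.default_rng(0)
R, C = 6, 4
attn = rng.uniform(0.0, 0.2, size=(R, R, R))   # low, noisy background attention
attn[:2, :2, :2] += 1.0                        # strong attention on the region to edit
grid_in = rng.normal(size=(R, R, R, C))        # features of the input object
grid_edit = rng.normal(size=(R, R, R, C))      # features after text-guided optimization

mask = segment_edit_region(attn)
merged = merge_grids(grid_in, grid_edit, mask)
```

Because the merge is a per-voxel selection, any voxel outside the mask is copied unchanged from the input grid, which is what protects regions the prompt should not touch.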

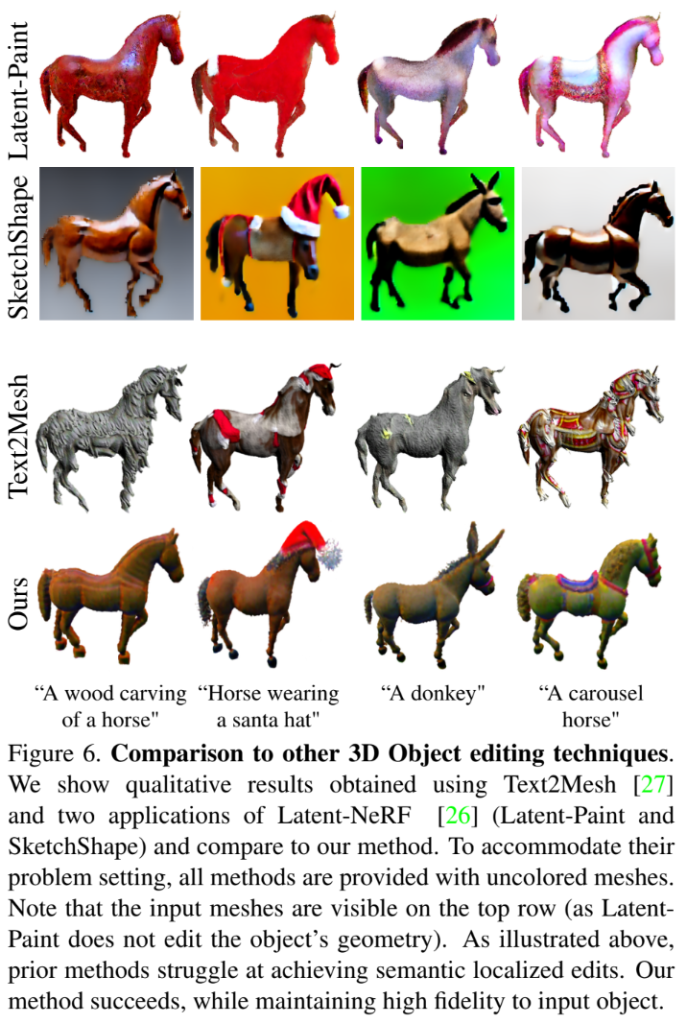

The results of this approach are compared with other state-of-the-art techniques. Some samples taken from the work are shown below.

This was a brief summary of Vox-E, an AI framework for text-guided voxel editing of 3D objects.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.