Video large language models (video LLMs) have emerged as powerful tools for processing video inputs and generating contextually relevant responses to user instructions. However, current approaches face significant challenges. The main problem is the high computational and labeling cost of supervised fine-tuning (SFT) on video datasets. Furthermore, existing video LLMs have two main shortcomings. First, they can process only a limited number of input frames, which makes it difficult to capture fine-grained spatial and temporal content throughout a video. Second, they lack a dedicated temporal modeling design and rely solely on the LLM to model motion patterns, without specialized video-processing components.

Researchers have tackled video understanding with several LLM-based approaches. Image LLMs such as Flamingo, BLIP-2, and LLaVA proved successful on vision-language tasks, while video LLMs such as Video-ChatGPT and Video-LLaVA extended these capabilities to video. However, these models often require expensive fine-tuning on large video datasets. Training-free methods such as FreeVA and IG-VLM emerged as cost-effective alternatives that reuse pre-trained image LLMs without additional fine-tuning. Despite promising results, these approaches still struggle to process longer videos and capture complex temporal dependencies, which limits their effectiveness on diverse video content.

Apple researchers present SlowFast-LLaVA (SF-LLaVA), a novel training-free video LLM that addresses these challenges with a SlowFast design inspired by the two-stream networks that proved successful for action recognition. This approach captures both detailed spatial semantics and long-range temporal context without requiring additional fine-tuning. The slow pathway extracts features at a low frame rate while keeping a higher spatial resolution, whereas the fast pathway operates at a high frame rate with aggressive spatial pooling. This dual-pathway design balances modeling capability and computational efficiency, letting the model process more video frames while preserving adequate detail. By combining complementary features (slowly changing visual semantics and rapidly changing motion dynamics), SF-LLaVA builds a more comprehensive understanding of videos and overcomes limitations of previous methods.

SlowFast-LLaVA (SF-LLaVA) introduces a SlowFast architecture for training-free video LLMs, inspired by two-stream networks for action recognition. The design captures both fine-grained spatial semantics and long-range temporal context without exceeding the token limits of common LLMs. The slow pathway processes high-resolution features at a low frame rate (e.g., 8 frames with 24×24 tokens each) to capture spatial details. The fast pathway, in contrast, handles low-resolution features at a high frame rate (e.g., 64 frames with 4×4 tokens each) to model broader temporal context. This dual-pathway approach preserves both spatial and temporal information and aggregates them into a single, rich representation for video understanding, again without additional fine-tuning.
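To make the token budget concrete, here is a minimal NumPy sketch of the two pathways using the example numbers above. It assumes per-frame features arrive as a `(frames, 24, 24, dim)` array from an image encoder, that the slow pathway samples frames uniformly, and that the fast pathway uses plain average pooling. The function name `slowfast_tokens` and these operator choices are illustrative assumptions, not SF-LLaVA's released implementation, whose exact frame counts and pooling may differ.

```python
import numpy as np


def slowfast_tokens(frame_feats: np.ndarray, num_slow: int = 8, fast_grid: int = 4) -> np.ndarray:
    """Hypothetical SlowFast aggregation.

    frame_feats: (T, H, W, D) per-frame patch features from an image encoder.
    Returns a (num_slow*H*W + T*fast_grid*fast_grid, D) token sequence.
    """
    t, h, w, d = frame_feats.shape

    # Slow pathway: a few uniformly spaced frames kept at full spatial resolution.
    slow_idx = np.linspace(0, t - 1, num_slow).round().astype(int)
    slow = frame_feats[slow_idx].reshape(num_slow * h * w, d)

    # Fast pathway: every frame, average-pooled to a fast_grid x fast_grid token grid.
    kh, kw = h // fast_grid, w // fast_grid
    fast = frame_feats[:, : kh * fast_grid, : kw * fast_grid]  # crop so the grid divides evenly
    fast = fast.reshape(t, fast_grid, kh, fast_grid, kw, d).mean(axis=(2, 4))
    fast = fast.reshape(t * fast_grid * fast_grid, d)

    # Concatenate both token sequences into one video representation for the LLM.
    return np.concatenate([slow, fast], axis=0)


# Toy input: 64 frames of 24x24 patches with 8-dim features.
feats = np.random.rand(64, 24, 24, 8).astype(np.float32)
tokens = slowfast_tokens(feats)
print(tokens.shape)  # (5632, 8)
```

With 64 input frames, this yields 8 × 576 + 64 × 16 = 5,632 visual tokens, compared with 64 × 576 = 36,864 if every frame were kept at full 24×24 resolution. That gap is why the fast pathway can cover many more frames within an LLM's context budget.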

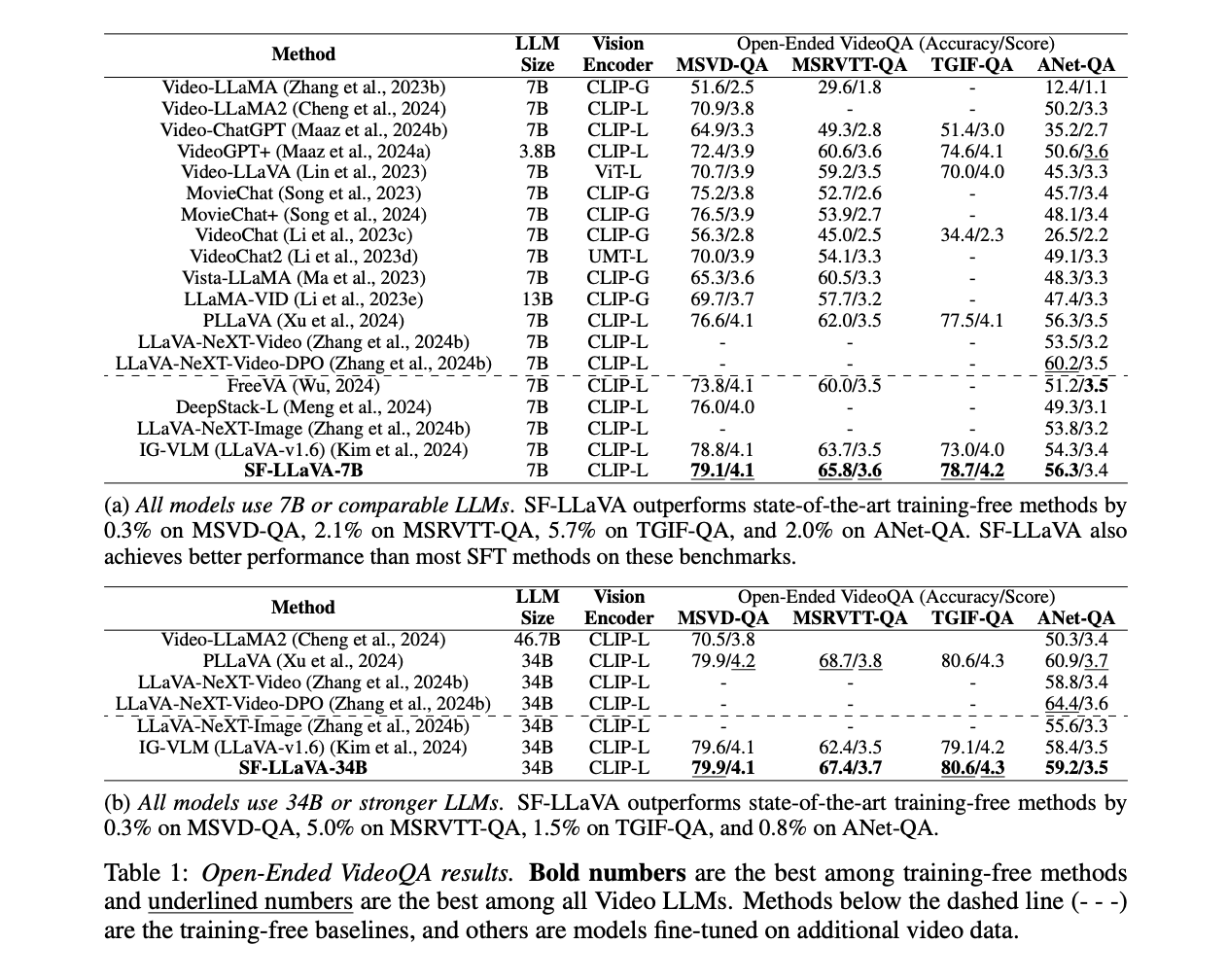

SF-LLaVA performs strongly across several video understanding tasks, often outperforming state-of-the-art training-free methods and competing with SFT models. On open-ended VideoQA, it outperforms other training-free methods on all benchmarks, with gains of up to 5.7% on some datasets. For multiple-choice VideoQA, it shows significant advantages, particularly on complex long-form temporal reasoning benchmarks such as EgoSchema, where it outperforms IG-VLM by 11.4% with a 7B LLM. On text generation tasks, SF-LLaVA-34B outperforms all training-free baselines on average and excels at temporal understanding. Although SF-LLaVA occasionally falls short of some methods in capturing fine spatial details, its SlowFast design lets it cover longer temporal contexts efficiently, giving it superior performance on most tasks, especially those requiring temporal reasoning.

This research presents SF-LLaVA, a novel training-free video LLM that represents a significant advance in video understanding without the need for additional fine-tuning. Built on LLaVA-NeXT, it uses a SlowFast design with two input streams to capture both fine-grained spatial semantics and long-range temporal context. This approach aggregates frame-wise features into a comprehensive video representation, enabling SF-LLaVA to perform well across a range of video tasks. Extensive experiments on 8 video benchmarks show that SF-LLaVA outperforms existing training-free methods and often matches or surpasses state-of-the-art SFT video LLMs. Beyond serving as a solid foundation for video LLMs, its design choices offer useful insights for future research on modeling video representations for multimodal LLMs.

Asjad is a consultant intern at Marktechpost. He is pursuing a bachelor's degree in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.