The use of large language models (LLMs) and generative AI has exploded over the past year. With the release of powerful, publicly available foundation models, the tools to train, fine-tune, and host your own LLM have also become democratized. Using [vLLM](https://docs.vllm.ai/en/stable/index.html) on AWS Trainium and Inferentia makes it possible to host LLMs for high-performance inference and scalability.

In this post, we show how you can quickly deploy Meta's latest Llama models using vLLM on an Amazon Elastic Compute Cloud (Amazon EC2) Inf2 instance. For this example, we use the 1B version, but you can deploy other sizes, as well as other popular LLMs, by following the same steps.

Deploy vLLM on AWS Trainium and Inferentia EC2 instances

In the following sections, we walk you through using vLLM on an AWS Inferentia EC2 instance to deploy Meta's newest Llama 3.2 model. You will learn how to request access to the model, how to create a Docker container that uses vLLM to deploy the model, and how to run online and offline inference on it. We also discuss tuning the performance of the inference graph.

Prerequisite: Hugging Face account and model access

To use the meta-llama/Llama-3.2-1B model, you will need a Hugging Face account and access to the model. Go to the model card, sign up, and accept the model license. You will then need a Hugging Face token, which you can obtain by following these steps. When you reach the Save your access token screen, as shown in the following figure, be sure to copy the token, because it will not be displayed again.

Create an EC2 instance

You can create an EC2 instance by following the guide. Some things to keep in mind:

- If this is your first time using inf/trn instances, you will need to request a fee increase.

- you will use

inf2.xlargeas its instance type.inf2.xlargeInstances are only available in these AWS regions. - Increase the gp3 volume to 100 G.

- you will use

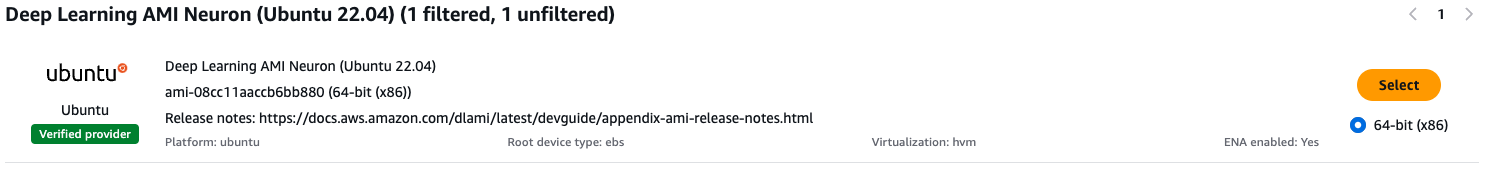

Deep Learning AMI Neuron (Ubuntu 22.04)as your AMI, as shown in the following figure.

Once the instance is running, connect to it to access the command line. In the next step, you will use Docker (preinstalled on this AMI) to run a vLLM container image for Neuron.

Start the vLLM server

You will use Docker to create a container with all the tools necessary to run vLLM. Create a Dockerfile using the following command:

Then run:

Building the image takes about 10 minutes. Once it's done, start a container from the new Docker image (replace YOUR_TOKEN_HERE with your Hugging Face token):

You can now start the vLLM server with the following command:

This command runs vLLM with the following parameters:

- serve meta-llama/Llama-3.2-1B: The Hugging Face model ID of the model being deployed for inference.
- --device neuron: Configures vLLM to run on the Neuron device.
- --tensor-parallel-size 2: Sets the number of partitions for tensor parallelism. inf2.xlarge has 1 Neuron device, and each Neuron device has 2 NeuronCores.
- --max-model-len 4096: The maximum sequence length (input tokens plus output tokens) for which to compile the model.
- --block-size 8: For Neuron devices, this is set internally to max-model-len.
- --max-num-seqs 32: Set this to the hardware batch size or the level of concurrency that the model server needs to handle.
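If you prefer to launch the server from a script, the parameters above can be assembled in Python. The following is a minimal sketch: build_serve_command is a hypothetical helper (not part of vLLM) that only reproduces the vllm serve flags described in this post, deriving the tensor parallel size from the inf2.xlarge layout of 1 Neuron device with 2 NeuronCores. Passing --run to the script inside the container would start the server through subprocess.

```python
import shlex
import subprocess
import sys

# inf2.xlarge: 1 Neuron device with 2 NeuronCores, so tensor parallelism spans 2 cores.
NEURON_DEVICES = 1
CORES_PER_DEVICE = 2


def build_serve_command(
    model: str = "meta-llama/Llama-3.2-1B",
    tensor_parallel_size: int = NEURON_DEVICES * CORES_PER_DEVICE,
    block_size: int = 8,          # set internally to max_model_len on Neuron
    max_model_len: int = 4096,    # input + output tokens the model is compiled for
    max_num_seqs: int = 32,       # hardware batch size / desired concurrency
) -> list[str]:
    """Hypothetical helper: return the `vllm serve` argument list used in this post."""
    if min(tensor_parallel_size, max_model_len, max_num_seqs) <= 0:
        raise ValueError("tensor_parallel_size, max_model_len and max_num_seqs must be positive")
    return [
        "vllm", "serve", model,
        "--device", "neuron",
        "--tensor-parallel-size", str(tensor_parallel_size),
        "--block-size", str(block_size),
        "--max-model-len", str(max_model_len),
        "--max-num-seqs", str(max_num_seqs),
    ]


if __name__ == "__main__":
    cmd = build_serve_command()
    print(shlex.join(cmd))
    if "--run" in sys.argv:
        # Blocks while the server runs; only meaningful inside the vLLM Neuron container.
        subprocess.run(cmd, check=True)
```

With the defaults, the printed command matches the vllm serve command used later in the performance tuning section, so changing one keyword argument (for example max_num_seqs) is less error-prone than editing a long command line by hand.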

The first time you load a model, it must be compiled if no previously compiled version exists. You can optionally save this compiled model so that the compilation step isn't necessary when the container is recreated. Once everything is done and the model server is running, you should see the following logs:

This means the model server is running but isn't processing any requests yet, because none have been received. You can now detach from the container by pressing Ctrl+P followed by Ctrl+Q.

Inference

When you started the Docker container, you ran it with the -p 8000:8000 option. This told Docker to forward port 8000 in the container to port 8000 on the host, your inf2 instance. When you run the following command, you should see that the model server with meta-llama/Llama-3.2-1B is running.

This should return something like:

Now, send it a prompt:

You should receive a response similar to the following from vLLM:
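As an alternative to curl, the two requests in this section (listing the served models and sending a completion) can be sketched in Python using only the standard library. This assumes the server is reachable at http://localhost:8000 and exposes vLLM's OpenAI-compatible /v1/models and /v1/completions endpoints; completion_payload, list_models, and complete are hypothetical helper names, and the request body mirrors the curl example in the performance tuning section.

```python
import json
import urllib.request

# Assumption: the vLLM server started above is listening on port 8000 of this host.
BASE_URL = "http://localhost:8000"


def completion_payload(prompt: str, max_tokens: int = 128) -> dict:
    """Request body mirroring the curl example: greedy decoding (temperature 0)."""
    return {
        "model": "meta-llama/Llama-3.2-1B",
        "prompt": prompt,
        "temperature": 0,
        "max_tokens": max_tokens,
    }


def list_models(base_url: str = BASE_URL) -> list[str]:
    """GET /v1/models and return the IDs of the served models."""
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=30) as resp:
        body = json.load(resp)
    return [model["id"] for model in body["data"]]


def complete(prompt: str, base_url: str = BASE_URL) -> str:
    """POST /v1/completions and return the text of the first choice."""
    req = urllib.request.Request(
        f"{base_url}/v1/completions",
        data=json.dumps(completion_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["text"]


if __name__ == "__main__":
    try:
        print(list_models())
        print(complete("What is Gen AI?"))
    except OSError as err:  # URLError (e.g. connection refused) is a subclass of OSError
        print(f"Could not reach the vLLM server at {BASE_URL}: {err}")
```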

Offline inference with vLLM

Another way to use vLLM on Inferentia is offline inference: sending several prompts at the same time from a script. This is useful for automation or when you have a batch of prompts that you want to process all at once.

You can reconnect to your Docker container and stop the online inference server with the following:

At this point you should see a blank cursor. Press Ctrl+C to stop the server, and you should be returned to the bash prompt in the container. Create a file to use the offline inference engine:
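A minimal sketch of what offline_inference.py could contain, modeled on vLLM's Neuron offline inference example, is shown below. The four prompts and the sampling settings are illustrative, not necessarily the ones used by the authors, and passing device="neuron" to LLM assumes the vLLM version in your container still accepts that engine argument. The engine arguments mirror the flags of the vllm serve command so that the compiled graph matches.

```python
# offline_inference.py: sketch of batched offline inference with vLLM on Neuron.
import importlib.util

# Illustrative prompts; replace with your own batch.
PROMPTS = [
    "Hello, my name is",
    "The president of the United States is",
    "The capital of France is",
    "The future of AI is",
]

# Same settings as the `vllm serve` command used for online inference.
LLM_KWARGS = dict(
    model="meta-llama/Llama-3.2-1B",
    device="neuron",          # assumption: supported by the vLLM version in the container
    tensor_parallel_size=2,   # 2 NeuronCores on inf2.xlarge
    max_model_len=4096,
    block_size=8,
    max_num_seqs=32,
)


def main() -> None:
    # Imported lazily so the configuration above can be inspected without vLLM installed.
    from vllm import LLM, SamplingParams

    sampling_params = SamplingParams(temperature=0.8, top_p=0.95)
    llm = LLM(**LLM_KWARGS)  # loads the model and compiles it if no compiled graph exists
    outputs = llm.generate(PROMPTS, sampling_params)
    for output in outputs:
        print(f"Prompt: {output.prompt!r}, Generated text: {output.outputs[0].text!r}")


if __name__ == "__main__":
    if importlib.util.find_spec("vllm") is None:
        print("vLLM is not installed; run this inside the vLLM Neuron container.")
    else:
        main()
```

All prompts are passed to a single generate call, which lets vLLM batch them up to max_num_seqs instead of sending them one request at a time.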

Now run the script with python offline_inference.py, and you should receive responses to all four prompts. This may take a minute because the model needs to be loaded again.

Now you can type exit and press Return, then press Ctrl+C to close the Docker container and return to your inf2 instance.

Clean up

Now that you have finished testing the Llama 3.2 1B LLM, you should terminate your EC2 instance to avoid additional charges.
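If you prefer to clean up programmatically, the instance can also be terminated with the AWS SDK for Python (boto3). This is a minimal sketch that is not part of the original walkthrough: terminate_instance and is_instance_id are hypothetical helpers, and the code assumes boto3 is installed and your credentials allow ec2:TerminateInstances. Termination is irreversible, so double-check the instance ID and Region first.

```python
import re

# EC2 instance IDs are "i-" followed by 8 (older format) or 17 hexadecimal characters.
_INSTANCE_ID = re.compile(r"i-(?:[0-9a-f]{8}|[0-9a-f]{17})")


def is_instance_id(value: str) -> bool:
    """Return True if value looks like an EC2 instance ID."""
    return _INSTANCE_ID.fullmatch(value) is not None


def terminate_instance(instance_id: str, region: str) -> str:
    """Hypothetical helper: terminate one instance and return its new state name."""
    if not is_instance_id(instance_id):
        raise ValueError(f"not an EC2 instance ID: {instance_id!r}")
    import boto3  # imported lazily so the validation above works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.terminate_instances(InstanceIds=[instance_id])
    # Usually "shutting-down" immediately after the call.
    return response["TerminatingInstances"][0]["CurrentState"]["Name"]
```

For example, call terminate_instance("i-0123456789abcdef0", "us-east-1") with your own instance ID and Region; the ID shown here is a placeholder.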

Performance tuning for variable sequence lengths

You will probably need to process variable-length sequences during LLM inference. The Neuron SDK generates buckets and a computation graph that works with the shape and size of the buckets. To tune performance based on the length of input and output tokens in inference requests, you can configure two types of buckets, corresponding to the two phases of LLM inference, through the following environment variables, each set to a list of integers:

- NEURON_CONTEXT_LENGTH_BUCKETS corresponds to the context encoding phase. Set this to the estimated length of the prompts during inference.
- NEURON_TOKEN_GEN_BUCKETS corresponds to the token generation phase. Set this to a range of powers of two within your generation length.
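To make the powers-of-two guidance concrete, the following sketch generates such a list and formats it as an environment variable value. It rests on two assumptions: that the variables take a comma-separated list of integers (the format vLLM's Neuron model loader parsed in the versions we are aware of; verify against the version in your container), and that a 128-token lower bound is reasonable, which is an arbitrary illustrative choice. power_of_two_buckets and to_env_value are hypothetical helpers.

```python
def power_of_two_buckets(max_len: int, min_len: int = 128) -> list[int]:
    """Powers of two from the first one >= min_len up to max_len.

    max_len itself is appended if it is not a power of two, so the largest
    bucket always covers the full sequence length the model is compiled for.
    """
    if min_len <= 0 or max_len < min_len:
        raise ValueError("need 0 < min_len <= max_len")
    size = 1
    while size < min_len:
        size *= 2
    buckets = []
    while size <= max_len:
        buckets.append(size)
        size *= 2
    if not buckets or buckets[-1] != max_len:
        buckets.append(max_len)
    return buckets


def to_env_value(buckets: list[int]) -> str:
    """Format buckets as a sorted, de-duplicated, comma-separated string."""
    return ",".join(str(b) for b in sorted(set(buckets)))


if __name__ == "__main__":
    # Illustrative only: size buckets from your own prompt and generation lengths.
    print(f"NEURON_TOKEN_GEN_BUCKETS={to_env_value(power_of_two_buckets(4096))}")
```

With max_len=4096 (the --max-model-len used in this post) the script prints NEURON_TOKEN_GEN_BUCKETS=128,256,512,1024,2048,4096, a value you could pass to docker run with the -e option.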

You can use the Docker run command to configure the environment variables when starting the vLLM server (remember to replace YOUR_TOKEN_HERE with your Hugging Face token):

You can then start the server using the same command:

vllm serve meta-llama/Llama-3.2-1B --device neuron --tensor-parallel-size 2 --block-size 8 --max-model-len 4096 --max-num-seqs 32

Because the model graph has changed, the model will need to be compiled again. If the container was removed, the model will also be downloaded again. You can then detach from the container by pressing Ctrl+P followed by Ctrl+Q and send a request using the same command:

curl localhost:8000/v1/completions -H "Content-Type: application/json" -d '{"model": "meta-llama/Llama-3.2-1B", "prompt": "What is Gen AI?", "temperature": 0, "max_tokens": 128}' | jq '.choices[0].text'

For more information on how to configure buckets, see the Neuron developer guide on bucketing. Note that NEURON_CONTEXT_LENGTH_BUCKETS corresponds to context_length_estimate in the documentation, and NEURON_TOKEN_GEN_BUCKETS corresponds to n_positions.

Conclusion

You just saw how to deploy meta-llama/Llama-3.2-1B using vLLM on an Amazon EC2 Inf2 instance. If you are interested in deploying other popular LLMs from Hugging Face, you can replace the model ID in the vllm serve command. More details about the integration between the Neuron SDK and vLLM can be found in the Neuron user guide for continuous batching and the [vLLM guide for Neuron](https://docs.vllm.ai/en/latest/getting_started/neuron-installation.html).

Once you've identified a model you want to use in production, you'll want to deploy it with auto scaling, observability, and fault tolerance. You can also check out this [blog post](http://amazon.com/blogs/machine-learning/deploy-meta-llama-3-1-8b-on-aws-inferentia-using-amazon-eks-and-vllm/) to learn how to deploy vLLM on Inferentia through Amazon Elastic Kubernetes Service (Amazon EKS). In the next post in this series, we will discuss using Amazon EKS with Ray Serve to deploy vLLM in production with auto scaling and observability.

About the authors

Omri Shiva is an open source machine learning engineer focused on helping customers on their AI/ML journey. In his free time, he enjoys cooking, playing with open source and open hardware, and listening to and playing music.

Pink Panigrahi works with clients to create ML-based solutions to solve strategic business problems on AWS. In his current role, he works on optimizing the training and inference of generative AI models on AWS AI chips.
