Temporal reasoning involves understanding and interpreting relationships between events over time, a crucial capability for intelligent systems. This field of research is essential to developing AI that can handle tasks ranging from natural language processing to decision making in dynamic environments. By accurately interpreting time-related data, AI systems can perform complex operations such as scheduling, forecasting, and historical data analysis. This makes temporal reasoning a fundamental aspect of developing advanced AI systems.

Despite the importance of temporal reasoning, existing benchmarks often fall short. They rely heavily on real-world data that LLMs may have seen during training, or they use anonymization techniques that can introduce inaccuracies. This creates a need for more robust assessment methods that accurately measure LLMs' skills in temporal reasoning. The main challenge lies in creating benchmarks that go beyond testing memory retrieval and genuinely assess reasoning skills. This is critical for applications that require precise, context-aware temporal understanding.

Current research includes the development of synthetic datasets to test LLM capabilities such as logical and mathematical reasoning. Frameworks such as TempTabQA, TGQA, and knowledge graph-based benchmarks are widely used. However, these methods are limited by inherent biases and by knowledge the models already hold. As a result, evaluations often reflect the models' ability to recall learned information rather than their ability to reason. A focus on well-known entities and facts fails to adequately challenge the models' understanding of temporal logic and arithmetic, leading to an incomplete evaluation of their true capabilities.

To address these challenges, researchers from Google Research, Google DeepMind, and Google have introduced the Test of Time (ToT) benchmark. The benchmark uses synthetic datasets specifically designed to evaluate temporal reasoning without relying on the models' prior knowledge. It is open source to encourage further research and development in this area. ToT provides a controlled environment in which to systematically test and improve the temporal reasoning skills of LLMs.

The ToT benchmark consists of two main tasks. ToT-Semantic focuses on semantics and temporal logic, allowing flexible exploration of various graph structures and reasoning complexities. This task isolates basic reasoning abilities from preexisting knowledge. ToT-Arithmetic assesses the ability to perform calculations involving time points and durations, using crowd-sourced tasks to ensure practical relevance. Together, the two tasks are designed to cover a wide range of temporal reasoning scenarios.
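To make the arithmetic side concrete, here is a minimal Python sketch of the kind of calculation such a task might ask for: a duration between two time points given in different UTC offsets. The function names, the flight scenario, and all values are hypothetical illustrations, not items taken from the benchmark.

```python
from datetime import datetime, timedelta, timezone


def duration_between(start: datetime, end: datetime) -> timedelta:
    """Return the elapsed time between two timezone-aware moments."""
    return end - start


def convert_offset(moment: datetime, utc_offset_hours: float) -> datetime:
    """Express the same instant in a fixed UTC offset (no DST handling)."""
    return moment.astimezone(timezone(timedelta(hours=utc_offset_hours)))


# Hypothetical question: a flight departs at 22:30 (UTC-5) on 1 March
# and lands at 11:15 (UTC+1) on 2 March. How long was the flight?
departure = datetime(2024, 3, 1, 22, 30, tzinfo=timezone(timedelta(hours=-5)))
arrival = datetime(2024, 3, 2, 11, 15, tzinfo=timezone(timedelta(hours=1)))

flight_time = duration_between(departure, arrival)
departure_in_arrival_zone = convert_offset(departure, 1)

print(flight_time)                 # 6:45:00
print(departure_in_arrival_zone)   # 2024-03-02 04:30:00+01:00
```

Fixed offsets are used here only to keep the sketch self-contained; questions involving named time zones and daylight saving time would need a time zone database.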

To create the ToT-Semantic task, the researchers generated random graph structures using algorithms such as the Erdős-Rényi and Barabási-Albert models. These graphs were then used to create a variety of temporal questions, allowing for an in-depth assessment of the LLMs' ability to understand and reason about time. For ToT-Arithmetic, tasks were designed to test practical arithmetic involving time, such as calculating durations and handling time zone conversions. This dual approach covers both the logical and the arithmetic aspects of temporal reasoning.
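The graph-based generation step can be sketched roughly as follows. This is an illustrative pure-Python version of the Erdős-Rényi G(n, p) model only, with each edge turned into an anonymized fact carrying a random validity interval. The entity and relation names, the year range, and the fact layout are assumptions made for illustration, not the researchers' actual pipeline.

```python
import random
from itertools import combinations


def erdos_renyi_edges(n: int, p: float, rng: random.Random) -> list[tuple[int, int]]:
    """Erdős-Rényi G(n, p): keep each possible edge independently with probability p."""
    return [(u, v) for u, v in combinations(range(n), 2) if rng.random() < p]


def make_temporal_facts(edges, rng, first_year=1950, last_year=2020):
    """Attach a random [start, end] year interval to every edge (hypothetical layout)."""
    facts = []
    for u, v in edges:
        start = rng.randint(first_year, last_year - 1)
        end = rng.randint(start + 1, last_year)
        facts.append({
            "subject": f"E{u}",   # anonymized entity names, so no prior knowledge applies
            "relation": "R1",     # a single placeholder relation for simplicity
            "object": f"E{v}",
            "start": start,
            "end": end,
        })
    return facts


rng = random.Random(0)  # fixed seed so the sketch is reproducible
edges = erdos_renyi_edges(n=6, p=0.5, rng=rng)
facts = make_temporal_facts(edges, rng)

for fact in facts:
    print(f"{fact['subject']} was the {fact['relation']} of {fact['object']} "
          f"from {fact['start']} to {fact['end']}.")
```

A Barabási-Albert generator would differ only in how the edges are produced (new nodes attach preferentially to well-connected ones); the fact-building step would stay the same.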

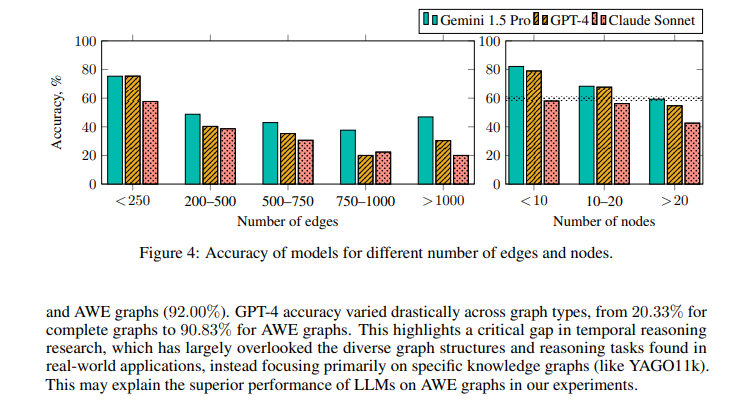

Experimental results on the ToT benchmark reveal the strengths and weaknesses of current LLMs. For example, GPT-4's performance varied widely across graph structures, with accuracy ranging from 40.25% on complete graphs to 92.00% on AWE graphs. These findings highlight the impact of temporal structure on reasoning performance. Furthermore, the order in which facts were presented to the models significantly influenced their performance, with the highest accuracy observed when the facts were sorted by target entity and start time.
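As a rough illustration of the ordering effect, the sketch below takes a shuffled list of facts and sorts it by target entity and then by start time before it would be placed in a prompt. The `target` and `start` field names and the sample facts are hypothetical; the article does not specify how the benchmark stores them.

```python
# Hypothetical anonymized facts in arbitrary (shuffled) order.
shuffled_facts = [
    {"source": "E7", "relation": "R2", "target": "E3", "start": 1994},
    {"source": "E1", "relation": "R2", "target": "E2", "start": 2001},
    {"source": "E5", "relation": "R2", "target": "E3", "start": 1988},
    {"source": "E4", "relation": "R2", "target": "E2", "start": 1979},
]


def sort_by_target_and_start(facts):
    """Group facts by target entity, then order each group chronologically."""
    return sorted(facts, key=lambda fact: (fact["target"], fact["start"]))


ordered_facts = sort_by_target_and_start(shuffled_facts)

for fact in ordered_facts:
    print(f"{fact['source']} was the {fact['relation']} of {fact['target']} "
          f"starting in {fact['start']}.")
```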

The study also explored the types of temporal questions and their levels of difficulty. Single-fact questions were easier for the models to handle, while multi-fact questions, which require integrating several pieces of information, posed more challenges. For example, GPT-4 achieved 90.29% accuracy on EventAtWhatTime questions but struggled with Timeline questions, indicating a gap in handling complex temporal sequences. The detailed analysis of question types and model performance gives a clear picture of current capabilities and areas needing improvement.
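The difference between the two difficulty levels can be illustrated with a small sketch. The question-type names come from the article, but the exact semantics assumed here (EventAtWhatTime returns the start year of one fact; Timeline orders all matching entities chronologically) and the sample data are illustrative assumptions.

```python
# Hypothetical anonymized facts sharing one relation and one target entity.
facts = [
    {"subject": "E1", "relation": "R1", "object": "E2", "start": 1990, "end": 1995},
    {"subject": "E3", "relation": "R1", "object": "E2", "start": 1980, "end": 1985},
    {"subject": "E4", "relation": "R1", "object": "E2", "start": 2001, "end": 2005},
]


def event_at_what_time(facts, subject, relation, obj):
    """Single-fact question: look up when one specific relation started."""
    for fact in facts:
        if (fact["subject"], fact["relation"], fact["object"]) == (subject, relation, obj):
            return fact["start"]
    return None  # no matching fact


def timeline(facts, relation, obj):
    """Multi-fact question: order every matching subject by start time."""
    matching = [f for f in facts if f["relation"] == relation and f["object"] == obj]
    return [f["subject"] for f in sorted(matching, key=lambda f: f["start"])]


print(event_at_what_time(facts, "E1", "R1", "E2"))  # 1990
print(timeline(facts, "R1", "E2"))                  # ['E3', 'E1', 'E4']
```

The single-fact answer depends on locating one line of context, whereas the timeline answer requires finding every relevant fact and comparing them, which is where the reported accuracy gap appears.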

In conclusion, the ToT benchmark represents a significant advance in the assessment of LLMs' temporal reasoning abilities. By providing a more comprehensive and controlled evaluation framework, it helps identify areas for improvement and guides the development of more capable AI systems. The benchmark lays the foundation for future research aimed at improving the temporal reasoning capabilities of LLMs, ultimately contributing to the broader goal of achieving artificial general intelligence.

Check out the Paper and HF Page. All credit for this research goes to the researchers of this project.

Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
