Large language models (LLMs) have demonstrated remarkable language generation capabilities. However, their training process, which involves unsupervised learning from large datasets followed by supervised fine-tuning, presents significant challenges. The main concern arises from the nature of pre-training datasets, such as Common Crawl, which often contain undesirable content. Consequently, LLMs inadvertently acquire the ability to generate offensive language and potentially harmful advice. This unintended capability poses a serious safety risk, because these models can produce coherent responses to any user input unless the content is filtered. The challenge for researchers lies in developing methods that preserve the language generation capabilities of LLMs while effectively mitigating the production of unsafe or unethical content.

Existing attempts to address safety concerns in LLMs have primarily focused on two approaches: safety tuning and guardrails. Safety tuning optimizes models to respond in a manner aligned with human values and safety considerations. However, the resulting chat models remain vulnerable to jailbreak attacks, which employ various strategies to bypass safety measures. These strategies include the use of low-resource languages, refusal suppression, privilege escalation, and distractions.

To counter these vulnerabilities, researchers have developed guardrails that monitor exchanges between chat models and users. One notable approach uses model-based guardrails, which are independent of the chat models themselves. These guard models are designed to flag harmful content and serve as a critical component of AI safety stacks in deployed systems.

However, current methods face significant challenges. Using separate guard models introduces substantial computational overhead, making them impractical in resource-constrained environments. Furthermore, the learning process is inefficient due to the considerable overlap in language understanding capabilities between chat models and guard models, as both need to perform their respective response generation and content moderation tasks effectively.

Researchers from Samsung R&D Institute present LoRA-Guard, an innovative system that integrates chat and guard models to address efficiency issues in LLM safety. It adds a low-rank adapter to the transformer backbone of a chat model to detect harmful content. The system operates in two modes: with the LoRA parameters switched on, it acts as a guard through a classification head; with them switched off, it performs normal chat functions. This approach reduces parameter overhead by 100-1000x compared to previous methods, making deployment feasible in resource-constrained environments. LoRA-Guard has been evaluated on multiple datasets, including zero-shot scenarios, and its model weights have been published to support future research.

The LoRA-Guard architecture is designed to efficiently integrate guarding capabilities into a chat model. It uses the same embedding and tokenizer for both the chat model C and the guard model G. The key innovation lies in the feature map: while C uses the original feature map f, G employs f', which is f with LoRA adapters attached. G also uses a separate output head, hguard, for classification into harmfulness categories.
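The relationship between C and G can be illustrated with a toy sketch. This is not the authors' implementation: a single linear layer stands in for the transformer feature map f, and the class name `DualPathModel`, the attribute names, and all dimensions are assumptions made for illustration. It only shows that the chat path never reads the adapter weights, while the guard path adds the low-rank update and uses its own head.

```python
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.1):
    """Small random matrix, standing in for pretrained or initialised weights."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class DualPathModel:
    """Toy model: one linear layer plays the role of the shared feature map f."""

    def __init__(self, d_in, d_hidden, n_vocab, n_categories, rank, seed=0):
        rng = random.Random(seed)
        self.W = rand_matrix(d_hidden, d_in, rng)                # chat weights (f)
        self.A = rand_matrix(rank, d_in, rng)                    # LoRA down-projection
        self.B = [[0.0] * rank for _ in range(d_hidden)]         # LoRA up-projection, zero init
        self.h_chat = rand_matrix(n_vocab, d_hidden, rng)        # chat output head
        self.h_guard = rand_matrix(n_categories, d_hidden, rng)  # guard output head

    def features(self, x, lora_on):
        """f(x) = W x with adapters off; f'(x) = W x + B A x with adapters on."""
        h = matvec(self.W, x)
        if lora_on:
            delta = matvec(self.B, matvec(self.A, x))
            h = [hi + di for hi, di in zip(h, delta)]
        return h

    def chat(self, x):
        """Chat path C: original features, chat head."""
        return matvec(self.h_chat, self.features(x, lora_on=False))

    def guard(self, x):
        """Guard path G: adapted features, classification head."""
        return matvec(self.h_guard, self.features(x, lora_on=True))


model = DualPathModel(d_in=4, d_hidden=8, n_vocab=10, n_categories=3, rank=2)
x = [1.0, 0.5, -0.5, 2.0]
chat_before = model.chat(x)
model.B[0][0] = 1.0  # pretend guard training changed an adapter weight
chat_after = model.chat(x)
print(chat_after == chat_before)  # the chat path never reads A or B
print(len(model.guard(x)))        # one score per category
```

Because the adapter weights live beside the frozen weights rather than replacing them, changing them affects only the guard path's features.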

This dual-path design allows seamless switching between chat and guard functions. By activating or deactivating the LoRA adapters and switching between output heads, the system can perform either task without performance degradation. Parameter sharing between the two paths greatly reduces overhead, as the guard model typically adds only a small fraction (often about 1/1000) of the original model's parameters.
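A back-of-the-envelope calculation shows where a ratio of this order can come from. A LoRA adapter of rank r on a weight matrix of shape d_out x d_in adds r * (d_in + d_out) parameters. Every number below (hidden size, layer count, rank, number of categories, base model size) is a hypothetical choice for illustration, not a setting reported for LoRA-Guard.

```python
def lora_param_count(d_in, d_out, rank):
    """Parameters added by one LoRA adapter: A is rank x d_in, B is d_out x rank."""
    return rank * (d_in + d_out)


# All numbers below are illustrative assumptions, not the paper's settings.
hidden = 4096                # hypothetical hidden size
n_layers = 32                # hypothetical number of transformer layers
adapted_per_layer = 2        # e.g. two square projection matrices per layer
rank = 8                     # hypothetical LoRA rank
n_categories = 6             # hypothetical number of harm categories
base_params = 7_000_000_000  # hypothetical chat model size

adapter_params = n_layers * adapted_per_layer * lora_param_count(hidden, hidden, rank)
head_params = hidden * n_categories + n_categories  # linear guard head with bias
guard_params = adapter_params + head_params

print(adapter_params)                      # 4194304
print(guard_params)                        # 4218886
print(round(base_params / guard_params))   # base-to-guard parameter ratio
```

Under these assumptions the guard adds a few million parameters to a model with several billion, which is the same order of magnitude as the 1/1000 figure quoted above.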

LoRA-Guard is trained through supervised fine-tuning of f' and hguard on labeled datasets, keeping the chat model parameters frozen. This approach utilizes the existing knowledge of the chat model while learning to detect harmful content efficiently.
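The training setup can be sketched on a toy problem. The code below is an illustration under strong simplifying assumptions: one frozen linear layer `W` stands in for the chat model, the guard head is a single binary logistic unit, the four labelled points are invented, and gradients are written out by hand. It is not the authors' training code; it only shows that updates touch the adapter matrices and the guard head while the chat weights stay fixed.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


rng = random.Random(0)
d_in, d_hidden, rank = 2, 4, 1
W = [[rng.uniform(-0.5, 0.5) for _ in range(d_in)] for _ in range(d_hidden)]  # frozen
A = [[rng.uniform(-0.5, 0.5) for _ in range(d_in)] for _ in range(rank)]      # trainable
B = [[0.0] * rank for _ in range(d_hidden)]                                   # trainable
w_guard = [rng.uniform(-0.5, 0.5) for _ in range(d_hidden)]                   # trainable head
W_snapshot = [row[:] for row in W]

# Hypothetical labelled data: (input features, 1 = harmful / 0 = safe).
data = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([2.0, 1.0], 1), ([1.0, 2.0], 0)]


def forward(x):
    ax = matvec(A, x)
    # f'(x) = W x + B A x
    h = [wi + bi for wi, bi in zip(matvec(W, x), matvec(B, ax))]
    p = sigmoid(sum(g * hi for g, hi in zip(w_guard, h)))
    return ax, h, p


def mean_loss():
    """Mean binary cross-entropy over the toy dataset."""
    total = 0.0
    for x, y in data:
        p = forward(x)[2]
        total -= math.log(p) if y == 1 else math.log(1.0 - p)
    return total / len(data)


loss_before = mean_loss()
lr = 0.1
for _ in range(300):
    for x, y in data:
        ax, h, p = forward(x)
        err = p - y                          # dLoss/dlogit for cross-entropy
        grad_h = [err * g for g in w_guard]  # dLoss/dh
        # dLoss/d(Ax) = B^T grad_h, computed before B is updated
        bt_grad = [sum(B[i][r] * grad_h[i] for i in range(d_hidden)) for r in range(rank)]
        for i in range(d_hidden):
            w_guard[i] -= lr * err * h[i]             # head update
            for r in range(rank):
                B[i][r] -= lr * grad_h[i] * ax[r]     # dLoss/dB = grad_h (A x)^T
        for r in range(rank):
            for j in range(d_in):
                A[r][j] -= lr * bt_grad[r] * x[j]     # dLoss/dA = (B^T grad_h) x^T
        # W is never updated: the chat model stays frozen.

loss_after = mean_loss()
print(W == W_snapshot)          # chat weights are unchanged
print(loss_before, loss_after)  # guard loss before and after training
```

In a real framework the same effect is usually obtained by marking the backbone parameters as non-trainable and passing only the adapter and head parameters to the optimizer.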

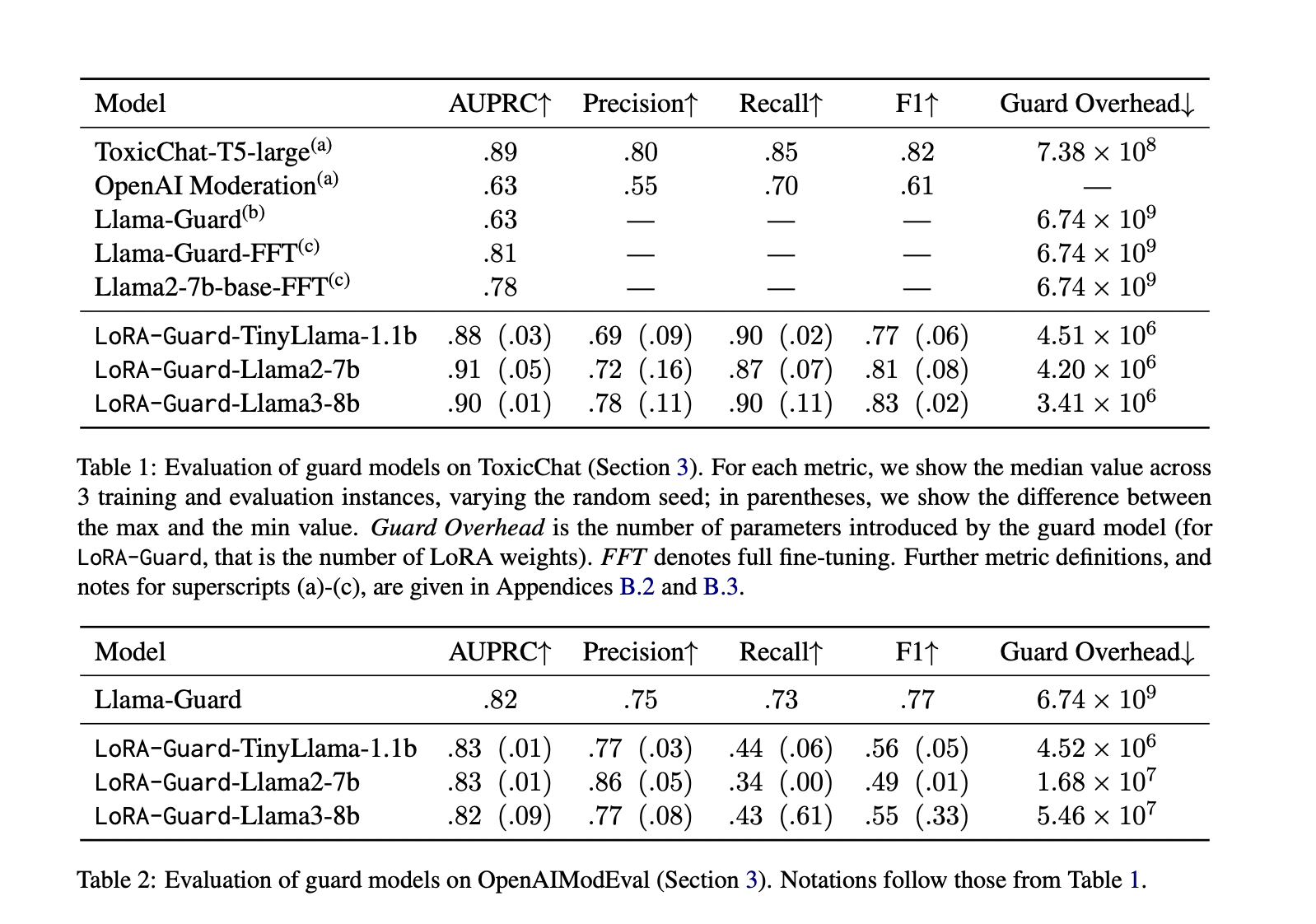

LoRA-Guard demonstrates strong performance on multiple datasets. On ToxicChat, it outperforms baselines on AUPRC (area under the precision-recall curve) while using significantly fewer parameters, up to 1500x fewer than fully-tuned models. On OpenAIModEval, it matches alternative methods with 100x fewer parameters. Cross-domain evaluations reveal an interesting asymmetry: models trained on ToxicChat generalize well to OpenAIModEval, but models trained on OpenAIModEval show considerable drops in performance when evaluated on ToxicChat. This asymmetry may be due to differences in dataset characteristics or the presence of jailbreak samples in ToxicChat. Overall, LoRA-Guard proves to be an efficient and effective solution for content moderation in language models.
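For readers unfamiliar with the metric, AUPRC is commonly estimated by average precision: rank the examples by predicted harmfulness score and average the precision at each rank where a truly harmful example appears. The sketch below uses made-up labels and scores; the article does not state which estimator the authors used, and this simple version breaks tied scores arbitrarily by sort order.

```python
def average_precision(labels, scores):
    """Average precision: mean of precision@k over the ranks k of the positives."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("need at least one positive example")
    hits = 0
    total = 0.0
    for k, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / k  # precision among the top k predictions
    return total / n_pos


# Hypothetical data: 1 = harmful, 0 = safe; higher score = more likely harmful.
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.6]
ap = average_precision(labels, scores)
print(ap)  # (1/1 + 2/3) / 2
```

Unlike accuracy, this metric stays informative when harmful examples are rare, which is typical of moderation datasets.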

LoRA-Guard represents a significant advancement in moderated conversational systems, reducing the guard's parameter overhead by 100-1000x while maintaining or improving performance. This efficiency is achieved through knowledge sharing and parameter-efficient learning mechanisms. Because the chat parameters stay frozen, its dual-path design prevents catastrophic forgetting during fine-tuning, a common problem in other approaches. By dramatically reducing training time, inference time, and memory requirements, LoRA-Guard emerges as a crucial development for implementing robust content moderation in resource-constrained environments. As on-device LLMs become more prevalent, LoRA-Guard paves the way for safer AI interactions across a wider range of applications and devices.

Check out the Paper. All credit for this research goes to the researchers of this project.


Asjad is a consultant intern at Marktechpost. He is pursuing a bachelor's degree in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in the healthcare domain.
