Large multimodal models (LMMs) are advancing rapidly, driven by the need for AI systems that can process and generate content across multiple modalities, such as text and images. These models are particularly valuable in tasks that require deep integration of visual and linguistic information, such as image captioning, visual question answering, and multimodal language understanding. As AI technologies evolve, combining these different types of data effectively has become increasingly critical to improving performance in complex real-world scenarios.

Despite significant advances in the development of LMMs, several challenges remain, particularly regarding the accessibility and scale of resources available to the research community. The main problem is limited access to large-scale, high-quality datasets and to the complex training methodologies required to build robust models. Because of these limitations, open-source initiatives often have to play catch-up with proprietary models, which hinders researchers’ ability to replicate, understand, and build on existing models. This disparity stifles innovation and limits the potential applications of LMMs in diverse fields. Addressing these challenges is crucial to democratizing access to advanced AI technologies and enabling broader participation in their development.

Current approaches to building LMMs typically involve sophisticated architectures that integrate vision and language modalities. For example, Flamingo links the two with cross-attention layers, while LLaVA projects visual features into the language model's embedding space. These methods rely heavily on large-scale pretraining followed by task-specific fine-tuning to improve model performance. Despite their success, these models still have limitations, particularly with regard to data scale, diversity, and the complexity of their training pipelines. For example, the BLIP-2 model, while a pioneering effort, is limited by the scale and diversity of its training data, which hinders its ability to achieve competitive performance compared to state-of-the-art LMMs. The intricate Q-Former architecture used in BLIP-2 adds further challenges when scaling up training pipelines, making it difficult for researchers to work with larger datasets.

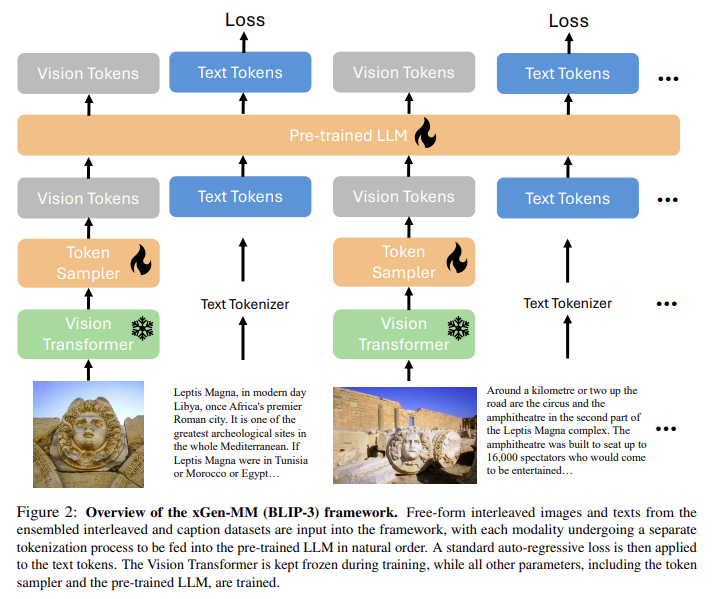

Researchers from Salesforce AI Research and the University of Washington have presented xGen-MM (BLIP-3), a framework designed to improve the scalability and accessibility of LMMs. It builds on previous efforts but introduces several key enhancements to overcome the limitations of earlier models. The framework uses a mix of multimodal interleaved datasets, curated caption datasets, and other publicly available datasets to create a robust training environment. A significant innovation in xGen-MM is the replacement of the Q-Former layers with a more scalable vision token sampler, specifically a Perceiver Resampler. This change simplifies training by unifying the training objectives into a single loss function at each stage, streamlining model development and making it more accessible for large-scale training.
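To make the idea of a vision token sampler more concrete, here is a minimal sketch of the core step in a Perceiver-Resampler-style module: a fixed set of latent queries cross-attends over however many vision tokens the encoder produced, so the output length is always the number of queries. This is an illustration, not the xGen-MM implementation. The names `resample`, `DIM`, and `NUM_LATENTS` are hypothetical, the "learned" queries are random stand-ins, and the sketch omits the learned key/value projections, multiple layers, and feed-forward blocks a real resampler would have.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def resample(vision_tokens, latent_queries):
    """Cross-attend a fixed set of latent queries over any number of vision tokens.

    Returns one vector per latent query, so the output length depends only on
    len(latent_queries), not on len(vision_tokens).
    """
    dim = len(latent_queries[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in latent_queries:
        weights = softmax([dot(query, tok) * scale for tok in vision_tokens])
        # Attention-weighted average of the vision tokens
        # (identity key/value projections, a simplification).
        mixed = [
            sum(w * tok[d] for w, tok in zip(weights, vision_tokens))
            for d in range(dim)
        ]
        outputs.append(mixed)
    return outputs


def random_vectors(count, dim, rng):
    """Random vectors standing in for encoder outputs or learned parameters."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(count)]


rng = random.Random(0)
DIM = 8          # hypothetical embedding width
NUM_LATENTS = 4  # hypothetical number of latent queries
latents = random_vectors(NUM_LATENTS, DIM, rng)

small_image = random_vectors(16, DIM, rng)  # few vision tokens
large_image = random_vectors(64, DIM, rng)  # many vision tokens

compressed_small = resample(small_image, latents)
compressed_large = resample(large_image, latents)
print(len(compressed_small), len(compressed_large))  # prints "4 4"
```

Both inputs come out as four tokens, which is the property that lets the language model see a predictable, short visual sequence regardless of how many tokens the vision encoder emits.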

The xGen-MM (BLIP-3) framework incorporates several techniques to improve the efficiency and effectiveness of multimodal training. It pairs a pre-trained large language model (phi3-mini) with a vision token sampler. This combination enables the model to handle freely interleaved images and text, which is essential for tasks requiring deep understanding of multimodal content. Training also uses a dynamic high-resolution image encoding strategy, which lets the model process images at varying resolutions efficiently. The strategy encodes images patch by patch, preserving their resolution while reducing the length of the vision token sequence. This improves the model’s ability to interpret text-rich images and significantly reduces computational requirements, making the model more scalable and efficient for large-scale applications.
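The arithmetic behind patch-wise encoding can be sketched in a few lines. The example below tiles an image into fixed-size patches and compares the total vision token count when each patch keeps all of its encoder tokens versus when each patch is compressed to a smaller fixed number by a token sampler. The patch size and both per-patch token counts are illustrative assumptions, not figures from the article, and the helper names are hypothetical. A real pipeline would typically resize or pad the image to fit the grid rather than clip edge patches as done here.

```python
import math


def patch_grid(width, height, patch_size):
    """Number of patch columns and rows needed to cover the image."""
    return math.ceil(width / patch_size), math.ceil(height / patch_size)


def patch_boxes(width, height, patch_size):
    """(left, top, right, bottom) crop boxes, clipped to the image bounds."""
    cols, rows = patch_grid(width, height, patch_size)
    boxes = []
    for row in range(rows):
        for col in range(cols):
            left, top = col * patch_size, row * patch_size
            right = min(left + patch_size, width)
            bottom = min(top + patch_size, height)
            boxes.append((left, top, right, bottom))
    return boxes


def vision_token_count(width, height, patch_size, tokens_per_patch):
    """Total vision tokens when every patch contributes tokens_per_patch."""
    return len(patch_boxes(width, height, patch_size)) * tokens_per_patch


PATCH_SIZE = 384        # assumed encoder input size (illustrative)
RAW_TOKENS = 729        # assumed tokens per patch straight from the encoder
RESAMPLED_TOKENS = 128  # assumed tokens per patch after the token sampler

before = vision_token_count(768, 768, PATCH_SIZE, RAW_TOKENS)
after = vision_token_count(768, 768, PATCH_SIZE, RESAMPLED_TOKENS)
print(before, after)  # prints "2916 512"
```

Under these assumed numbers, a 768×768 image split into four patches shrinks from 2,916 to 512 vision tokens, which illustrates why compressing each patch keeps the sequence manageable as resolution grows.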

The xGen-MM (BLIP-3) models have been evaluated on several multimodal benchmarks. The instruction-tuned models performed strongly on Visual Question Answering (VQA) and Optical Character Recognition (OCR) tasks. Specifically, xGen-MM outperformed comparable models on tasks such as TextVQA and COCO captioning, achieving scores of 66.9 and 90.6 on 8-shot evaluations, respectively. The introduction of safety-tuned models has further improved the reliability of these LMMs by reducing harmful behaviors such as hallucinations while maintaining high accuracy on complex multimodal tasks. The models also excelled on tasks requiring high-resolution image processing, demonstrating the effectiveness of the dynamic high-resolution encoding strategy.

In conclusion, the xGen-MM (BLIP-3) framework offers a robust solution for developing high-performance LMMs by addressing critical challenges related to data accessibility and training scalability. The use of a curated set of datasets and innovative training methodologies has enabled xGen-MM models to set new benchmarks in multimodal performance. The framework’s ability to efficiently and accurately integrate complex visual and textual data makes it a valuable tool for researchers and practitioners.

Check out the Paper and Project page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.
