Natural language processing (NLP) in artificial intelligence focuses on enabling machines to understand and generate human language. This field encompasses a variety of tasks, including language translation, sentiment analysis, and text summarization. In recent years, significant advances have been made, leading to the development of large language models (LLMs) that can process large amounts of text. These advances have opened up possibilities for complex tasks such as long context summarization and retrieval-augmented generation (RAG).

One of the main challenges in NLP is effectively evaluating LLMs on tasks that require processing long contexts. Traditional tasks, such as Needle-in-a-Haystack, lack the complexity needed to differentiate the capabilities of state-of-the-art models. Furthermore, judging output quality on long-context tasks is difficult because it requires high-quality reference summaries and reliable automatic metrics. This gap in evaluation methods makes it hard to assess modern LLMs accurately.

Existing methods for evaluating summarization performance typically focus on single-document settings with short inputs. These methods rely heavily on low-quality reference summaries, and the resulting scores correlate poorly with human judgments. While some benchmarks exist for long-context models, such as Needle-in-a-Haystack and book summarization, these do not sufficiently test the full capabilities of state-of-the-art LLMs. This limitation underscores the need for more comprehensive and reliable evaluation methods.

Researchers at Salesforce AI Research introduced a new evaluation task called “Summary of a Haystack” (SummHay). It aims to evaluate long-context models and RAG systems more effectively. The researchers created synthetic Haystacks of documents, ensuring that specific insights were repeated across them. The SummHay task requires systems to process these Haystacks, generate summaries that accurately cover the relevant insights, and cite the source documents. This approach provides a reproducible and comprehensive framework for evaluation.

The methodology involves several steps. First, the researchers generate Haystacks of documents on specific topics, making sure that certain insights are repeated across documents. Each Haystack typically contains around 100 documents, totaling approximately 100,000 tokens. The documents are crafted to include specific insights that are grouped into subtopics. For example, a topic about exam preparation might have subtopics such as study techniques and stress management, each expanded into separate insights.
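The construction described above can be sketched as a small data model. This is only an illustration, not the researchers' generation pipeline: the class names, the `assign_insights` and `gold_citations` helpers, the `repeats` parameter, and the example insight texts are all hypothetical, and the real Haystacks contain generated document text rather than bare insight ids.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Insight:
    insight_id: str
    subtopic: str
    text: str


@dataclass
class Document:
    doc_id: int
    insight_ids: list = field(default_factory=list)


def assign_insights(insights, num_docs=100, repeats=5, seed=0):
    """Place every insight in exactly `repeats` distinct documents (assumed scheme)."""
    rng = random.Random(seed)
    docs = [Document(doc_id=i) for i in range(num_docs)]
    for insight in insights:
        # rng.sample picks distinct documents, so an insight never repeats within one.
        for doc in rng.sample(docs, k=repeats):
            doc.insight_ids.append(insight.insight_id)
    return docs


def gold_citations(docs):
    """Map each insight id to the sorted ids of the documents that contain it."""
    gold = {}
    for doc in docs:
        for insight_id in doc.insight_ids:
            gold.setdefault(insight_id, []).append(doc.doc_id)
    return {key: sorted(value) for key, value in gold.items()}


# Hypothetical insights for the exam-preparation topic mentioned in the text.
insights = [
    Insight("study-1", "study techniques", "Spaced repetition improves recall."),
    Insight("study-2", "study techniques", "Practice tests reveal weak areas."),
    Insight("stress-1", "stress management", "Short breaks reduce fatigue."),
]
haystack = assign_insights(insights)
gold = gold_citations(haystack)
```

Because the placement of each insight is known at construction time, the mapping in `gold` gives the set of documents a correct summary bullet should cite, which is what makes citation quality checkable.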

Once the Haystacks are generated, SummHay is framed as a query-focused summarization task. Systems receive queries related to the subtopics and must generate summaries in bullet-point format. Each summary must cover the relevant insights and cite the source documents accurately. The evaluation protocol then scores the summaries on two main aspects: coverage of the expected insights and quality of the citations. This process is designed to make the evaluation reproducible and accurate.
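To make the two aspects concrete, here is a minimal scoring sketch. It rests on assumptions that the article does not spell out: a three-level coverage judgment worth 1, 0.5, or 0 points, citation quality measured as F1 between cited and gold documents, and a joint score formed by multiplying the two per insight. The function names are hypothetical, and the coverage labels are taken as given inputs rather than produced by a judge.

```python
def coverage_points(label):
    """Map a coverage judgment to points (assumed three-level scale)."""
    return {"full": 1.0, "partial": 0.5, "none": 0.0}[label]


def citation_f1(cited, gold_docs):
    """F1 between the documents a bullet cites and the gold documents."""
    cited, gold_docs = set(cited), set(gold_docs)
    overlap = len(cited & gold_docs)
    if overlap == 0:
        return 0.0
    precision = overlap / len(cited)
    recall = overlap / len(gold_docs)
    return 2 * precision * recall / (precision + recall)


def score_summary(judgments, gold):
    """Score one summary.

    judgments: {insight_id: (coverage_label, cited_doc_ids)}
    gold:      {insight_id: gold_doc_ids}
    Insights missing from `judgments` count as not covered.
    """
    total = len(gold)
    coverage_sum = citation_sum = joint_sum = 0.0
    covered = 0
    for insight_id, gold_docs in gold.items():
        label, cited = judgments.get(insight_id, ("none", []))
        cov = coverage_points(label)
        cit = citation_f1(cited, gold_docs) if cov > 0 else 0.0
        coverage_sum += cov
        joint_sum += cov * cit
        if cov > 0:
            covered += 1
            citation_sum += cit
    return {
        "coverage": 100 * coverage_sum / total,
        # Citation quality is averaged over covered insights only.
        "citation": 100 * citation_sum / covered if covered else 0.0,
        "joint": 100 * joint_sum / total,
    }


gold = {"a": [1, 2, 3, 4], "b": [5, 6]}
judgments = {"a": ("full", [1, 2])}  # insight "b" was not covered
scores = score_summary(judgments, gold)
print(scores)
```

In this toy case the summary covers one of two insights (coverage 50) and cites half of that insight's gold documents with perfect precision (citation F1 of about 66.7), so the joint score is about 33.3. A joint score built this way rewards a system only when it both finds an insight and attributes it correctly.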

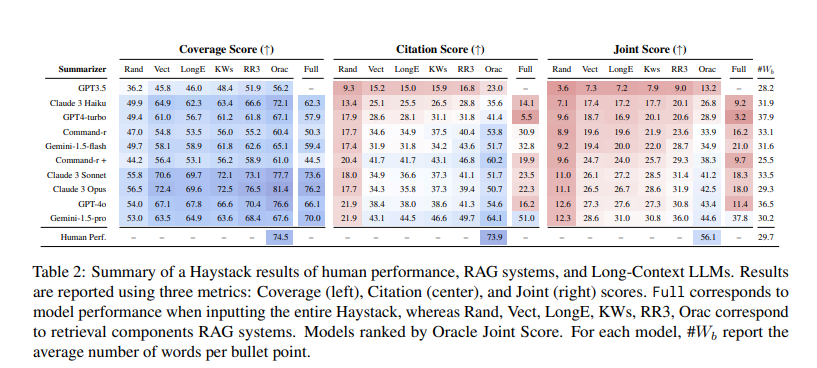

In terms of performance, the research team conducted a large-scale evaluation of 10 LLMs and 50 RAG systems. Their findings indicate that SummHay remains a significant challenge for current systems. For example, even when systems were given oracle signals of document relevance, they lagged behind human performance by more than 10 points on the joint score. Long-context LLMs such as GPT-4o and Claude 3 Opus scored less than 20% on SummHay without a retriever. The study also highlighted trade-offs between RAG systems and long-context models: RAG systems often improve citation quality at the expense of insight coverage.
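The difference between the two setups can be illustrated with a toy retrieval step. This is a sketch under stated assumptions, not any system from the study: `overlap_score` is a stand-in word-overlap relevance score (not Rerank3 or any real retriever), `select_documents` and `token_budget` are hypothetical names, and whitespace-separated words serve as a rough proxy for tokens.

```python
def overlap_score(query, text):
    """Fraction of query words that appear in the document (toy relevance score)."""
    query_words = set(query.lower().split())
    doc_words = set(text.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & doc_words) / len(query_words)


def select_documents(query, documents, token_budget):
    """Rank documents by relevance and keep the best ones that fit the budget."""
    ranked = sorted(
        documents,
        key=lambda doc: overlap_score(query, doc["text"]),
        reverse=True,
    )
    selected, used = [], 0
    for doc in ranked:
        cost = len(doc["text"].split())  # whitespace words as a rough token proxy
        if used + cost > token_budget:
            continue  # skip documents that would overflow the budget
        selected.append(doc["id"])
        used += cost
    return selected


documents = [
    {"id": 1, "text": "spaced repetition helps students study for exams"},
    {"id": 2, "text": "weather report for the weekend"},
    {"id": 3, "text": "students study better after short breaks"},
]
query = "how do students study"

rag_context = select_documents(query, documents, token_budget=13)
long_context = select_documents(query, documents, token_budget=100)
```

With the small budget only documents 1 and 3 reach the summarizer, while the large budget passes all three. A filtered context leaves fewer irrelevant documents to cite by mistake, but it can also leave out documents that carry an insight, which is one plausible reading of the trade-off reported above.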

The evaluation showed that current models struggle to reach human performance levels. Using an advanced RAG component such as Cohere’s Rerank3 substantially improved end-to-end performance on SummHay. However, even with these improvements, models such as Claude 3 Opus and GPT-4o only achieved a joint score of around 36%, well below the estimated human performance of 56%. This gap of roughly 20 points underscores the need for further progress in this field.

In conclusion, the research conducted by Salesforce AI Research addresses a critical gap in the evaluation of long-context LLMs and RAG systems. The SummHay benchmark provides a robust framework for evaluating the capabilities of these systems, highlighting significant challenges and areas for improvement. Although current systems fall short of human performance, this research paves the way for future developments that could eventually match or exceed it on long-context summarization.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter.


Nikhil is a Consultant Intern at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI and machine learning enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.
