Vision-language models (VLMs) are gaining importance in artificial intelligence due to their ability to integrate visual and textual data. These models play a crucial role in fields such as video understanding, human-computer interaction, and multimedia applications, offering tools to answer questions, generate captions, and improve decision making based on video inputs. Demand for efficient video processing systems is growing as video-based tasks proliferate across industries, from autonomous systems to medical and entertainment applications. Despite advances, handling the large amount of visual information in videos remains a central challenge in the development of scalable and efficient VLMs.

A critical problem in video understanding is that existing models often process each video frame individually, generating thousands of visual tokens. This consumes substantial time and computational resources, limiting the model's ability to handle long or complex videos efficiently. The challenge is to reduce the computational load while still capturing the relevant visual and temporal details. Without a solution, tasks requiring real-time or large-scale video processing become impractical, creating the need for approaches that balance efficiency and accuracy.

Current solutions attempt to reduce the number of visual tokens using techniques such as cross-frame pooling. Models like Video-ChatGPT and Video-LLaVA focus on spatial and temporal clustering mechanisms to condense frame-level information into fewer tokens. However, these methods still generate many tokens, and models like MiniGPT4-Video and LLaVA-OneVision produce thousands of tokens, leading to inefficient handling of longer videos. These models often struggle to balance token efficiency against video processing performance, which calls for more effective approaches to token management.
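To make the idea of pooling across frames concrete, here is a minimal sketch of one simple variant: averaging the token at each spatial position over all frames. It is an illustration only, not the method used by any of the models named above, and the function name `mean_pool_across_frames` and the toy sizes are assumptions made for this example.

```python
def mean_pool_across_frames(frame_tokens):
    """Average the token at each spatial position over all frames.

    frame_tokens: list of frames; each frame is a list of token vectors
    (lists of floats), with the same number of tokens per frame.
    """
    num_frames = len(frame_tokens)
    pooled = []
    # zip(*frame_tokens) groups the tokens that share a spatial position.
    for same_position in zip(*frame_tokens):
        dim = len(same_position[0])
        pooled.append(
            [sum(tok[d] for tok in same_position) / num_frames for d in range(dim)]
        )
    return pooled


# Toy input (hypothetical sizes): 4 frames, 3 tokens per frame, 2-dim tokens.
frames = [[[float(f), float(p)] for p in range(3)] for f in range(4)]
pooled = mean_pool_across_frames(frames)
print(len(frames) * len(frames[0]), "->", len(pooled))  # 12 -> 3
```

Note that this kind of pooling only removes the time axis: the output still has one token per spatial position, so the token count stays tied to the per-frame resolution.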

In response, researchers at Salesforce AI Research introduced BLIP-3-Video, a VLM designed specifically to address inefficiencies in video processing. The model incorporates a “temporal encoder” that dramatically reduces the number of visual tokens needed to represent a video. By limiting the count to between 16 and 32 tokens, the model significantly improves computational efficiency without sacrificing performance. This allows BLIP-3-Video to perform video-based tasks at much lower computational cost, making it a notable step toward scalable video understanding.

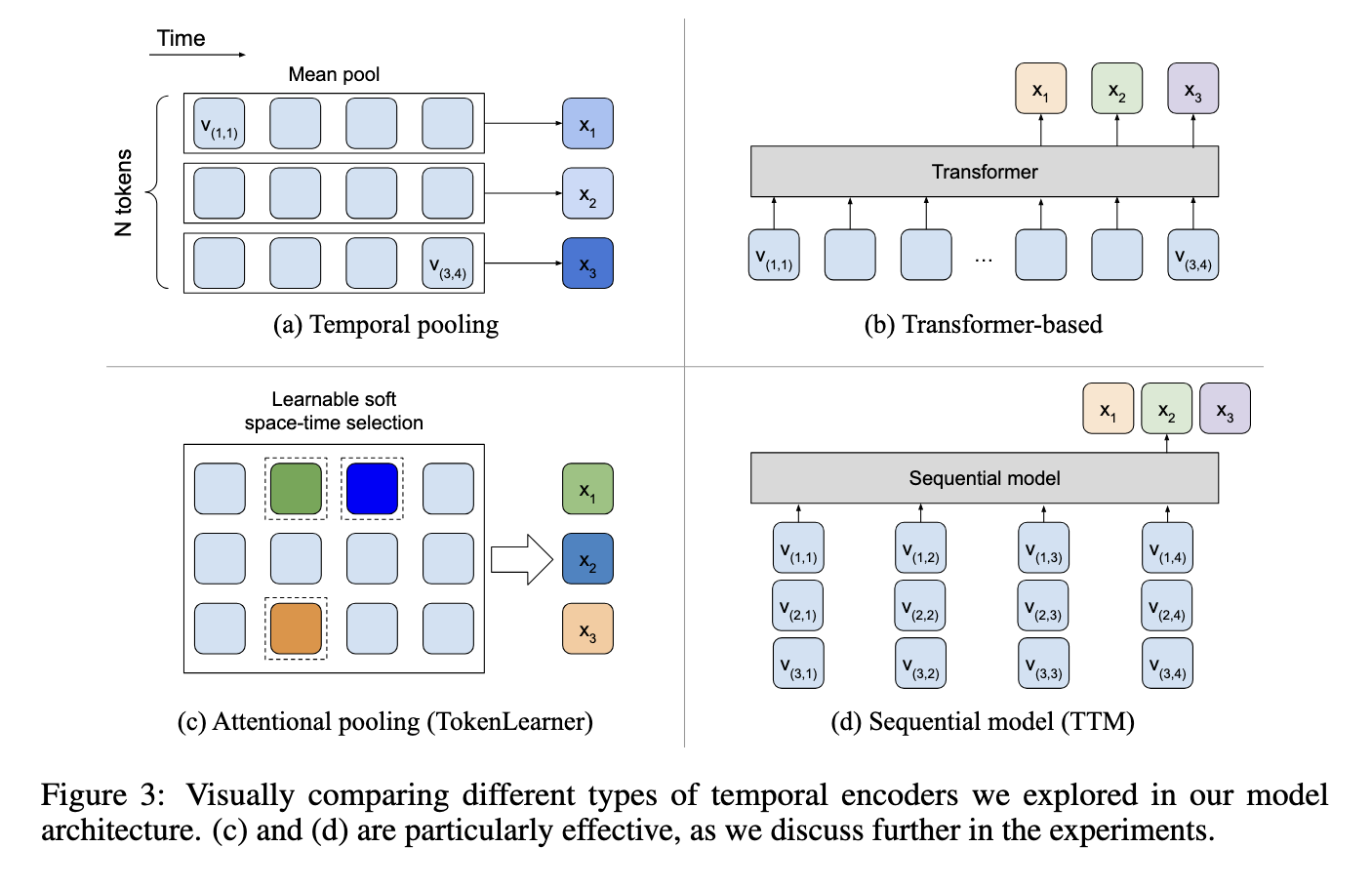

The temporal encoder in BLIP-3-Video is critical to its ability to process video more efficiently. It employs a learnable spatio-temporal attention pooling mechanism that extracts only the most informative tokens from video frames. The system consolidates spatial and temporal data from each frame, transforming them into a compact set of video-level tokens. The model includes a vision encoder, a frame-level tokenizer, and an autoregressive language model that generates text or responses based on video input. The temporal encoder uses sequential models and attention mechanisms to retain the core information of the video while reducing redundant data, ensuring that BLIP-3-Video can handle complex video tasks efficiently.
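The attention pooling idea described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the BLIP-3-Video implementation: the query vectors are random here (in a real model they would be learned), there are no projection layers, and all names and sizes (`attention_pool`, 8 frames, 16 tokens per frame, 8-dimensional tokens) are hypothetical. Only the output budget of 32 video-level tokens is taken from the article.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frame_tokens, queries):
    """Pool all frame tokens into len(queries) video-level tokens.

    frame_tokens: list of frames, each a list of token vectors.
    queries: list of query vectors (assumed learned; random in this sketch).
    """
    # Flatten space and time: every token from every frame is a candidate.
    tokens = [tok for frame in frame_tokens for tok in frame]
    dim = len(tokens[0])
    pooled = []
    for query in queries:
        # Scaled dot-product score between this query and every token.
        scores = [
            sum(q * t for q, t in zip(query, tok)) / math.sqrt(dim)
            for tok in tokens
        ]
        weights = softmax(scores)
        # Each output token is a weighted average of all input tokens.
        pooled.append(
            [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
        )
    return pooled


def random_vectors(count, dim, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(count)]


rng = random.Random(0)
NUM_FRAMES, TOKENS_PER_FRAME, DIM, NUM_VIDEO_TOKENS = 8, 16, 8, 32
frames = [random_vectors(TOKENS_PER_FRAME, DIM, rng) for _ in range(NUM_FRAMES)]
queries = random_vectors(NUM_VIDEO_TOKENS, DIM, rng)
video_tokens = attention_pool(frames, queries)
print(len(frames) * TOKENS_PER_FRAME, "->", len(video_tokens))  # 128 -> 32
```

The key property is that the output size depends only on the number of queries, so the token budget passed to the language model stays fixed no matter how many frames or per-frame tokens go in.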

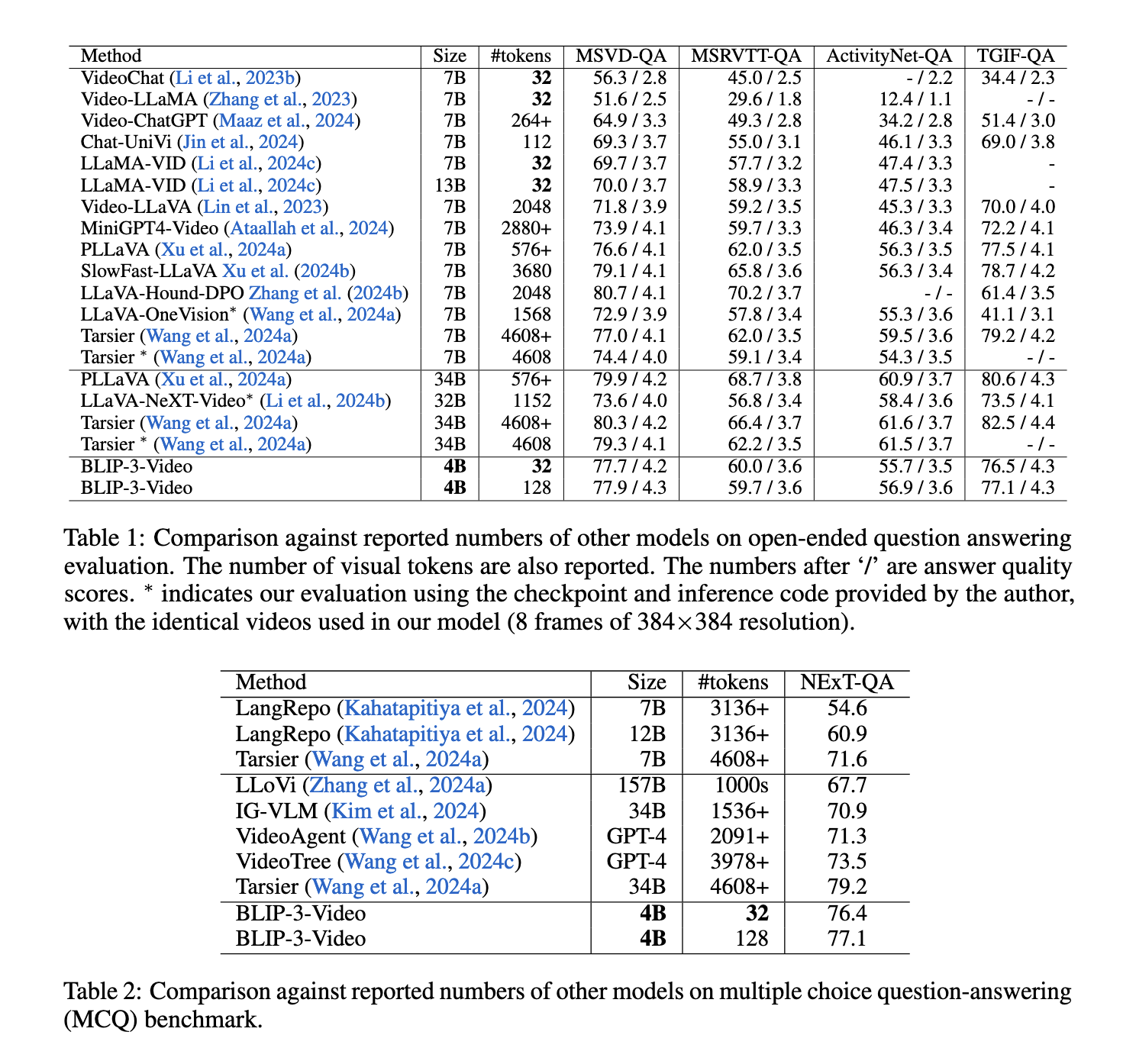

Performance results demonstrate the efficiency of BLIP-3-Video compared to larger models. The model achieves video question answering (QA) accuracy similar to state-of-the-art models such as Tarsier-34B while using a small fraction of the visual tokens. For example, Tarsier-34B uses 4608 tokens for 8 video frames, while BLIP-3-Video reduces this number to only 32 tokens. Despite this reduction, BLIP-3-Video maintains strong performance, achieving a score of 77.7% on the MSVD-QA benchmark and 60.0% on the MSRVTT-QA benchmark, both of which are datasets widely used to evaluate video question-answering tasks. These results underscore the model's ability to maintain high accuracy while operating with fewer resources.
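The scale of the reduction is easier to see as arithmetic. The short script below uses only figures quoted in the article (4608 tokens over 8 frames for Tarsier-34B, and the 16 to 32 token range for BLIP-3-Video); the variable names are illustrative.

```python
# Figures quoted in the article.
TARSIER_TOKENS = 4608
TARSIER_FRAMES = 8
BLIP3_VIDEO_TOKENS = (16, 32)

tokens_per_frame = TARSIER_TOKENS // TARSIER_FRAMES
print("Tarsier-34B tokens per frame:", tokens_per_frame)  # 576

reductions = {}
for budget in BLIP3_VIDEO_TOKENS:
    reductions[budget] = TARSIER_TOKENS / budget
    print(f"{budget} tokens: {reductions[budget]:.0f}x fewer visual tokens")
# 16 tokens: 288x fewer visual tokens
# 32 tokens: 144x fewer visual tokens
```

In other words, the 32-token setting represents an entire video with fewer tokens than Tarsier-34B spends on a single frame.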

The model also performed well on multiple-choice question answering tasks, such as the NExT-QA dataset, with a score of 77.1%. This is particularly noteworthy given that it used only 32 tokens per video, significantly fewer than many competing models. Furthermore, on the TGIF-QA dataset, which requires understanding dynamic actions and transitions in videos, the model achieved an accuracy of 77.1%, further highlighting its efficiency in handling complex video queries. These results establish BLIP-3-Video as one of the most token-efficient models available, providing accuracy comparable or superior to much larger models while dramatically reducing computational overhead.

In conclusion, BLIP-3-Video addresses the challenge of token inefficiency in video processing by introducing a temporal encoder that reduces the number of visual tokens while maintaining high performance. Developed by Salesforce AI Research, the model demonstrates that it is possible to process complex video data with far fewer tokens than previously thought necessary, offering a more scalable and efficient solution for video understanding tasks. This represents a major step forward for vision-language models, paving the way for more practical applications of AI in video-based systems across industries.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.


Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
