Large language models (LLMs) have emerged as powerful tools in artificial intelligence, demonstrating remarkable capabilities in understanding and generating text. These models rely on techniques such as web-scale unsupervised pretraining, instruction fine-tuning, and value alignment, and they perform strongly on a wide range of tasks. However, applying LLMs to real-world big data presents significant challenges, primarily because of the enormous costs involved. By 2025, the total cost of LLMs is projected to reach nearly $5 trillion, far exceeding the GDP of major economies. This financial burden is particularly pronounced in text and structured data processing, which account for a substantial share of expenditures despite being smaller in volume than multimedia data. As a result, relational table learning (RTL) has drawn increasing attention in recent years, given that relational databases host approximately 73% of the world’s data.

Researchers from Shanghai Jiao Tong University and Tsinghua University present the rLLM project (relationLLM), which addresses challenges in RTL by providing a platform for rapid development of RTL-like methods using LLMs. The approach focuses on two key features: decomposing state-of-the-art graph neural networks (GNNs), LLMs, and table neural networks (TNNs) into standardized modules, and enabling the construction of robust models through a “merge, align, and co-train” methodology. To demonstrate the application of rLLM, the authors present a simple RTL method called BRIDGE. BRIDGE processes table data using TNNs and uses the foreign keys in relational tables to establish relationships between table samples, which are then analyzed using GNNs. The method therefore considers multiple tables and their interconnections rather than a single table in isolation. Furthermore, to address the scarcity of datasets in the emerging RTL field, the project introduces a data collection called SJTUTables, which comprises three relational table datasets: TML1M, TLF2K, and TACM12K.
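
To make the foreign-key idea concrete, here is a minimal sketch of how rows in a linking table could be turned into graph edges between table samples. It is not taken from rLLM or BRIDGE: the function `build_fk_graph`, the table names, and the sample rows are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def build_fk_graph(link_rows, src, dst):
    """Connect samples of two tables through the foreign keys in a linking table.

    src and dst are (table_name, foreign_key_column) pairs (hypothetical naming).
    Returns an undirected adjacency map keyed by (table_name, primary_key).
    """
    src_table, src_col = src
    dst_table, dst_col = dst
    adjacency = defaultdict(set)
    for row in link_rows:
        a = (src_table, row[src_col])
        b = (dst_table, row[dst_col])
        # Each linking row becomes one undirected edge between two samples.
        adjacency[a].add(b)
        adjacency[b].add(a)
    return adjacency


# Hypothetical linking table: each rating references one user and one movie.
ratings = [
    {"user_id": 1, "movie_id": 10, "rating": 5},
    {"user_id": 1, "movie_id": 11, "rating": 3},
    {"user_id": 2, "movie_id": 10, "rating": 4},
]

graph = build_fk_graph(ratings, ("users", "user_id"), ("movies", "movie_id"))
print(sorted(graph[("movies", 10)]))
```

Keying nodes by `(table_name, primary_key)` keeps samples from different tables distinct even when their IDs collide, which matters once several tables share one graph.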

The rLLM project presents a comprehensive architecture consisting of three main layers: the data engine layer, the module layer, and the model layer. This structure is designed to facilitate efficient processing and analysis of data from relational tables.

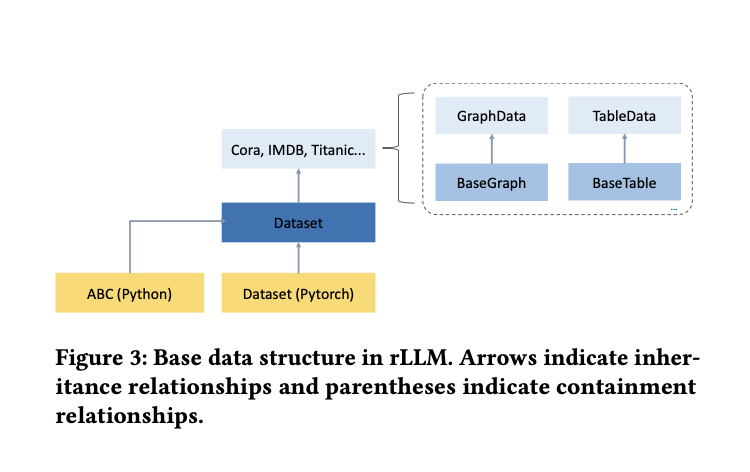

The data engine layer forms the foundation and focuses on the fundamental data structures for graph and table data. It decouples data loading and storage via the Dataset and BaseGraph/BaseTable subclasses, respectively. This design allows for flexible handling of various graph and table data types, optimizing storage and processing of both homogeneous and heterogeneous graph and table data.
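
The separation of loading from storage can be sketched as follows. This is an illustration under stated assumptions, not rLLM's actual code: `TableStore` and `CsvTableLoader` are hypothetical stand-ins for the roles the article assigns to the BaseTable and Dataset classes, and the CSV content is made up.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class TableStore:
    """Storage only (hypothetical): column values plus a type tag per column."""
    columns: dict    # column name -> list of values
    col_types: dict  # column name -> "numerical" or "categorical"

    def __len__(self):
        first = next(iter(self.columns.values()), [])
        return len(first)


class CsvTableLoader:
    """Loading only (hypothetical): parses raw CSV text into a TableStore."""

    def __init__(self, raw_text, numerical_cols):
        self.raw_text = raw_text
        self.numerical_cols = set(numerical_cols)

    def load(self):
        reader = csv.DictReader(io.StringIO(self.raw_text))
        columns = {name: [] for name in reader.fieldnames}
        for row in reader:
            for name, value in row.items():
                if name in self.numerical_cols:
                    value = float(value)  # numerical columns are parsed to floats
                columns[name].append(value)
        col_types = {
            name: "numerical" if name in self.numerical_cols else "categorical"
            for name in columns
        }
        return TableStore(columns, col_types)


raw = "age,occupation\n25,engineer\n31,artist\n"
table = CsvTableLoader(raw, numerical_cols=["age"]).load()
print(len(table), table.col_types)
```

Because the loader only produces a `TableStore`, a different source (a database query, a Parquet file) could be swapped in without touching the code that consumes the stored columns.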

The module layer decomposes GNN, LLM, and TNN operations into standard submodules. For GNN, it includes GraphTransform for preprocessing and GraphConv for implementing graph convolution layers. The LLM modules comprise a Predictor for data annotation and an Enhancer for data augmentation. The TNN modules feature TableTransform for mapping features to higher-dimensional spaces and TableConv for multi-layer interactive learning across feature columns.
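
As a rough illustration of the transform/convolution split on the graph side, the sketch below pairs a preprocessing step with a single convolution step in plain Python. The function names are hypothetical, and the row-normalized mean aggregation used here is a deliberately simple choice, not necessarily what rLLM's GraphTransform or GraphConv modules implement.

```python
def add_self_loops_and_normalize(adj):
    """Transform step (assumed): add self-loops, then row-normalize the adjacency."""
    n = len(adj)
    with_loops = [
        [adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    return [[value / sum(row) for value in row] for row in with_loops]


def matmul(a, b):
    """Plain-Python matrix product for small dense matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def graph_conv(norm_adj, features, weight):
    """Convolution step (assumed): aggregate neighbours, project, apply ReLU."""
    aggregated = matmul(norm_adj, features)
    projected = matmul(aggregated, weight)
    return [[max(0.0, value) for value in row] for row in projected]


# Two connected nodes with one-hot features and an identity weight matrix.
adj = [[0.0, 1.0], [1.0, 0.0]]
features = [[1.0, 0.0], [0.0, 1.0]]
weight = [[1.0, 0.0], [0.0, 1.0]]

norm_adj = add_self_loops_and_normalize(adj)
hidden = graph_conv(norm_adj, features, weight)
print(hidden)
```

Keeping the normalization outside the convolution means it runs once per graph, while the convolution can be stacked or replaced independently.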

BRIDGE demonstrates the application of rLLM in RTL-like methods. It addresses the complexity of relational databases by processing both table and non-table features. A table encoder, using the TableTransform and TableConv modules, handles heterogeneous table data to produce table embeddings. A graph encoder, employing the GraphTransform and GraphConv modules, models foreign key relationships and generates graph embeddings. BRIDGE integrates the outputs of both encoders, allowing simultaneous modeling of data from multiple tables and their interconnections. The framework supports both supervised and unsupervised training approaches, and adapts to various data scenarios and learning objectives.
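
The data flow described above, a table encoder followed by a graph encoder, can be sketched without any learned parameters. Everything here is a simplified stand-in rather than the BRIDGE implementation: `encode_table` uses fixed one-hot encoding instead of trained TableTransform/TableConv layers, `encode_graph` uses plain neighbour averaging instead of trained GraphConv layers, and the sample rows and neighbour map are hypothetical.

```python
def encode_table(rows, numerical_cols, categories):
    """Table-encoder stand-in: numeric values pass through, categories become one-hot."""
    embeddings = []
    for row in rows:
        vec = [float(row[col]) for col in numerical_cols]
        for col, vocab in categories.items():
            vec.extend(1.0 if row[col] == value else 0.0 for value in vocab)
        embeddings.append(vec)
    return embeddings


def encode_graph(embeddings, neighbors):
    """Graph-encoder stand-in: average each sample's embedding with its neighbours'."""
    out = []
    for i, vec in enumerate(embeddings):
        group = [vec] + [embeddings[j] for j in neighbors.get(i, [])]
        out.append([sum(values) / len(group) for values in zip(*group)])
    return out


# Hypothetical target table with heterogeneous (numerical + categorical) columns.
users = [
    {"age": 20.0, "gender": "F"},
    {"age": 40.0, "gender": "M"},
    {"age": 30.0, "gender": "F"},
]
# Hypothetical neighbour map assumed to come from foreign-key relationships:
# samples 0 and 1 are linked, sample 2 has no links.
neighbors = {0: [1], 1: [0]}

table_emb = encode_table(users, ["age"], {"gender": ["F", "M"]})
final_emb = encode_graph(table_emb, neighbors)
print(final_emb)
```

Samples 0 and 2 share the same gender but end up with different final embeddings, because sample 0 also absorbs information from its linked neighbour. That is the effect a single-table model cannot capture.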

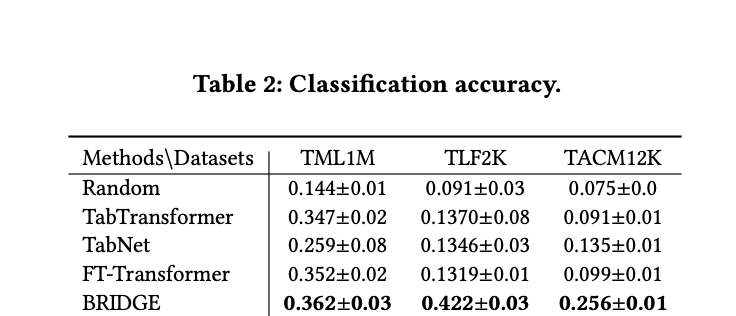

Experimental results reveal the limitations of traditional single-table TNNs for processing relational table data. Because these networks learn from a single target table only, they cannot use the information available across multiple tables and their interconnections, which results in suboptimal performance. In contrast, BRIDGE combines a table encoder with a graph encoder, which lets it extract information from both individual tables and the relations between them. As a result, BRIDGE achieves a significant performance improvement over the single-table baselines, highlighting the importance of considering the relational structure of data in table learning tasks.

The rLLM framework introduces a modular approach to relational table learning with large language models. It combines standardized GNN, LLM, and TNN modules with data structures designed for graph and table data. The project invites collaboration from researchers and software engineers to extend its capabilities and applications in the field of relational data analysis.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project.

Asjad is a consultant intern at Marktechpost. He is pursuing a bachelor's degree in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in the healthcare domain.