In video generation, diffusion models have made notable advances. However, a persistent challenge remains: generated videos often show poor temporal consistency and unnatural dynamics. This study examines noise initialization in video diffusion models and uncovers a crucial gap between training and inference.

The study identifies this training-inference gap in noise initialization as a cause of the weak temporal consistency and unnatural dynamics of existing diffusion-based video models, and traces it to intrinsic differences in the spatio-temporal frequency distribution of the initial noise between the training and inference phases. To close the gap, researchers from S-Lab, Nanyang Technological University, introduced FreeInit, a concise inference-time sampling strategy that iteratively refines the low-frequency components of the initial noise.
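
The noise reinitialization step can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the authors' code: the latent is treated as a `(frames, height, width)` array, the low-pass mask is Gaussian with a hypothetical cutoff `d0=0.25`, and the names `gaussian_low_pass` and `reinit_noise` are illustrative. Here `z_t` stands for the noisy latent obtained by diffusing the previous result back to the last timestep, and the refreshed noise is `z_new = IFFT(FFT(z_t) * H + FFT(eta) * (1 - H))`, where `H` is the low-pass mask, `eta` is fresh Gaussian noise, and both transforms run jointly over time, height, and width.

```python
import numpy as np


def gaussian_low_pass(shape, d0=0.25):
    """3D Gaussian low-pass mask over (frames, height, width).

    Frequencies follow the unshifted np.fft layout, rescaled so each axis
    spans [-1, 1); d0 is an illustrative cutoff, not a value from the paper.
    """
    ft, fh, fw = (np.fft.fftfreq(n) * 2 for n in shape)
    dist2 = ft[:, None, None] ** 2 + fh[None, :, None] ** 2 + fw[None, None, :] ** 2
    return np.exp(-dist2 / (2 * d0 ** 2))


def reinit_noise(z_t, fresh_noise, mask):
    """Keep the low frequencies of z_t and take the high frequencies from fresh noise."""
    axes = (-3, -2, -1)  # time, height, width
    z_freq = np.fft.fftn(z_t, axes=axes)
    noise_freq = np.fft.fftn(fresh_noise, axes=axes)
    mixed = z_freq * mask + noise_freq * (1 - mask)
    # The mask is real and symmetric, so the inverse transform is real up to rounding.
    return np.real(np.fft.ifftn(mixed, axes=axes))


rng = np.random.default_rng(0)
shape = (16, 32, 32)  # hypothetical (frames, height, width) of one latent channel
z_t = rng.standard_normal(shape)
eta = rng.standard_normal(shape)
mask = gaussian_low_pass(shape)
z_new = reinit_noise(z_t, eta, mask)
```

Because only the low-frequency band of `z_t` survives the mixing, the refreshed noise inherits the coarse spatio-temporal layout of the previous result while its fine detail is drawn anew.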

The study reviews three categories of video generation models (GAN-based, transformer-based, and diffusion-based), emphasizing the progress of diffusion models in text-to-image and text-to-video generation. Focusing on diffusion-based methods such as VideoCrafter, AnimateDiff, and ModelScope, it reveals an implicit gap between training and inference in noise initialization that degrades inference quality.
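
Why such a gap can exist is visible in the standard forward-diffusion formula `z_T = sqrt(alpha_bar_T) * z_0 + sqrt(1 - alpha_bar_T) * eps`. During training, the noisy latent at the last step `T` still contains a small scaled copy of the clean video `z_0` whenever `alpha_bar_T > 0`, whereas inference starts from pure Gaussian noise. The sketch below computes `alpha_bar_T` for a scaled-linear schedule with `beta` running from 0.00085 to 0.012 over 1000 steps; these are the settings commonly used by Stable Diffusion and are an assumption here, since each video model may use its own schedule.

```python
import math


def final_alpha_bar(beta_start=0.00085, beta_end=0.012, steps=1000):
    """Cumulative product of (1 - beta_t) at the last step of a scaled-linear schedule."""
    s0, s1 = math.sqrt(beta_start), math.sqrt(beta_end)
    alpha_bar = 1.0
    for i in range(steps):
        # "Scaled linear": interpolate sqrt(beta) linearly, then square it.
        sqrt_beta = s0 + (s1 - s0) * i / (steps - 1)
        alpha_bar *= 1.0 - sqrt_beta ** 2
    return alpha_bar


alpha_bar_T = final_alpha_bar()
signal_weight = math.sqrt(alpha_bar_T)  # weight on z_0 inside the training-time z_T
print(f"alpha_bar_T = {alpha_bar_T:.5f}, signal weight = {signal_weight:.3f}")
```

The signal weight comes out small but nonzero (roughly 0.07), so the training-time `z_T` is not pure noise. This is consistent with the study's observation that the initial noise seen in training and the noise used at inference differ in their spatio-temporal frequency content.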

Diffusion models, successful in text-to-image generation, have been extended to text-to-video generation by combining pre-trained image models with temporal layers. Even so, the training-inference gap in noise initialization limits their performance. FreeInit addresses this gap without additional training, improving temporal consistency and refining the visual appearance of the generated frames. Evaluated on public text-to-video models, FreeInit significantly improves generation quality, a key step toward overcoming noise initialization problems in diffusion-based video generation.
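
The overall loop can be sketched as follows: sample a video from the current initial noise, diffuse the result back to the last timestep, keep the low frequencies of that noisy latent, replace the high frequencies with fresh Gaussian noise, and sample again. This is a minimal illustration, not the official implementation: `sample_fn` stands in for a full denoising pass of a real video diffusion model, `toy_sampler` is a hypothetical placeholder, and the cutoff `d0`, the value of `alpha_bar_T`, and the iteration count are assumptions.

```python
import numpy as np


def gaussian_low_pass(shape, d0=0.25):
    """3D Gaussian low-pass mask in unshifted FFT order (illustrative cutoff d0)."""
    ft, fh, fw = (np.fft.fftfreq(n) * 2 for n in shape)
    dist2 = ft[:, None, None] ** 2 + fh[None, :, None] ** 2 + fw[None, None, :] ** 2
    return np.exp(-dist2 / (2 * d0 ** 2))


def freq_mix(z_t, fresh_noise, mask):
    """Low frequencies from z_t, high frequencies from fresh_noise."""
    axes = (-3, -2, -1)
    mixed = np.fft.fftn(z_t, axes=axes) * mask + np.fft.fftn(fresh_noise, axes=axes) * (1 - mask)
    return np.real(np.fft.ifftn(mixed, axes=axes))


def freeinit_sample(sample_fn, alpha_bar_T, shape, num_iters=3, seed=0):
    """Run num_iters sampling passes, refining the initial noise between passes."""
    rng = np.random.default_rng(seed)
    mask = gaussian_low_pass(shape)
    z_T = rng.standard_normal(shape)  # first pass: ordinary Gaussian initialization
    for i in range(num_iters):
        z_0 = sample_fn(z_T)  # full denoising pass (stand-in for the real model)
        if i == num_iters - 1:
            break
        # Forward-diffuse the clean result back to timestep T with new noise.
        eps = rng.standard_normal(shape)
        z_T_diffused = np.sqrt(alpha_bar_T) * z_0 + np.sqrt(1 - alpha_bar_T) * eps
        # Keep its low frequencies; draw the high frequencies from fresh noise.
        z_T = freq_mix(z_T_diffused, rng.standard_normal(shape), mask)
    return z_0


def toy_sampler(z):
    """Hypothetical placeholder for a video diffusion sampler."""
    return np.tanh(z)


video_latent = freeinit_sample(toy_sampler, alpha_bar_T=0.0047, shape=(8, 16, 16))
```

Each extra iteration costs one more full sampling pass, so `num_iters=3` roughly triples inference time in this sketch; with `num_iters=1` it reduces to ordinary sampling from Gaussian noise.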

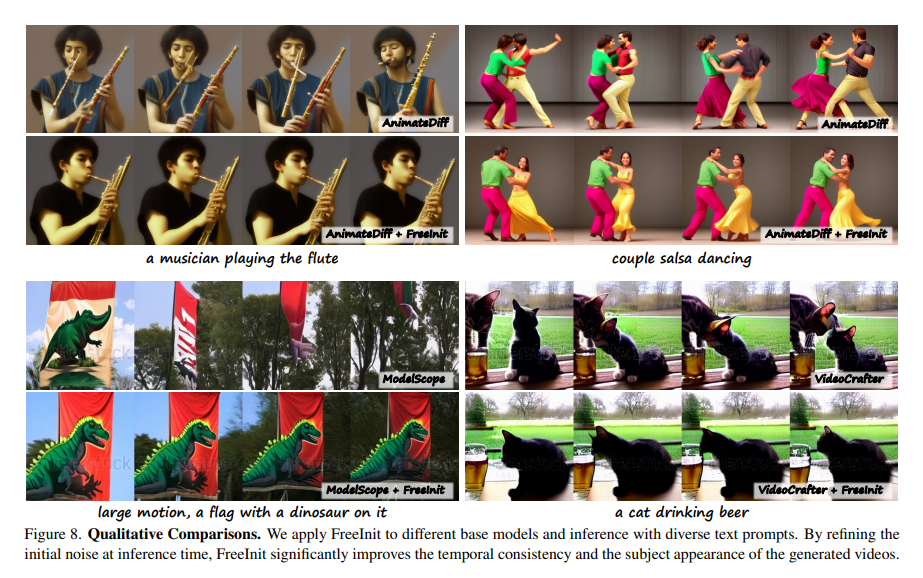

FreeInit addresses the initialization gap in video diffusion models by iteratively refining the initial noise without additional training. Applied to three publicly available text-to-video models (AnimateDiff, ModelScope, and VideoCrafter), it significantly improves inference quality. The study also examines how the choice of frequency filter, a Gaussian low-pass filter (GLPF) or a Butterworth low-pass filter, affects the balance between temporal coherence and visual quality in the generated videos. Temporal consistency is measured as frame similarity computed on DINO features from a ViT-S/16 model.
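
The two filter families can be compared directly. The sketch uses the textbook definitions, `H = exp(-D^2 / (2 * d0^2))` for the Gaussian low-pass filter and `H = 1 / (1 + (D / d0)^(2n))` for the Butterworth low-pass filter, where `D` is the distance from zero frequency in normalized spatio-temporal frequency. The cutoff `d0 = 0.25` and order `n = 4` are illustrative assumptions, not settings confirmed by the article.

```python
import numpy as np


def radial_distance(shape):
    """Distance from zero frequency over (frames, height, width), each axis scaled to [-1, 1)."""
    axes = [np.fft.fftfreq(n) * 2 for n in shape]
    grids = np.meshgrid(*axes, indexing="ij")
    return np.sqrt(sum(g ** 2 for g in grids))


def gaussian_lpf(dist, d0=0.25):
    """Gaussian low-pass filter: smooth roll-off, no sharp cutoff."""
    return np.exp(-(dist ** 2) / (2 * d0 ** 2))


def butterworth_lpf(dist, d0=0.25, order=4):
    """Butterworth low-pass filter: flatter passband, steeper roll-off as order grows."""
    return 1.0 / (1.0 + (dist / d0) ** (2 * order))


dist = radial_distance((16, 32, 32))
glpf_mask = gaussian_lpf(dist)
blpf_mask = butterworth_lpf(dist)
for d in (0.1, 0.25, 0.5):
    print(f"D={d:.2f}  GLPF={gaussian_lpf(d):.3f}  BLPF={butterworth_lpf(d):.3f}")
```

At `D = 0.25` the Gaussian mask is about 0.61 and the Butterworth mask exactly 0.5; at `D = 0.5` the Butterworth mask has dropped to about 0.004 versus about 0.14 for the Gaussian. The sharper filter therefore passes less mid-frequency content from the previous latent into the new noise, which is the kind of difference the study's filter comparison examines.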

FreeInit substantially improves the temporal coherence of videos generated by diffusion models without requiring additional training. It plugs into various video diffusion models at inference time, iteratively refining the initial noise to close the gap between training and inference. On the text-to-video models AnimateDiff, ModelScope, and VideoCrafter, it improves temporal coherence by 2.92 to 8.62 points in DINO score. Quantitative evaluations on the UCF-101 and MSR-VTT datasets show that FreeInit outperforms both the baseline models without noise reinitialization and variants that use different frequency filters.
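
A frame-similarity score of the kind behind the DINO metric can be sketched as the mean cosine similarity between per-frame feature vectors. This sketch assumes the features have already been extracted (for example with a DINO ViT-S/16 backbone, not shown), compares consecutive frames, and multiplies the result by 100; the pairing scheme and the scaling are assumptions rather than details confirmed by the article.

```python
import numpy as np


def frame_similarity(features):
    """Mean cosine similarity between consecutive frames, multiplied by 100.

    features: array of shape (num_frames, dim), one embedding per frame.
    """
    unit = features / np.linalg.norm(features, axis=1, keepdims=True)
    cosine = np.sum(unit[:-1] * unit[1:], axis=1)  # frame t vs frame t+1
    return 100.0 * float(cosine.mean())


rng = np.random.default_rng(0)
base = rng.standard_normal(384)  # 384 is the ViT-S embedding width
steady = base + 0.1 * rng.standard_normal((16, 384))  # frames that barely change
flicker = rng.standard_normal((16, 384))  # unrelated frames
print(frame_similarity(steady), frame_similarity(flicker))
```

Under this definition, frames that drift or flicker lower the score, while a clip whose frames share nearly the same features scores close to 100.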

In summary, the study's main points are:

- The research identifies a gap between training and inference in video diffusion models that degrades inference quality.

- The researchers have proposed FreeInit, a concise, training-free sampling strategy.

- Applied to three text-to-video models, FreeInit improves temporal consistency and overall generation quality without additional training.

- The study also compares frequency filters, namely the Gaussian low-pass filter (GLPF) and the Butterworth low-pass filter, which balance temporal consistency against visual quality differently.

- The results show that FreeInit offers a practical solution to improve the quality of inference in video diffusion models.

- FreeInit is easy to implement and requires no additional training or learnable parameters.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.