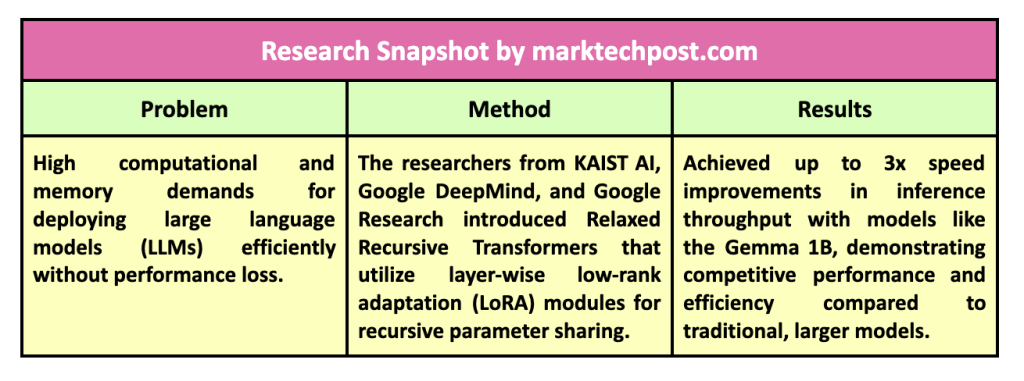

Large language models (LLMs) are built on deep learning architectures that capture complex linguistic relationships in layered structures. Based primarily on the Transformer architecture, these models are increasingly deployed across industries for tasks that require nuanced language generation and understanding. However, large Transformer models have high computational and memory requirements. As models grow to billions of parameters, running them on standard hardware becomes difficult because of limits on memory capacity and processing power. To make LLMs feasible and accessible for broader applications, researchers are looking for optimizations that balance model performance with resource efficiency.

LLMs typically require extensive compute and memory, which makes them expensive to deploy and difficult to scale. A central problem is reducing this resource burden while preserving performance. Researchers are investigating ways to cut parameter counts without hurting accuracy, and parameter sharing is one such approach: the same weights are reused across multiple layers, which in theory shrinks the model's memory footprint. In modern LLMs, however, this has had limited success, because layers are complex enough that tying their parameters can degrade performance. Reducing parameters without losing accuracy has therefore become a major challenge as layers grow more interdependent.
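The memory argument for parameter sharing comes down to simple arithmetic. The sketch below is only an illustration: the layer count, the per-layer parameter count, and the helper `unique_params` are hypothetical and not taken from the paper.

```python
def unique_params(num_layers: int, params_per_layer: int, block_size: int) -> int:
    """Parameters that must be stored when a block of `block_size` layers
    is reused to build a model that is `num_layers` deep."""
    if num_layers % block_size != 0:
        raise ValueError("num_layers must be a multiple of block_size")
    return block_size * params_per_layer


# Hypothetical numbers chosen only for illustration.
NUM_LAYERS = 18
PARAMS_PER_LAYER = 100_000_000

full = unique_params(NUM_LAYERS, PARAMS_PER_LAYER, block_size=NUM_LAYERS)  # no sharing
shared = unique_params(NUM_LAYERS, PARAMS_PER_LAYER, block_size=9)         # 9-layer block used twice

print(f"no sharing:   {full:,} parameters")
print(f"shared block: {shared:,} parameters ({shared / full:.0%} of the original)")
```

The depth of the network, and so the compute per token, is unchanged in this picture. Only the number of distinct weights that have to be stored goes down.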

Established parameter-reduction techniques include knowledge distillation and pruning. Knowledge distillation transfers the behavior of a larger model to a smaller one, while pruning removes less influential parameters to shrink the model. Despite their advantages, these techniques may not deliver the desired efficiency in large-scale models, particularly when performance at scale is essential. Another approach, low-rank adaptation (LoRA), adds small low-rank update matrices to a model's weights, but on its own it does not always produce the efficiency needed for broader applications.
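For readers unfamiliar with LoRA, the following is a minimal NumPy sketch of the general idea: a frozen weight matrix plus a trainable low-rank update. The sizes, the initialization scale, and the function name `lora_linear` are assumptions made for illustration, not details from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

d_in, d_out, rank = 64, 64, 4  # hypothetical sizes

W = rng.normal(size=(d_in, d_out))        # frozen base weight
A = rng.normal(size=(d_in, rank)) * 0.01  # trainable down-projection
B = np.zeros((rank, d_out))               # trainable up-projection, zero-initialized


def lora_linear(x, W, A, B):
    """Apply the base weight plus the low-rank update A @ B."""
    return x @ W + (x @ A) @ B


x = rng.normal(size=(2, d_in))
y = lora_linear(x, W, A, B)

base_params = W.size           # 64 * 64
lora_params = A.size + B.size  # 64 * 4 + 4 * 64

print(f"base weight: {base_params} parameters, LoRA update: {lora_params} parameters")
```

Because `B` starts at zero, the adapted layer initially computes exactly what the base layer does, and the low-rank pair adds only a small fraction of the base parameter count.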

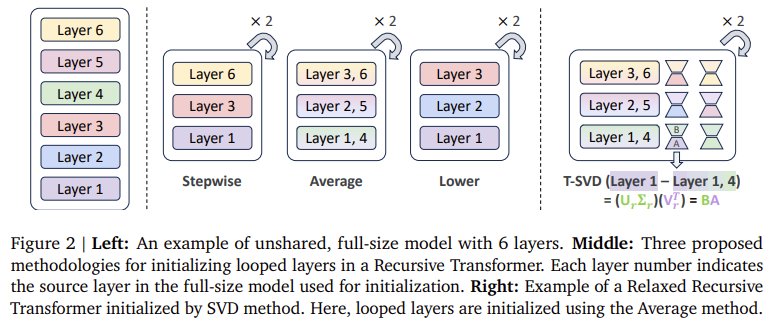

Researchers from KAIST AI, Google DeepMind, and Google Research presented Relaxed Recursive Transformers to overcome these limitations. The architecture builds on standard Transformers by sharing parameters across layers through recursion, supported by LoRA modules. A Recursive Transformer reuses a single block of layers multiple times in a loop, which cuts the number of unique parameters while aiming to preserve performance. The researchers showed that by looping the same layer block and initializing it from a pre-trained standard model, Recursive Transformers can reduce parameters while maintaining accuracy and making better use of model resources. The Relaxed Recursive Transformer goes a step further: it adds low-rank adaptations that loosen the strict parameter-sharing constraint, giving each pass through the shared block more flexibility and improving performance.
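The looping structure described above can be sketched in a few lines of NumPy. This is a toy under stated assumptions, not the authors' implementation: `toy_layer` stands in for a real Transformer layer, and all sizes and names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, block_size, num_loops, rank = 16, 2, 3, 2  # hypothetical sizes

# One shared block: a short stack of weight matrices reused on every loop.
shared_block = [rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(block_size)]

# Relaxation: a separate low-rank pair (A, B) for each loop and each layer in the block.
lora = [
    [(rng.normal(size=(d_model, rank)) * 0.1, np.zeros((rank, d_model)))
     for _ in range(block_size)]
    for _ in range(num_loops)
]


def toy_layer(h, W):
    """Stand-in for a Transformer layer: a residual connection around a nonlinearity."""
    return h + np.tanh(h @ W)


def recursive_forward(h, shared_block, num_loops, lora=None):
    """Loop the same block `num_loops` times, optionally adding per-loop LoRA deltas."""
    for loop in range(num_loops):
        for i, W in enumerate(shared_block):
            if lora is not None:
                A, B = lora[loop][i]
                W = W + A @ B  # effective weight varies per loop; the base weight stays shared
            h = toy_layer(h, W)
    return h


x = rng.normal(size=(4, d_model))
strict = recursive_forward(x, shared_block, num_loops)         # strict sharing
relaxed = recursive_forward(x, shared_block, num_loops, lora)  # sharing relaxed by LoRA

shared_params = sum(W.size for W in shared_block)
lora_params = sum(A.size + B.size for per_loop in lora for A, B in per_loop)
untied_params = shared_params * num_loops  # same depth with no sharing
```

In this toy the up-projections start at zero, so the relaxed model initially matches the strictly shared one. Training the low-rank pairs would then let each loop deviate from the shared weights at a small parameter cost.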

The Relaxed Recursive Transformer design integrates a dedicated LoRA module for each layer, which lets the model run with far fewer parameters while limiting the loss in accuracy. Singular value decomposition (SVD) is used for initialization, so that the layers operate effectively at a compressed scale. Recursive models built this way, such as a recursive Gemma 1B, have been shown to outperform non-recursive models of similar size, such as TinyLlama 1.1B and Pythia 1B, on few-shot tasks. Because the same block is looped, the architecture also pairs naturally with early-exit mechanisms, improving inference throughput by up to 3x compared to traditional LLMs.
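One way SVD can serve as an initializer is to take a truncated SVD of the gap between an original layer's weight and the shared weight, and use the factors as the starting LoRA pair. The sketch below assumes that setup, and also assumes the shared weight is the average of the tied layers; both choices, along with the helper names, are illustrative assumptions rather than details confirmed by this article.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, rank = 32, 4  # hypothetical sizes

# Two "pretrained" layer weights that will be tied to one shared matrix.
W1 = rng.normal(size=(d_model, d_model))
W2 = rng.normal(size=(d_model, d_model))
W_shared = (W1 + W2) / 2  # assumption: shared weight is the average of the tied layers


def svd_lora_init(W_original, W_shared, rank):
    """Return (A, B) so that W_shared + A @ B is the best rank-`rank` fit to W_original."""
    residual = W_original - W_shared
    U, S, Vt = np.linalg.svd(residual, full_matrices=False)
    A = U[:, :rank] * S[:rank]  # scale each kept column by its singular value
    B = Vt[:rank, :]
    return A, B


def approx_error(W_original, W_shared, rank):
    """Frobenius-norm gap between the original weight and its relaxed reconstruction."""
    A, B = svd_lora_init(W_original, W_shared, rank)
    return np.linalg.norm(W_original - (W_shared + A @ B))


err_none = np.linalg.norm(W1 - W_shared)        # strict sharing, no LoRA
err_low = approx_error(W1, W_shared, rank)      # rank-4 relaxation
err_full = approx_error(W1, W_shared, d_model)  # full rank recovers W1

print(f"no LoRA: {err_none:.3f}, rank {rank}: {err_low:.3f}, full rank: {err_full:.2e}")
```

The rank acts as a dial between the two extremes: rank zero is strict sharing, and full rank reproduces the original, unshared layer.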

The results reported in the study show notable gains in efficiency and performance. For example, the recursive Gemma 1B model showed an accuracy gain of 10 percentage points over reduced-size models trained on the same data set. The researchers also report that, combined with early-exit strategies, the Recursive Transformer achieved nearly 3x higher inference throughput by enabling depth-wise batching. Furthermore, recursive models performed competitively with larger models, reaching performance comparable to non-recursive models pre-trained on substantially larger data sets, with some recursive models nearly matching models trained on corpora exceeding three trillion tokens.
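To make the early-exit idea concrete, here is a toy sketch of an exit rule applied to a looped block: after each pass, the model checks its confidence and stops if it is high enough. It does not implement the batching scheme from the paper, and the confidence threshold, the sizes, and the function names are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, vocab, max_loops = 16, 10, 3  # hypothetical sizes

W_block = rng.normal(size=(d_model, d_model)) * 0.1  # shared block, stand-in for real layers
W_head = rng.normal(size=(d_model, vocab))           # output head reused at every exit point


def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def forward_with_early_exit(h, threshold):
    """Run up to `max_loops` passes of the shared block and stop once
    the top predicted probability reaches `threshold`."""
    for loops_used in range(1, max_loops + 1):
        h = h + np.tanh(h @ W_block)
        probs = softmax(h @ W_head)
        if probs.max() >= threshold:
            break
    return int(probs.argmax()), loops_used


x = rng.normal(size=(d_model,))
_, loops_eager = forward_with_early_exit(x, threshold=0.0)  # always exits after one loop
_, loops_never = forward_with_early_exit(x, threshold=1.1)  # never confident, runs every loop

print(f"threshold 0.0 used {loops_eager} loop(s); threshold 1.1 used {loops_never} loop(s)")
```

Because every pass uses the same weights, inputs at different loop depths can in principle be processed together, which is the property the reported throughput gains rely on.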

Key research findings:

- Efficiency gains: Recursive Transformers achieved up to 3x improvements in inference throughput, making them significantly faster than standard Transformer models.

- Parameter sharing: Sharing parameters with LoRA modules allowed models such as the recursive Gemma 1B to achieve almost ten percentage points higher accuracy than reduced-size models, without losing effectiveness.

- Improved initialization: Singular value decomposition (SVD) initialization was used to maintain performance with reduced parameters, providing a balanced approach between fully shared and non-shared structures.

- Accuracy retention: Recursive Transformers maintained high accuracy even when trained on only 60 billion tokens, achieving competitive performance against non-recursive models trained on much larger data sets.

- Scalability: Recursive transformer models present a scalable solution by integrating recursive layers and early exit strategies, facilitating broader implementation without demanding high-end computational resources.

In conclusion, Relaxed Recursive Transformers offer a novel approach to parameter efficiency in LLMs: they share layers recursively and use LoRA modules to relax that sharing, preserving both memory efficiency and model effectiveness. By combining parameter sharing with flexible low-rank modules, the team presented a scalable, high-performance solution that makes large-scale language models more accessible and feasible for practical applications. The research presents a viable path to better cost and performance efficiency in LLM deployment, especially where computational resources are limited.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
