Businesses are increasingly relying on user-generated images and videos to drive engagement. From e-commerce platforms that encourage customers to share product images to social media companies that promote user-generated videos and images, using user content to drive engagement is a powerful strategy. However, it can be difficult to ensure that this user-generated content is consistent with your policies and fosters a safe online community for your users.

Today, many companies rely on human moderators or respond reactively to user complaints to manage inappropriate user-generated content. These approaches do not scale to effectively moderate millions of images and videos with sufficient quality or speed, resulting in poor user experience, high costs to achieve scale, or even potential damage to brand reputation.

In this post, we discuss how to use the custom moderation feature in Amazon Rekognition to improve the accuracy of the pre-trained content moderation API.

Content Moderation in Amazon Rekognition

Amazon Rekognition is a managed artificial intelligence (AI) service that offers pre-trained and customizable computer vision capabilities to extract information and insights from images and videos. One such capability is Amazon Rekognition content moderation, which detects inappropriate or unwanted content in images and videos. Amazon Rekognition uses a hierarchical taxonomy to label inappropriate or unwanted content with 10 top-level moderation categories (such as violence, explicit, alcohol, or drugs) and 35 second-level categories. Customers in industries such as e-commerce, social media, and gaming can use content moderation in Amazon Rekognition to protect their brand reputation and foster safe user communities.
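The hierarchy matters when you consume results: each label returned by DetectModerationLabels carries a ParentName, which is empty for top-level categories. The following minimal sketch assumes the ModerationLabels list from a DetectModerationLabels response (fields Name, ParentName, and Confidence) and groups the detected labels under their top-level category. The function name group_by_top_level is hypothetical, and any label names you feed it in experiments are illustrative only.

```python
from collections import defaultdict


def group_by_top_level(moderation_labels):
    """Group DetectModerationLabels results under their top-level category.

    `moderation_labels` is assumed to be the "ModerationLabels" list of a
    response; each item has "Name", "ParentName" ("" for top-level labels)
    and "Confidence".
    """
    # Map each returned label to its parent, as reported by the service.
    parent_of = {lbl["Name"]: lbl.get("ParentName", "") for lbl in moderation_labels}

    def root(name):
        # Walk up the hierarchy as far as the returned labels allow.
        # If a parent was not itself returned, that parent is treated as the root.
        seen = set()
        while parent_of.get(name) and name not in seen:
            seen.add(name)
            name = parent_of[name]
        return name

    groups = defaultdict(list)
    for lbl in moderation_labels:
        groups[root(lbl["Name"])].append((lbl["Name"], lbl["Confidence"]))
    return dict(groups)
```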

When you use Amazon Rekognition for image and video moderation, human moderators only need to review a much smaller set of content that the moderation model has already flagged, typically between 1 and 5% of the total volume. This lets businesses focus on more valuable activities while still achieving comprehensive moderation coverage at a fraction of their current cost.
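One common way to implement this triage is to send an image to a human reviewer only when the model returns a moderation label at or above a confidence threshold. The sketch below assumes the same ModerationLabels structure as a DetectModerationLabels response; route_for_review, review_share, and the 80.0 default threshold are illustrative placeholders, not values defined by Amazon Rekognition. DetectModerationLabels also accepts a MinConfidence request parameter that filters labels on the service side.

```python
def route_for_review(moderation_labels, min_confidence=80.0):
    """Return ("human_review", flagged_names) or ("auto_approve", []).

    The 80.0 threshold is a hypothetical placeholder; tune it for your policy.
    """
    flagged = [lbl["Name"] for lbl in moderation_labels
               if lbl["Confidence"] >= min_confidence]
    if flagged:
        return "human_review", flagged
    return "auto_approve", []


def review_share(batch, min_confidence=80.0):
    """Fraction of images (each a list of labels) routed to human review."""
    if not batch:
        return 0.0
    needs_review = sum(
        1 for labels in batch
        if route_for_review(labels, min_confidence)[0] == "human_review"
    )
    return needs_review / len(batch)
```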

Introducing Amazon Rekognition Custom Moderation

You can now improve the accuracy of Rekognition’s moderation model on your company’s specific data with the custom moderation feature. You can train a custom adapter with as few as 20 annotated images in less than 1 hour. These adapters extend the capabilities of the moderation model to detect images similar to those used for training with higher accuracy. For this post, we used a sample dataset containing safe images and images with alcoholic beverages (considered unsafe) to improve the accuracy of the alcohol moderation label.

You can pass the unique ID of the trained adapter to the existing DetectModerationLabels API operation to process images with this adapter. Each adapter can be used only by the AWS account that trained it, which keeps the training data secure in that AWS account. With the custom moderation feature, you can adapt Rekognition’s pre-trained moderation model to improve performance for your specific moderation use case without requiring machine learning (ML) experience. You continue to enjoy the benefits of a fully managed moderation service, with a pay-as-you-go pricing model for custom moderation.

Solution Overview

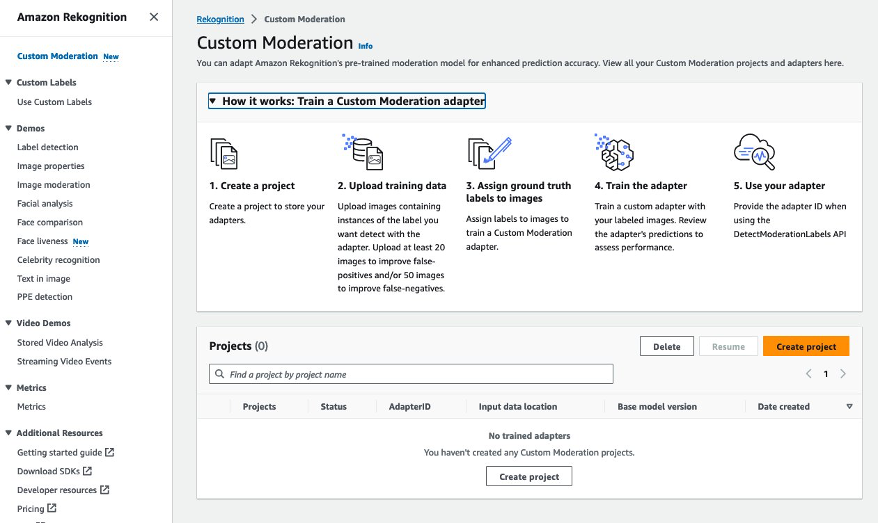

Training a custom moderation adapter involves five steps that you can complete using the AWS Management Console or the API:

- Create a project

- Upload training data

- Assign ground truth labels to images

- Train the adapter

- Use the adapter

Let’s go over these steps in more detail using the console.

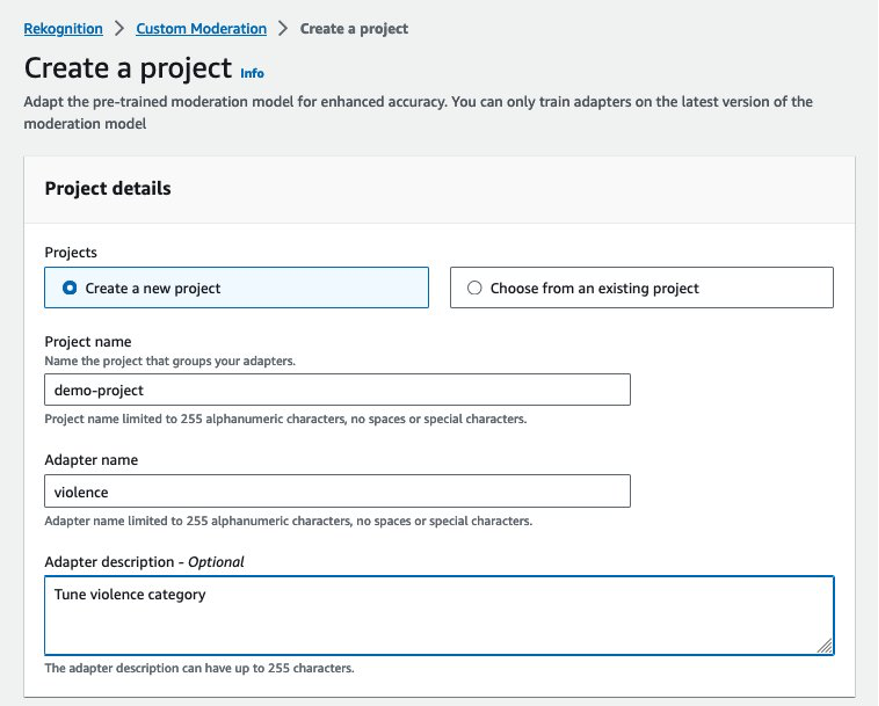

Create a project

A project is a container to store your adapters. You can train multiple adapters within a project with different training data sets to evaluate which adapter works best for your specific use case. To create your project, complete the following steps:

- In the Amazon Rekognition console, choose Custom moderation in the navigation pane.

- Choose Create project.

- For Project name, enter a name for your project.

- For Adapter name, enter a name for your adapter.

- Optionally, enter a description for your adapter.

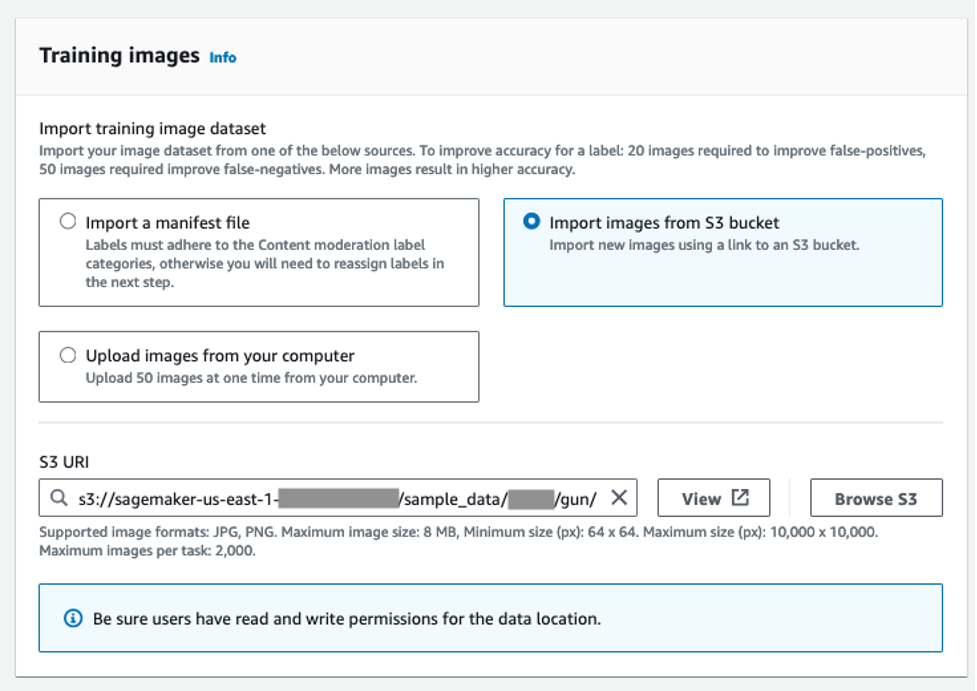
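If you prefer the API to the console, the project can be created with the CreateProject operation. This is a minimal sketch, assuming a Boto3 Rekognition client (boto3.client("rekognition")) and our understanding that custom moderation projects use Feature="CONTENT_MODERATION"; the AutoUpdate setting corresponds to the automatic-update option described later in this post. The helper name create_moderation_project is hypothetical. In the API, the adapter itself is created separately, when you start training, rather than together with the project.

```python
def create_moderation_project(client, project_name, auto_update=True):
    """Create a Rekognition project that holds custom moderation adapters.

    `client` is assumed to be a Boto3 Rekognition client, for example
    boto3.client("rekognition"). Returns the ARN of the new project.
    """
    response = client.create_project(
        ProjectName=project_name,
        # Projects whose adapters are used with DetectModerationLabels.
        Feature="CONTENT_MODERATION",
        # Retrain the adapter automatically when the base model is updated.
        AutoUpdate="ENABLED" if auto_update else "DISABLED",
    )
    return response["ProjectArn"]
```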

Upload training data

You can start with as few as 20 sample images to adapt the moderation model and detect fewer false positives (images that are appropriate for your business but that the model flags with a moderation label). To reduce false negatives (images that are inappropriate for your business but are not flagged with a moderation label), you should start with 50 sample images.
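The starting sizes above can be encoded as a simple pre-flight check before you launch training. The constants reflect the minimums quoted in this post; the function names are hypothetical, and the numbers are floors rather than targets.

```python
# Starting dataset sizes quoted in this post (minimums, not targets).
MIN_IMAGES_FEWER_FALSE_POSITIVES = 20
MIN_IMAGES_FEWER_FALSE_NEGATIVES = 50


def minimum_images(reduce_false_negatives: bool) -> int:
    """Suggested starting size for the chosen improvement goal."""
    if reduce_false_negatives:
        return MIN_IMAGES_FEWER_FALSE_NEGATIVES
    return MIN_IMAGES_FEWER_FALSE_POSITIVES


def has_enough_images(num_annotated: int, reduce_false_negatives: bool) -> bool:
    """True if the annotated set meets the suggested starting size."""
    return num_annotated >= minimum_images(reduce_false_negatives)
```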

Amazon Rekognition offers several options for providing the image datasets used for adapter training. Complete the following steps:

- For this post, select Import images from S3 bucket and enter your S3 URI.

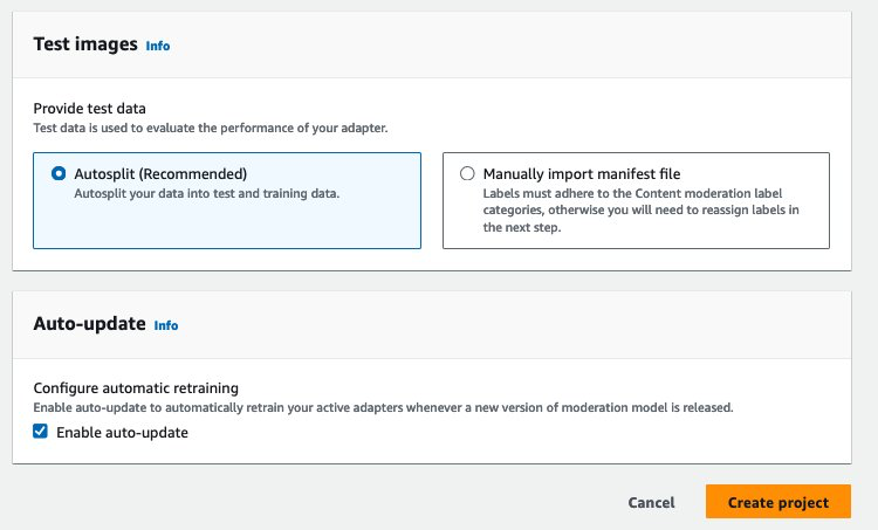

Like any ML training process, training a custom moderation adapter in Amazon Rekognition requires two separate data sets: one to train the adapter and another to evaluate it. You can upload a separate test data set or choose to automatically split your training data set for training and testing.

- For this post, select the option to automatically split the training dataset.

- Select Enable automatic update to ensure that the system automatically retrains the adapter when a new version of the content moderation model is released.

- Choose Create project.

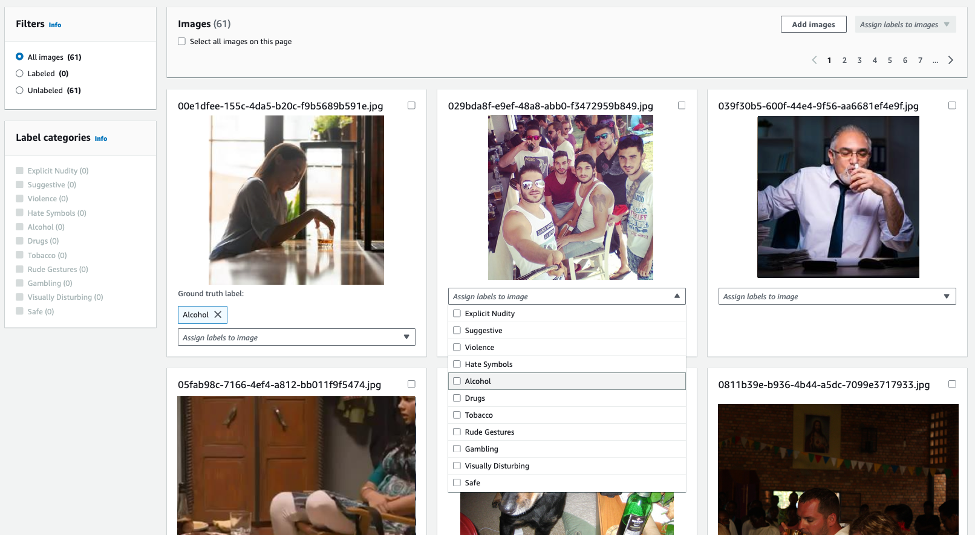
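Through the API, the choices in this section map, as far as we understand, to the CreateProjectVersion operation: the training data is referenced through a manifest file in Amazon S3, and TestingData with AutoCreate set to True asks Amazon Rekognition to split off a test set automatically. This sketch assumes the images are already described by a labeled manifest file in S3 and does not cover importing unlabeled images; the helper and argument names are hypothetical.

```python
def start_adapter_training(client, project_arn, adapter_name,
                           manifest_bucket, manifest_key,
                           output_bucket, output_prefix):
    """Start training a custom moderation adapter (hypothetical helper).

    Assumes a Boto3 Rekognition client and a labeled manifest file stored at
    s3://<manifest_bucket>/<manifest_key>. Returns the adapter's ARN.
    """
    response = client.create_project_version(
        ProjectArn=project_arn,
        VersionName=adapter_name,
        # Where Amazon Rekognition writes training and evaluation output.
        OutputConfig={"S3Bucket": output_bucket, "S3KeyPrefix": output_prefix},
        TrainingData={
            "Assets": [
                {"GroundTruthManifest": {"S3Object": {"Bucket": manifest_bucket,
                                                      "Name": manifest_key}}}
            ]
        },
        # Let Amazon Rekognition split a test set off the training data.
        TestingData={"AutoCreate": True},
    )
    return response["ProjectVersionArn"]
```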

Assign ground truth labels to images

If you uploaded images without annotations, you can use the Amazon Rekognition console to label them according to the moderation taxonomy. In the following example, we train an adapter to detect alcohol more accurately, so we label all images that contain alcohol with the Alcohol label. Images that are not considered inappropriate can be labeled as Safe.

Train the adapter

After labeling all the images, choose Start training to begin the training process. Amazon Rekognition uses the uploaded image datasets to train an adapter model that improves accuracy on the specific type of images provided for training.

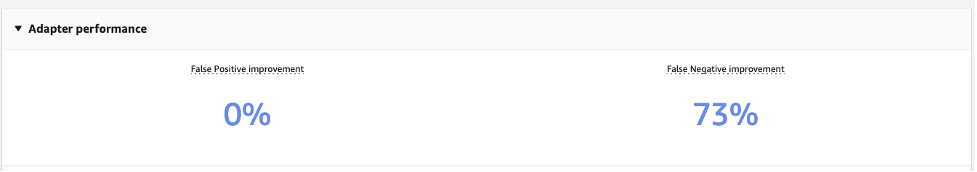
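When training through the API, you can poll DescribeProjectVersions until the adapter reaches a terminal training status. The sketch below assumes a Boto3 Rekognition client and checks for the TRAINING_COMPLETED and TRAINING_FAILED statuses; the polling interval, the maximum number of polls, and the helper name are hypothetical choices. Boto3 also provides a project_version_training_completed waiter that serves the same purpose.

```python
import time


def wait_for_training(client, project_arn, adapter_name,
                      poll_seconds=60, max_polls=240, sleep=time.sleep):
    """Poll until the adapter finishes training and return its description.

    Raises RuntimeError if training fails and TimeoutError if it is still
    in progress after `max_polls` checks. `sleep` is injectable for testing.
    """
    for _ in range(max_polls):
        response = client.describe_project_versions(
            ProjectArn=project_arn, VersionNames=[adapter_name])
        description = response["ProjectVersionDescriptions"][0]
        status = description["Status"]
        if status == "TRAINING_COMPLETED":
            return description
        if status == "TRAINING_FAILED":
            raise RuntimeError(description.get("StatusMessage", "training failed"))
        sleep(poll_seconds)
    raise TimeoutError(f"adapter {adapter_name!r} still training after {max_polls} polls")
```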

After you train your custom moderation adapter, you can view all of its details (the adapter ID and the test and training manifest files) in the Adapter performance section.

The Adapter performance section shows the improvement in false positives and false negatives compared to the pre-trained moderation model. The adapter we trained to improve detection of the alcohol label reduces the false negative rate on the test images by 73%. In other words, the adapter misses 73% fewer alcohol images than the pre-trained moderation model. However, false positives do not improve, because no false positive samples were used for training.
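To make the 73% figure concrete: the false negative rate is the share of truly unsafe test images that the model fails to flag, FN / (FN + TP), and the improvement is the relative drop from the pre-trained model's rate to the adapter's rate. The sketch below assumes the reported percentage is such a relative reduction; the function names are hypothetical.

```python
def false_negative_rate(false_negatives: int, true_positives: int) -> float:
    """Share of truly unsafe images that the model did not flag."""
    positives = false_negatives + true_positives
    return false_negatives / positives if positives else 0.0


def relative_reduction(baseline_rate: float, adapter_rate: float) -> float:
    """Relative drop from the baseline rate to the adapter's rate."""
    if not baseline_rate:
        return 0.0
    return (baseline_rate - adapter_rate) / baseline_rate
```

For example, with hypothetical counts of 30 misses out of 100 unsafe images for the pre-trained model and 8 misses for the adapter, the relative reduction is (0.30 - 0.08) / 0.30, or about 73%.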

Use the adapter

You can run inference with the newly trained adapter for higher accuracy. To do this, call the Amazon Rekognition DetectModerationLabels API with one additional parameter, ProjectVersion, which is the unique identifier (ARN) of the adapter. The same call is available from the AWS Command Line Interface (AWS CLI) as the detect-moderation-labels command with the --project-version option, and from the AWS SDK for Python (Boto3) as detect_moderation_labels.
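A minimal Boto3 sketch of the inference call follows, assuming an existing Rekognition client (for example, boto3.client("rekognition")) and an image stored in Amazon S3. The bucket, key, and adapter ARN are placeholders, and detect_with_adapter is a hypothetical helper; the only change from a standard moderation call is the ProjectVersion argument.

```python
def detect_with_adapter(client, bucket, key, adapter_arn, min_confidence=50.0):
    """Run DetectModerationLabels on an S3 image using a custom adapter.

    Returns a list of (label name, confidence) pairs.
    Example (placeholders): detect_with_adapter(
        boto3.client("rekognition"), "amzn-s3-demo-bucket", "photo.jpg", "<adapter ARN>")
    """
    response = client.detect_moderation_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
        # The addition for custom moderation: the adapter's ARN.
        ProjectVersion=adapter_arn,
    )
    return [(label["Name"], label["Confidence"])
            for label in response["ModerationLabels"]]
```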

Best practices for training

To maximize the performance of your adapter, the following best practices are recommended for training the adapter:

- The sample image data should capture the representative errors for which you want to improve the accuracy of the moderation model.

- In addition to images that show errors (false positives and false negatives), you can also provide true positives and true negatives to improve performance.

- Provide as many annotated images as possible for training.
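To find the representative errors mentioned above, you can compare the pre-trained model's decision on each candidate image with your own ground truth and bucket the images by outcome. This is a hypothetical helper, assuming you already know, for each image, whether the pre-trained model flagged it and whether a human judged it unsafe under your policy.

```python
def classify_outcome(model_flagged, human_unsafe):
    """Compare the pre-trained model's decision with your ground truth."""
    if model_flagged and human_unsafe:
        return "true_positive"
    if model_flagged:
        return "false_positive"   # flagged, but acceptable under your policy
    if human_unsafe:
        return "false_negative"   # unsafe, but missed by the pre-trained model
    return "true_negative"


def bucket_by_outcome(samples):
    """Group (image_id, model_flagged, human_unsafe) tuples by outcome."""
    buckets = {"true_positive": [], "false_positive": [],
               "false_negative": [], "true_negative": []}
    for image_id, model_flagged, human_unsafe in samples:
        buckets[classify_outcome(model_flagged, human_unsafe)].append(image_id)
    return buckets
```

The false_positive and false_negative buckets hold the error images to prioritize; adding some images from the true_positive and true_negative buckets follows the second recommendation above.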

Conclusion

In this post, we provided an overview of the new custom moderation feature in Amazon Rekognition and walked through the steps for training an adapter using the console, including best practices for optimal results. To learn more, visit the Amazon Rekognition console and explore the custom moderation feature.

Amazon Rekognition Custom Moderation is now generally available in all AWS Regions where Amazon Rekognition is available.

Learn more about content moderation on AWS. Take the first step to optimize your content moderation operations with AWS.

About the authors

Shipra Kanoria is a Principal Product Manager at AWS. She is passionate about helping customers solve their most complex problems with the power of machine learning and artificial intelligence. Prior to joining AWS, Shipra spent over 4 years at Amazon Alexa, where she launched many productivity-related features on the Alexa voice assistant.

Aakash Deep is a software development engineering manager based in Seattle. He enjoys working on computer vision, AI, and distributed systems. His mission is to enable customers to address complex problems and create value with Amazon Rekognition. Outside of work, he enjoys hiking and traveling.

Lana Zhang is a Senior Solutions Architect on the AWS WWSO AI Services team, specializing in AI and machine learning for content moderation, computer vision, natural language processing, and generative AI. Drawing on this expertise, Lana is dedicated to advancing AWS AI/ML solutions and helping customers transform their business solutions across various industries, including social media, gaming, e-commerce, media, advertising, and marketing.