As global temperatures rise, wildfires around the world are becoming more frequent and more dangerous. Many communities feel their effects as people evacuate their homes or suffer harm even from proximity to fire and smoke.

As part of Google’s mission to help people access trusted information at critical times, we use satellite imagery and machine learning (ML) to track wildfires and inform affected communities. Our wildfire tracker was recently expanded. It provides updated fire boundary information every 10-15 minutes, is more accurate than similar satellite products, and improves on our previous work. These boundaries are shown for large fires in the continental US, Mexico, and most of Canada and Australia. They are displayed, with additional information from local authorities, on Google Search and Google Maps, allowing people to stay safe and informed about potential dangers near them, their homes, or their loved ones.

Inputs

Tracking wildfire boundaries requires balancing spatial resolution against update frequency. The most scalable way to obtain frequent boundary updates is to use geostationary satellites, that is, satellites that orbit the Earth once every 24 hours. These satellites remain above a fixed point on Earth, providing continuous coverage of the area surrounding that point. Specifically, our wildfire tracker models use the GOES-16 and GOES-18 satellites to cover North America, and the Himawari-9 and GK2A satellites to cover Australia. These provide continent-scale images every 10 minutes. The spatial resolution is 2 km at nadir (the point directly below the satellite) and becomes coarser farther from nadir. The goal here is to warn people as soon as possible and to refer them to authoritative sources for spatially precise, on-the-ground data as needed.
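As a quick sanity check on the 24-hour orbit mentioned above, Kepler's third law gives the altitude at which a circular orbit keeps pace with Earth's rotation. This is only an illustrative sketch: the constants are standard published values rather than anything from this post, and the function name is hypothetical.

```python
import math

# Standard physical constants (assumed here; not taken from the post).
EARTH_GM_M3_S2 = 3.986004418e14       # Earth's gravitational parameter, m^3/s^2
SIDEREAL_DAY_S = 86164.0905           # one rotation of Earth relative to the stars
EARTH_EQUATORIAL_RADIUS_KM = 6378.137


def geostationary_altitude_km(period_s: float = SIDEREAL_DAY_S) -> float:
    """Altitude of a circular orbit with the given period (Kepler's third law)."""
    # T^2 = 4 * pi^2 * r^3 / GM  =>  r = (GM * T^2 / (4 * pi^2)) ** (1/3)
    orbit_radius_m = (EARTH_GM_M3_S2 * period_s ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    return orbit_radius_m / 1000.0 - EARTH_EQUATORIAL_RADIUS_KM


if __name__ == "__main__":
    print(f"{geostationary_altitude_km():.0f} km above the equator")
```

The result is roughly 35,800 km. A sensor that far away can image a whole continent at once, which is consistent with the coarse 2 km pixels described above.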

Plumes of smoke obscuring the 2018 Camp Fire in California. [Image from NASA Worldview]

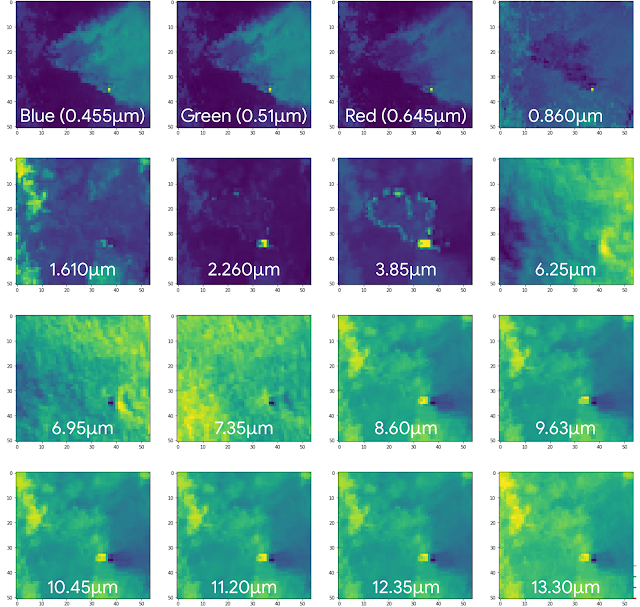

Determining the precise extent of a wildfire is nontrivial, as fires emit massive plumes of smoke, which can spread far from the burned area and obscure the flames. Clouds and other weather phenomena further obscure the underlying fire. To overcome these challenges, it is common to rely on infrared (IR) frequencies, particularly in the 3 to 4 μm wavelength range. This is because wildfires (and similar hot surfaces) radiate considerably in this frequency band, and these emissions diffract with relatively minor distortions through smoke and other particles in the atmosphere. This is illustrated in the figure below, which shows a multispectral image of a wildfire in Australia. The visible channels (blue, green, and red) mostly show the triangular smoke plume, while the 3.85 μm IR channel shows the ring-shaped burn pattern of the fire itself. However, even with the additional information from the IR bands, determining the exact extent of the fire remains challenging, since the fire's emission strength varies and many other phenomena emit or reflect IR radiation.

Himawari-8 hyperspectral image of a wildfire. Note the smoke plume in the visible channels (blue, green, and red) and the ring indicating the current burn area in the 3.85 μm band.

Model

Previous work on fire detection from satellite imagery typically relies on physics-based algorithms to identify hotspots in multispectral images. For example, the National Oceanic and Atmospheric Administration (NOAA) fire product identifies potential wildfire pixels for each of the GOES satellites, primarily based on the 3.9 μm and 11.2 μm frequencies (with auxiliary information from two other frequency bands).

In our wildfire tracker, the model is trained on all satellite inputs, allowing it to learn the relative importance of different frequency bands. The model receives a sequence of the three most recent images in each band to compensate for temporary obstructions, such as cloud cover. In addition, the model receives inputs from two geostationary satellites, achieving a super-resolution effect whereby the detection accuracy improves upon the pixel size of either satellite. In North America, we also supply the aforementioned NOAA fire product as an input. Finally, we compute the relative angles of the sun and the satellites, and provide them as additional inputs to the model.
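To make the input layout concrete, the following sketch stacks the three most recent frames per band from each satellite, then appends per-pixel extras such as sun and satellite angles. It is a simplified illustration under assumed data structures, not the production pipeline; `stack_input_channels`, the channel naming scheme, and the `Grid`/`Frame` aliases are all hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Grid = List[List[float]]   # one band, already resampled to the common grid
Frame = Dict[str, Grid]    # band name -> grid, for a single timestamp


def stack_input_channels(
    frames_by_satellite: Dict[str, Sequence[Frame]],
    extra_channels: Sequence[Tuple[str, Grid]],
    n_recent: int = 3,
) -> List[Tuple[str, Grid]]:
    """Return an ordered list of (channel name, grid) pairs for the model input.

    frames_by_satellite maps a satellite name to its frames, oldest first.
    extra_channels holds per-pixel extras (e.g. sun and satellite angles).
    """
    channels: List[Tuple[str, Grid]] = []
    for satellite in sorted(frames_by_satellite):
        recent = frames_by_satellite[satellite][-n_recent:]
        if len(recent) < n_recent:
            raise ValueError(f"{satellite}: need {n_recent} frames, got {len(recent)}")
        # age counts down so that t-0 is the newest frame.
        for age, frame in zip(range(n_recent - 1, -1, -1), recent):
            for band in sorted(frame):
                channels.append((f"{satellite}/t-{age}/{band}", frame[band]))
    channels.extend(extra_channels)
    return channels
```

With two satellites, two bands, and two angle channels, this yields 2 × 3 × 2 + 2 = 14 input channels per pixel.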

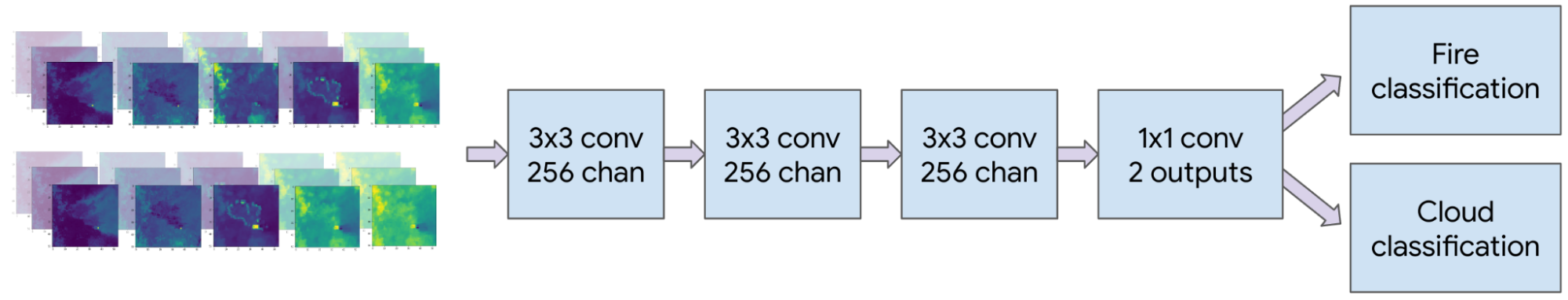

All inputs are resampled to a uniform 1 km square grid and fed into a convolutional neural network (CNN). We experimented with several architectures and settled on a CNN followed by a 1×1 convolutional layer to produce separate classification heads for fire and cloud pixels (shown below). The number of layers and their sizes are hyperparameters, which are optimized separately for Australia and North America. When a pixel is identified as cloud, we override any fire detection, since dense clouds obscure underlying fires. Even so, treating cloud classification as a separate task improves fire detection performance, as it incentivizes the system to better identify these edge cases.

CNN architecture for the Australia model; a similar architecture was used for North America. Adding a cloud classification head improves fire classification performance.
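The two-head output and the cloud override can be sketched as follows. A 1×1 convolution is equivalent to applying the same linear map to each pixel's feature vector, which is what `one_by_one_head` does here. The sigmoid activation, the 0.5 thresholds, and all function names are assumptions made for illustration; the post does not specify them.

```python
import math
from typing import List, Sequence

FeatureMap = List[List[Sequence[float]]]  # height x width x channels


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def one_by_one_head(features: FeatureMap, weights: Sequence[float], bias: float) -> List[List[float]]:
    """Apply a 1x1 convolution with one output channel, then a sigmoid."""
    return [
        [sigmoid(sum(w * f for w, f in zip(weights, pixel)) + bias) for pixel in row]
        for row in features
    ]


def fire_mask_with_cloud_override(
    fire_prob: List[List[float]],
    cloud_prob: List[List[float]],
    fire_threshold: float = 0.5,    # assumed value
    cloud_threshold: float = 0.5,   # assumed value
) -> List[List[bool]]:
    """Report fire only where the fire head fires and the cloud head does not."""
    return [
        [f >= fire_threshold and c < cloud_threshold for f, c in zip(fire_row, cloud_row)]
        for fire_row, cloud_row in zip(fire_prob, cloud_prob)
    ]
```

Both heads read the same shared features, so the network is still trained on cloud pixels even though their fire predictions are discarded at output time.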

To train the network, we use thermal anomaly data from the MODIS and VIIRS polar-orbiting satellites as labels. MODIS and VIIRS have a higher spatial resolution (750–1000 meters) than the geostationary satellites we use as inputs. However, they pass over a given location only once every few hours, which occasionally causes them to miss rapidly advancing fires. Therefore, we use MODIS and VIIRS to construct the training set, but at inference time we rely on the high-frequency imagery from geostationary satellites.

Even when attention is limited to active fires, most pixels in an image are not currently burning. To reduce the model's bias towards non-burning pixels, we upsample fire pixels in the training set and apply focal loss to encourage improvements on the rare misclassified fire pixels.
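For reference, the standard binary focal loss scales cross-entropy by a factor of (1 − p_t)^γ, so confidently correct pixels contribute very little and the rare hard pixels dominate the gradient. The sketch below is a generic per-pixel version; the `gamma` and `alpha` defaults are common illustrative choices, not values reported for this model, and the function name is hypothetical.

```python
import math


def binary_focal_loss(
    prob_fire: float,
    is_fire: bool,
    gamma: float = 2.0,    # focusing strength (illustrative default)
    alpha: float = 0.25,   # weight on the fire class (illustrative default)
    eps: float = 1e-7,
) -> float:
    """Focal loss for one pixel: -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    p = min(max(prob_fire, eps), 1.0 - eps)   # clamp to avoid log(0)
    p_t = p if is_fire else 1.0 - p           # probability assigned to the true class
    alpha_t = alpha if is_fire else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

Setting `gamma=0` and `alpha=1` recovers plain cross-entropy for a fire pixel, which makes the down-weighting of easy examples easy to compare.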

The creeping boundary of the 2022 McKinney fire and a smaller nearby fire.

Evaluation

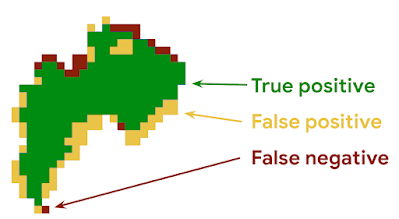

High-resolution fire signals from polar-orbiting satellites are a rich source of training data. However, such satellites use sensors similar to those on geostationary satellites, which increases the risk that systemic labeling errors (e.g., cloud-related misdetections) are incorporated into the model. To evaluate our wildfire tracker model without such bias, we compared it against fire scars (i.e., the shape of the total burnt area) measured by local authorities. Fire scars are obtained after a fire has been contained and are more reliable than real-time fire detection techniques. We compare each fire scar to the union of all fire pixels detected in real time during the wildfire to obtain an image like the one shown below. In this image, green represents correctly identified burned areas (true positive), yellow represents unburned areas detected as burned (false positive), and red represents burned areas that were missed (false negative).

Example evaluation for a single fire. The pixel size is 1 km × 1 km.

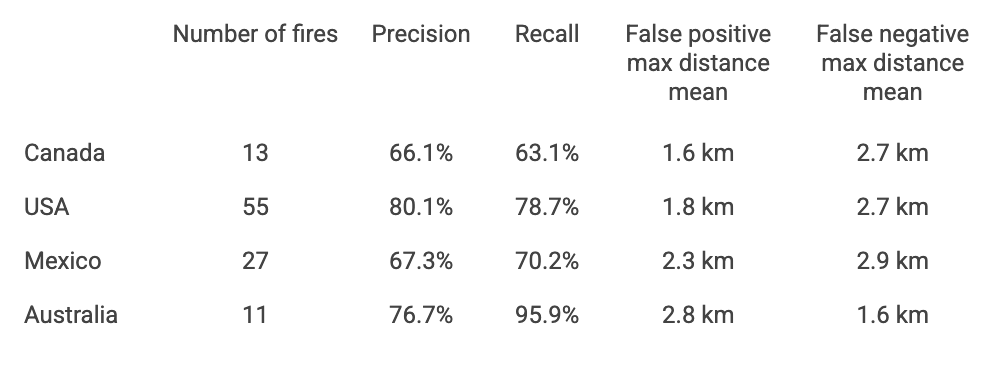
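The comparison against a fire scar reduces to set operations on grid pixels. The sketch below assumes that both the real-time detections and the official scar have already been rasterized onto the same 1 km grid as sets of (row, column) pairs; the function name and dictionary keys are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

Pixel = Tuple[int, int]  # (row, column) on the common 1 km grid


def compare_to_fire_scar(
    detections_over_time: Iterable[Set[Pixel]],
    fire_scar: Set[Pixel],
) -> Dict[str, Set[Pixel]]:
    """Classify pixels by comparing the union of detections with the scar."""
    detected: Set[Pixel] = set()
    for detected_at_t in detections_over_time:
        detected |= detected_at_t   # union over every real-time snapshot
    return {
        "true_positive": detected & fire_scar,    # green: burned and detected
        "false_positive": detected - fire_scar,   # yellow: detected, not burned
        "false_negative": fire_scar - detected,   # red: burned, never detected
    }
```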

We compared our models to the official fire scars using the precision and recall metrics. To quantify the spatial severity of classification errors, we took the maximum distance between a false positive or false negative pixel and the nearest true positive fire pixel. We then averaged each metric across all fires. The results of the evaluation are summarized below. The most severe misdetections were found to result from errors in the official data, such as a missing scar for a nearby fire.

Test set metrics comparing our models to official fire scars.

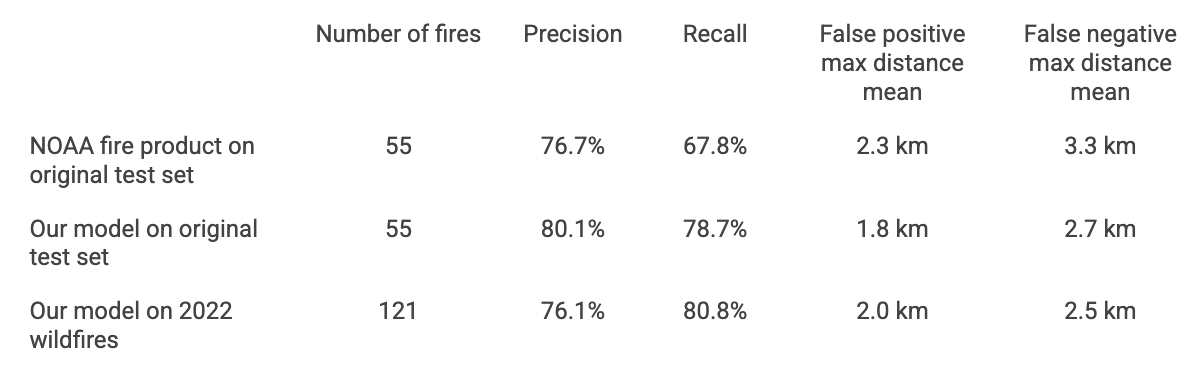
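The per-fire metrics described above can be computed from the true positive, false positive, and false negative pixel sets. This is a minimal sketch: measuring Euclidean distance between pixel centers, returning `None` for undefined cases, and the name `fire_metrics` are all assumptions, since the post does not say how distance was measured.

```python
import math
from typing import Dict, Optional, Set, Tuple

Pixel = Tuple[int, int]  # (row, column) on the common grid


def fire_metrics(
    tp: Set[Pixel],
    fp: Set[Pixel],
    fn: Set[Pixel],
    pixel_size_km: float = 1.0,
) -> Dict[str, Optional[float]]:
    """Precision, recall, and worst-case error distance for a single fire."""
    precision = len(tp) / (len(tp) + len(fp)) if (tp or fp) else None
    recall = len(tp) / (len(tp) + len(fn)) if (tp or fn) else None

    errors = fp | fn
    if not tp:
        max_error_km = None   # no true positive to measure from
    elif not errors:
        max_error_km = 0.0
    else:
        # For each misclassified pixel, find its nearest true positive,
        # then keep the largest of those nearest-neighbor distances.
        max_error_km = pixel_size_km * max(
            min(math.hypot(e[0] - t[0], e[1] - t[1]) for t in tp) for e in errors
        )
    return {"precision": precision, "recall": recall, "max_error_km": max_error_km}
```

Averaging each of these values over all fires in a test set gives the kind of summary figures reported in the table. The nested loop is quadratic in the number of pixels, which is fine for a sketch but would call for a spatial index on very large fires.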

We conducted two additional experiments on wildfires in the United States (see table below). First, we evaluated an earlier model that relies solely on the NOAA GOES-16 and GOES-17 fire products. Our model outperforms this approach on all metrics considered, demonstrating that raw satellite measurements can be used to improve on the existing NOAA fire product.

Next, we compiled a new test set consisting of all large fires in the United States in 2022. This test set was not available during training because the model was released before the fire season began. Evaluation on this test set shows performance in line with expectations from the original test set.

Comparison between fire models in the United States.

Conclusion

Boundary tracking is part of Google’s broader commitment to provide accurate and up-to-date information to people at critical times. This work demonstrates how we use satellite imagery and ML to track wildfires and provide real-time support to affected people in times of crisis. In the future, we plan to keep improving the quality of our wildfire boundary tracking, expand this service to more countries, and continue our work helping fire authorities access critical information in real time.

Acknowledgements

This work is a collaboration between teams from Google Research, Google Maps, and Crisis Response, with support from our partnerships and policy teams. We would also like to thank the fire authorities we partner with around the world.
