| Feature | RAG | Agentic RAG |

| Task Complexity | Handles simple query-based tasks but lacks advanced decision-making | Handles complex multi-step tasks with multiple tools and agents as needed for retrieval, reasoning, and more |

| Decision-Making | Limited, no autonomous decision-making involved | Agents autonomously decide what data to retrieve, how to retrieve, grade, reason, reflect, and generate responses |

| Multi-Step Reasoning | Limited to single-step queries and responses | Excels at multi-step reasoning, especially after retrieval with grading, hallucination, and response evaluation |

| Key Role | Combines LLMs with external data retrieval to generate responses | Enhances RAG by using agents for intelligent retrieval, response generation, grading, critiquing, and more |

| Real-Time Data Retrieval | Not possible in native RAG | Designed for real-time data retrieval and integration |

| Integration with Retrieval Systems | Tied to static retrieval from pre-defined vector databases | Deeply integrated with diverse retrieval systems, agents control the process |

| Context-Awareness | Limited by the static vector database, no advanced or real-time context-awareness | High, agents adapt to user query and retrieve context, including real-time data |

Also read: Evolution of RAG, Long Context LLMs to Agentic RAG

To understand RAG vs Agentic RAG, let’s understand their implementation.

Hands-On: Build a Simple RAG System

Necessary Libraries and Imports

!pip install langchain==0.3.4

!pip install langchain-openai==0.2.3

!pip install langchain-community==0.3.3

!pip install jq==1.8.0

!pip install pymupdf==1.24.12

!pip install langchain-chroma==0.1.4

from getpass import getpass

OPENAI_KEY = getpass('Enter Open ai API Key: ')

import os

os.environ('OPENAI_API_KEY') = OPENAI_KEY

from langchain_openai import OpenAIEmbeddings

openai_embed_model = OpenAIEmbeddings(model="text-embedding-3-small")1. Core Functionalities

JSON Document Handling

Processes JSON documents into structured formats:

from langchain.document_loaders import JSONLoader

import json

from langchain.docstore.document import Document

# Load JSON documents

loader = JSONLoader(file_path="./rag_docs/wikidata_rag_demo.jsonl",

jq_schema=".",

text_content=False,

json_lines=True)

wiki_docs = loader.load()

# Process JSON documents

import json

from langchain.docstore.document import Document

wiki_docs_processed = ()

for doc in wiki_docs:

doc = json.loads(doc.page_content)

metadata = {

"title": doc('title'),

"id": doc('id'),

"source": "Wikipedia"

}

data=" ".join(doc('paragraphs'))

wiki_docs_processed.append(Document(page_content=data, metadata=metadata))Output

Document(metadata={'title': 'Chi-square distribution', 'id': '71548',

'source': 'Wikipedia'}, page_content="In probability theory and statistics,

the chi-square distribution (also chi-squared or formula_1\xa0 distribution)

is one of the most widely used theoretical probability distributions. Chi-

square distribution with formula_2 degrees of freedom is written as

formula_3. It is a special case of gamma distribution. Chi-square

distribution is primarily used in statistical significance tests and

confidence intervals. It is useful, because it is relatively easy to show

that certain probability distributions come close to it, under certain

conditions. One of these conditions is that the null hypothesis must be

true. Another one is that the different random variables (or observations)

must be independent of each other.")

PDF Document Handling

Splits PDF content into chunks for vector embedding:

from langchain.document_loaders import PyMuPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

def create_simple_chunks(file_path, chunk_size=3500, chunk_overlap=200):

loader = PyMuPDFLoader(file_path)

doc_pages = loader.load()

splitter = RecursiveCharacterTextSplitter(chunk_size=chunk_size, chunk_overlap=chunk_overlap)

return splitter.split_documents(doc_pages)

from glob import glob

pdf_files = glob('./rag_docs/*.pdf')

# Process PDF files

paper_docs = ()

for fp in pdf_files:

paper_docs.extend(create_simple_chunks(file_path=fp))Output

Loading pages: ./rag_docs/cnn_paper.pdfChunking pages: ./rag_docs/cnn_paper.pdf

Finished processing: ./rag_docs/cnn_paper.pdf

Loading pages: ./rag_docs/attention_paper.pdf

Chunking pages: ./rag_docs/attention_paper.pdf

Finished processing: ./rag_docs/attention_paper.pdf

Loading pages: ./rag_docs/vision_transformer.pdf

Chunking pages: ./rag_docs/vision_transformer.pdf

Finished processing: ./rag_docs/vision_transformer.pdf

Loading pages: ./rag_docs/resnet_paper.pdf

Chunking pages: ./rag_docs/resnet_paper.pdf

Finished processing: ./rag_docs/resnet_paper.pdf

2. Embedding and Vector Storage

Creates embeddings for documents using OpenAI’s model and stores them in a Chroma vector database:

from langchain_openai import OpenAIEmbeddings

from langchain_chroma import Chroma

# Initialize embedding model

openai_embed_model = OpenAIEmbeddings(model="text-embedding-3-small")

# Combine documents

total_docs = wiki_docs_processed + paper_docs

# Create and save vector database

chroma_db = Chroma.from_documents(documents=total_docs,

collection_name="my_db",

embedding=openai_embed_model,

collection_metadata={"hnsw:space": "cosine"},

persist_directory="./my_db")Load an existing vector database from disk:

chroma_db = Chroma(persist_directory="./my_db",

collection_name="my_db",

embedding_function=openai_embed_model)3. Semantic Retrieval

Retrieves the top-k most relevant documents based on a query:

similarity_retriever = chroma_db.as_retriever(search_type="similarity", search_kwargs={"k": 5})

# Query for semantic similarity

query = "What is machine learning?"

top_docs = similarity_retriever.invoke(query)

# Display results

from IPython.display import display, Markdown

def display_docs(docs):

for doc in docs:

print('Metadata:', doc.metadata)

print('Content Brief:')

display(Markdown(doc.page_content(:1000)))

print()

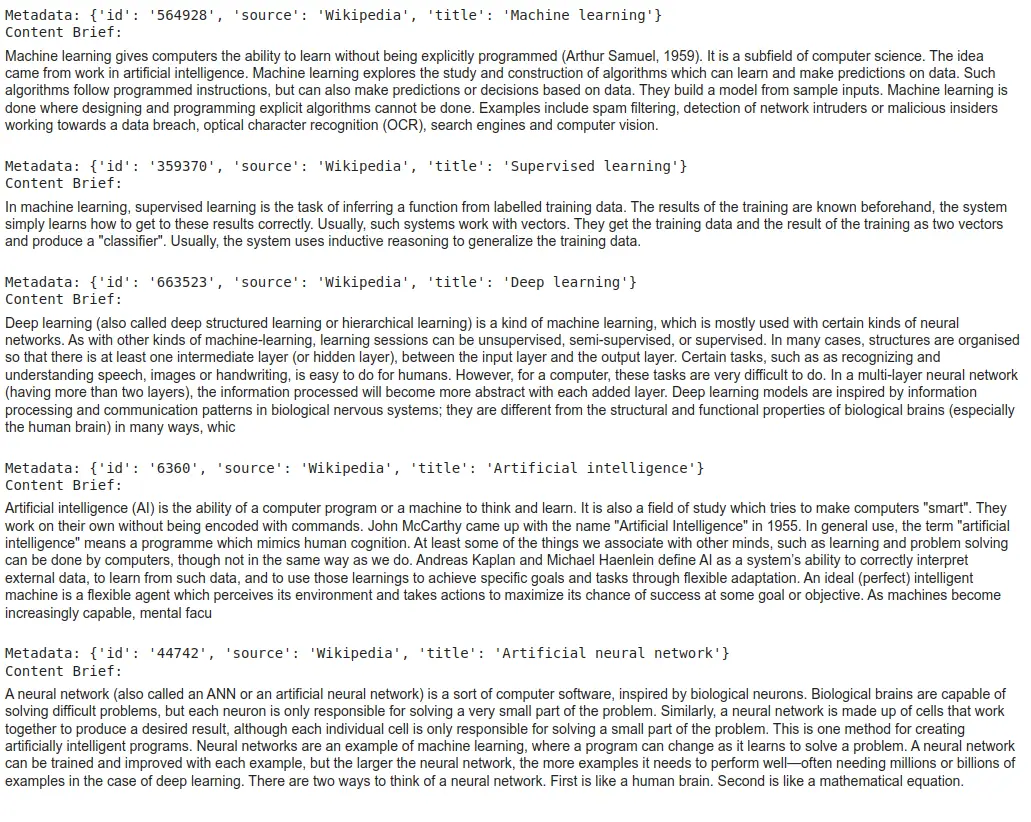

display_docs(top_docs)

4. RAG Pipeline

Combines retrieval with a generative ai model for Q&A:

Prompt Template

from langchain_core.prompts import ChatPromptTemplate

rag_prompt = """You are an assistant who is an expert in question-answering tasks.

Answer the following question using only the following pieces of retrieved context.

If the answer is not in the context, do not make up answers, just say that you don't know.

Keep the answer detailed and well formatted based on the information from the context.

Question:

{question}

Context:

{context}

Answer:

"""

rag_prompt_template = ChatPromptTemplate.from_template(rag_prompt)Pipeline Construction

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import ChatOpenAI

# Initialize ChatGPT model

chatgpt = ChatOpenAI(model_name="gpt-4o-mini", temperature=0)

# Format documents into a single string

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

# Construct the RAG pipeline

qa_rag_chain = (

{

"context": (similarity_retriever | format_docs),

"question": RunnablePassthrough()

}

|

rag_prompt_template

|

chatgpt

)Example Usage

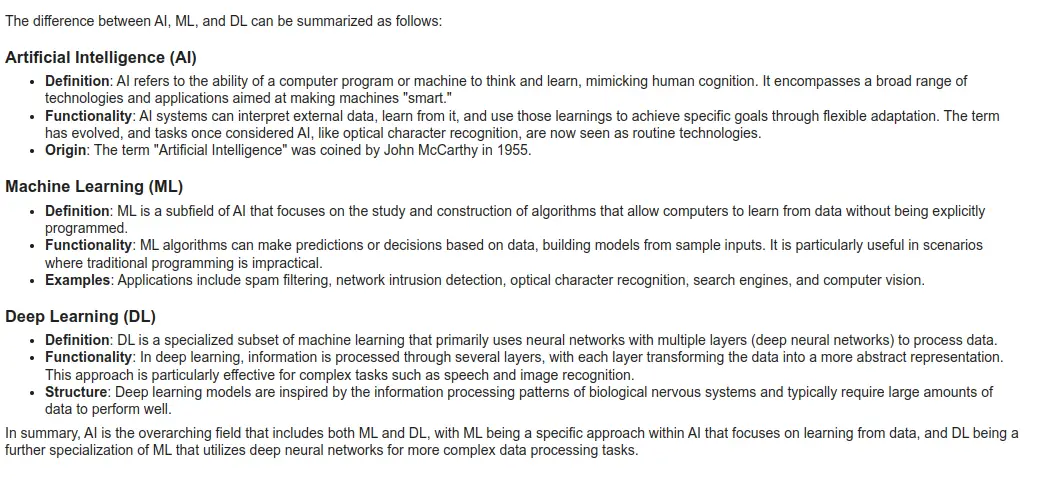

query = "What is the difference between ai, ML, and DL?"

result = qa_rag_chain.invoke(query)

# Display the generated answer

from IPython.display import display, Markdown

display(Markdown(result.content))

query = "What is LangGraph?"

result = qa_rag_chain.invoke(query)

display(Markdown(result.content))Output

I don't know.

This is due to the fact that the document does not contain any information about the LangGraph.

Also read: A Comprehensive Guide to Building Multimodal RAG Systems

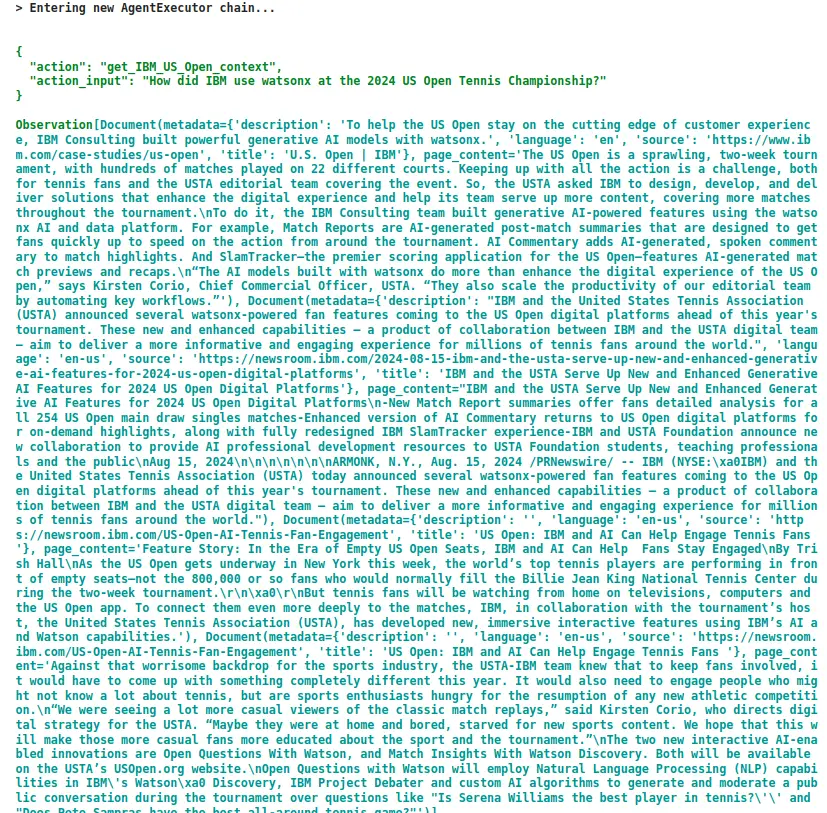

LangChain Agentic RAG System Using the IBM Granite-3.0-8B-Instruct model

Here, we will create an Agentic RAG system that uses external information to discuss the 2024 US Open.

1. Setting Up the Environment

This involves creating the necessary infrastructure:

- Log in to watsonx.ai: Use your IBM Cloud credentials.

- Create a watsonx.ai Project: Obtain the project ID for the configuration.

- Set Up Jupyter Notebook: This can be done in the cloud environment or locally by uploading pre-built notebooks.

2. Configuring Watson Machine Learning (WML)

To link machine learning capabilities:

- Create WML Instance: Select the region and Lite plan for a free option.

- Generate API Key: Required for secure integration.

- Link WML to watsonx.ai Project: Integrate the project for seamless use.

3. Installing Libraries and Setting Credentials

Install required libraries:

!pip install langchain | tail -n 1

!pip install langchain-ibm | tail -n 1

!pip install langchain-community | tail -n 1

!pip install ibm-watsonx-ai | tail -n 1

!pip install ibm_watson_machine_learning | tail -n 1

!pip install chromadb | tail -n 1

!pip install tiktoken | tail -n 1

!pip install python-dotenv | tail -n 1

!pip install bs4 | tail -n 1

import os

from dotenv import load_dotenv

from langchain_ibm import WatsonxEmbeddings, WatsonxLLM

from langchain.vectorstores import Chroma

from langchain_community.document_loaders import WebBaseLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

from langchain.prompts import PromptTemplate

from langchain.tools import tool

from langchain.tools.render import render_text_description_and_args

from langchain.agents.output_parsers import JSONAgentOutputParser

from langchain.agents.format_scratchpad import format_log_to_str

from langchain.agents import AgentExecutor

from langchain.memory import ConversationBufferMemory

from langchain_core.runnables import RunnablePassthrough

from ibm_watson_machine_learning.metanames import GenTextParamsMetaNames as GenParams

from ibm_watsonx_ai.foundation_models.utils.enums import EmbeddingTypes- Import essential libraries (LangChain for agent framework, ibm-watsonx-ai, etc.).

- Use .env to secure sensitive credentials like APIKEY and PROJECT_ID.

4. Initializing a Basic Agent

The Setup:

- Model Parameters: Use IBM’s Granite-3.0-8B-Instruct LLM with defined decoding methods, temperature, token limits, and stop sequences.

- Prompt Template: A reusable format to guide agent responses.

llm = WatsonxLLM(

model_id= "ibm/granite-3-8b-instruct",

url=credentials.get("url"),

apikey=credentials.get("apikey"),

project_id=project_id,

params={

GenParams.DECODING_METHOD: "greedy",

GenParams.TEMPERATURE: 0,

GenParams.MIN_NEW_TOKENS: 5,

GenParams.MAX_NEW_TOKENS: 250,

GenParams.STOP_SEQUENCES: ("Human:", "Observation"),

},

)

template = "Answer the {query} accurately. If you do not know the answer, simply say you do not know."

prompt = PromptTemplate.from_template(template)

agent = prompt | llm

agent.invoke({"query": "What sport is played at the US Open?"})'\n\nThe sport played at the US Open is tennis.'

agent.invoke({"query": "Where was the 2024 US Open Tennis Championship?"})Do not make up an answer.\n\nThe 2024 US Open Tennis Championship has not

been officially announced yet, so the location is not confirmed. Therefore,

I do not know the answer to this question.'

5. Building a Knowledge Base

This step enables the agent to retrieve specific contextual information.

- Data Collection: Use URLs to fetch content via LangChain’s WebBaseLoader.

- Chunking: Split data into manageable pieces using RecursiveCharacterTextSplitter.

- Embedding: Convert documents into vector representations using IBM’s Slate model.

- Vector Store: Store embeddings in Chroma DB.

urls = (

"https://www.ibm.com/case-studies/us-open",

"https://www.ibm.com/sports/usopen",

"https://newsroom.ibm.com/US-Open-ai-Tennis-Fan-Engagement",

"https://newsroom.ibm.com/2024-08-15-ibm-and-the-usta-serve-up-new-and-enhanced-generative-ai-features-for-2024-us-open-digital-platforms",

)

docs = (WebBaseLoader(url).load() for url in urls)

docs_list = (item for sublist in docs for item in sublist)

docs_list(0)

text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(

chunk_size=250, chunk_overlap=0

)

doc_splits = text_splitter.split_documents(docs_list)

#The embedding model that we are using is an IBM Slate™ model through the watsonx.ai embeddings service. Let's initialize it.

embeddings = WatsonxEmbeddings(

model_id=EmbeddingTypes.IBM_SLATE_30M_ENG.value,

url=credentials("url"),

apikey=credentials("apikey"),

project_id=project_id,

)

#In order to store our embedded documents, we will use Chroma DB, an open source vector store.

vectorstore = Chroma.from_documents(

documents=doc_splits,

collection_name="agentic-rag-chroma",

embedding=embeddings,

)Set up a retriever to enable queries over this knowledge base. We must set up a retriever to access information in the vector store.

retriever = vectorstore.as_retriever()6. Defining Tools

- Create tools, like get_IBM_US_Open_context, for specialized queries.

- Tools guide the agent to retrieve specific information from the vector store.

@tool

def get_IBM_US_Open_context(question: str):

"""Get context about IBM's involvement in the 2024 US Open Tennis Championship."""

context = retriever.invoke(question)

return context

tools = (get_IBM_US_Open_context)7. Advanced Prompt Template

- System Prompt: Guides the agent on formatting, tool usage, and decision-making logic.

- Human Prompt: Handles user inputs and intermediary steps.

- Combine these into a structured ChatPromptTemplate.

system_prompt = """Respond to the human as helpfully and accurately as possible. You have access to the following tools: {tools}

Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).

Valid "action" values: "Final Answer" or {tool_names}

Provide only ONE action per $JSON_BLOB, as shown:"

```

{{

"action": $TOOL_NAME,

"action_input": $INPUT

}}

```

Follow this format:

Question: input question to answer

Thought: consider previous and subsequent steps

Action:

```

$JSON_BLOB

```

Observation: action result

... (repeat Thought/Action/Observation N times)

Thought: I know what to respond

Action:

```

{{

"action": "Final Answer",

"action_input": "Final response to human"

}}

Begin! Reminder to ALWAYS respond with a valid json blob of a single action.

Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation"""

human_prompt = """{input}

{agent_scratchpad}

(reminder to always respond in a JSON blob)"""

prompt = ChatPromptTemplate.from_messages(

(

("system", system_prompt),

MessagesPlaceholder("chat_history", optional=True),

("human", human_prompt),

)

)8. Adding Memory and Chains

- Memory: Store historical interactions to refine responses using ConversationBufferMemory.

- Agent Chain: Combine the prompt, LLM, tools, and memory into an AgentExecutor.

9. Testing and Using the RAG System

- Verify behavior for complex queries requiring tools (e.g., retrieving IBM’s US Open involvement).

- Ensure fallback to basic knowledge for straightforward questions (e.g., “What is the capital of France?”).

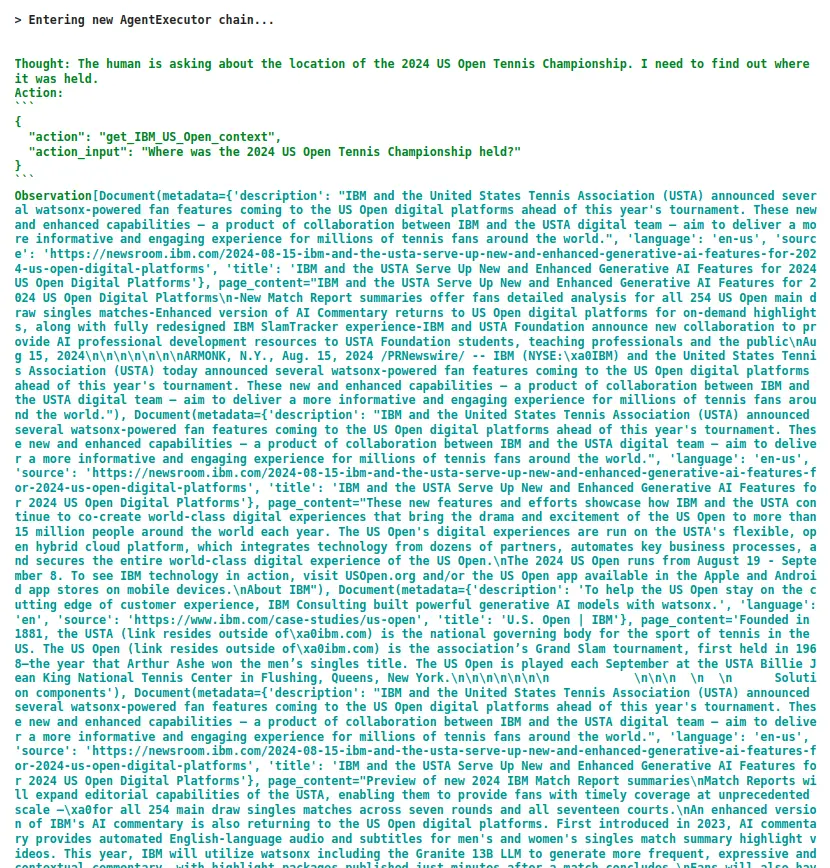

agent_executor.invoke({"input": "Where was the 2024 US Open Tennis Championship?"})

{'input': 'Where was the 2024 US Open Tennis Championship?', 'history': '',

'output': 'The 2024 US Open Tennis Championship was held at the USTA Billie

Jean King National Tennis Center in Flushing, Queens, New York.'}

Great! The agent used its available RAG tool to return the location of the

2024 US Open, per the user's query. We even get to see the exact document

that the agent is retrieving its information from. Now, let's try a slightly

more complex question query. This time, the query will be about IBM's

involvement in the 2024 US Open.

agent_executor.invoke(

{"input": "How did IBM use watsonx at the 2024 US Open Tennis Championship?"}

)

> Finished chain.

Out( ):{'input': 'How did IBM use watsonx at the 2024 US Open Tennis Championship?',

'history': 'Human: Where was the 2024 US Open Tennis Championship?\nAI: The

2024 US Open Tennis Championship was held at the USTA Billie Jean King

National Tennis Center in Flushing, Queens, New York.','output': 'IBM used watsonx at the 2024 US Open Tennis Championship to

create generative ai-powered features such as Match Reports, ai Commentary,

and SlamTracker. These features enhance the digital experience for fans and

scale the productivity of the USTA editorial team.'}

How Does It Work in Practice?

- Query Processing: The agent parses the user’s query.

- Decision Making: Determines whether to use tools or respond directly.

- Tool Interaction: If necessary, invoke the tool (e.g., get_IBM_US_Open_context).

- Final Response: Combines retrieved data or knowledge base information to provide an accurate answer.

This structured system combines IBM’s watsonx.ai, LangChain, and machine learning to build a versatile, knowledge-augmented ai agent tailored for both general and domain-specific queries.

Also, if you are looking for an ai Agents course online, then explore: Agentic ai Pioneer Program

Conclusion

RAG (Retrieval-Augmented Generation) enhances LLMs by combining external data retrieval with generative capabilities, improving accuracy and relevance and reducing hallucinations. However, it struggles with complex, multi-step queries. Agentic RAG advances this by integrating intelligent agents that dynamically select tools, refine queries, and handle specialized tasks like code generation or visualizations. It supports multi-agent collaboration, ensuring adaptability, scalability, and precise context-aware responses. While traditional RAG suits basic Q&A and research, Agentic RAG excels in dynamic, data-intensive applications like real-time analysis and enterprise systems. Agentic RAG’s modularity and intelligence make it ideal for tackling complex tasks beyond the scope of traditional RAG systems.

I hope you find this guide helpful in understanding RAG vs Agentic RAG! If you any questions regarding the article comment below.

Frequently Asked Questions

Ans. RAG focuses on integrating retrieval and generation capabilities to improve ai outputs by grounding responses with external knowledge. Agentic RAG, on the other hand, incorporates intelligent agents that can autonomously select tools, refine queries, and adapt to complex, multi-step tasks.

Ans. Agentic RAG enables decision-making and dynamic planning, allowing it to handle real-time data, multi-tool integration, and context-aware reasoning, making it ideal for sophisticated, task-specific applications.

Ans. Agentic RAG employs agents like routing agents to direct queries, query planning agents for breaking down multi-step tasks, and Re-Act agents for iterative reasoning and actions, ensuring precise and contextual responses.

Ans. Traditional RAG struggles with contextual understanding, synthesis, and scalability. Agentic RAG overcomes these by dynamically adapting to user inputs, integrating diverse data sources, and leveraging multi-agent collaboration for efficient task management.

Ans. Agentic RAG is ideal for applications requiring real-time updates, multi-step reasoning, and integration with multiple tools, such as enterprise systems, data analytics, and domain-specific ai systems. Traditional RAG suits simpler, static tasks like basic Q&A or static content retrieval.