Post-training quantization (PTQ) reduces the size and improves the speed of large language models (LLMs) to make them more practical for real-world use. These models require large volumes of data, but strongly skewed and highly heterogeneous data distributions present considerable difficulties during quantization. Such distributions inevitably widen the quantization range, so most values are represented less precisely and overall model accuracy drops. Although PTQ methods aim to address these problems, distributing data effectively across the whole quantization space remains a challenge, which limits the optimization potential and hinders broader deployment in resource-constrained environments.
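The effect of a widened quantization range can be seen in a small sketch. The toy data, the symmetric 4-bit scheme, and the helper names below are illustrative assumptions, not taken from the paper; the point is only that a single outlier stretches the range and coarsens the grid for every other value.

```python
def quantize_dequantize(values, bits=4):
    """Symmetric uniform quantization followed by dequantization."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit
    scale = max(abs(v) for v in values) / qmax  # step size set by the largest value
    out = []
    for v in values:
        q = max(-qmax, min(qmax, round(v / scale)))  # round and clamp to the grid
        out.append(q * scale)
    return out


def mean_abs_error(values, bits=4):
    """Average reconstruction error after a quantize/dequantize round trip."""
    recon = quantize_dequantize(values, bits)
    return sum(abs(a - b) for a, b in zip(values, recon)) / len(values)


# Hypothetical toy data: values clustered in [-0.5, 0.5].
typical = [i / 100 for i in range(-50, 51)]
# The same values plus one large outlier that stretches the range.
with_outlier = typical + [20.0]

err_plain = mean_abs_error(typical)
err_outlier = mean_abs_error(with_outlier)
print(f"error without outlier: {err_plain:.4f}")
print(f"error with outlier:    {err_outlier:.4f}")
```

With the outlier present, the step size grows so much that every clustered value collapses onto the zero level, which is the loss of precision "for most values" described above.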

Current post-training quantization (PTQ) methods for large language models (LLMs) fall into two groups: weight-only and weight-activation quantization. Weight-only methods, such as GPTQ, AWQ, and OWQ, try to reduce memory usage by minimizing quantization errors or handling activation outliers, but they cannot fully optimize all values. Techniques like QuIP and QuIP# use random matrices and vector quantization, but remain limited in handling extreme data distributions. Weight-activation quantization aims to speed up inference by quantizing both weights and activations. However, methods such as SmoothQuant, ZeroQuant, and QuaRot struggle with the dominance of activation outliers, which causes errors in most values. In general, these methods rely on heuristic approaches and fail to optimize the data distribution across the entire quantization space, which limits performance and efficiency.

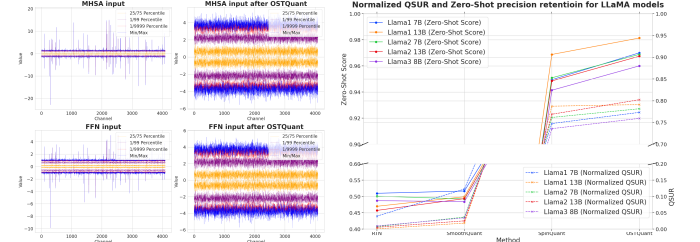

To address the limitations of heuristic post-training quantization (PTQ) methods and the lack of a metric for evaluating quantization efficiency, researchers from Houmo AI, Nanjing University, and Southeast University proposed the Quantization Space Utilization Rate (QSUR). QSUR measures how effectively weight and activation distributions use the quantization space, offering a quantitative basis for evaluating and improving PTQ methods. The metric uses statistical properties such as eigenvalue decomposition and confidence ellipsoids to calculate the hypervolume of weight and activation distributions. QSUR analysis shows how linear and rotational transformations affect quantization efficiency, with specific techniques reducing inter-channel disparities and minimizing outliers to improve performance.
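To make the idea of a utilization rate concrete, here is a rough two-dimensional proxy. It is a sketch under stated assumptions and not the paper's exact definition: the function name `qsur_proxy_2d`, the 99% confidence level, and the choice of a zero-centered bounding square as the "quantization space" are all assumptions made for illustration. The ellipse area uses the square root of the covariance determinant, which equals the product of the square roots of the covariance eigenvalues.

```python
import math
import random


def qsur_proxy_2d(points, confidence=0.99):
    """Ratio of the confidence-ellipse area to the bounding-square area."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    vxx = sum((p[0] - mx) ** 2 for p in points) / n
    vyy = sum((p[1] - my) ** 2 for p in points) / n
    vxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    det = vxx * vyy - vxy ** 2  # product of the two covariance eigenvalues
    # Chi-square quantile with 2 degrees of freedom has a closed form.
    r2 = -2.0 * math.log(1.0 - confidence)
    ellipse_area = math.pi * r2 * math.sqrt(det)
    # Assumed quantization space: a square wide enough for the largest coordinate.
    side = 2.0 * max(max(abs(p[0]), abs(p[1])) for p in points)
    return ellipse_area / side ** 2


rng = random.Random(0)
# Hypothetical activations: one channel has 100x the spread of the other.
skewed = [(rng.gauss(0, 10.0), rng.gauss(0, 0.1)) for _ in range(2000)]
# Per-channel scaling that equalizes the spread of both channels.
balanced = [(x / 10.0, y / 0.1) for x, y in skewed]

before = qsur_proxy_2d(skewed)
after = qsur_proxy_2d(balanced)
print(f"proxy before scaling: {before:.4f}")
print(f"proxy after scaling:  {after:.4f}")
```

In this toy setting, equalizing the two channels makes the distribution fill far more of the available square, which mirrors the claim that reducing inter-channel disparities improves quantization efficiency.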

The researchers proposed the OSTQuant framework, which combines orthogonal and scaling transformations to optimize the weight and activation distributions of large language models. The approach integrates equivalent transformation pairs of diagonal scaling and orthogonal matrices, ensuring computational efficiency while preserving equivalence in quantization. It reduces overfitting without compromising the output of the original network at inference time. OSTQuant uses inter-block learning to propagate transformations globally across LLM blocks, and employs techniques such as Weight Outlier Minimization Initialization (WOMI) for effective initialization. The method achieves higher QSUR, reduces runtime overhead, and improves quantization performance in LLMs.
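The equivalence property of such transformation pairs can be checked numerically. The sketch below assumes a single linear layer `Y = X W`, a fixed 2x2 rotation, and a hand-picked diagonal scaling; the matrices and helper names are hypothetical, and in the actual framework the transformations are optimized rather than fixed. The activations are multiplied by `O S` and the weights by the inverse `S^-1 O^T`, so the product is unchanged.

```python
import math


def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


theta = math.pi / 4
# Orthogonal matrix (a rotation), so its inverse is its transpose.
O = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta), math.cos(theta)]]
# Diagonal scaling and its inverse.
S = [[2.0, 0.0], [0.0, 0.5]]
S_inv = [[0.5, 0.0], [0.0, 2.0]]

X = [[8.0, 0.1], [-6.0, 0.2]]    # toy activations with one dominant channel
W = [[0.5, -1.0], [2.0, 0.25]]   # toy weights

Y_ref = matmul(X, W)                           # original layer output
X_t = matmul(matmul(X, O), S)                  # transformed activations
W_t = matmul(matmul(S_inv, transpose(O)), W)   # inverse transform folded into weights
Y_t = matmul(X_t, W_t)                         # output after the transformation pair

max_diff = max(abs(a - b) for ra, rb in zip(Y_ref, Y_t) for a, b in zip(ra, rb))
print(f"largest output difference: {max_diff:.2e}")
```

Because the inverse transform can be merged into the weights ahead of time, the full-precision output stays the same while the tensors that actually get quantized have a different, ideally flatter, distribution.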

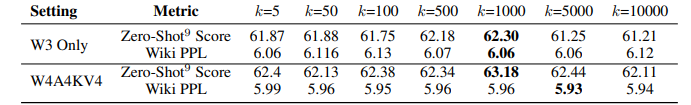

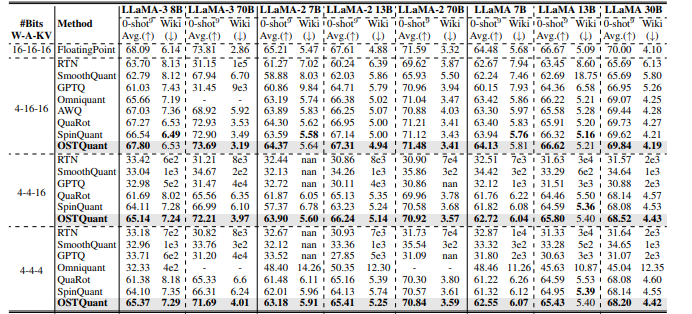

For evaluation, the researchers applied OSTQuant to the LLaMA family (LLaMA-1, LLaMA-2, and LLaMA-3) and assessed performance using perplexity on WikiText2 and nine zero-shot tasks. OSTQuant consistently outperformed methods such as SmoothQuant, GPTQ, QuaRot, and SpinQuant, retaining at least 99.5% of floating-point accuracy under the 4-16-16 configuration (weight, activation, and KV-cache bit widths) and significantly narrowing performance gaps. LLaMA-3-8B incurred only a 0.29-point drop on zero-shot tasks, compared with losses exceeding 1.55 points for other methods. In harder scenarios, OSTQuant beat SpinQuant, gaining as much as 6.53 points on LLaMA-2 7B in the 4-4-16 configuration. The KL-Top loss function fit semantics better and reduced noise, improving performance and reducing the gap in the W4A4KV4 setting by 32%. These results show that OSTQuant handles outliers more effectively and keeps distributions less biased.
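The article does not spell out how the KL-Top loss is computed. One plausible reading, shown here purely as an assumption, is a KL divergence restricted to the top-k classes ranked by the full-precision model, so that the long tail of low-probability logits contributes no noise. The function name `kl_top_loss`, the value of `k`, and the toy logits are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def kl_top_loss(fp_logits, q_logits, k=3):
    """KL(p_fp || p_q) over the k classes ranked highest by the full-precision model."""
    top = sorted(range(len(fp_logits)), key=lambda i: fp_logits[i], reverse=True)[:k]
    p = softmax([fp_logits[i] for i in top])  # full-precision distribution on top-k
    q = softmax([q_logits[i] for i in top])   # quantized distribution on the same classes
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


# Toy logits over a six-token vocabulary.
fp_logits = [4.0, 2.5, 2.0, -1.0, -3.0, -3.5]
q_logits = [3.6, 2.9, 1.8, 0.5, -2.0, -4.0]

loss_same = kl_top_loss(fp_logits, fp_logits)
loss_quant = kl_top_loss(fp_logits, q_logits)
print(f"loss against itself:         {loss_same:.6f}")
print(f"loss against quantized model: {loss_quant:.6f}")
```

Restricting the comparison to the top-k classes means that changes in the tail logits (indices 3 to 5 here) do not affect the loss at all, which is one way a loss could preserve semantics while ignoring noise.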

In the end, the proposed method optimized data distributions in the quantization space using the QSUR metric and the KL-Top loss function, improving the performance of large language models. With little calibration data, it reduced noise and preserved semantic richness compared with existing quantization techniques, achieving high performance on multiple benchmarks. This framework can serve as a basis for future work on improving quantization techniques and making models more efficient for applications that need high computational efficiency in resource-constrained environments.

Check out the Paper. All credit for this research goes to the researchers of this project.


Divyesh is a consulting intern at Marktechpost. He is pursuing a BTech in Agricultural and Food Engineering from the Indian Institute of Technology, Kharagpur. He is a data science and machine learning enthusiast who wants to integrate these leading technologies into the agricultural domain and solve challenges.
