Explainable AI (XAI) marks a shift in neural network research toward explaining the decision-making processes of neural networks, which are notorious black boxes. Within XAI, feature attribution, mechanistic interpretability, concept-based explainability, and training data attribution (TDA) methods have gained popularity. Today we focus on TDA, whose objective is to relate a model's prediction on a specific sample to its training data. Beyond model explainability, TDA also supports other vital tasks such as model debugging, data summarization, machine unlearning, dataset selection, and fact tracing. Research on TDA is thriving, but little work has addressed how to evaluate the attributions themselves. Several independent metrics have been proposed to assess the quality of TDA in different contexts; however, they do not offer a systematic, unified comparison that can earn the trust of the research community. What is needed is a unified framework for TDA evaluation (and beyond).
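To make the idea of relating a prediction to training data concrete, here is a minimal sketch of one simple family of TDA methods: gradient-similarity scoring, in the spirit of a single-checkpoint TracIn. It is a generic illustration under stated assumptions, not a method taken from Quanda: the model is assumed to be a tiny logistic regression, and all function names are hypothetical. Each training sample is scored by the dot product between its loss gradient and the test sample's loss gradient.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss_grad(w: Sequence[float], x: Sequence[float], y: int) -> List[float]:
    """Gradient of the binary cross-entropy loss w.r.t. the weights w
    for a logistic regression model p = sigmoid(w . x)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    return [(p - y) * xi for xi in x]


def grad_dot_attribution(w, train_x, train_y, test_x, test_y) -> List[float]:
    """Hypothetical helper: one score per training sample.

    A positive score means a small gradient step on that training sample
    would (to first order) also lower the loss on the test sample; a
    negative score means it would raise it.
    """
    g_test = loss_grad(w, test_x, test_y)
    scores = []
    for x, y in zip(train_x, train_y):
        g_train = loss_grad(w, x, y)
        scores.append(sum(a * b for a, b in zip(g_train, g_test)))
    return scores


if __name__ == "__main__":
    weights = [0.5, -0.5]  # assumed, already-trained weights
    train_x = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]
    train_y = [1, 0, 0]
    print(grad_dot_attribution(weights, train_x, train_y, [1.0, 0.0], 1))
```

In this toy setup the first training sample, which looks like the test input and shares its label, receives a positive score, while the third sample (similar input, opposite label) receives a negative one.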

The Fraunhofer Institute for Telecommunications has proposed Quanda to bridge this gap. It is a Python toolkit that provides a comprehensive set of evaluation metrics and a uniform interface for seamless integration with existing TDA implementations. It is easy to use, thoroughly tested, and available as a library on PyPI. Quanda builds on the PyTorch Lightning, HuggingFace Datasets, Torchvision, Torcheval, and Torchmetrics libraries, so it fits into users' pipelines without reimplementing features those libraries already provide.

TDA evaluation in Quanda is comprehensive. It starts with a standard interface for many TDA methods that are otherwise scattered across independent repositories. It includes several metrics for different tasks, enabling thorough evaluation and comparison. Standard benchmarks are provided as precomputed evaluation setups, so users can reproduce results reliably. Quanda differs from contemporaries such as Captum, TransformerLens, and Alibi Explain in the breadth and comparability of its evaluation metrics. Previous evaluation efforts, such as downstream-task and heuristic evaluations, fall short because they are fragmented, rely on one-off comparisons, and are not always reliable.

The Quanda library is organized into functional units exposed through modular interfaces. It has three main components: explainers, evaluation metrics, and benchmarks. Each component is implemented as a base class that defines the minimum functionality needed to create a new instance. This base-class design lets users evaluate even novel TDA methods by adapting their implementation to the base explainer interface.
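The base-class pattern described above can be sketched as follows. This is a hypothetical illustration of the design idea, not Quanda's actual class hierarchy or signatures: `BaseExplainer`, its constructor arguments, and `NegativeDistanceExplainer` are names invented for this example. The base class fixes the minimal interface (hold a model and training data, implement `explain`), and a new TDA method only has to fill in that one method.

```python
from abc import ABC, abstractmethod
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[List[float], int]  # (input features, label)


class BaseExplainer(ABC):
    """Hypothetical minimal base class: stores the model and training data
    and requires subclasses to implement explain()."""

    def __init__(self, model: Callable[[List[float]], List[float]],
                 train_dataset: Sequence[Sample]):
        self.model = model
        self.train_dataset = train_dataset

    @abstractmethod
    def explain(self, test_sample: List[float], target: int) -> List[float]:
        """Return one attribution score per training sample."""


class NegativeDistanceExplainer(BaseExplainer):
    """A toy 'novel' TDA method: score each training sample by the negative
    squared Euclidean distance to the test input in the model's feature
    space. This toy version ignores `target`."""

    def explain(self, test_sample: List[float], target: int) -> List[float]:
        f_test = self.model(test_sample)
        scores = []
        for x, _label in self.train_dataset:
            f_train = self.model(x)
            scores.append(-sum((a - b) ** 2 for a, b in zip(f_train, f_test)))
        return scores


def double_features(x: List[float]) -> List[float]:
    """Hypothetical stand-in for a model's feature extractor."""
    return [2.0 * v for v in x]


if __name__ == "__main__":
    train = [([0.0, 0.0], 0), ([1.0, 1.0], 1)]
    explainer = NegativeDistanceExplainer(double_features, train)
    print(explainer.explain([0.9, 1.0], target=1))
```

Because `explain` is abstract, the base class itself cannot be instantiated; any evaluation code written against `BaseExplainer` works unchanged with every subclass, which is what makes novel methods plug-in compatible.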

Each Explainer wraps a specific TDA method together with the model architecture, model weights, training dataset, and so on. Metrics summarize the performance and reliability of a TDA method in a compact form. Quanda's metrics are stateful: an update method incorporates each new batch of test samples. Metrics fall into three categories: ground truth, downstream evaluation, and heuristic. Finally, Benchmarks enable standardized comparisons between different TDA methods.
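The stateful update-then-compute pattern, and how a benchmark uses it to compare methods on a fixed setup, can be sketched as below. This is not Quanda's API: `TopOneClassMatchMetric` and `run_benchmark` are hypothetical names, the metric is a toy check (does the top-scoring training sample share the test sample's label?), and explanations are assumed to be precomputed plain lists rather than tensors produced from real models.

```python
from typing import Dict, List, Sequence


class TopOneClassMatchMetric:
    """Hypothetical stateful metric: fraction of test samples whose
    highest-scoring training sample has the same label as the test sample.
    update() accumulates counts batch by batch; compute() reads them out."""

    def __init__(self, train_labels: Sequence[int]):
        self.train_labels = list(train_labels)
        self.reset()

    def reset(self) -> None:
        self.hits = 0
        self.total = 0

    def update(self, test_labels: Sequence[int],
               explanations: Sequence[Sequence[float]]) -> None:
        # One explanation (a score per training sample) per test sample.
        for label, scores in zip(test_labels, explanations):
            top = max(range(len(scores)), key=scores.__getitem__)
            self.hits += int(self.train_labels[top] == label)
            self.total += 1

    def compute(self) -> float:
        return self.hits / self.total if self.total else 0.0


def run_benchmark(explanations_by_method: Dict[str, List[List[List[float]]]],
                  test_label_batches: List[List[int]],
                  train_labels: Sequence[int]) -> Dict[str, float]:
    """Evaluate every method on the same fixed batches so scores are comparable."""
    results = {}
    for name, batches in explanations_by_method.items():
        metric = TopOneClassMatchMetric(train_labels)
        for labels, expl in zip(test_label_batches, batches):
            metric.update(labels, expl)
        results[name] = metric.compute()
    return results


if __name__ == "__main__":
    train_labels = [0, 1, 1]
    test_batches = [[0, 1], [1]]
    methods = {
        "method_a": [[[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]], [[0.0, 0.3, 0.7]]],
        "method_b": [[[0.1, 0.9, 0.0], [0.7, 0.2, 0.1]], [[0.5, 0.3, 0.2]]],
    }
    print(run_benchmark(methods, test_batches, train_labels))
```

Keeping the running counts inside the metric means test data can be streamed in batches without holding every explanation in memory, and fixing the batches and labels inside the benchmark is what makes scores from different methods directly comparable.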

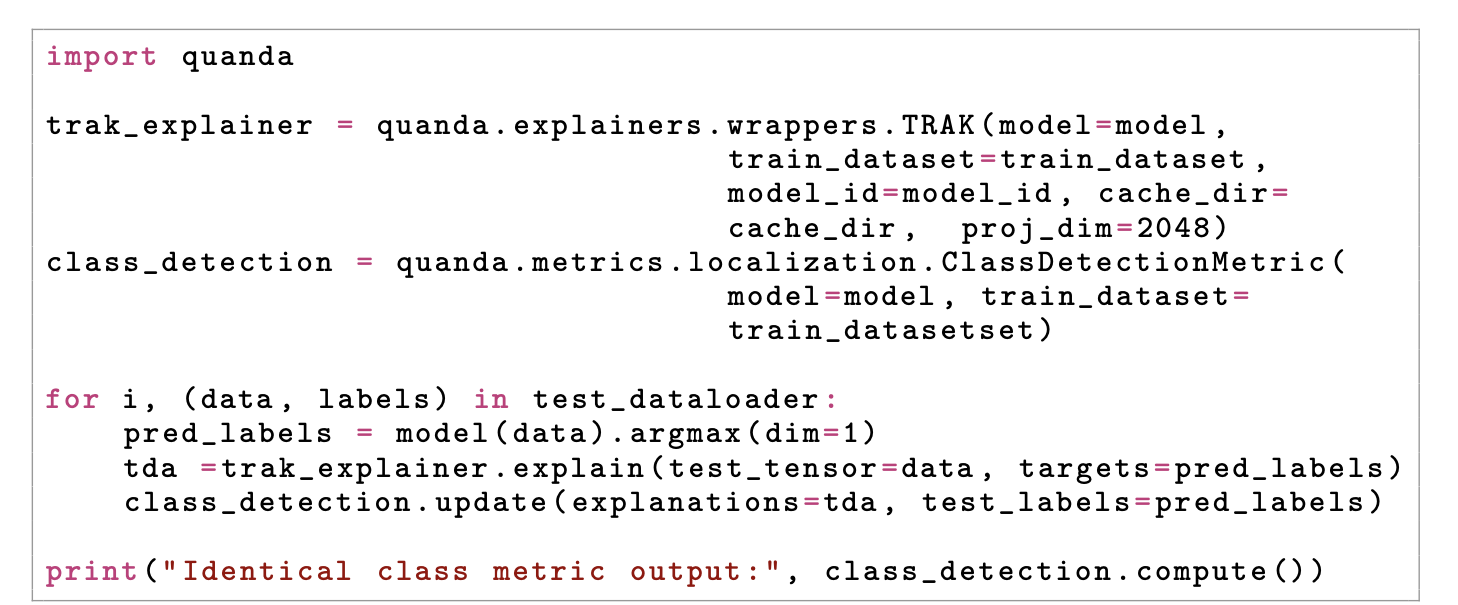

An example of using the Quanda library to evaluate simultaneously generated explanations is provided below:

Quanda addresses gaps in TDA evaluation that have raised doubts about adopting TDA within the explainability community. TDA researchers can benefit from the library's standard metrics, ready-to-use benchmark configurations, and consistent wrappers for existing implementations. In the future, it would be interesting to see Quanda's capabilities extended to more complex domains, such as natural language processing.



Adeeba Alam Ansari is currently pursuing a dual degree at the Indian Institute of Technology (IIT) Kharagpur, where she is earning a bachelor's degree in Industrial Engineering and a master's degree in Financial Engineering. With a keen interest in machine learning and artificial intelligence, she is an avid reader and an inquisitive individual. Adeeba firmly believes in the power of technology to empower society and promote well-being through innovative solutions driven by empathy and a deep understanding of real-world challenges.
