Image generated by Moez Ali using Midjourney

- Introduction

- Stable Time Series Forecast Module

- New object-oriented API

- More options for experiment registration

- Refactored preprocessing module

- Compatibility with the latest version of sklearn

- Parallel distributed model training

- Speed up model training on the CPU

- RIP: NLP and Arules modules

- More information

- Contributors

PyCaret is an open source and low-code machine learning library in Python that automates machine learning workflows. It is a comprehensive machine learning and model management tool that exponentially speeds up the experimentation cycle and makes it more productive.

Compared to the other open source machine learning libraries, PyCaret is a low-code alternative library that can be used to replace hundreds of lines of code with just a few lines. This makes experiments exponentially fast and efficient. PyCaret is essentially a Python wrapper around various machine learning libraries and frameworks in Python.

PyCaret’s design and simplicity are inspired by the emerging role of citizen data scientists, a term first used by Gartner. Citizen data scientists are power users who can perform simple to moderately sophisticated analytical tasks that previously would have required more technical expertise.

For more information on PyCaret, check out our GitHub or official documentation.

Consult our complete release notes for PyCaret 3.0

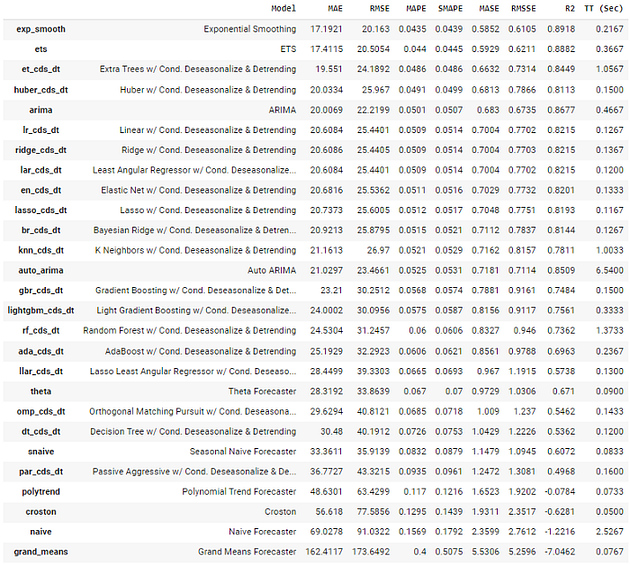

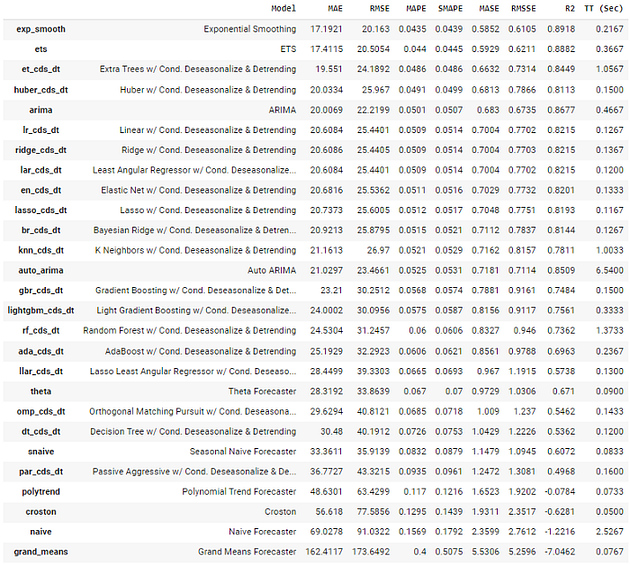

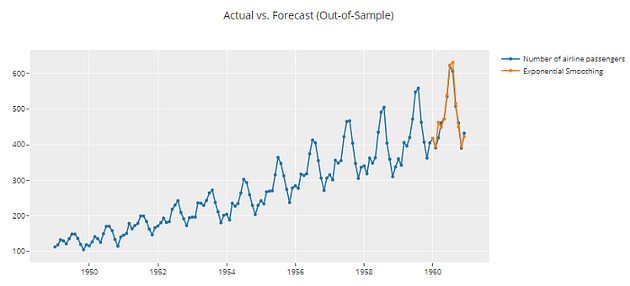

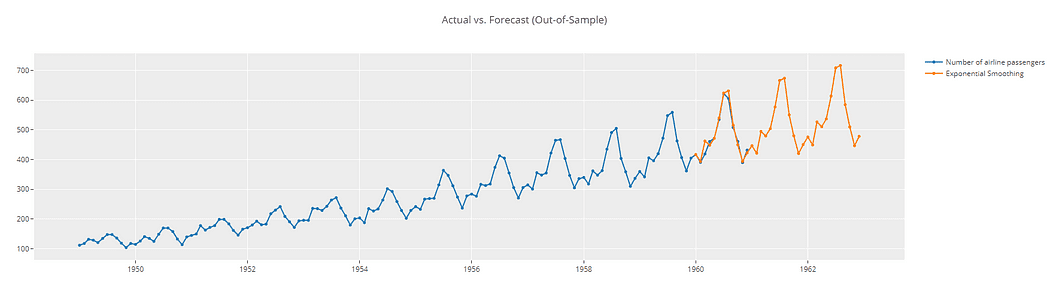

The PyCaret Time Series module is now stable and available in 3.0. It currently supports forecasting tasks, and time series anomaly detection and clustering algorithms are planned for future releases.

```python
# load dataset
from pycaret.datasets import get_data
data = get_data('airline')

# init setup
from pycaret.time_series import *
s = setup(data, fh = 12, session_id = 123)

# compare models
best = compare_models()

# forecast plot
plot_model(best, plot="forecast")

# forecast plot 36 months into the future (the airline dataset is monthly)
plot_model(best, plot="forecast", data_kwargs = {'fh' : 36})
```

While PyCaret is a fantastic tool, it doesn’t adhere to the typical object-oriented programming practices used by Python developers. To address this, we had to rethink some of the initial design decisions we made for version 1.0. This is a significant change that took considerable effort to implement. Now, let’s explore how it affects you.

```python
# Functional API (Existing)
# load dataset
from pycaret.datasets import get_data
data = get_data('juice')

# init setup
from pycaret.classification import *
s = setup(data, target="Purchase", session_id = 123)

# compare models
best = compare_models()
```

Running experiments in a single notebook works well, but running another experiment with different `setup` parameters is a problem. It is possible, but the setup of the previous experiment is overwritten.

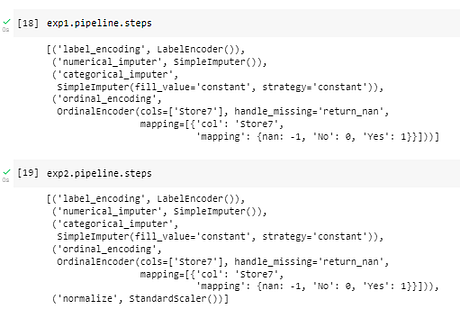

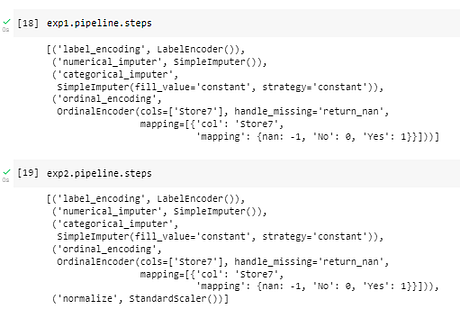

With the new object-oriented API, you can run multiple experiments in the same notebook and compare them easily. This works because each experiment's parameters are bound to its own object, so every experiment can carry its own modeling and preprocessing options.

```python
# load dataset
from pycaret.datasets import get_data
data = get_data('juice')

# init setup for experiment 1
from pycaret.classification import ClassificationExperiment
exp1 = ClassificationExperiment()
exp1.setup(data, target="Purchase", session_id = 123)

# compare models for experiment 1
best = exp1.compare_models()

# init setup for experiment 2 (same data, with normalization enabled)
exp2 = ClassificationExperiment()
exp2.setup(data, target="Purchase", normalize = True, session_id = 123)

# compare models for experiment 2
best2 = exp2.compare_models()
```

`exp1.compare_models()` output

`exp2.compare_models()` output

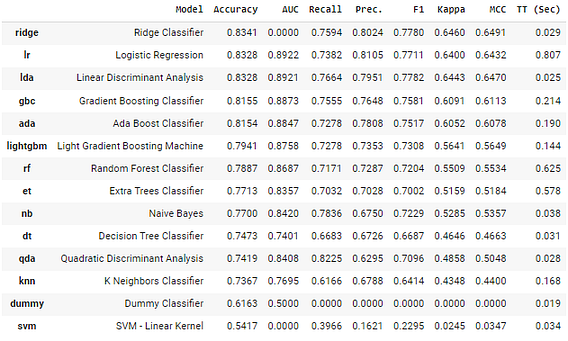

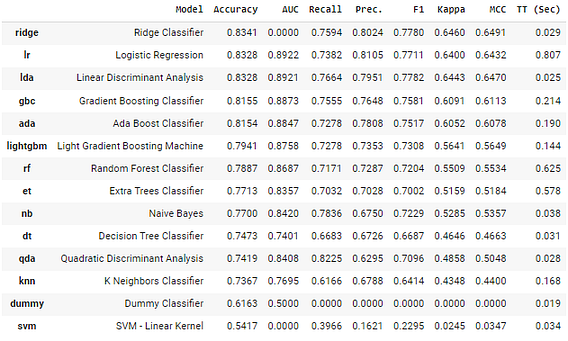

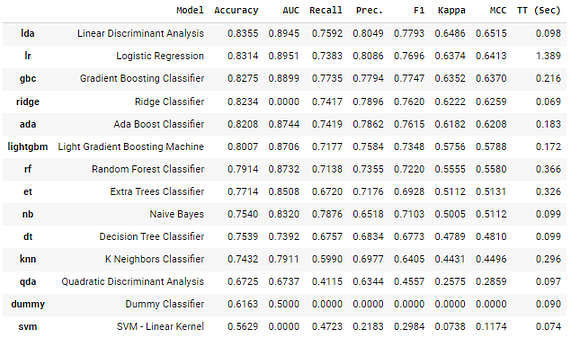

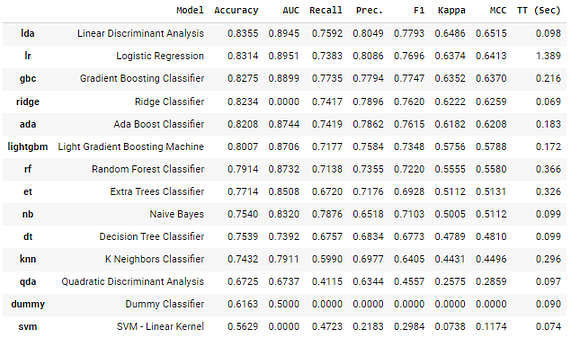

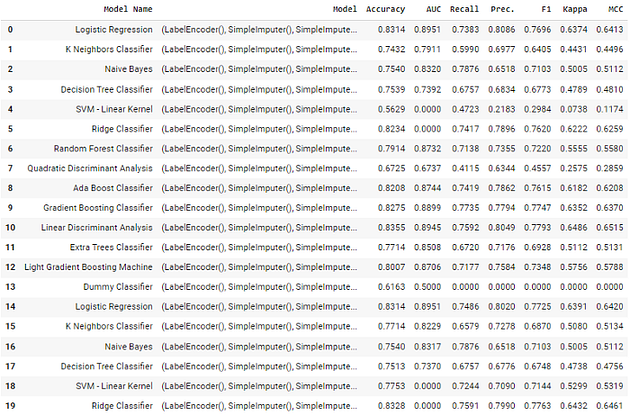

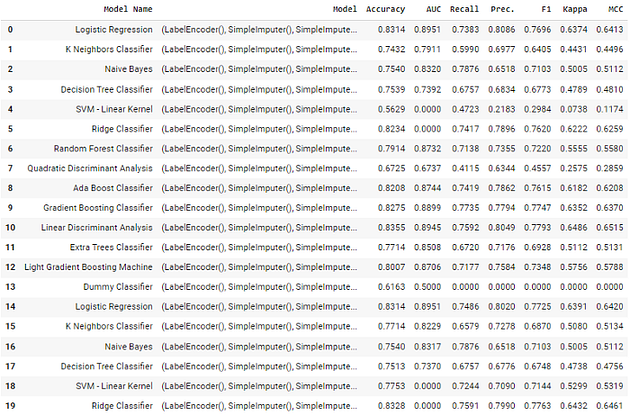

After running the experiments, you can use the `get_leaderboard` function to generate a leaderboard for each experiment, which makes them easy to compare.

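```python
import pandas as pd

# generate a leaderboard for each experiment
leaderboard_exp1 = exp1.get_leaderboard()
leaderboard_exp2 = exp2.get_leaderboard()

# combine both leaderboards into a single DataFrame
lb = pd.concat([leaderboard_exp1, leaderboard_exp2])
```

(Leaderboard output truncated.)

Each experiment also keeps its own preprocessing pipeline, so you can print and compare the steps:

```python
# print pipeline steps
print(exp1.pipeline.steps)
print(exp2.pipeline.steps)
```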

PyCaret 2 can automatically log experiments using MLflow. MLflow is still the default in PyCaret 3, but there are now more options for experiment logging. The newly added options are wandb, cometml, and dagshub.

To switch from the default MLflow logger to another option, pass one of the following values to the `log_experiment` parameter: ‘mlflow’, ‘wandb’, ‘cometml’, or ‘dagshub’.

The preprocessing module underwent a complete redesign to improve its efficiency and performance, as well as to ensure compatibility with the latest version of Scikit-Learn.

PyCaret 3 includes several new preprocessing features, including new categorical encoding techniques, support for text features in machine learning modeling, new outlier detection methods, and advanced feature selection techniques.

Some of the new features are:

- New categorical encoding methods

- Handling text features for machine learning modeling

- New methods to detect outliers

- New methods for feature selection

- Guaranteed prevention of target leakage, as all pipelines are now fitted at the fold level.
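PyCaret's internal pipeline code is not shown in this article, so what follows is only a generic scikit-learn sketch of what fitting preprocessing "at the fold level" means. The transformer sits inside the pipeline that cross-validation refits on each training fold, so held-out rows never influence the learned statistics. The synthetic data from `make_classification` and the choice of `StandardScaler` and `LogisticRegression` are illustrative assumptions, not PyCaret internals.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic, illustrative data (not one of PyCaret's datasets).
X, y = make_classification(n_samples=500, n_features=10, random_state=123)

# Leak-free: the scaler lives inside the pipeline, so cross_validate clones and
# refits it on the training part of every fold, then only applies it to the
# held-out part of that fold.
pipe = make_pipeline(StandardScaler(), LogisticRegression())
cv = cross_validate(pipe, X, y, cv=5, return_estimator=True)

# Each fold's scaler learned its means from that fold's training rows only.
fold_means = [est.named_steps["standardscaler"].mean_ for est in cv["estimator"]]

# Leaky (for contrast): scaling the full dataset before cross-validation lets
# the held-out rows influence the statistics used in every fold.
X_leaky = StandardScaler().fit_transform(X)
leaky_scores = cross_validate(LogisticRegression(), X_leaky, y, cv=5)["test_score"]

print("leak-free mean CV accuracy:", cv["test_score"].mean())
print("leaky mean CV accuracy:", leaky_scores.mean())
```

On easy synthetic data the two scores may be close. The point is the structure: only the pipeline version keeps validation rows out of the fitted preprocessing.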

PyCaret 2 depends heavily on scikit-learn 0.23.2, which makes it impossible to use the latest version of scikit-learn (1.x) alongside PyCaret in the same environment.

PyCaret is now compatible with the latest version of scikit-learn and we would like to keep it that way.

To scale to large datasets, you can run the `compare_models` function on a cluster in distributed mode by passing a backend to its `parallel` parameter.

This is made possible by Fugue, an open-source unified interface for distributed computing that lets users run Python, Pandas, and SQL code on Spark, Dask, and Ray with minimal rewrites.

```python
# load dataset
from pycaret.datasets import get_data
diabetes = get_data('diabetes')

# init setup
from pycaret.classification import *
clf1 = setup(data = diabetes, target="Class variable", n_jobs = 1)

# create pyspark session
from pyspark.sql import SparkSession
spark = SparkSession.builder.getOrCreate()

# import parallel back-end
from pycaret.parallel import FugueBackend

# compare models
best = compare_models(parallel = FugueBackend(spark))
```

You can apply Intel optimizations to machine learning algorithms to speed up your workflow. To train models with the Intel `sklearnex` engine, you first need to install the Intel `sklearnex` library:

```shell
# install sklearnex
pip install scikit-learn-intelex
```

To use Intel optimizations, simply pass `engine="sklearnex"` to the `create_model` function.

# Functional API (Existing)

# load dataset

from pycaret.datasets import get_data

data = get_data('bank')

# init setup

from pycaret.classification import *

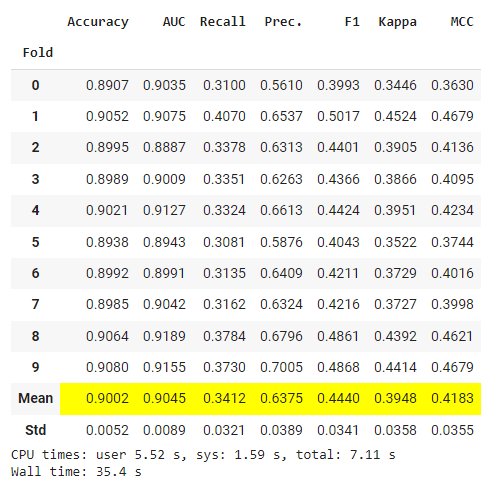

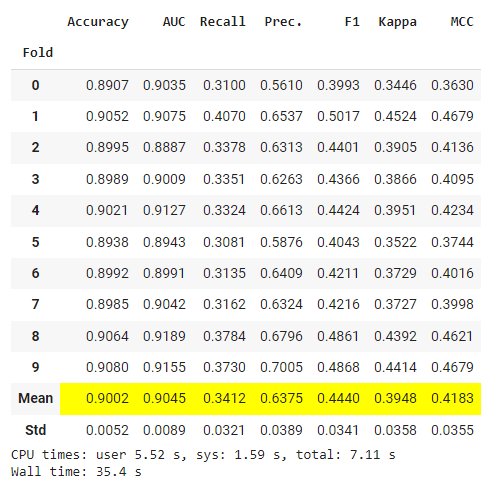

s = setup(data, target="deposit", session_id = 123)Model Training without Intel Accelerations:

```python
%%time
lr = create_model('lr')
```

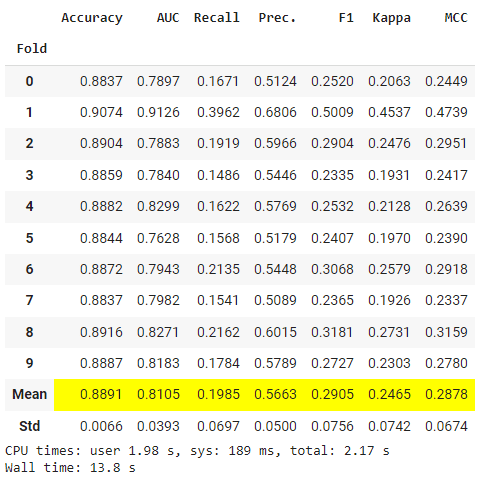

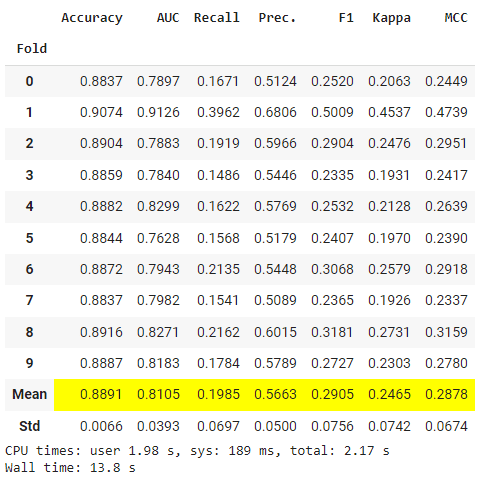

Model training with Intel acceleration:

```python
%%time
lr2 = create_model('lr', engine="sklearnex")
```

There are some differences in model performance (negligible in most cases), but training time improves by ~60% on a 30,000-row dataset. The benefit is much greater on larger datasets.

NLP is changing rapidly, and many dedicated libraries and companies work exclusively on solving end-to-end NLP tasks. Due to a lack of resources, in-house expertise, and new contributors willing to maintain and support NLP and Arules, we decided to remove them from PyCaret. PyCaret 3.0 no longer includes the `nlp` and `arules` modules, and they have also been removed from the documentation. You can still use them with older versions of PyCaret.

- Docs: Getting started with PyCaret

- API Reference: Detailed API docs

- Tutorials: New to PyCaret? Check out our official notebooks

- Notebooks: Created and maintained by the community

- Blog: Contributor articles and tutorials

- Videos: Video tutorials and events

- YouTube: Subscribe to our YouTube channel

- Slack: Join our Slack community

- LinkedIn: Follow our LinkedIn page

- Discussions: Interact with the community and contributors

Thanks to all the contributors who have participated in PyCaret 3.

@ngupta23

@yard1

@tvdboom

@jinensetpal

@buenowanghan

@Alexsandruss

@daikikatsuragawa

@caron14

@sherpan

@haizadtarik

@ethanlaser

@kumar21120

@satya-pattnaik

@ltsaprounis

@sayatan1410

@AJarman

@drmario-gh

@NeptuneN

@Abonia1

@LucasSerra

@desaizeeshan22

@roboro

@jonasvdd

@PivovarA

@ykskks

@chrimaho

@AnthonyA1223

@ArtificialZeng

@cspartalis

@vladocodes

@huangzhhui

@keisuke-umezawa

@ryankarlos

@celestinoxp

@qubiit

@beckernick

@napetrov

@erwanlc

@Danpilz

@ryanxjhan

@wkuopt

@TremaMiguel

@IncubatorShokuhou

@moezali1

Moez Ali writes about PyCaret and its real-world use cases. If you would like to be notified automatically, you can follow Moez on Medium, LinkedIn, and Twitter.

Original. Reposted with permission.