Large language models (LLM) such as GPT, Gemini and Claude use vast training sets and complex architectures to generate high quality responses. However, the optimization of its inference time calculation remains challenging, since increasing the size of the model leads to higher computational costs. Researchers continue to explore strategies that maximize efficiency while maintaining or improve model performance.

A widely adopted approach to improve LLM's performance is the set, where multiple models are combined to generate a final output. The mixture of agents (MOA) is a popular joint method that adds responses from different LLM to synthesize a high quality response. However, this method introduces fundamental compensation between diversity and quality. While combining various models can offer advantages, it can also result in suboptimal performance due to the inclusion of lower quality responses. Researchers aim to balance these factors to ensure optimal performance without compromising the quality of the response.

Traditional MOA frameworks work through the first consultation of multiple proponents models to generate answers. An aggregator model then synthesizes these answers in a final response. The effectiveness of this method is based on the assumption that the diversity between the proponent models leads to better performance. However, this assumption does not take into account the degradation of the potential quality caused by weaker models in the mixture. Previous investigations have focused mainly on increasing the diversity of cross models instead of optimizing the quality of proponent models, which leads to performance inconsistencies.

A research team from Princeton introduced Auto-MOA, a new set method that eliminates the need for multiple models by adding various results of a single high performance model. Unlike the traditional moa, which combines different LLM, the auto-moa take advantage of diversity in the model when repeatedly sampling from the same model. This approach ensures that only high quality responses contribute to the final output, which addresses quality diversity compensation observed in mixed moa settings.

Self-MOA works by generating multiple responses from a single superior performance model and synthesizing them in a final output. Doing so eliminates the need to incorporate lower quality models, thus improving the general quality of the response. To further improve scalability, researchers introduced auto-moa-seq, a sequential variation that processes multiple responses iteratively. This allows efficient aggregation of results even in scenarios where computational resources are limited. Self-seq processes outputs using a sliding window approach, ensuring that LLMs with shorter context lengths can still benefit from a set without compromising performance.

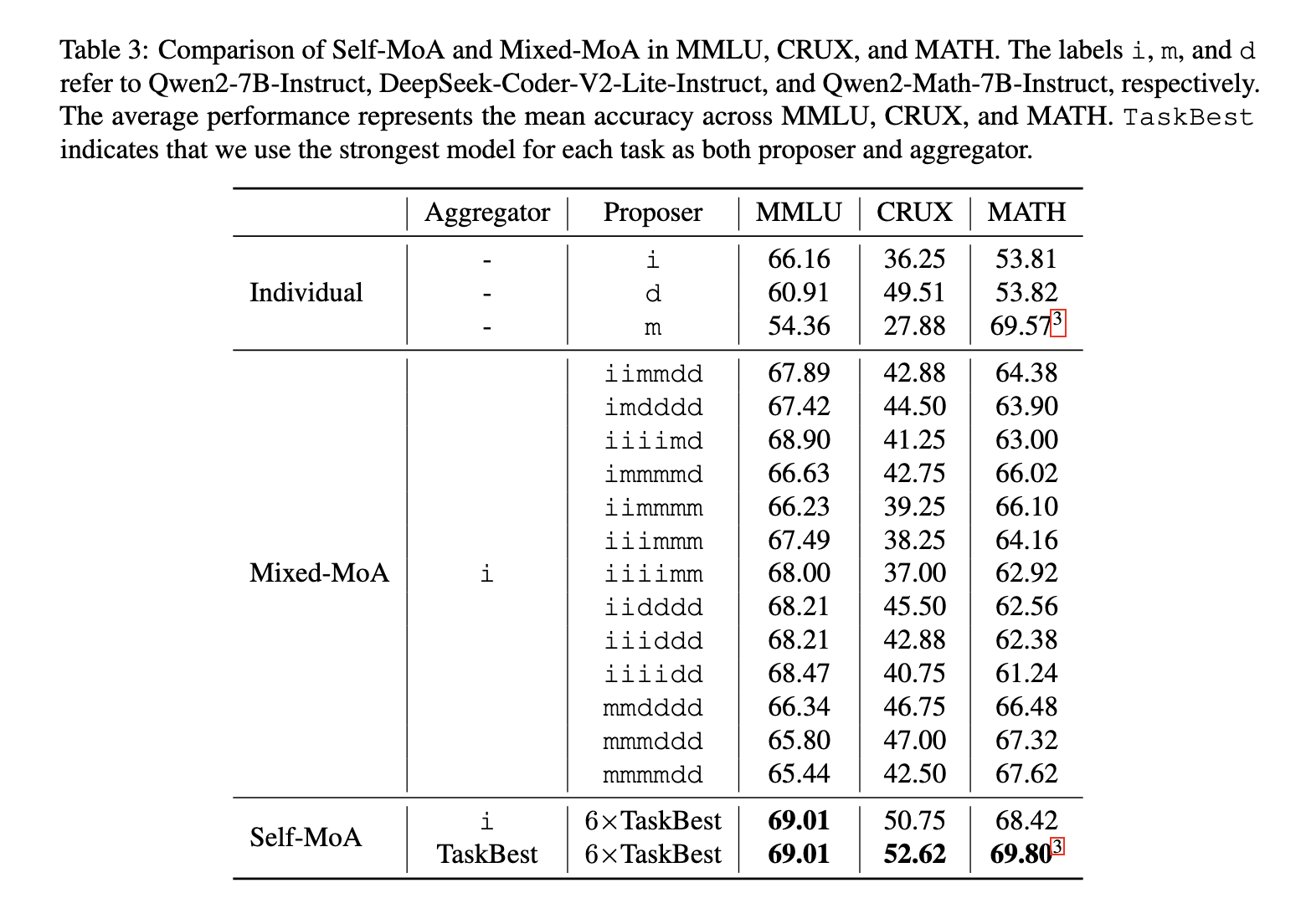

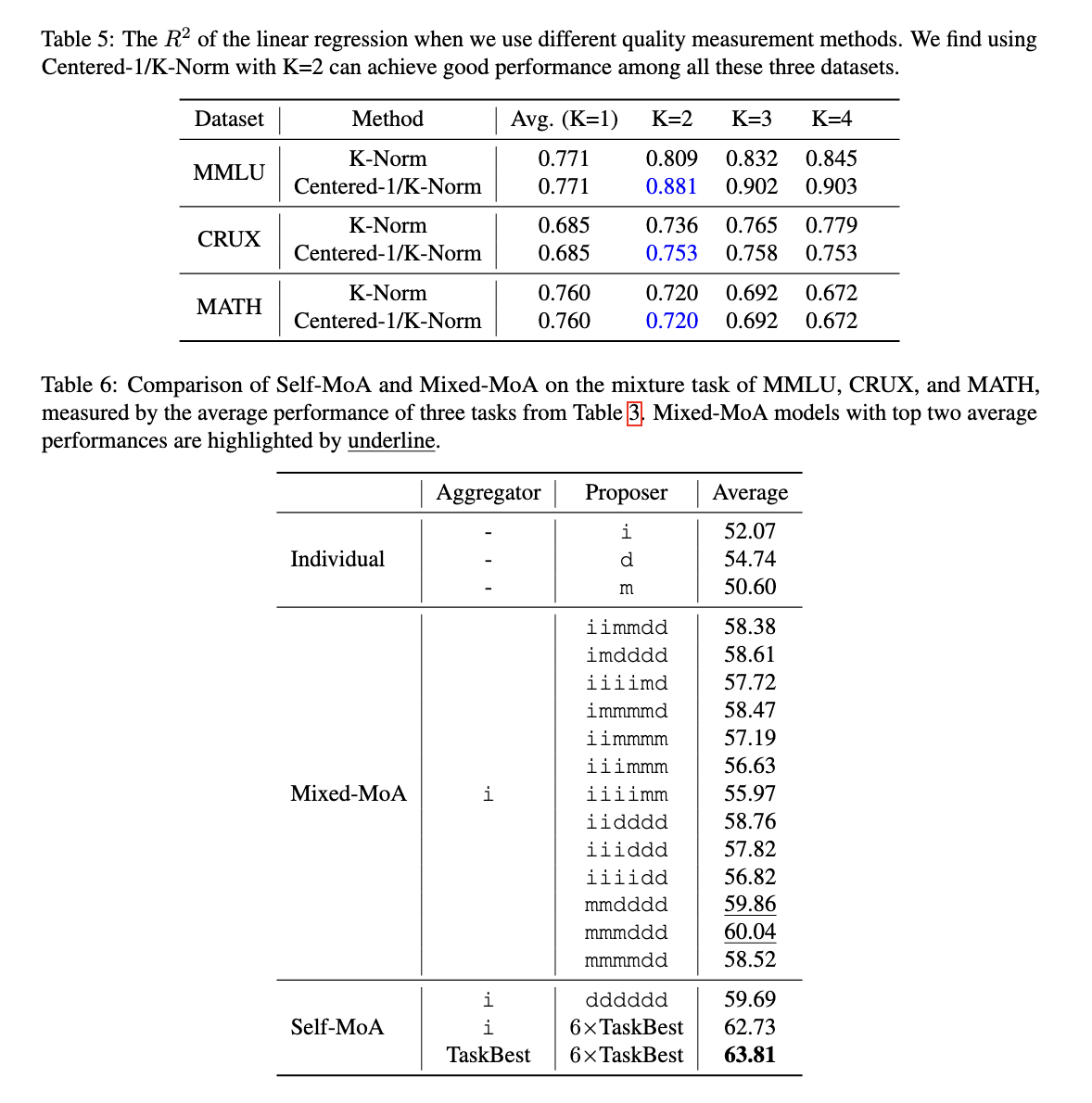

The experiments showed that the auto-moa significantly exceeds the mixed moa in several reference points. In the reference point Alpacaeval 2.0, the auto-moa achieved an improvement of 6.6% on the traditional moa. When tested in multiple data sets, including MMU, CRUX and Math, Self-MOA showed an average improvement of 3.8% on mixed moa approaches. When applied to one of the high-ranking models in Alpacaeval 2.0, Self-MOA established a new latest generation performance record, even more validating its effectiveness. In addition, the auto-seq proved to be as effective as adding all the outputs simultaneously while adding the limitations imposed by the restrictions of context length of the model.

The research results highlight a crucial vision of MOA configurations: performance is highly sensitive to the quality of the proposal. The results confirm that the incorporation of various models does not always lead to higher performance. On the other hand, the responses of a single high quality model produce better results. The researchers carried out more than 200 experiments to analyze compensation between quality and diversity, concluding that the self -moa constantly exceeds the mixed moa when the best performance model is used exclusively as a proposal.

This study challenges the predominant assumption that the mixture of different LLM leads to better results. By demonstrating the superiority of the auto-moa, it presents a new perspective on the optimization of the calculation of LLM inference. The results indicate that focusing on high quality individual models instead of increasing diversity can improve general performance. As LLM's research continues to evolve, self-MOA provides a promising alternative to traditional set methods, which offers an efficient and scalable approach to improve the quality of the model.

Verify he Paper. All credit for this investigation goes to the researchers of this project. Besides, don't forget to follow us <a target="_blank" href="https://x.com/intent/follow?screen_name=marktechpost” target=”_blank” rel=”noreferrer noopener”>twitter and join our Telegram channel and LINKEDIN GRsplash. Do not forget to join our 75K+ ml of submen.

Recommended open source ai platform: 'Intellagent is a framework of multiple open source agents to evaluate the complex conversational system' (Promoted)

Nikhil is an internal consultant at Marktechpost. He is looking for a double degree integrated into materials at the Indian Institute of technology, Kharagpur. Nikhil is an ai/ML enthusiast who is always investigating applications in fields such as biomaterials and biomedical sciences. With a solid experience in material science, it is exploring new advances and creating opportunities to contribute.

NEWSLETTER

NEWSLETTER