Reinforcement learning (RL) algorithms can learn skills to solve decision-making tasks such as playing games, enabling robots to pick up objects, or even optimizing microchip designs. However, running RL algorithms in the real world requires expensive active data collection. Pre-training on diverse datasets has been shown to enable data-efficient fine-tuning for individual downstream tasks in natural language processing (NLP) and vision problems. In the same way that BERT or GPT-3 models provide a general-purpose initialization for NLP, large pre-trained RL models could provide a general-purpose initialization for decision-making. So, we ask the question: Can we enable similar pre-training to accelerate RL methods and create a general-purpose “backbone” for efficient RL across various tasks?

In “Offline Q-learning on Diverse Multi-Task Data Both Scales and Generalizes”, to be published at ICLR 2023, we discuss how we scaled offline RL, which can be used to train value functions on previously collected static datasets, to provide such a general pre-training method. We show that Scaled Q-Learning using a diverse dataset is sufficient to learn representations that facilitate rapid transfer to new tasks and fast online learning on new variations of a task, improving significantly over existing representation learning approaches and even Transformer-based methods that use much larger models.


Scaled Q-Learning: Multi-task pre-training with conservative Q-learning

To provide a general-purpose pre-training approach, offline RL needs to be scalable, allowing us to pre-train on data across different tasks and use expressive neural network models to acquire powerful pre-trained backbones that can be specialized to individual downstream tasks. We base our offline RL pre-training method on conservative Q-learning (CQL), a simple offline RL method that combines standard Q-learning updates with an additional regularizer that minimizes the value of unseen actions. With discrete actions, the CQL regularizer is equivalent to a standard cross-entropy loss, which is a simple, one-line modification to standard deep Q-learning. A few crucial design decisions made this possible:

- Neural network size: We found that multi-game Q-learning required large neural network architectures. While prior methods often used relatively shallow convolutional networks, we found that models as large as a ResNet 101 led to significant improvements over smaller models.

- Neural network architecture: To learn pre-trained backbones that are useful for new games, our final architecture uses a shared neural network backbone, with separate 1-layer heads outputting the Q-values for each game. This design avoids interference between games during pre-training while still providing enough data sharing to learn a single shared representation. Our shared vision backbone also uses a learned position embedding (similar to Transformer models) to keep track of spatial information in the game.

- Representational regularization: Recent work has observed that Q-learning tends to suffer from representational collapse issues, where even large neural networks can fail to learn effective representations. To counteract this problem, we build on our prior work and normalize the last-layer features of the shared part of the Q-network. In addition, we use a categorical distributional RL loss for Q-learning, which is known to provide richer representations that improve downstream task performance.
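The equivalence between the discrete-action CQL regularizer and a cross-entropy loss can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in the paper: it uses a plain squared TD error rather than the categorical distributional loss described above, it handles a single transition, and the function names as well as the default values of `gamma` and `alpha` are hypothetical choices made for the example.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def cql_regularizer(q_values, dataset_action):
    """Push Q-values down across all actions and up on the dataset action.

    This equals the cross-entropy between softmax(q_values) and the
    one-hot dataset action, i.e. -log softmax(q_values)[dataset_action].
    """
    return logsumexp(q_values) - q_values[dataset_action]


def cql_loss(q_values, next_target_q_values, dataset_action, reward, done,
             gamma=0.99, alpha=1.0):
    """Squared TD error plus the weighted CQL regularizer (one transition).

    gamma and alpha are illustrative defaults, not values from the paper.
    """
    bootstrap = 0.0 if done else gamma * max(next_target_q_values)
    td_error = q_values[dataset_action] - (reward + bootstrap)
    return td_error ** 2 + alpha * cql_regularizer(q_values, dataset_action)
```

Setting `alpha=0.0` recovers an ordinary Q-learning loss, which is why the regularizer amounts to a small change to standard deep Q-learning.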

Multi-task Atari benchmark

We evaluate our approach to scalable offline RL on a suite of Atari games, where the goal is to train a single RL agent to play a collection of games using heterogeneous data from low-quality (i.e., suboptimal) players, and then use the resulting network backbone to quickly learn new variations of the pre-training games or completely new games. Training a single policy that can play many different Atari games is quite difficult even with standard online deep RL methods, as each game requires a different strategy and different representations. In the offline setting, some prior works, such as multi-game decision transformers, proposed to dispense with RL entirely and instead use conditional imitation learning in an attempt to scale with large neural network architectures, such as transformers. However, in this work, we show that this type of multi-game pre-training can be done effectively via RL by using CQL in combination with the careful design decisions described above.

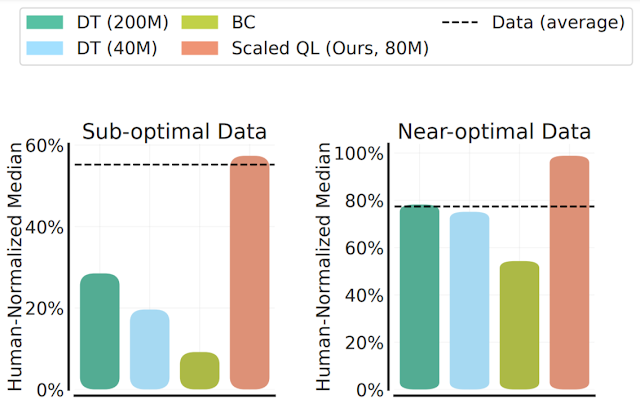

Scalability in training games

We evaluate the performance and scalability of the Scaled Q-Learning method using two data compositions: (1) near-optimal data, consisting of all the training data appearing in replay buffers of previous RL runs, and (2) low-quality data, consisting of data from the first 20% of the trials in the replay buffer (i.e., only data from highly suboptimal policies). In our results below, we compare Scaled Q-Learning with an 80-million-parameter model to multi-game decision transformers (DT) with either 40-million or 80-million-parameter models, and to a behavioral cloning (imitation learning) baseline (BC). We find that Scaled Q-Learning is the only approach that improves over the offline data, reaching around 80% of human-normalized performance.
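For readers unfamiliar with normalized Atari scores, the arithmetic can be sketched as below. This assumes the common convention in which a random policy maps to 0 and a human reference player maps to 1; the text above does not spell out the exact normalization or how per-game scores are aggregated, so treat the function name and the raw scores as hypothetical.

```python
def human_normalized_score(agent_score, random_score, human_score):
    """Return 0.0 for random-level play and 1.0 for human-level play."""
    if human_score == random_score:
        raise ValueError("human and random scores must differ")
    return (agent_score - random_score) / (human_score - random_score)


# Hypothetical raw scores for one game, chosen only to illustrate the arithmetic.
print(human_normalized_score(agent_score=820.0, random_score=20.0, human_score=1020.0))
# prints 0.8
```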


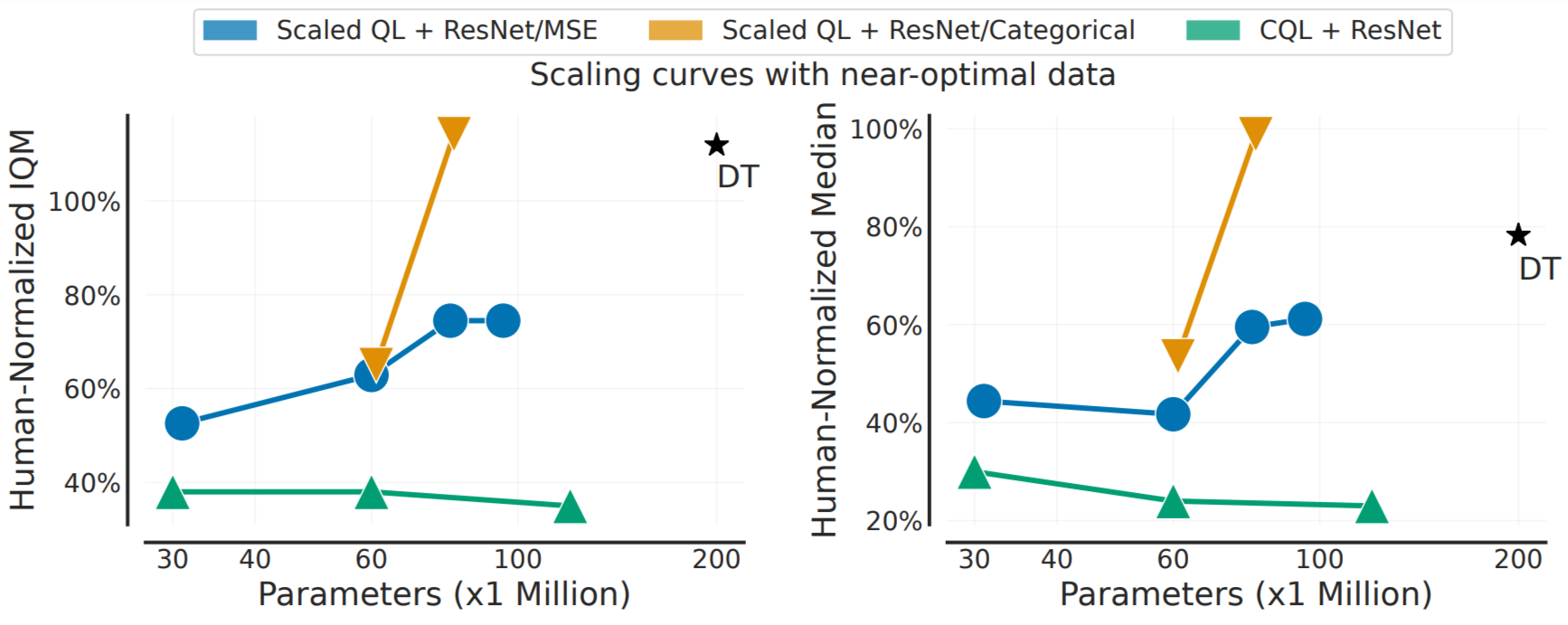

Moreover, as shown below, Scaled Q-Learning not only improves in terms of performance, but also enjoys favorable scaling properties: just as the performance of pre-trained language and vision models improves as network sizes get bigger, enjoying what is commonly referred to as “power-law scaling”, we show that the performance of Scaled Q-Learning enjoys similar scaling properties. While this may not be surprising, this kind of scaling has been elusive in RL, where performance often deteriorates with larger model sizes. This suggests that Scaled Q-Learning, in combination with the design choices above, better unlocks the ability of offline RL to use large models.
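As a rough illustration of what “power-law scaling” means in practice, one can fit a curve of the form performance ≈ a · N^b to (model size, performance) pairs by ordinary least squares in log-log space. The sketch below is not the analysis from the paper: the helper name is hypothetical, and the data points are synthetic, generated from an exact power law purely to show that the fit recovers the exponent.

```python
import math


def fit_power_law(sizes, values):
    """Fit values ~ a * sizes**b by least squares in log-log space; return (a, b)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(v) for v in values]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope in log-log space = exponent
    a = math.exp(mean_y - b * mean_x)  # intercept in log-log space = log(a)
    return a, b


# Synthetic points generated from an exact power law (not measured results).
sizes = [1e6, 1e7, 1e8]
values = [0.5 * s ** 0.1 for s in sizes]
a, b = fit_power_law(sizes, values)
```

A straight line on a log-log plot of performance against parameter count is the usual visual signature of this behavior.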


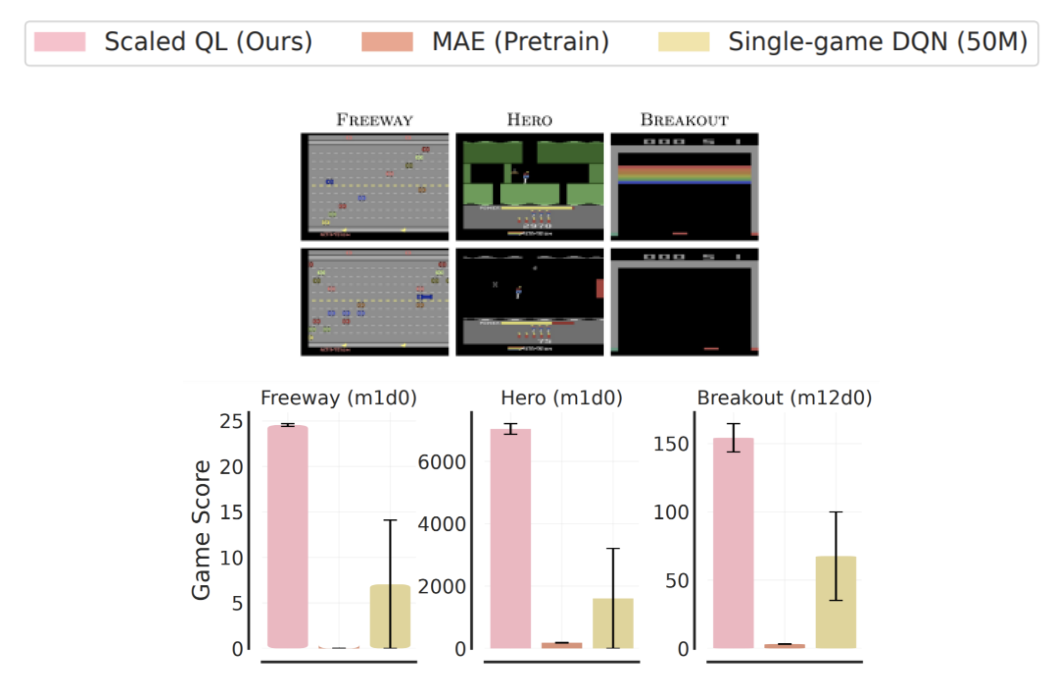

Adaptation to new games and variations

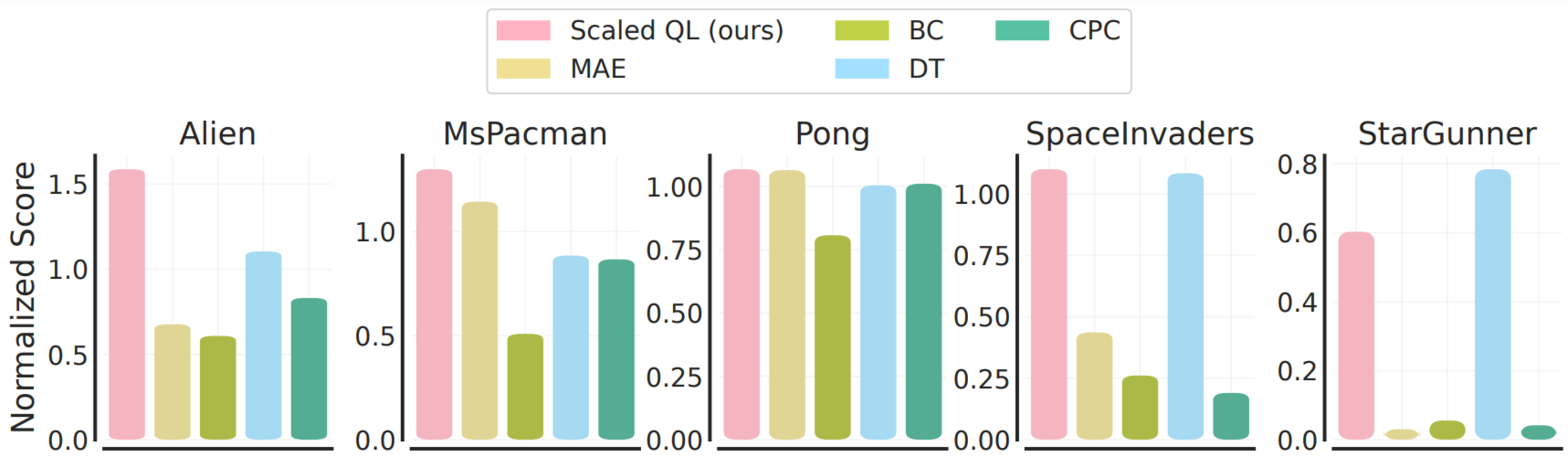

To evaluate fine-tuning from this offline initialization, we consider two settings: (1) fine-tuning to a new, entirely unseen game with a small amount of offline data from that game, corresponding to 2 million game transitions, and (2) fine-tuning to a new variant of the games with online interaction. Fine-tuning on offline game data is shown below. Note that this setting is generally more favorable to imitation-style methods, Decision Transformer and behavioral cloning, since the offline data for the new games is of relatively high quality. Nonetheless, we see that in most cases Scaled Q-Learning improves over alternative approaches (80% on average), as well as over dedicated representation learning methods, such as MAE or CPC, which only use the offline data to learn visual representations rather than value functions.


In the online setting, we see even larger improvements from pre-training with Scaled Q-Learning. In this case, representation learning methods like MAE yield minimal improvement during online RL, whereas Scaled Q-Learning can successfully integrate prior knowledge about the pre-training games to significantly improve the final score after 20k online interaction steps.

These results demonstrate that pre-training generalist value function backbones with multi-task offline RL can significantly boost RL performance on downstream tasks, in both offline and online modes. Note that these fine-tuning tasks are quite difficult: the various Atari games, and even variants of the same game, differ significantly in appearance and dynamics. For example, the target blocks in Breakout disappear in the game variation, making control difficult. However, the success of Scaled Q-Learning, particularly in comparison to visual representation learning techniques such as MAE and CPC, suggests that the model is in fact learning some representation of the game dynamics, rather than merely providing better visual features.

Conclusion and takeaways

We presented Scaled Q-Learning, a pre-training method for scaled offline RL that builds on the CQL algorithm, and demonstrated how it enables efficient offline RL for multi-task training. This work made initial progress toward enabling more practical real-world training of RL agents as an alternative to costly and complex simulation-based pipelines or large-scale experiments. Perhaps in the long run, similar work will lead to generally capable pre-trained RL agents that develop broadly applicable exploration and interaction skills from large-scale offline pre-training. Validating these results on a broader range of more realistic tasks, in domains such as robotics (see some initial results) and NLP, is an important direction for future research. Offline RL pre-training has a lot of potential, and we expect to see many advances in this area in future work.

Acknowledgements

This work was done by Aviral Kumar, Rishabh Agarwal, Xinyang Geng, George Tucker, and Sergey Levine. Special thanks to Sherry Yang, Ofir Nachum, and Kuang-Huei Lee for help with the multi-game decision transformer codebase for Atari multi-game benchmarking and evaluation, and to Tom Small for illustrations and the animation.
