Introduction

Cohere has introduced Rerank 3, its next-generation foundation model for efficient enterprise search and retrieval-augmented generation (RAG). The Rerank model is compatible with any type of database or search index and can also be integrated into any legacy application with native search capabilities. With a single line of code, it can increase search performance or reduce the cost of running a RAG application, with negligible impact on latency.

Let's explore how this foundation model is designed to advance RAG and enterprise search systems with greater accuracy and efficiency.

Reranking capabilities

Rerank 3 offers state-of-the-art capabilities for enterprise search, including:

- A 4K-token context length that significantly improves search quality for longer documents.

- Search over semi-structured and multi-aspect data, such as tables, code, JSON documents, invoices, and emails.

- Coverage of more than 100 languages.

- Improved latency and lower total cost of ownership (TCO).

Generative AI models with long contexts are capable of running RAG on their own. However, to optimize accuracy, latency, and cost, a RAG solution needs a combination of generative models and a Rerank model. Rerank 3's high-precision semantic reranking ensures that only the most relevant information is fed into the generative model, which increases response accuracy and keeps latency and cost low, particularly when retrieving information from millions of documents.
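The retrieve, rerank, then generate flow described above can be sketched in a few lines. This is a minimal illustration, not Cohere's implementation: `overlap_score` is a toy word-overlap scorer standing in for a real reranking model, and the function names, sample documents, and `top_n` value are all assumptions made for the example.

```python
from typing import Callable, List, Tuple


def overlap_score(query: str, document: str) -> float:
    """Toy relevance score: fraction of query words found in the document.

    Hypothetical stand-in for a reranking model; not how Rerank 3 scores text.
    """
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & doc_words) / len(query_words)


def rerank(
    query: str,
    candidates: List[str],
    top_n: int,
    score_fn: Callable[[str, str], float] = overlap_score,
) -> List[Tuple[int, float]]:
    """Return (original_index, score) for the top_n candidates, best first."""
    scored = [(i, score_fn(query, doc)) for i, doc in enumerate(candidates)]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # stable sort keeps tie order
    return scored[:top_n]


def build_prompt(query: str, candidates: List[str], top_n: int = 2) -> str:
    """Keep only the top-ranked documents and place them in the LLM prompt."""
    kept = [candidates[i] for i, _ in rerank(query, candidates, top_n)]
    context = "\n".join(f"- {doc}" for doc in kept)
    return f"Context:\n{context}\n\nQuestion: {query}"


# Candidates as they might come back from a first-stage keyword or vector search.
candidates = [
    "Quarterly revenue grew in the cloud division",
    "The refund policy allows returns within 30 days",
    "Office parking rules for visitors",
    "How to request a refund for a damaged item",
]
print(build_prompt("refund policy for returns", candidates))
```

Only the two highest-scoring documents reach the prompt, which is the mechanism by which a reranking step can shrink the input to the generative model. In a real system the scorer would be replaced by a call to the reranking model.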

Improved enterprise search

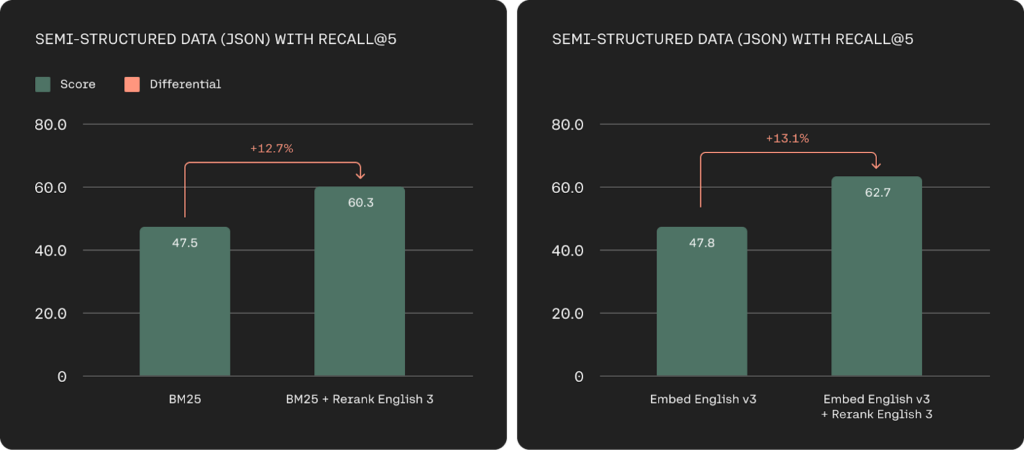

Enterprise data is often very complex, and the systems currently deployed in organizations struggle to search across semi-structured and multi-aspect data sources. The most useful data in an organization is often not in a simple document format; semi-structured formats such as JSON are very common in enterprise applications. Rerank 3 can rank complex, multi-aspect data, such as emails, based on all their relevant metadata fields, including their recency.
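One way to picture ranking over metadata fields is to serialize the chosen fields of each record into a single labeled passage before it is scored. The sketch below assumes this approach purely for illustration; the helper `record_to_text`, the email record, and its field names are hypothetical and say nothing about how Rerank 3 handles JSON internally.

```python
import json
from typing import Any, Dict, List


def record_to_text(record: Dict[str, Any], fields: List[str]) -> str:
    """Serialize selected fields of a semi-structured record, in the given order."""
    lines = []
    for field in fields:
        if field not in record:
            continue  # skip fields this record does not have
        value = record[field]
        if not isinstance(value, str):
            # Lists, numbers, and nested objects are rendered as JSON text.
            value = json.dumps(value, ensure_ascii=False)
        lines.append(f"{field}: {value}")
    return "\n".join(lines)


# Hypothetical email record; "internal_id" is deliberately left out of ranking.
email = {
    "from": "billing@example.com",
    "subject": "Invoice 1042 overdue",
    "date": "2024-04-02",
    "body": "Please settle the outstanding balance.",
    "attachments": ["invoice-1042.pdf"],
    "internal_id": 98311,
}

text = record_to_text(email, ["subject", "from", "date", "body", "attachments"])
print(text)
```

Listing the fields explicitly keeps irrelevant identifiers out of the scored text and lets fields such as the date contribute to relevance.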

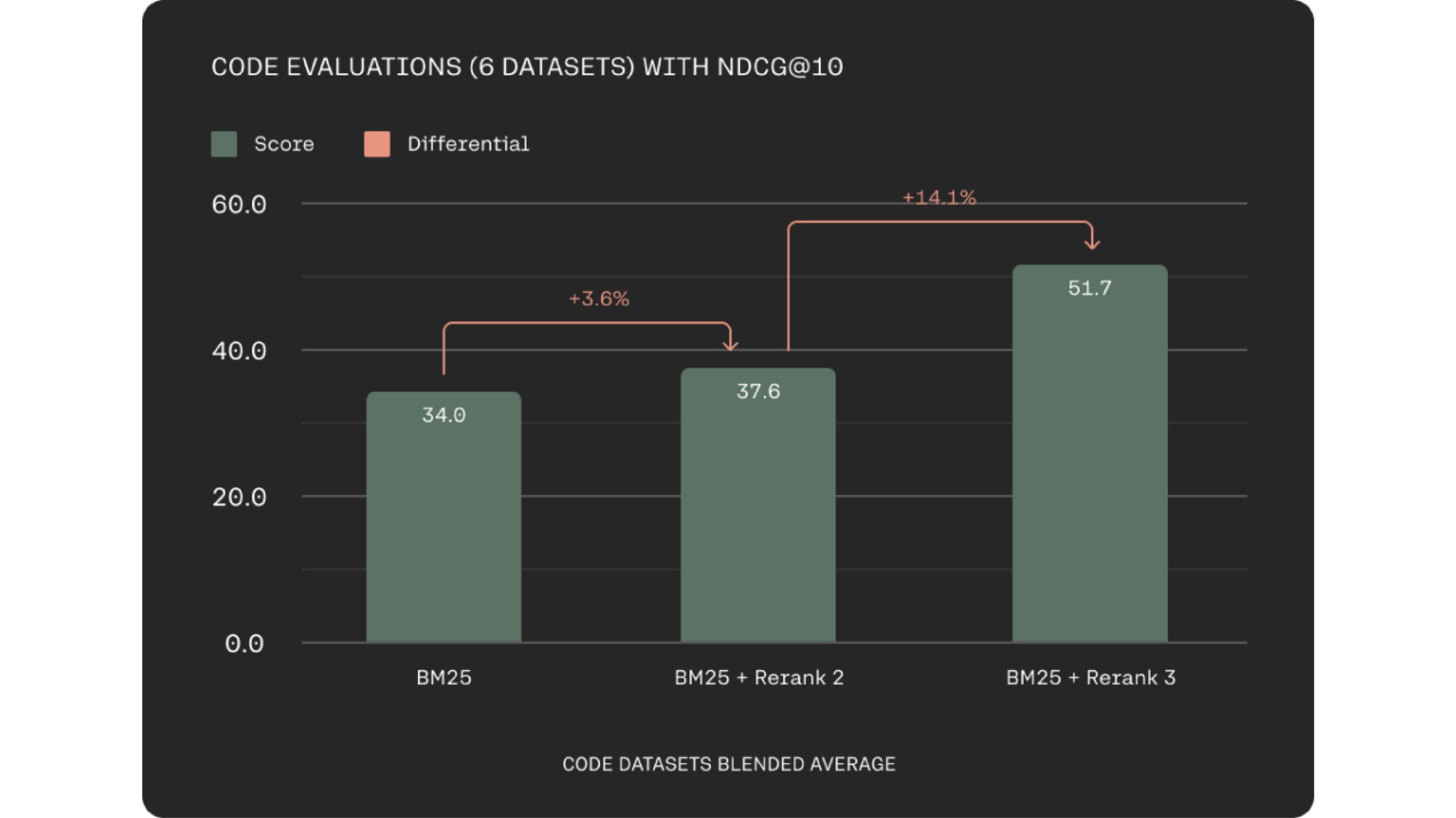

Rerank 3 significantly improves code retrieval quality. This can increase productivity by helping engineers find the right code snippets faster, whether within their company's code base or across vast documentation repositories.

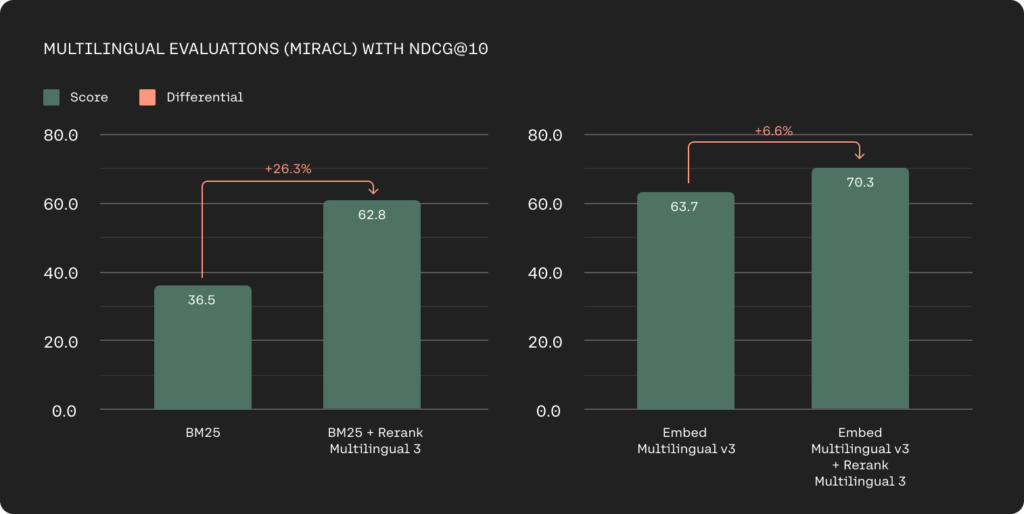

Global organizations also deal with multilingual data sources, and multilingual retrieval has historically been a major challenge for keyword-based methods. Rerank 3 offers strong multilingual performance across more than 100 languages, simplifying retrieval for non-English-speaking customers.

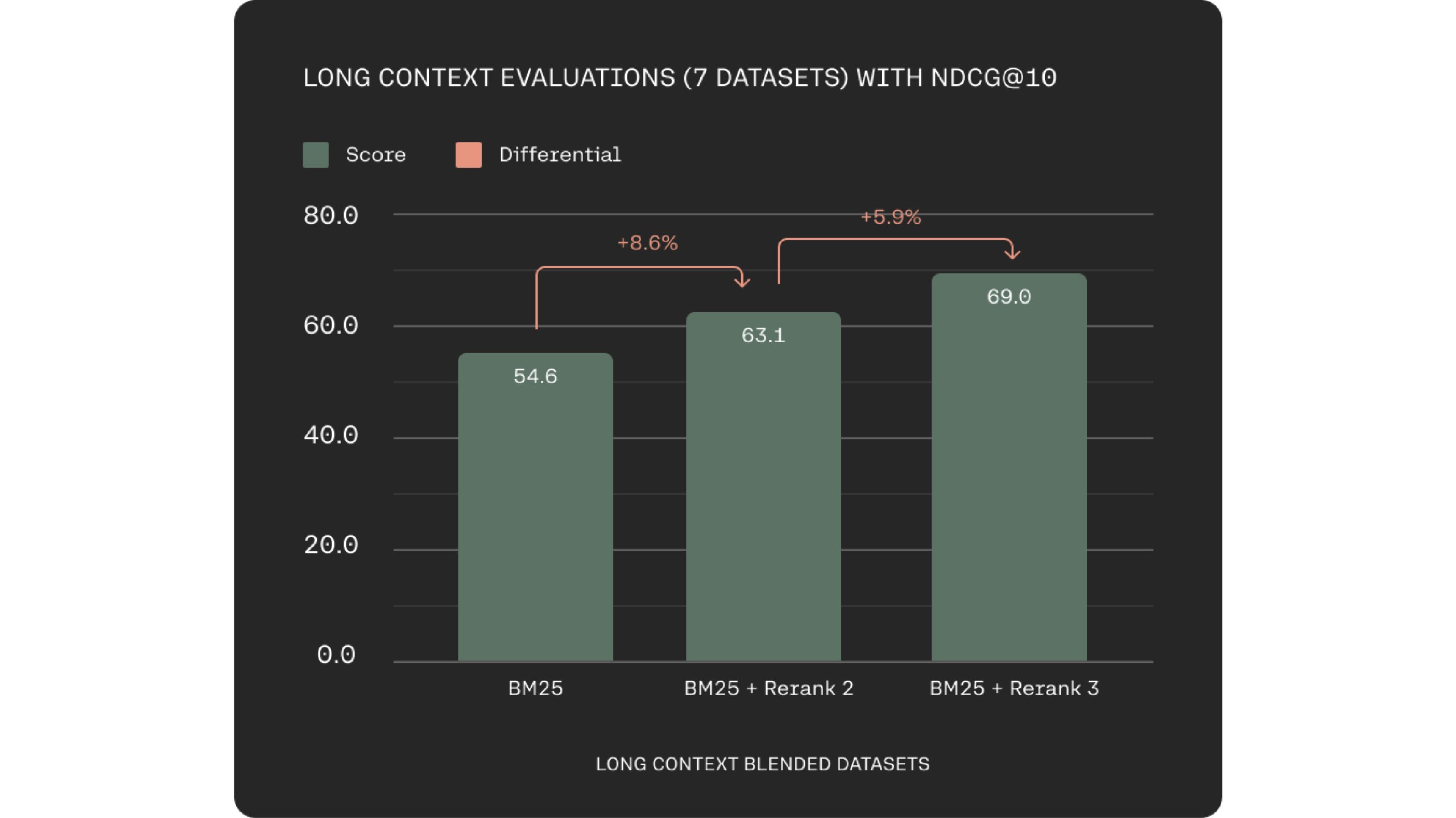

A key challenge in semantic search and RAG systems is optimizing how data is chunked. Rerank 3 addresses this with a 4K context window, which allows larger documents to be processed directly. This leads to better consideration of context during relevance scoring.
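To make the chunking trade-off concrete, here is a minimal sketch of fixed-size chunking. It assumes whitespace-separated words as a rough proxy for tokens (real tokenizers count differently), and the `chunk_words` helper and the 10,000-word synthetic document are hypothetical.

```python
from typing import List


def chunk_words(text: str, max_tokens: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most max_tokens words.

    Consecutive chunks share `overlap` words. Words approximate tokens here.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


# Synthetic document of 10,000 words.
document = " ".join(f"w{i}" for i in range(10_000))
small = chunk_words(document, max_tokens=512)
large = chunk_words(document, max_tokens=4096)
print(len(small), len(large))
```

With the larger budget the same document is split into far fewer pieces, so each relevance score is computed over more surrounding context.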

Rerank 3 is also supported in Elastic's Inference API. Elasticsearch is a widely adopted search technology, and the keyword and vector search capabilities of the Elasticsearch platform are designed to handle larger and more complex enterprise data efficiently.

“We are excited to partner with Cohere to help businesses unlock the potential of their data,” said Matt Riley, GVP and GM of Elasticsearch. Cohere's advanced retrieval models, Embed 3 and Rerank 3, deliver excellent performance on large and complex enterprise data. They act as problem solvers and are becoming essential components in any enterprise search system.

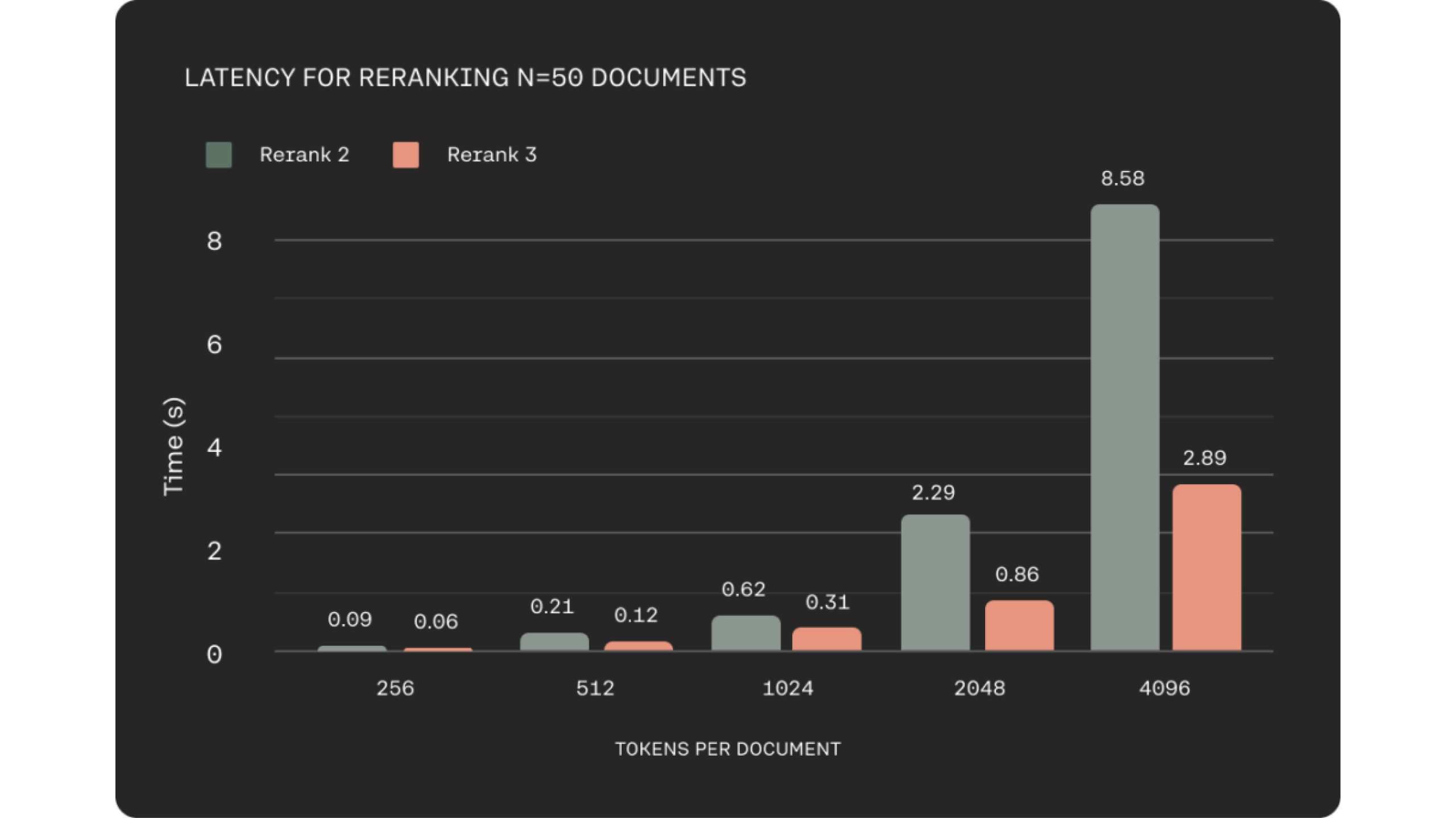

Improved latency with longer context

In many business areas, such as e-commerce or customer service, low latency is crucial to delivering a quality experience. Cohere took this into account when building Rerank 3, which shows up to 2x lower latency than Rerank 2 for shorter documents and up to 3x improvements at long context lengths.

Better performance and efficient RAG

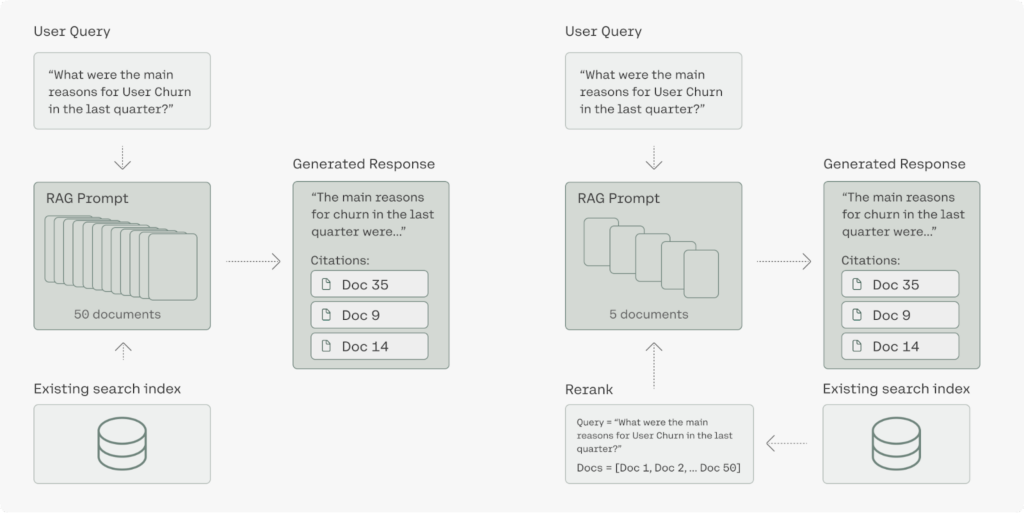

In retrieval-augmented generation (RAG) systems, the document retrieval stage is critical to overall performance. Rerank 3 addresses two essential factors for strong RAG performance: response quality and latency. The model stands out for identifying the most relevant documents for a user's query through its semantic reranking capabilities.

This targeted retrieval process directly improves the accuracy of the RAG system's responses. By enabling the efficient retrieval of relevant information from large data sets, Rerank 3 helps large companies unlock the value of their proprietary data. This supports various business functions, including customer service, legal, human resources, and finance, by providing them with the most relevant information to address user queries.

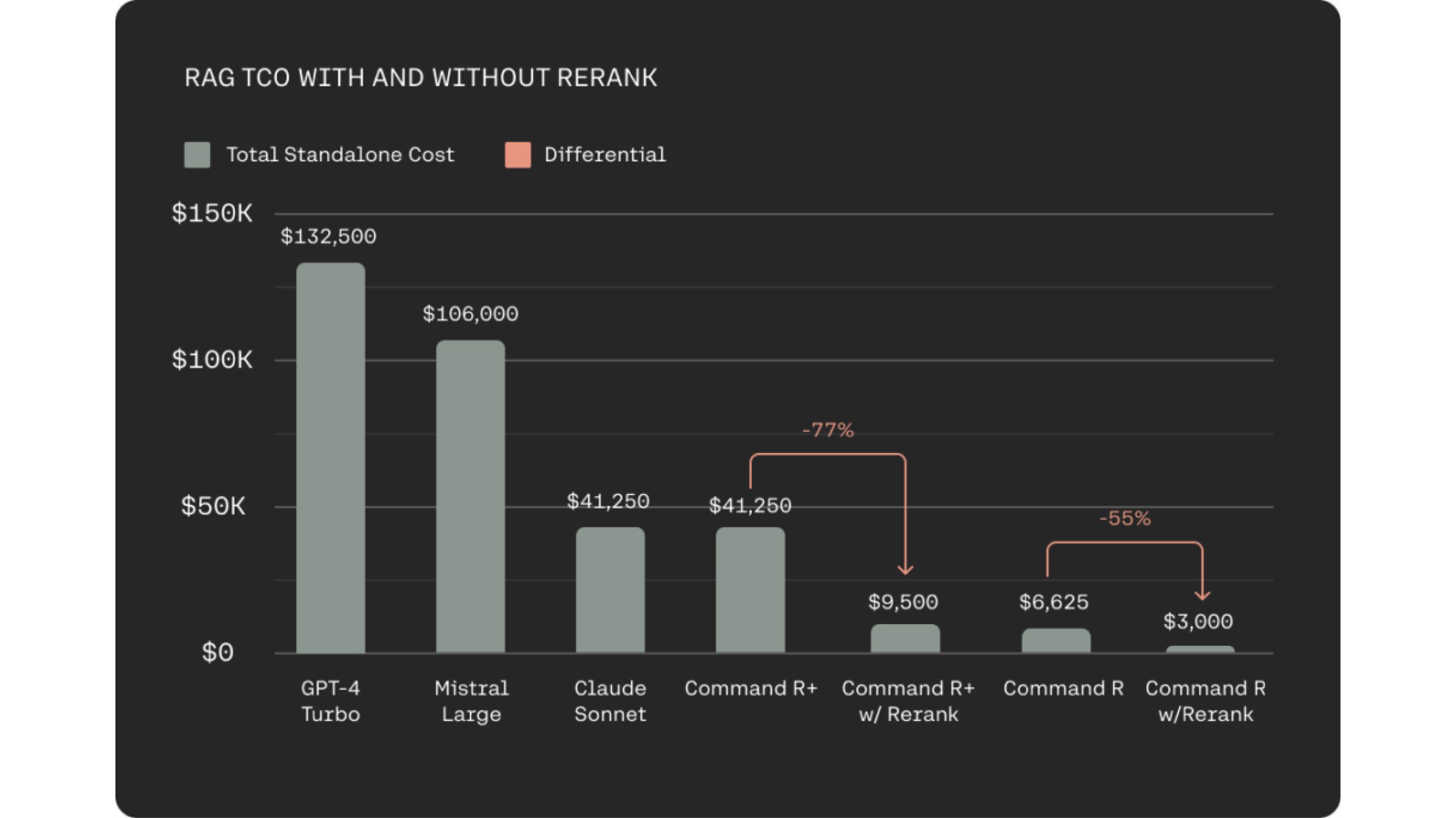

The integration of Rerank 3 with the cost-effective Command R family for RAG systems offers a significant reduction in total cost of ownership (TCO) for users. This is achieved through two key factors. First, Rerank 3 makes it easier to select highly relevant documents, requiring the LLM to process fewer documents to generate an informed response. This maintains response accuracy and minimizes latency. Second, the combined efficiency of the Rerank 3 and Command R models leads to cost reductions of 80-93% compared to alternative generative LLMs on the market. In fact, when considering the cost savings of Rerank 3 and Command R, total cost reductions can exceed 98%.
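The way the two factors combine can be illustrated with simple arithmetic. The sketch below is an assumption-laden illustration, not Cohere's calculation: the document counts, token counts, and per-token prices are hypothetical, the cost of the reranking call itself is ignored, and the two savings are assumed to multiply.

```python
def generation_cost(num_docs: int, tokens_per_doc: int, price_per_1k_tokens: float) -> float:
    """Input cost of one LLM call that reads num_docs documents."""
    return num_docs * tokens_per_doc * price_per_1k_tokens / 1000.0


def combined_reduction(token_reduction: float, price_reduction: float) -> float:
    """Fraction saved when both reductions apply multiplicatively."""
    for value in (token_reduction, price_reduction):
        if not 0.0 <= value <= 1.0:
            raise ValueError("reductions must be fractions in [0, 1]")
    return 1.0 - (1.0 - token_reduction) * (1.0 - price_reduction)


# Hypothetical baseline: 50 documents of 400 tokens at $0.01 per 1K tokens.
baseline = generation_cost(50, 400, 0.01)

# Hypothetical optimized setup: reranking keeps 5 documents (90% fewer tokens)
# and the generative model is 85% cheaper per token.
optimized = generation_cost(5, 400, 0.0015)

saving = 1.0 - optimized / baseline
print(f"baseline=${baseline:.4f} optimized=${optimized:.4f} saving={saving:.1%}")
print(f"combined reduction: {combined_reduction(0.90, 0.85):.1%}")
```

Under these made-up numbers, a 90% cut in tokens and an 85% cut in price leave 0.10 × 0.15 = 1.5% of the original cost. This shows how two large but separate reductions can compound into a total that exceeds either one.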

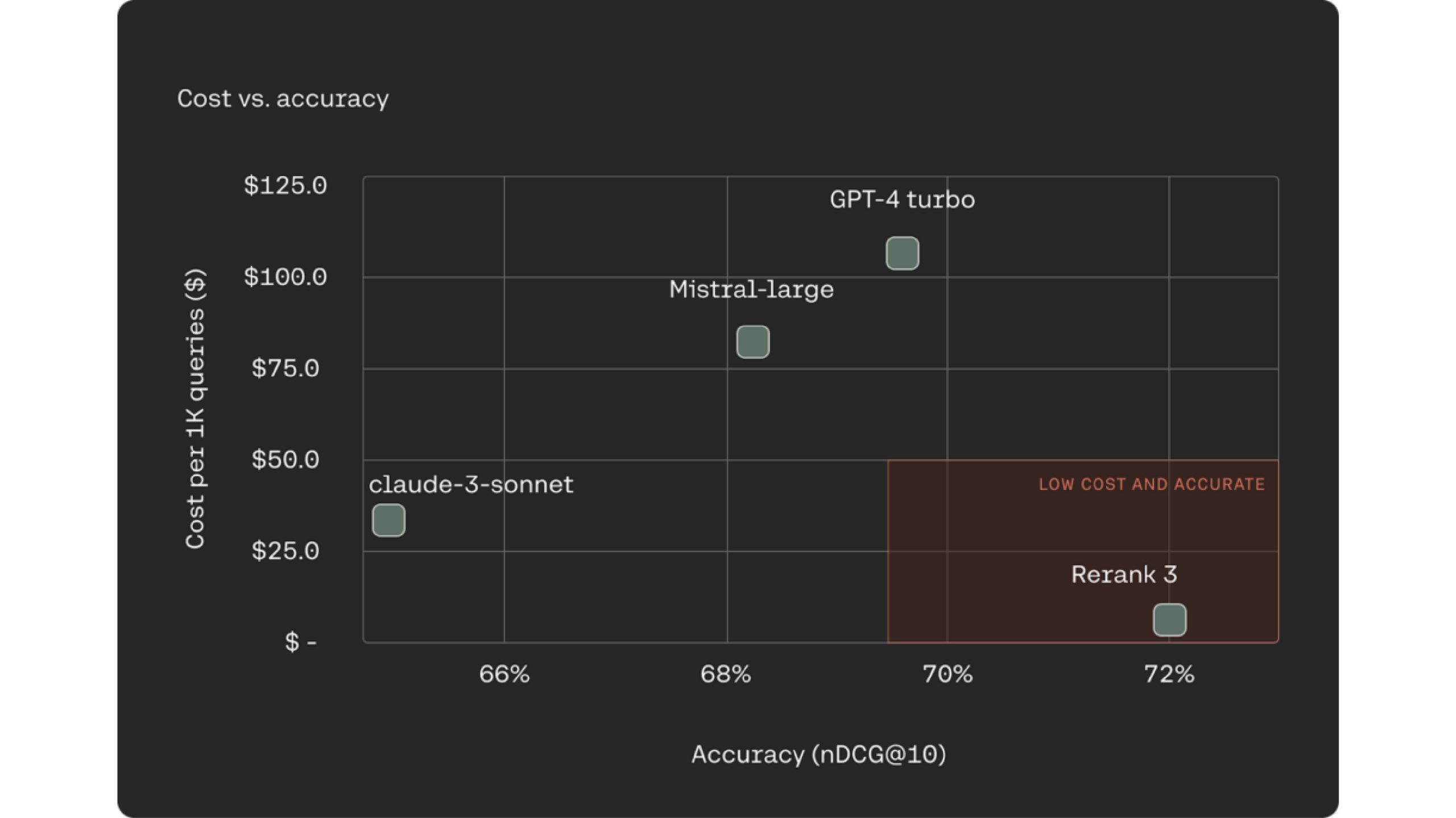

An increasingly common and well-known approach for RAG systems is to use LLMs as rerankers in the document retrieval process. Rerank 3 outperforms industry-leading LLMs such as Claude 3 Sonnet and GPT Turbo in ranking accuracy and is 90-98% less expensive.

Rerank 3 increases the accuracy and quality of the LLM response. It also helps reduce end-to-end TCO. Rerank achieves this by weeding out the less relevant documents so that the LLM draws its answers from only the small subset of relevant ones.

Conclusion

Rerank 3 is a revolutionary tool for enterprise search and RAG systems. It delivers high precision when handling complex data structures and multiple languages. Its longer context window reduces the need for data chunking, and it lowers latency and total cost of ownership. This results in faster search results and cost-effective RAG implementations. It also integrates with Elasticsearch to improve decision making and customer experience.
