Large language models (LLMs) have attracted significant attention as tools for solving planning problems, but current methods face clear limitations. Direct plan generation with LLMs has had limited success: GPT-4 achieves only 35% accuracy on simple planning tasks. This low accuracy highlights the need for more effective approaches. A further challenge is the lack of rigorous techniques and benchmarks for evaluating how well natural-language planning descriptions are translated into structured planning languages such as the Planning Domain Definition Language (PDDL).

Researchers have explored several ways to address these challenges. One is to have LLMs generate plans directly, but this performs poorly even on simple planning tasks. Another, known as “planner-augmented LLMs,” combines LLMs with classical planning techniques. This approach frames the problem as machine translation: natural-language descriptions of planning problems are converted into structured formats such as PDDL, finite state automata, or logic programs.

Translating natural language into PDDL is a hybrid approach that draws on the strengths of both components: the LLM interprets the natural-language description, and an efficient classical planner then computes a solution that is correct with respect to the generated PDDL. However, evaluating code generation tasks, including PDDL translation, remains difficult. Existing evaluation methods, such as match-based metrics and plan validators, are not sufficient to assess whether the generated PDDL is accurate and faithful to the original instructions.
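To illustrate why match-based metrics can mislead, here is a minimal Python sketch built on a toy two-block Blocksworld problem. The problem text, the predicate names (`on`, `on-table`, `clear`, `arm-empty`), and the helpers `exact_match` and `bag_match` are hypothetical illustrations, not part of Planetarium. Reordering the `:init` facts defeats a strict string comparison, and renaming the objects defeats even an order-insensitive comparison, although all three strings describe the same problem.

```python
import re
from collections import Counter

ATOM = re.compile(r"\(([^()]*)\)")  # innermost parenthesized groups, e.g. "(on a b)"

def exact_match(a: str, b: str) -> bool:
    """Strict match: identical text once whitespace is normalized."""
    return " ".join(a.split()) == " ".join(b.split())

def bag_match(a: str, b: str) -> bool:
    """Order-insensitive match on the atoms before and after "(:goal".

    Rough sketch: it only looks at innermost parentheses, so it ignores
    nesting such as (and ...) or (not ...).
    """
    def bags(text):
        head, _, tail = text.partition("(:goal")
        return [Counter(tuple(atom.split()) for atom in ATOM.findall(part))
                for part in (head, tail)]
    return bags(a) == bags(b)

P1 = """(define (problem p) (:domain blocksworld) (:objects a b)
  (:init (arm-empty) (clear a) (clear b) (on-table a) (on-table b))
  (:goal (and (on a b))))"""
# Same problem with the :init facts listed in a different order.
P2 = """(define (problem p) (:domain blocksworld) (:objects a b)
  (:init (on-table b) (on-table a) (clear b) (clear a) (arm-empty))
  (:goal (and (on a b))))"""
# Same problem again, with objects renamed a -> x and b -> y.
P3 = """(define (problem p) (:domain blocksworld) (:objects x y)
  (:init (arm-empty) (clear x) (clear y) (on-table x) (on-table y))
  (:goal (and (on x y))))"""

print(exact_match(P1, P2), bag_match(P1, P2), bag_match(P1, P3))  # False True False
```

Both metrics reject `P3` even though it is the same planning problem, which is the kind of gap a structural equivalence check is meant to close.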

Researchers from Brown University's Department of Computer Science present Planetarium, a rigorous benchmark for evaluating how well LLMs translate natural-language descriptions of planning problems into PDDL. To address the difficulty of assessing whether generated PDDL is accurate, the benchmark formally defines when two planning problems are equivalent and provides an algorithm that checks whether two PDDL problems satisfy this definition. Planetarium includes a dataset of 132,037 ground-truth PDDL problems with corresponding text descriptions that vary in abstraction and size. It also evaluates current LLMs in both zero-shot and fine-tuned configurations, and the results show how difficult the task is: GPT-4o achieves only 35.1% accuracy zero-shot. Planetarium is publicly available as a tool for measuring progress in LLM-based PDDL generation and for further development and evaluation.

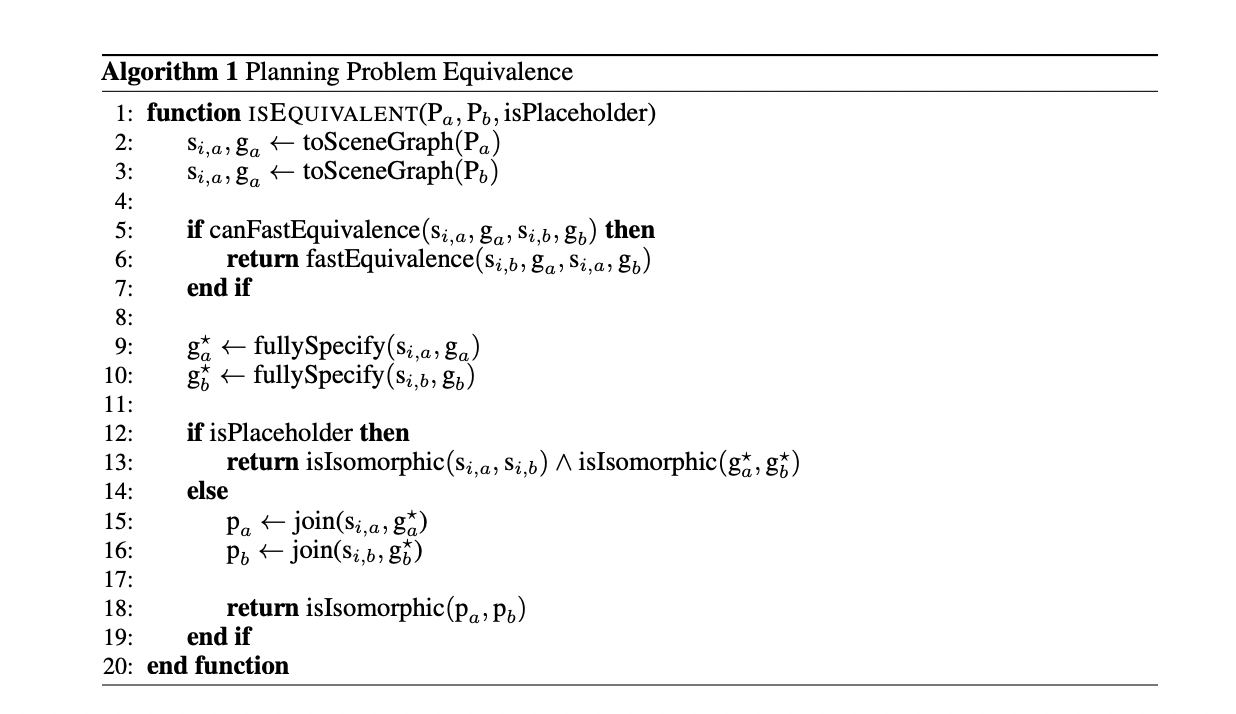

The Planetarium benchmark introduces a rigorous algorithm for evaluating PDDL equivalence, addressing the challenge of comparing different representations of the same planning problem. The algorithm transforms PDDL code into scene graphs, one for the initial state and one for the goal. It then fully specifies the goal scene by adding all trivially true edges, and builds a problem graph by joining the initial and goal scene graphs.
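The steps above can be sketched in Python for a toy Blocksworld domain. This is a hedged illustration, not Planetarium's implementation: the fact-tuple encoding, the predicate names (`on`, `on-table`, `clear`, `holding`, `arm-empty`), and the helpers `blocksworld_states`, `fully_specify`, and `problem_graph` are assumptions. Full specification is done by brute force, keeping only the facts shared by every valid state that satisfies the goal; enumerating valid states stands in for reachability because, with the usual pick-up, put-down, stack, and unstack actions, any valid Blocksworld configuration can be reached from any other. The problem graph gives each fact its own node, tagged with its scene and linked to its argument objects by position-labelled edges.

```python
from itertools import product

def _is_valid(below, blocks):
    """Check a candidate state given as {block: what it rests on}."""
    held = [b for b in blocks if below[b] == "held"]
    supports = [s for s in below.values() if s in blocks]
    if len(held) > 1 or len(supports) != len(set(supports)):
        return False  # one gripper; at most one block directly on each block
    for b in blocks:
        cur, steps = b, 0
        while below[cur] in blocks:  # walk down the tower under b
            cur, steps = below[cur], steps + 1
            if steps > len(blocks):
                return False  # cycle, e.g. a on b and b on a
        if cur != b and below[cur] == "held":
            return False  # nothing may rest on the held block
    return True

def _facts(below):
    """Ground facts that hold in a valid state."""
    facts = {("arm-empty",)} if "held" not in below.values() else set()
    for b, s in below.items():
        if s == "table":
            facts.add(("on-table", b))
        elif s == "held":
            facts.add(("holding", b))
        else:
            facts.add(("on", b, s))
        if s != "held" and b not in below.values():
            facts.add(("clear", b))  # nothing is stacked on b
    return frozenset(facts)

def blocksworld_states(blocks):
    """Yield every valid state over `blocks` as a frozenset of facts."""
    for choice in product(["table", "held", *blocks], repeat=len(blocks)):
        below = dict(zip(blocks, choice))
        if _is_valid(below, blocks):
            yield _facts(below)

def fully_specify(goal, blocks):
    """Facts true in every valid state satisfying the goal (None if unsatisfiable)."""
    matching = [s for s in blocksworld_states(blocks) if goal <= s]
    return frozenset.intersection(*matching) if matching else None

def problem_graph(init, goal):
    """Join two scenes: shared object nodes plus one node per fact, tagged with
    its scene and linked to its arguments by position-labelled edges."""
    nodes, edges = set(), set()
    for scene, facts in (("init", init), ("goal", goal)):
        for fact in facts:
            fact_node = (scene, fact)
            nodes.add(fact_node)
            for position, obj in enumerate(fact[1:]):
                nodes.add(obj)
                edges.add((fact_node, position, obj))
    return nodes, edges

blocks = ["a", "b", "c"]
init = {("on-table", "a"), ("on-table", "b"), ("on-table", "c"),
        ("clear", "a"), ("clear", "b"), ("clear", "c"), ("arm-empty",)}
goal = fully_specify({("on", "a", "b"), ("on", "b", "c")}, blocks)
print(sorted(goal))
# [('arm-empty',), ('clear', 'a'), ('on', 'a', 'b'), ('on', 'b', 'c'), ('on-table', 'c')]
nodes, edges = problem_graph(init, goal)
```

For the goal "a on b, b on c" over three blocks, only one valid state matches, so the fully specified goal also gains `on-table c`, `clear a`, and `arm-empty`: edges that the original goal left implicit.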

Equivalence checking proceeds in several steps. First, quick checks catch obvious cases of equivalence or non-equivalence. If these are inconclusive, the algorithm fully specifies the goal scenes, identifying every proposition that is true in all reachable goal states. It then operates in one of two modes: one for problems where object identity matters, and one where objects in goal states are treated as placeholders. When object identity matters, it checks whether the combined problem graphs are isomorphic. For placeholder problems, it checks isomorphism of the initial scenes and of the goal scenes separately. Together, these steps let the check recognize two problems as equivalent even when they differ in object names, fact ordering, or how explicitly the goal is stated.
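The quick checks and the two matching modes can be sketched with a brute-force search over object bijections. This is a minimal illustration, not Planetarium's algorithm: it assumes both problems are already fully specified, encodes each scene as a set of fact tuples, assumes every object appears in at least one fact, and tries all permutations, so it only suits tiny problems (a practical implementation would use a proper graph-isomorphism routine). The names `find_isomorphism`, `quick_check`, and `equivalent` are hypothetical.

```python
from collections import Counter
from itertools import permutations

def objects_of(*scenes):
    """Every object mentioned in the given scenes (sets of fact tuples)."""
    return sorted({arg for scene in scenes for fact in scene for arg in fact[1:]})

def rename(scene, mapping):
    """Apply an object renaming to every fact in a scene."""
    return {(fact[0], *(mapping[arg] for arg in fact[1:])) for fact in scene}

def find_isomorphism(pairs):
    """Find ONE object bijection mapping every left scene onto its right scene.

    Brute force over all permutations: only suitable for tiny problems.
    """
    left = objects_of(*(a for a, _ in pairs))
    right = objects_of(*(b for _, b in pairs))
    if len(left) != len(right):
        return None
    for perm in permutations(right):
        mapping = dict(zip(left, perm))
        if all(rename(a, mapping) == b for a, b in pairs):
            return mapping
    return None

def quick_check(p1, p2):
    """Cheap tests: True/False when they settle the question, else None."""
    if p1 == p2:
        return True  # identical fact sets
    for s1, s2 in zip(p1, p2):  # (init, init), then (goal, goal)
        if Counter(f[0] for f in s1) != Counter(f[0] for f in s2):
            return False  # predicate counts differ, so no renaming can match
    return None

def equivalent(p1, p2, placeholder_goals=False):
    """p1 and p2 are (init, goal) pairs of fully specified fact sets."""
    decided = quick_check(p1, p2)
    if decided is not None:
        return decided
    (init1, goal1), (init2, goal2) = p1, p2
    if placeholder_goals:
        # Goal objects are placeholders: each scene may use its own bijection.
        return (find_isomorphism([(init1, init2)]) is not None
                and find_isomorphism([(goal1, goal2)]) is not None)
    # Object identity matters: one bijection must align both scenes at once.
    return find_isomorphism([(init1, init2), (goal1, goal2)]) is not None

# Tower a-on-b: problem 1 reverses it; problem 2 (objects renamed) keeps it.
init1 = {("on", "a", "b"), ("on-table", "b"), ("clear", "a")}
goal1 = {("on", "b", "a"), ("on-table", "a"), ("clear", "b")}
init2 = {("on", "x", "y"), ("on-table", "y"), ("clear", "x")}
goal2 = set(init2)
print(equivalent((init1, goal1), (init2, goal2)))                          # False
print(equivalent((init1, goal1), (init2, goal2), placeholder_goals=True))  # True
```

When object identity matters, reversing a tower and keeping it are different problems; with placeholder goals, both goals reduce to "some block on top of some other block", so the separate scene checks accept them.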

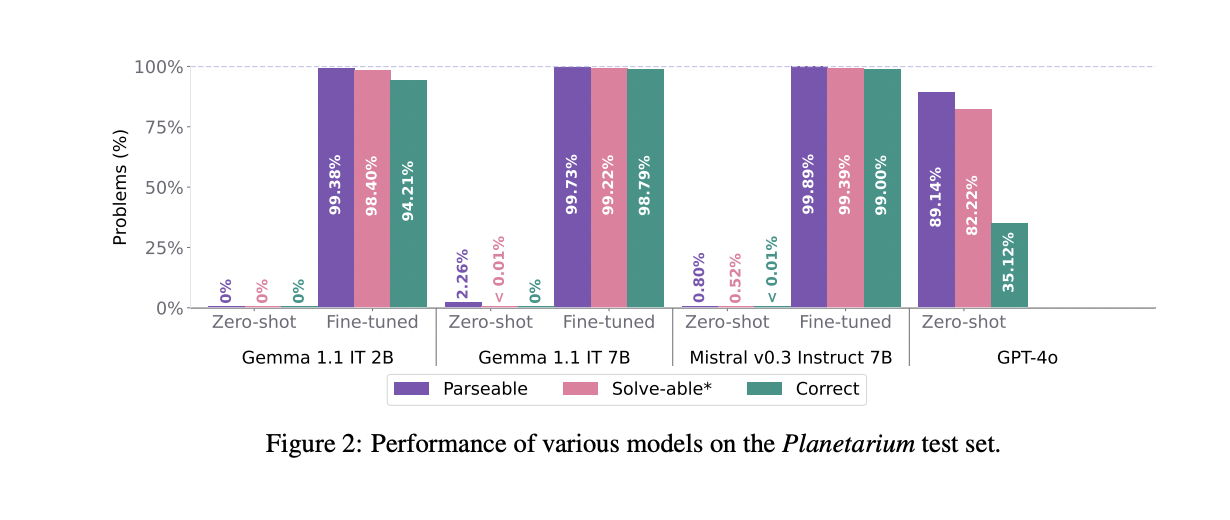

The Planetarium benchmark was used to evaluate several LLMs on translating natural-language descriptions into PDDL. In zero-shot configurations, GPT-4o, Mistral v0.3 7B Instruct, and Gemma 1.1 IT 2B and 7B all performed poorly, with GPT-4o achieving the highest accuracy at 35.12%. A breakdown of GPT-4o's results shows that abstract task descriptions are harder to translate than explicit ones, and that fully explicit task descriptions make it easier to generate parsable PDDL code. Fine-tuning substantially improved performance for all open-weight models, with Mistral v0.3 7B Instruct achieving the highest accuracy after fine-tuning.

This study presents the Planetarium benchmark, a significant step toward assessing how well LLMs translate natural language into PDDL for planning tasks. It addresses both technical and societal challenges, emphasizing that accurate translations are needed to avoid potential harm from misaligned results. Current performance, even for advanced models such as GPT-4o, highlights the complexity of the task and the need for further innovation. As LLM-based planning systems evolve, Planetarium provides a framework for measuring progress and ensuring trustworthiness. The research pushes the boundaries of AI capabilities and underscores the importance of responsible development for trustworthy AI planning systems.

Check out the Paper. All credit for this research goes to the researchers of this project.

Asjad is a consultant intern at Marktechpost. He is pursuing a Bachelor's degree in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.
