Introduction

Mistral has released its first multimodal model, Pixtral-12B-2409. The model is built on Nemo 12B, Mistral’s 12-billion-parameter language model. What makes it different? It accepts both images and text as input. Let’s take a closer look at the model, how to use it, how well it performs, and what else you need to know.

Overview

- Discover Mistral's new Pixtral-12B, a multimodal model that combines text and image processing for versatile AI applications.

- Learn how to use Pixtral-12B, Mistral's latest AI model, designed to handle text and high-resolution images.

- Explore the capabilities and use cases of the Pixtral-12B, which includes a vision adapter for better image understanding.

- Understand the multimodal features of Pixtral-12B and its potential applications in image captioning, story generation, and more.

- Learn about Pixtral-12B's design and performance, and how it can be fine-tuned for specific multimodal tasks.

What is Pixtral-12B?

Pixtral-12B is a multimodal model derived from Mistral's Nemo 12B, with an added 400M-parameter vision adapter. The model can be downloaded via a torrent file or from Hugging Face under an Apache 2.0 license. Let's look at some of its technical features:

| Feature | Details |
| --- | --- |
| Model size | 12 billion parameters |
| Layers | 40 layers |
| Vision adapter | 400 million parameters, using GeLU activation |
| Image input | Accepts 1024×1024 images via URL or base64, segmented into 16×16 pixel patches |
| Vision encoder | 2D RoPE (Rotary Position Embeddings) improves spatial understanding |
| Vocabulary size | 131,072 tokens |
| Special tokens | img, img_break, and img_end |
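To get a feel for what the image settings in the table imply, the sketch below counts the patches a 1024×1024 image produces with 16×16 patches. The layout of the special tokens (one img token per patch, an img_break after each row of patches, and img_end taking the place of the final break) is an assumption made for illustration, and `image_token_estimate` is a hypothetical helper, not part of any library.

```python
import math


def image_token_estimate(width: int, height: int, patch_size: int = 16) -> dict:
    """Estimate how many patches and tokens a single image occupies."""
    cols = math.ceil(width / patch_size)   # patches per row
    rows = math.ceil(height / patch_size)  # number of patch rows
    patches = rows * cols
    # Assumption: every row of patches is followed by one separator token
    # (img_break), and the last separator is replaced by img_end, so the
    # special tokens add exactly one extra token per row.
    return {"rows": rows, "cols": cols, "patches": patches, "tokens": patches + rows}


print(image_token_estimate(1024, 1024))
```

Under these assumptions, a full-resolution image is split into a 64×64 grid of patches and takes up a few thousand positions in the context window.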

How to use Pixtral-12B-2409?

As of September 15, 2024, the model is not yet available on Le Chat or La Plateforme, so we cannot use it through Mistral's chat interface or API. We can, however, download the weights via a torrent link and run the model ourselves, or even fine-tune the weights to suit our needs. We can also use the model through Hugging Face. Let's look at both options in detail:

Torrent link to use:

magnet:?xt=urn:btih:7278e625de2b1da598b23954c13933047126238a&dn=pixtral-12b-240910&tr=udp%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce&tr=udp%3A%2F%2Fopen.demonii.com%3A1337%2Fannounce&tr=http%3A%2F%2Ftracker.ipv6tracker.org%3A80%2Fannounce
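If you want to sanity-check a magnet link before handing it to a torrent client, the standard library is enough to pull it apart. The sketch below uses the link from this article, written on one logical line with the tracker URLs percent-encoded; `parse_magnet` is a hypothetical helper name.

```python
from urllib.parse import parse_qs

MAGNET = (
    "magnet:?xt=urn:btih:7278e625de2b1da598b23954c13933047126238a"
    "&dn=pixtral-12b-240910"
    "&tr=udp%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce"
    "&tr=udp%3A%2F%2Fopen.demonii.com%3A1337%2Fannounce"
    "&tr=http%3A%2F%2Ftracker.ipv6tracker.org%3A80%2Fannounce"
)


def parse_magnet(link: str) -> dict:
    """Split a magnet link into its info hash, display name, and trackers."""
    scheme, _, query = link.partition("?")
    if scheme != "magnet:":
        raise ValueError("not a magnet link")
    fields = parse_qs(query)  # parse_qs also percent-decodes the values
    return {
        "info_hash": fields["xt"][0].rsplit(":", 1)[-1],
        "name": fields["dn"][0],
        "trackers": fields.get("tr", []),
    }


info = parse_magnet(MAGNET)
print(info["name"], info["info_hash"])
for tracker in info["trackers"]:
    print(tracker)
```

A link that fails to parse, or whose tracker entries do not decode to ordinary `udp://` or `http://` URLs, was probably damaged while being copied.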

I am using a laptop running Ubuntu, so I will use the Transmission app (it comes pre-installed on most Ubuntu systems). You can use any other torrent client to download the open-source model.

- Click “File” at the top left and select the “Open URL” option, then paste the magnet link you copied.

- Click “Open” to start downloading the Pixtral-12B model. A folder containing the model files will be downloaded.

Hugging Face

This model requires a high-performance GPU, so I suggest you use the paid version of Google Colab or Jupyter Notebook with RunPod. I will be using RunPod for the demonstration of the Pixtral-12B model. If you are using a RunPod instance with a 40 GB disk, I suggest you use the PCIe A100 GPU.
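A quick back-of-the-envelope calculation shows why a large GPU is needed. The sketch below assumes the weights are stored as 16-bit values (2 bytes per parameter) and counts the 12 billion language-model parameters plus the 400 million vision-adapter parameters; both the precision and the helper name `weight_size_gb` are assumptions for illustration.

```python
def weight_size_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# 12B language-model parameters + 400M vision-adapter parameters
total_params = 12e9 + 400e6

# Assumption: 16-bit weights, i.e. 2 bytes per parameter
print(f"{weight_size_gb(total_params):.1f} GB")
```

This covers the weights only. The GPU also needs headroom for activations and the key-value cache, which grows with the context length you configure.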

We will run Pixtral-12B with vLLM. Install the following packages first:

!pip install vllm
!pip install --upgrade mistral_common

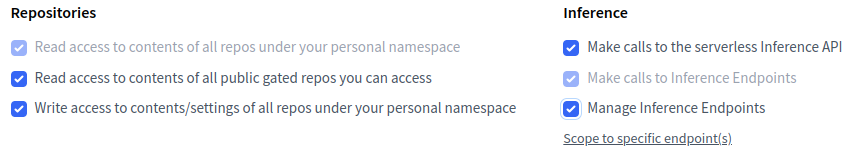
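Before moving on, it can help to confirm that both packages are visible to the notebook kernel. This is a minimal sketch using only the standard library; `installed_version` is a hypothetical helper, and the package names are the ones used in the install commands.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


for name in ("vllm", "mistral_common"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```

If either package shows up as not installed, restart the kernel after running the install commands and check again.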

Go to https://huggingface.co/mistralai/Pixtral-12B-2409 and accept the conditions to get access to the model. Then go to your profile, click “Access Tokens”, and create a token. If you don’t already have an access token, create one and make sure the following boxes are checked:

Now run the following code and paste the access token to authenticate with Hugging Face:

from huggingface_hub import notebook_login

# Displays a login widget; paste your own access token into it
notebook_login()

Loading the model takes a while, because about 25 GB of weights are downloaded first:

from vllm import LLM
from vllm.sampling_params import SamplingParams

model_name = "mistralai/Pixtral-12B-2409"

# Upper bound on the number of tokens generated per response
sampling_params = SamplingParams(max_tokens=8192)

# tokenizer_mode="mistral" tells vLLM to use Mistral's tokenizer format
llm = LLM(model=model_name, tokenizer_mode="mistral", max_model_len=70000)

prompt = "Describe this image"
image_url = "https://images.news18.com/ibnlive/uploads/2024/07/suryakumar-yadav-catch-1-2024-07-4a496281eb830a6fc7ab41e92a0d295e-3x2.jpg"

# A single user message that carries both the text prompt and the image
messages = [
    {
        "role": "user",
        "content": [{"type": "text", "text": prompt}, {"type": "image_url", "image_url": {"url": image_url}}]
    },
]

I asked the model to describe the following image, which is from the 2024 T20 World Cup:

outputs = llm.chat(messages, sampling_params=sampling_params)
print('\n' + outputs[0].outputs[0].text)

Output

Processed prompts: 100%|██████████| 1/1 [00:06<00:00, 6.52s/it, est. speed input: 429.80 toks/s, output: 51.54 toks/s]

The image is a composite of three frames showing a cricket player in action, likely from the T20 World Cup. Here's a detailed summary:

1. **Left Frame**:

   - The player is seen mid-jump, looking upwards with his arms outstretched, attempting to catch a cricket ball.

   - He is wearing a blue jersey with the name "Surya" and the number "63" printed on the back.

   - His expression is intense and focused, emphasizing the concentration required for the catch.

2. **Middle Frame**:

   - The player has his hands cupped together, measurably closer to catching the ball.

   - He wears the same blue jersey with red sleeves, which also features the "India" team logo and the sponsor's branding, specifically from Adidas.

   - The ball is now very close to his hands, indicating a high likelihood of a successful catch.

3. **Right Frame**:

   - The player has successfully caught the ball, raising both hands above his head with the ball clasped in his left hand.

   - He displays a triumphant expression with a broad smile, typically associated with successfully making a catch.

   - His uniformity and raised hands convey a sense of victory and great effort.

The background in all frames features billboards indicating the "T20 World Cup," and specifically, a prominent sign for "Emirates," which has presumably sponsored the event. There is green grass on the field, reflecting the actual playing conditions during the match.

Overall, the image illustrates a key moment in a cricket game, showcasing the player's athletic skill and team spirit.

From the output, we can see that the model recognized the image as coming from the T20 World Cup and distinguished the three frames within the single image to explain what was happening.
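The feature table earlier notes that images can also be supplied as base64 rather than by URL. A common way to do that is to wrap the encoded bytes in a data URL and put it where the HTTP URL would go. The sketch below shows only the encoding step. The helper names and the file name `catch.jpg` are hypothetical, and you should verify that your vLLM version accepts data URLs in the `image_url` field.

```python
import base64
import mimetypes
from pathlib import Path


def bytes_to_data_url(data: bytes, mime_type: str) -> str:
    """Wrap raw image bytes in a base64 data URL."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def image_file_to_data_url(path: str) -> str:
    """Read a local image file and return it as a data URL."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"cannot infer an image type from {path!r}")
    return bytes_to_data_url(Path(path).read_bytes(), mime_type)


# Hypothetical usage with a local file:
# image_url = image_file_to_data_url("catch.jpg")
print(bytes_to_data_url(b"abc", "image/png"))
```

The resulting string can then be assigned to `image_url` in place of the web address, leaving the rest of the message unchanged.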

prompt = "Write a story describing the whole event that might have happened"
image_url = "https://images.news18.com/ibnlive/uploads/2024/07/suryakumar-yadav-catch-1-2024-07-4a496281eb830a6fc7ab41e92a0d295e-3x2.jpg"

# Same image as before, paired with a new text prompt
messages = [
    {
        "role": "user",
        "content": [{"type": "text", "text": prompt}, {"type": "image_url", "image_url": {"url": image_url}}]
    },
]

outputs = llm.chat(messages, sampling_params=sampling_params)
print('\n' + outputs[0].outputs[0].text)

Output

Processed prompts: 100%|██████████| 1/1 [00:06<00:00, 6.99s/it, est. speed input: 401.77 toks/s, output: 52.92 toks/s]

In the heart of a bustling stadium, the T20 World Cup is in full swing. The atmosphere is electric, and the crowd is on the edge of their seats, eagerly watching the match between India and one of their formidable rivals. The focus shifts to the Indian fielders, specifically Suryakumar Yadav, who is positioned strategically on the boundary line.

As the ball sails into the air after a powerful shot from the opposing batsman, Suryakumar's eyes follow its trajectory. With age-old instincts honed by countless hours of practice, he knows that this is his moment. The ball is lofted high, giving him just enough time to gauge its path and plan his next move.

In the first snapshot, Suryakumar is Hat-cheaper crouched, eyes fixed on the ball as it peaks. The anticipation is palpable. His teammates watch intently, hoping he can execute the catch perfectly.

The second snapshot captures a moment of pure athleticism. With a burst of energy and agility, Suryakumar springs into action. He takes a few swift steps forward, his body eager to reach the height required to make the catch. The ball is slightly above his head, and he extends his arms, fingers spread wide, ready to secure the prize.

In the final snapshot, Suryakumar's face lights up with accomplishment. His eyes are focused on the ball, now safely nestled in his palm. The crowd explodes with cheers, acknowledging the outstanding effort. His teammates rush towards him, celebrating the crucial catch that could turn the tide of the match.

This sequence of successful plays not only highlights Suryakumar's individual skill but also underscores the strategic teamwork and determined spirit that define the Indian cricket team in the prestigious T20 World Cup.

When asked to write a story about the image, the model picked up context from the surroundings and from what happened in each frame.
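Both examples build the same message structure and change only the prompt, so it can be convenient to wrap that structure in a small function. The sketch below is one way to do it; `build_messages` is a hypothetical helper, and the dictionary layout simply mirrors the messages used above.

```python
def build_messages(prompt: str, image_url: str) -> list:
    """Build a single-turn chat request containing one text part and one image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


# Hypothetical usage, reusing the llm and sampling_params objects created earlier:
# outputs = llm.chat(build_messages("Describe this image", image_url),
#                    sampling_params=sampling_params)
example = build_messages("Describe this image", "https://example.com/photo.jpg")
print(example[0]["content"][0]["text"])
```

Trying a new prompt on the same image then only requires changing the first argument.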

Conclusion

The Pixtral-12B model significantly extends Mistral’s AI capabilities by combining text and image processing, which broadens its range of use cases. Its ability to handle high-resolution 1024×1024 images with a detailed understanding of spatial relationships, together with its strong language capabilities, makes it an excellent tool for multimodal tasks such as image captioning and story generation.

Beyond its out-of-the-box capabilities, the model can be fine-tuned to meet specific needs, whether to improve image recognition, refine language generation, or adapt it to more specialized domains. This flexibility is a crucial advantage for developers and researchers who want to tailor the model to their own use cases.

Frequently Asked Questions

Q1. What is vLLM?

A. vLLM is a library optimized for efficient inference of large language models, improving speed and memory usage during model execution.

Q2. What does SamplingParams do?

A. SamplingParams in vLLM controls how the model generates text, specifying parameters such as the maximum number of tokens and the sampling technique used for generation.

Q3. Will the model be available on Mistral’s Le Chat?

A. Yes. Sophia Yang, Mistral’s Head of Developer Relations, mentioned that the model will soon be available on Le Chat and La Plateforme.
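As a rough illustration of the SamplingParams answer above, the sketch below contrasts two sets of generation settings: a deterministic one for descriptions and a more varied one for stories. The specific values and the helper names are assumptions chosen for illustration, not recommendations from Mistral or vLLM. The vLLM import is done lazily, so the settings can be inspected even where vLLM is not installed.

```python
def sampling_settings(creative: bool, max_tokens: int = 512) -> dict:
    """Return keyword arguments for vLLM's SamplingParams."""
    if creative:
        # Higher temperature with nucleus sampling gives more varied text
        return {"max_tokens": max_tokens, "temperature": 0.8, "top_p": 0.95}
    # A temperature of 0 selects the most likely token at each step (greedy)
    return {"max_tokens": max_tokens, "temperature": 0.0}


def make_sampling_params(creative: bool, max_tokens: int = 512):
    """Build a SamplingParams object; requires vLLM to be installed."""
    from vllm.sampling_params import SamplingParams  # lazy import
    return SamplingParams(**sampling_settings(creative, max_tokens))


print(sampling_settings(creative=False))
print(sampling_settings(creative=True, max_tokens=1024))
```

The examples in this article set only `max_tokens` and leave everything else at vLLM's defaults.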