This is a joint blog with AWS and Philips.

Philips is a health technology company focused on improving people’s lives through meaningful innovation. Since 2014, the company has been offering customers its Philips HealthSuite Platform, which orchestrates dozens of AWS services that healthcare and life sciences companies use to improve patient care. It partners with healthcare providers, startups, universities, and other companies to develop technology that helps doctors make more precise diagnoses and deliver more personalized treatment for millions of people worldwide.

One of the key drivers of Philips’ innovation strategy is artificial intelligence (AI), which enables the creation of smart and personalized products and services that can improve health outcomes, enhance customer experience, and optimize operational efficiency.

Amazon SageMaker provides purpose-built tools for machine learning operations (MLOps) to help automate and standardize processes across the ML lifecycle. With SageMaker MLOps tools, teams can easily train, test, troubleshoot, deploy, and govern ML models at scale to boost productivity of data scientists and ML engineers while maintaining model performance in production.

In this post, we describe how Philips partnered with AWS to develop AI ToolSuite, a scalable, secure, and compliant ML platform on SageMaker. This platform provides capabilities ranging from experimentation, data annotation, and training to model deployment and reusable templates. All these capabilities are built to help multiple lines of business innovate with speed and agility while governing at scale with central controls. We outline the key use cases that provided requirements for the first iteration of the platform, the core components, and the outcomes achieved. We conclude by identifying the ongoing efforts to enable the platform with generative AI workloads and rapidly onboard new users and teams to adopt the platform.

Customer context

Philips uses AI in various domains, such as imaging, diagnostics, therapy, personal health, and connected care. Some examples of AI-enabled solutions that Philips has developed over the past years are:

- Philips SmartSpeed – An AI-based imaging technology for MRI that uses a unique Compressed-SENSE based deep learning AI algorithm to take speed and image quality to the next level for a large variety of patients

- Philips eCareManager – A telehealth solution that uses AI to support the remote care and management of critically ill patients in intensive care units, by using advanced analytics and clinical algorithms to process the patient data from multiple sources, and providing actionable insights, alerts, and recommendations for the care team

- Philips Sonicare – A smart toothbrush that uses AI to analyze the brushing behavior and oral health of users, and provide real-time guidance and personalized recommendations, such as optimal brushing time, pressure, and coverage, to improve their dental hygiene and prevent cavities and gum diseases

For many years, Philips has been pioneering the development of data-driven algorithms to fuel its innovative solutions across the healthcare continuum. In the diagnostic imaging domain, Philips developed a multitude of ML applications for medical image reconstruction and interpretation, workflow management, and treatment optimization. Teams in patient monitoring, image-guided therapy, ultrasound, and personal health have also been creating ML algorithms and applications. However, innovation was hampered by fragmented AI development environments across teams. These environments ranged from individual laptops and desktops to diverse on-premises computational clusters and cloud-based infrastructure. This heterogeneity initially enabled different teams to move fast in their early AI development efforts, but is now holding back opportunities to scale and improve the efficiency of Philips’ AI development processes.

It was evident that a fundamental shift towards a unified and standardized environment was imperative to truly unleash the potential of data-driven endeavors at Philips.

Key AI/ML use cases and platform requirements

AI/ML-enabled propositions can transform healthcare by automating administrative tasks done by clinicians. For example:

- AI can analyze medical images to help radiologists diagnose diseases faster and more accurately

- AI can predict future medical events by analyzing patient data and improving proactive care

- AI can recommend personalized treatment tailored to patients’ needs

- AI can extract and structure information from clinical notes to make record-taking more efficient

- AI interfaces can provide patient support for queries, reminders, and symptom checkers

Overall, AI/ML promises reduced human error, time and cost savings, optimized patient experiences, and timely, personalized interventions.

A key requirement for the ML development and deployment platform was support for the continuous, iterative development and deployment process, as shown in the following figure.

AI asset development starts in a lab environment, where the data is collected and curated, and then the models are trained and validated. When the model is ready and approved for use, it’s deployed into the real-world production systems. Once deployed, model performance is continuously monitored. The real-world performance and feedback are eventually used for further model improvements with full automation of the model training and deployment.

The more detailed AI ToolSuite requirements were driven by three example use cases:

- Develop a computer vision application aimed at object detection at the edge. The data science team expected an AI-based automated image annotation workflow to speed up a time-consuming labeling process.

- Enable a data science team to manage a family of classic ML models for benchmarking statistics across multiple medical units. The project required automation of model deployment, experiment tracking, model monitoring, and more control over the entire process end to end both for auditing and retraining in the future.

- Improve the quality and time to market for deep learning models in diagnostic medical imaging. The existing computing infrastructure didn’t allow for running many experiments in parallel, which delayed model development. Also, for regulatory purposes, it’s necessary to enable full reproducibility of model training for several years.

Non-functional requirements

Building a scalable and robust AI/ML platform requires careful consideration of non-functional requirements. These requirements go beyond the specific functionalities of the platform and focus on ensuring the following:

- Scalability – The AI ToolSuite platform must be able to scale Philips’s insights generation infrastructure more effectively so that the platform can handle a growing volume of data, users, and AI/ML workloads without sacrificing performance. It should be designed to scale horizontally and vertically to meet increasing demands seamlessly while providing central resource management.

- Performance – The platform must deliver high-performance computing capabilities to efficiently process complex AI/ML algorithms. SageMaker offers a wide range of instance types, including instances with powerful GPUs, which can significantly accelerate model training and inference tasks. It also should minimize latency and response times to provide real-time or near-real-time results.

- Reliability – The platform must provide a highly reliable and robust AI infrastructure that spans across multiple Availability Zones. This multi-AZ architecture should ensure uninterrupted AI operations by distributing resources and workloads across distinct data centers.

- Availability – The platform must be available 24/7, with minimal downtime for maintenance and upgrades. AI ToolSuite’s high availability should include load balancing, fault-tolerant architectures, and proactive monitoring.

- Security and Governance – The platform must employ robust security measures, including encryption, access controls, dedicated roles, and authentication mechanisms, together with continuous monitoring for unusual activities and security audits.

- Data Management – Efficient data management is crucial for AI/ML platforms. Regulations in the healthcare industry call for especially rigorous data governance. The platform should include features like data versioning, data lineage, data governance, and data quality assurance to ensure accurate and reliable results.

- Interoperability – The platform should be designed to integrate easily with Philips’s internal data repositories, allowing seamless data exchange and collaboration with third-party applications.

- Maintainability – The platform’s architecture and code base should be well organized, modular, and maintainable. This enables Philips ML engineers and developers to provide updates, bug fixes, and future enhancements without disrupting the entire system.

- Resource optimization – The platform should monitor utilization reports very closely to make sure computing resources are used efficiently and allocate resources dynamically based on demand. In addition, Philips should use AWS Billing and Cost Management tools to make sure teams receive notifications when utilization passes the allocated threshold amount.

- Monitoring and logging – The platform should use Amazon CloudWatch alerts for comprehensive monitoring and logging capabilities, which are necessary to track system performance, identify bottlenecks, and troubleshoot issues effectively.

- Compliance – The platform can also help improve regulatory compliance of AI-enabled propositions. Reproducibility and traceability must be enabled automatically by the end-to-end data processing pipelines, where many mandatory documentation artifacts, such as data lineage reports and model cards, can be prepared automatically.

- Testing and validation – Rigorous testing and validation procedures must be in place to ensure the accuracy and reliability of AI/ML models and prevent unintended biases.

Solution overview

AI ToolSuite is an end-to-end, scalable, quick start AI development environment offering native SageMaker and associated AI/ML services with Philips HealthSuite security and privacy guardrails and Philips ecosystem integrations. There are three personas with dedicated sets of access permissions:

- Data scientist – Prepare data, and develop and train models in a collaborative workspace

- ML engineer – Productionize ML applications with model deployment, monitoring, and maintenance

- Data science admin – Create a project per team request to provide dedicated isolated environments with use case-specific templates

The platform development spanned multiple release cycles in an iterative cycle of discover, design, build, test, and deploy. Due to the uniqueness of some applications, the extension of the platform required embedding existing custom components like data stores or proprietary tools for annotation.

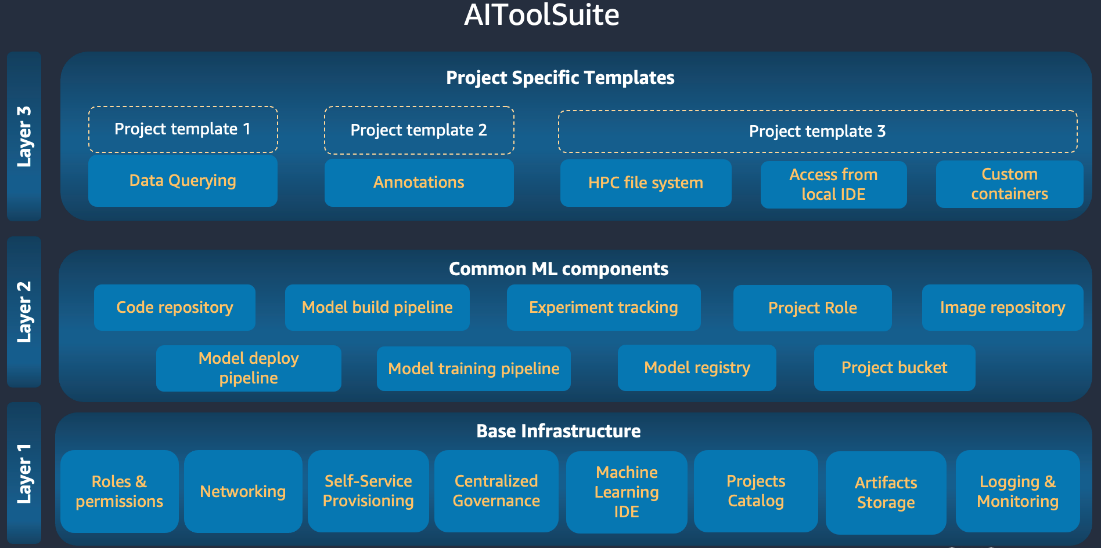

The following figure illustrates the three-layer architecture of AI ToolSuite, including the base infrastructure as the first layer, common ML components as the second layer, and project-specific templates as the third layer.

Layer 1 contains the base infrastructure:

- A networking layer with parametrized access to the internet with high availability

- Self-service provisioning with infrastructure as code (IaC)

- An integrated development environment (IDE) using an Amazon SageMaker Studio domain

- Platform roles (data science admin, data scientist)

- Artifacts storage

- Logging and monitoring for observability

Layer 2 contains common ML components:

- Automated experiment tracking for every job and pipeline

- A model build pipeline to launch a new model build update

- A model training pipeline consisting of model training, evaluation, and registration

- A model deploy pipeline to deploy the model for final testing and approval

- A model registry to easily manage model versions

- A project role created specifically for a given use case, to be assigned to SageMaker Studio users

- An image repository for storing processing, training, and inference container images built for the project

- A code repository to store code artifacts

- A project Amazon Simple Storage Service (Amazon S3) bucket to store all project data and artifacts
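
The way the training pipeline, deploy pipeline, and model registry in Layer 2 fit together can be illustrated with a small in-memory sketch. This is not the SageMaker API or the AI ToolSuite implementation: the `ModelRegistry` class, the `run_training_pipeline` function, and the `min_accuracy` quality gate are hypothetical names introduced for illustration. Only the approval status strings mirror those used by the SageMaker model registry.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class ModelVersion:
    """One registered model version with its evaluation metrics."""
    version: int
    metrics: Dict[str, float]
    # New versions wait for a human decision before deployment.
    status: str = "PendingManualApproval"


@dataclass
class ModelRegistry:
    """Hypothetical in-memory stand-in for a model registry."""
    versions: List[ModelVersion] = field(default_factory=list)

    def register(self, metrics: Dict[str, float]) -> ModelVersion:
        model_version = ModelVersion(version=len(self.versions) + 1,
                                     metrics=dict(metrics))
        self.versions.append(model_version)
        return model_version

    def approve(self, version: int) -> None:
        self.versions[version - 1].status = "Approved"

    def latest_approved(self) -> Optional[ModelVersion]:
        approved = [v for v in self.versions if v.status == "Approved"]
        return approved[-1] if approved else None


def run_training_pipeline(
    train: Callable[[], Any],
    evaluate: Callable[[Any], Dict[str, float]],
    registry: ModelRegistry,
    min_accuracy: float,
) -> Optional[ModelVersion]:
    """Train, evaluate, and register a model only if it passes the gate."""
    model = train()
    metrics = evaluate(model)
    if metrics["accuracy"] < min_accuracy:
        return None  # Below the (assumed) quality bar: nothing is registered.
    return registry.register(metrics)
```

In this sketch, a deploy pipeline would only pick up the version returned by `latest_approved()`, so a model reaches final testing only after an explicit approval step.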

Layer 3 contains project-specific templates that can be created with custom components as required by new projects. For example:

- Template 1 – Includes a component for data querying and history tracking

- Template 2 – Includes a component for data annotations with a custom annotation workflow to use proprietary annotation tooling

- Template 3 – Includes components for custom container images to customize both their development environment and training routines, dedicated HPC file system, and access from a local IDE for users

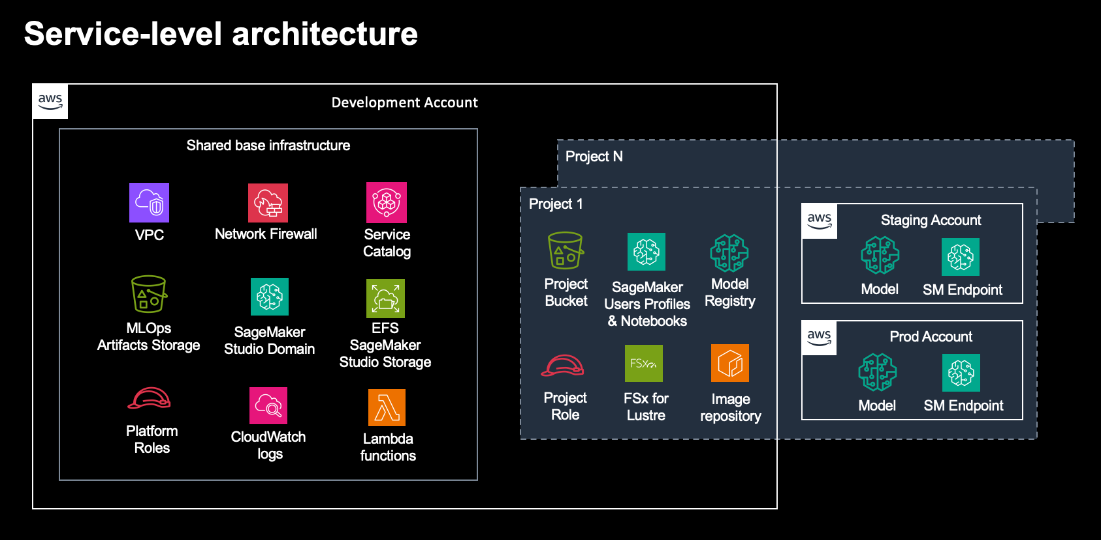

The following diagram highlights the key AWS services spanning multiple AWS accounts for development, staging, and production.

In the following sections, we discuss the key capabilities of the platform enabled by AWS services, including SageMaker, AWS Service Catalog, CloudWatch, AWS Lambda, Amazon Elastic Container Registry (Amazon ECR), Amazon S3, AWS Identity and Access Management (IAM), and others.

Infrastructure as code

The platform uses IaC, which allows Philips to automate the provisioning and management of infrastructure resources. This approach will also help reproducibility, scalability, version control, consistency, security, and portability for development, testing, or production.

Access to AWS environments

SageMaker and associated AI/ML services are accessed with security guardrails for data preparation, model development, training, annotation, and deployment.

Isolation and collaboration

The platform ensures data isolation by storing and processing each project’s data separately, reducing the risk of unauthorized access or data breaches.

The platform facilitates team collaboration, which is essential in AI projects that typically involve cross-functional teams, including data scientists, data science admins, and MLOps engineers.

Role-based access control

Role-based access control (RBAC) simplifies access management by defining roles and permissions in a structured manner. It makes it straightforward to manage permissions as teams and projects grow, and to control access for the different personas involved in AWS AI/ML projects, such as the data science admin, data scientist, annotation admin, annotator, and MLOps engineer.
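
As a rough illustration of the idea, the following sketch maps personas to allowed actions and renders an IAM-style policy document for each. The persona-to-action mapping is an assumption made for this example; the actual AI ToolSuite permission sets are not described in this post. The action names themselves are existing SageMaker and Service Catalog IAM actions.

```python
# Hypothetical persona-to-action mapping (illustrative only, not the
# actual AI ToolSuite permission sets).
PERSONA_ACTIONS = {
    "data_science_admin": [
        "servicecatalog:ProvisionProduct",
        "sagemaker:CreateUserProfile",
    ],
    "data_scientist": [
        "sagemaker:CreateTrainingJob",
        "sagemaker:CreateProcessingJob",
    ],
    "mlops_engineer": [
        "sagemaker:CreateEndpoint",
        "sagemaker:UpdateEndpoint",
    ],
    "annotation_admin": [
        "sagemaker:CreateLabelingJob",
    ],
}


def is_allowed(persona: str, action: str) -> bool:
    """Return True if the persona's role grants the given action."""
    return action in PERSONA_ACTIONS.get(persona, [])


def build_policy(persona: str, resource_arn: str = "*") -> dict:
    """Render an IAM-style policy document for one persona."""
    # Sid must be alphanumeric, e.g. "DataScientistAccess".
    sid = "".join(part.title() for part in persona.split("_")) + "Access"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": sid,
                "Effect": "Allow",
                "Action": sorted(PERSONA_ACTIONS[persona]),
                "Resource": resource_arn,
            }
        ],
    }
```

Keeping the mapping in one place means that onboarding a new team member is a matter of assigning a persona rather than editing individual permissions. In practice, `resource_arn` would be narrowed to the project's own resources instead of the `"*"` default used here.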

Access to data stores

The platform allows SageMaker access to data stores, which ensures that data can be efficiently utilized for model training and inference without the need to duplicate or move data across different storage locations, thereby optimizing resource utilization and reducing costs.

Annotation using Philips-specific annotation tools

AWS services such as SageMaker, Amazon SageMaker Ground Truth, and Amazon Cognito are integrated with Philips-specific in-house annotation tools. This integration enables developers to train and deploy ML models using the annotated data within the AWS environment.

ML templates

The AI ToolSuite platform offers templates in AWS for various ML workflows. These templates are preconfigured infrastructure setups tailored to specific ML use cases and are accessible through services like SageMaker project templates, AWS CloudFormation, and Service Catalog.

Integration with Philips GitHub

Integration with GitHub enhances efficiency by providing a centralized platform for version control, code reviews, and automated CI/CD (continuous integration and continuous deployment) pipelines, reducing manual tasks and boosting productivity.

Visual Studio Code integration

Integration with Visual Studio Code provides a unified environment for coding, debugging, and managing ML projects. This streamlines the entire ML workflow, reducing context switching and saving time. The integration also enhances collaboration among team members by enabling them to work on SageMaker projects together within a familiar development environment, utilizing version control systems, and sharing code and notebooks seamlessly.

Model and data lineage and traceability for reproducibility and compliance

The platform provides versioning, which helps keep track of changes to the data scientist’s training and inference data over time, making it easier to reproduce results and understand the evolution of the datasets.

The platform also enables SageMaker experiment tracking, which allows end-users to log and track all the metadata associated with their ML experiments, including hyperparameters, input data, code, and model artifacts. These capabilities are essential for demonstrating compliance with regulatory standards and ensuring transparency and accountability in AI/ML workflows.
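
To make the reproducibility idea concrete, here is a minimal sketch of what a tracked experiment record might contain. It does not use the SageMaker experiment tracking API: the `ExperimentRecord` class and its fields are hypothetical. It only shows the principle of tying a run to a content hash of its dataset, a code version, and its hyperparameters.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict


def fingerprint(data: bytes) -> str:
    """Content hash that changes whenever the dataset bytes change."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ExperimentRecord:
    """Hypothetical metadata record for one training run."""
    experiment_name: str
    code_version: str       # for example, a Git commit hash
    dataset_sha256: str     # output of fingerprint() for the training data
    hyperparameters: Dict[str, float]

    def record_id(self) -> str:
        """Deterministic ID: identical inputs always yield the same ID."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]
```

Because the ID is derived from the inputs, two runs with the same data, code, and hyperparameters map to the same record, while any change to the dataset produces a different one, which is the property an auditor needs in order to confirm which data a model was trained on.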

AI/ML specification report generation for regulatory compliance

AWS maintains compliance certifications for various industry standards and regulations. AI/ML specification reports serve as essential compliance documentation, showcasing adherence to regulatory requirements. These reports document the versioning of datasets, models, and code. Version control is essential for maintaining data lineage, traceability, and reproducibility, all of which are critical for regulatory compliance and auditing.

Project-level budget management

Project-level budget management allows the organization to set limits on spending, helping to avoid unexpected costs and ensuring that ML projects stay within budget. With budget management, the organization can allocate specific budgets to individual projects or teams, which helps teams identify resource inefficiencies or unexpected cost spikes early on. In addition, a feature that automatically shuts down idle notebooks means team members avoid paying for unused resources and releases those resources for other tasks or users.
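
A budget with a threshold notification could be expressed along the following lines. This is a sketch under assumptions, not the platform's actual configuration: the function name, the budget naming scheme, and the example values are hypothetical. The returned dictionary follows the request shape of the AWS Budgets `create_budget` operation, and scoping the budget to a single project (for example, through cost filters) is left out.

```python
def budget_request(account_id: str, project: str, monthly_limit_usd: float,
                   alert_percent: float, email: str) -> dict:
    """Build keyword arguments for the AWS Budgets create_budget call."""
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": f"{project}-monthly",  # hypothetical naming scheme
            # The Budgets API expects the amount as a string.
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": alert_percent,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [
                    {"SubscriptionType": "EMAIL", "Address": email},
                ],
            }
        ],
    }


# Usage (requires AWS credentials, so it is not executed here):
#   import boto3
#   request = budget_request("123456789012", "imaging", 500, 80.0,
#                            "team@example.com")
#   boto3.client("budgets").create_budget(**request)
```

With these example values, the team would receive an email once actual spend exceeds 80% of the 500 USD monthly limit.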

Outcomes

AI ToolSuite was designed and implemented as an enterprise-wide platform for ML development and deployment for data scientists across Philips. Diverse requirements from all business units were collected and considered during the design and development. Early in the project, Philips identified champions from the business teams who provided feedback and helped evaluate the value of the platform.

The following outcomes were achieved:

- User adoption is one of the key leading indicators for Philips. Users from several business units were trained and onboarded to the platform, and that number is expected to grow in 2024.

- Another important metric is the efficiency for data science users. With AI ToolSuite, new ML development environments are deployed in less than an hour instead of several days.

- Data science teams can access a scalable, secure, cost-efficient, cloud-based compute infrastructure.

- Teams can run multiple model training experiments in parallel, which significantly reduced the average training time from weeks to 1–3 days.

- Because the environment deployment is fully automated, it requires virtually no involvement of the cloud infrastructure engineers, which reduced operational costs.

- The use of AI ToolSuite significantly enhanced the overall maturity of data and AI deliverables by promoting the use of good ML practices, standardized workflows, and end-to-end reproducibility, which is critical for regulatory compliance in the healthcare industry.

Looking forward with generative AI

As organizations race to adopt the next state of the art in AI, it’s imperative to adopt new technology in the context of the organization’s security and governance policy. The architecture of AI ToolSuite provides an excellent blueprint for enabling access to generative AI capabilities in AWS for different teams at Philips. Teams can use foundation models made available with Amazon SageMaker JumpStart, which provides a vast number of open source models from Hugging Face and other providers. With the necessary guardrails already in place in terms of access control, project provisioning, and cost controls, it will be seamless for teams to start using the generative AI capabilities within SageMaker.

Additionally, access to Amazon Bedrock, a fully managed API-driven service for generative AI, can be provisioned for individual accounts based on project requirements, and the users can access Amazon Bedrock APIs either via the SageMaker notebook interface or through their preferred IDE.

There are additional considerations concerning the adoption of generative AI in a regulated setting, such as healthcare. Careful consideration needs to be given to the value created by generative AI applications against the associated risks and costs. There is also a need to create a risk and legal framework that governs the organization’s use of generative AI technologies. Elements such as data security, bias and fairness, and regulatory compliance need to be considered as part of such mechanisms.

Conclusion

Philips embarked on a journey of harnessing the power of data-driven algorithms to revolutionize healthcare solutions. Over the years, innovation in diagnostic imaging has yielded several ML applications, from image reconstruction to workflow management and treatment optimization. However, the diverse range of setups, from individual laptops to on-premises clusters and cloud infrastructure, posed formidable challenges. Separate system administration, security measures, support mechanisms, and data protocols inhibited a comprehensive view of total cost of ownership (TCO) and complicated transitions between teams. The transition from research and development to production was burdened by the lack of lineage and reproducibility, making continuous model retraining difficult.

As part of the strategic collaboration between Philips and AWS, the AI ToolSuite platform was created to provide a scalable, secure, and compliant ML platform with SageMaker. This platform provides capabilities ranging from experimentation, data annotation, and training to model deployment and reusable templates. All these capabilities were built iteratively over several cycles of discover, design, build, test, and deploy. This helped multiple business units innovate with speed and agility while governing at scale with central controls.

This journey serves as an inspiration for organizations looking to harness the power of AI and ML to drive innovation and efficiency in healthcare, ultimately benefiting patients and care providers worldwide. As they continue to build upon this success, Philips stands poised to make even greater strides in improving health outcomes through innovative AI-enabled solutions.

To learn more about Philips innovation on AWS, visit Philips on AWS.

About the authors

Frank Wartena is a program manager at Philips Innovation & Strategy. He coordinates data & AI related platform assets in support of our Philips data & AI enabled propositions. He has broad experience in artificial intelligence, data science and interoperability. In his spare time, Frank enjoys running, reading and rowing, and spending time with his family.

Irina Fedulova is a Principal Data & AI Lead at Philips Innovation & Strategy. She is driving strategic activities focused on the tools, platforms, and best practices that speed up and scale the development and productization of (Generative) AI-enabled solutions at Philips. Irina has a strong technical background in machine learning, cloud computing, and software engineering. Outside work, she enjoys spending time with her family, traveling and reading.

Selvakumar Palaniyappan is a Product Owner at Philips Innovation & Strategy, in charge of product management for the Philips HealthSuite AI & ML platform. He is highly experienced in technical product management and software engineering. He is currently working on building a scalable and compliant AI and ML development and deployment platform. Furthermore, he is spearheading its adoption by Philips’ data science teams in order to develop AI-driven health systems and solutions.

Adnan Elci is a Senior Cloud Infrastructure Architect at AWS Professional Services. He operates in the capacity of a tech lead, overseeing various operations for clients in Healthcare and Life Sciences, Finance, Aviation, and Manufacturing. His enthusiasm for automation is evident in his extensive involvement in designing, building and implementing enterprise-level customer solutions within the AWS environment. Beyond his professional commitments, Adnan actively dedicates himself to volunteer work, striving to create a meaningful and positive impact within the community.

Hasan Poonawala is a Senior AI/ML Specialist Solutions Architect at AWS. Hasan helps customers design and deploy machine learning applications in production on AWS. He has over 12 years of work experience as a data scientist, machine learning practitioner, and software developer. In his spare time, Hasan loves to explore nature and spend time with friends and family.

Sreoshi Roy is a Senior Global Engagement Manager with AWS. As the business partner to the Healthcare & Life Sciences customers, she comes with unparalleled experience in defining and delivering solutions for complex business problems. She helps her customers set strategic objectives, define and design cloud/data strategies, and implement scaled and robust solutions to meet their technical and business objectives. Beyond her professional endeavors, her dedication lies in creating a meaningful impact on people’s lives by fostering empathy and promoting inclusivity.

Wajahat Aziz is a leader for AI/ML & HPC in the AWS Healthcare and Life Sciences team. Having served as a technology leader in different roles with life science organizations, Wajahat uses his experience to help healthcare and life sciences customers apply AWS technologies for developing state-of-the-art ML and HPC solutions. His current areas of focus are early research, clinical trials and privacy-preserving machine learning.

Wioletta Stobieniecka is a Data Scientist at AWS Professional Services. Throughout her professional career, she has delivered multiple analytics-driven projects for different industries such as banking, insurance, telco, and the public sector. Her knowledge of advanced statistical methods and machine learning is well combined with business acumen. She brings recent AI advancements to create value for customers.
