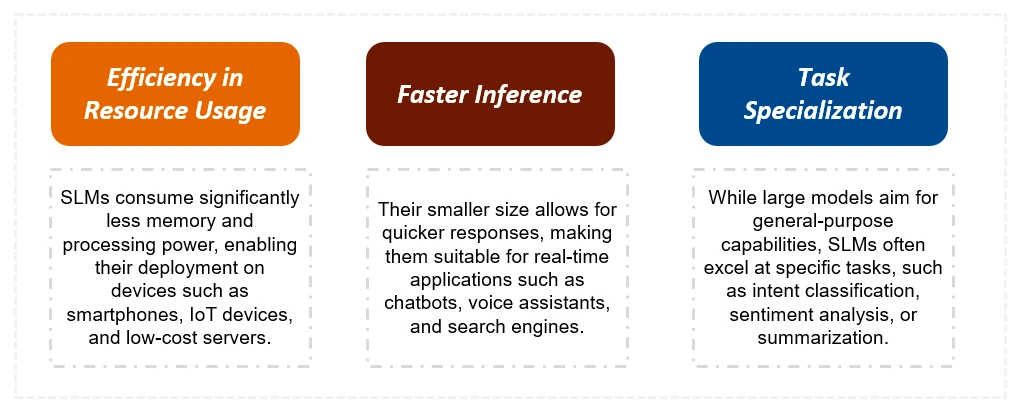

As a developer, you’re likely familiar with the power of large language models (LLMs), and also with the challenges they bring: extensive computational requirements and high latency. Small language models (SLMs) are compact, efficient counterparts with fewer than 10 billion parameters. Designed for speed and resource efficiency, SLMs suit scenarios such as edge computing and real-time applications, delivering targeted performance without overwhelming your hardware. Whether you’re building a lightweight chatbot or enabling on-device AI, SLMs offer a practical way to bring AI closer to your project’s needs.

This article explores the essentials of small language models (SLMs), highlighting their key features, applications, and creation from larger language models (LLMs). We’ll also walk you through implementing these models using Ollama on Google Colab and compare the results from different model variants, helping you understand their real-world performance and use cases.

Learning Objectives

- Gain a clear understanding of small language models and their defining characteristics.

- Learn the foundational techniques used to create small language models from large language models (LLMs).

- Learn how to evaluate the performance of small language models to assess their suitability for various applications.

- Discover the key differences between small language models and their larger counterparts, LLMs.

- Explore the advanced features of the latest state-of-the-art small language models.

- Identify the primary application areas where small language models excel.

- Dive into the implementation of these models using Ollama on Google Colab, including a comparative analysis of outputs from various models.

This article was published as a part of the Data Science Blogathon.

What are Small Language Models (SLMs)?

Small language models (SLMs) are compact counterparts of large language models, typically with fewer than 10 billion parameters, which substantially reduces computational cost and energy usage. They are usually optimized for specific tasks and environments and are often trained on smaller, more focused datasets, trading some breadth for resource efficiency. Large-scale models such as GPT-4 or PaLM, by contrast, demand vast memory, compute power, and energy. This makes SLMs a good fit for edge devices, resource-constrained settings, and applications where speed and scalability are critical.
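To make the link between parameter count and hardware needs concrete, the memory required just to hold a model’s weights is roughly the number of parameters multiplied by the bytes used per parameter. The sketch below is a back-of-the-envelope estimate only: it ignores activations, the attention cache, and runtime overhead, and the function name is made up for this illustration.

```python
def weight_memory_gb(num_params: float, bits_per_param: int = 16) -> float:
    """Approximate memory (in GB, with 1 GB = 1e9 bytes) needed to store the weights."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Compare a few model sizes at 16-bit and 4-bit precision.
for name, params in [("1B", 1e9), ("3B", 3e9), ("7B", 7e9), ("70B", 70e9)]:
    print(
        f"{name:>4}: {weight_memory_gb(params, 16):6.1f} GB at 16-bit, "
        f"{weight_memory_gb(params, 4):6.1f} GB at 4-bit"
    )
```

Under these assumptions, a 3-billion-parameter model needs about 6 GB for its weights at 16-bit precision, while a 70-billion-parameter model needs about 140 GB, which is why the smaller models are the ones that fit on phones and single consumer GPUs.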

How are Small Language Models Created?

Three techniques are commonly used to derive small language models from larger ones:

Knowledge Distillation

- The “student,” a smaller model, learns to mimic the behavior of the “teacher,” a larger pre-trained model.

- The student model learns from the teacher’s outputs (e.g., probabilities or embeddings) rather than directly from raw data, resulting in a compressed yet effective model.
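The idea of learning from the teacher’s output probabilities can be sketched with a soft-target loss. The example below uses one common formulation (KL divergence between temperature-softened distributions, scaled by the squared temperature); it is an illustration in plain Python, not the training recipe of any particular model, and the logits are invented values.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]  # made-up teacher logits for a 3-token vocabulary
print(distillation_loss([4.0, 1.0, 0.5], teacher))  # student matches the teacher
print(distillation_loss([0.5, 1.0, 4.0], teacher))  # student disagrees with the teacher
```

The loss is zero when the student reproduces the teacher’s distribution and grows as the two diverge. In practice this term is usually combined with the ordinary cross-entropy loss on the ground-truth labels.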

Pruning

- The process removes redundant or less significant components, such as weights or neurons, to reduce the model’s size.

- This process involves identifying low-impact parameters that contribute minimally to the model’s performance.
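One simple way to identify low-impact parameters is by magnitude: weights closest to zero are assumed to matter least. The sketch below shows this magnitude-based pruning on a flat list of made-up weights; real pruning pipelines work on tensors, often remove whole neurons or layers, and usually fine-tune afterwards.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(weights, 0.5))  # the three smallest-magnitude weights become 0.0
```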

Quantization

- Reduces the precision of the model’s parameters, such as using 8-bit integers instead of 32-bit floats.

- This lowers memory requirements and speeds up inference without significantly affecting accuracy.
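The float-to-integer mapping can be illustrated with symmetric 8-bit quantization, where a single scale factor maps the largest weight magnitude to 127. This is a minimal sketch with invented weights; production schemes typically quantize per channel or per block and may use lower bit widths.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the integers."""
    return [x * scale for x in q]


weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print([round(r, 3) for r in restored])
```

Each restored value differs from the original by at most half the scale factor, which is the rounding error the model has to tolerate. Very small weights, such as 0.003 here, collapse to zero.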

Small Language Models vs Large Language Models

Below is the comparison table of small language models and large language models:

| | Small Language Models (SLMs) | Large Language Models (LLMs) |
| --- | --- | --- |
| Size | SLMs are much smaller, with fewer parameters (typically under 10 billion). | LLMs are much larger, with a far higher number of parameters. |
| Training Data & Time | SLMs are trained on smaller, more focused, context-specific datasets and can typically be trained in weeks. | LLMs are trained on very large, varied datasets for general-purpose learning. Training can take months. |
| Computing Resources | SLMs need far fewer resources, making them a more sustainable option. | Owing to their large parameter counts and training datasets, LLMs need a lot of computing resources to train and run. |
| Proficiency | Best at simpler, specific tasks. | Strong at complex and general-purpose tasks. |
| Inference | SLMs can run locally on devices such as phones and the Raspberry Pi without an internet connection. | LLMs typically need GPUs or other specialized hardware to run. |
| Response Time | SLMs respond faster owing to their small size. | Depending on the complexity of the task, LLMs can take much longer to respond. |
| Control of Models | Users can run SLMs on their own servers, tune them, and even freeze them so that they do not change in the future. | With LLMs, control is in the hands of the model builders, which can lead to model drift, or even catastrophic forgetting, when the model changes. |
| Cost | Lower computing requirements mean a lower overall cost. | The large amount of computing resources needed to train and run LLMs means a higher cost. |

To know more, check out our article on: SLMs vs LLMs: The Ultimate Comparison Guide!

Latest Small Language Models

In the rapidly evolving world of AI, small language models (SLMs) are setting new benchmarks for efficiency and versatility. Here’s a look at the most advanced SLMs, highlighting their unique features, capabilities, and applications.

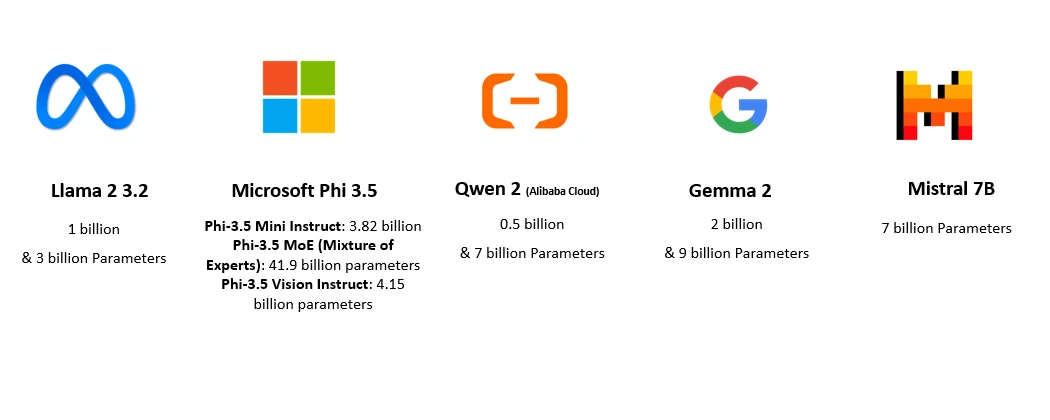

Llama 3.2

- Model Overview: The Llama 3.2 text-only models, developed by Meta, are the lightweight members of the Llama 3 family, designed for resource-constrained environments.

- Variants: Available in 1 billion (1B) and 3 billion (3B) parameter configurations.

- Optimization Techniques: Meta used pruning to remove unnecessary components and knowledge distillation to transfer capabilities from larger Llama models (e.g., Llama 3.1 8B and 70B).

- Context Handling: The models support 128,000-token context lengths, enabling advanced tasks like long-document summarization, extended conversational analysis, and content rewriting.

- Performance: Despite its small size, the 3B model scores 63.4 on the 5-shot MMLU benchmark, a strong result for a model of this size.

Microsoft’s Phi 3.5

Model Series Overview: The Phi 3.5 series includes advanced AI models with diverse specializations:

- Phi-3.5 Mini Instruct: 3.82 billion parameters.

- Phi-3.5 MoE (Mixture of Experts): 41.9 billion parameters in total, of which 6.6 billion are active at a time.

- Phi-3.5 Vision Instruct: 4.15 billion parameters.

Context Window: All models support a 128,000-token context length, enabling tasks involving text, code, images, and videos.

- Phi-3.5 Mini Instruct: Designed for lightweight and efficient tasks such as code generation, mathematical problem-solving, and logical reasoning; optimized for resource-constrained environments.

- Phi-3.5 MoE: Uses a mixture-of-experts architecture for advanced reasoning and multilingual tasks, activating only a subset of its parameters for each input to keep inference efficient.

- Phi-3.5 Vision Instruct: A multimodal model excelling in image interpretation, chart analysis, and video summarization, ideal for visual data processing tasks.
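The selective parameter activation in a mixture-of-experts model can be sketched as top-k routing: a gate scores every expert, and only the k best-scoring experts run for a given input. The toy example below uses four hypothetical experts and hand-picked gate scores; it is a generic illustration, not Phi-3.5 MoE’s actual router. Using the figures quoted above, 6.6 billion active out of 41.9 billion total parameters is roughly 16% of the model per input.

```python
import math


def top_k_route(gate_scores, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    m = max(gate_scores[i] for i in top)
    exps = {i: math.exp(gate_scores[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by routing weight."""
    routing = top_k_route(gate_scores, k)
    return sum(w * experts[i](x) for i, w in routing.items())


# Four toy "experts"; only two of them run for this input.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 2.0, -1.0]  # in a real model these come from a learned gate

print(top_k_route(gate_scores))                 # experts 1 and 2 share the weight equally
print(moe_forward(3.0, experts, gate_scores))   # 0.5 * 6.0 + 0.5 * 9.0 = 7.5
```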

Qwen 2

- Model Range: Qwen2, developed by Alibaba Cloud, includes small models ranging from 0.5 billion to 7 billion parameters (alongside larger variants), catering to applications from lightweight to performance-intensive tasks.

- Applications: The 0.5B model is ideal for lightweight apps, while the 7B model excels in tasks like summarization and text generation, balancing scalability and robustness.

- Efficiency Focus: While not as capable in complex reasoning as larger AI models, Qwen2 prioritizes speed and efficiency, making it suitable for practical uses that require quick responses or operate under limited resources.

- Pretraining: The models were pretrained on data in 27 languages in addition to English and Chinese, and show significantly improved coding and mathematical capabilities compared with previous versions.

- Context Lengths: The smaller models (0.5B and 1.5B) feature a 32,000-token context length, while the 7B model supports an extended 128,000-token context length, enabling it to handle extensive inputs.

Google’s Gemma 2

- Variants and Size: Google’s Gemma 2 is a lightweight open-model family with three variants—2B, 9B, and 27B parameters.

- Training Data: The 9B model was trained on 8 trillion tokens, while the 2B model used 2 trillion tokens. Training data included diverse text formats like web content, code snippets, and scientific papers. Gemma 2 models are not multimodal or multilingual.

- Knowledge Distillation: Smaller models (2B and 9B) were developed using knowledge distillation, leveraging a larger teacher model.

- Context Length: The models support a context length of 8192 tokens, enabling efficient processing of extended text.

- Edge Computing Suitability: Gemma 2 is optimized for resource-constrained environments and offers a practical alternative to heavier models like GPT-3.5 or Llama 65B.

Mistral 7B

- Model Overview: Mistral AI developed Mistral 7B, a 7-billion-parameter language model designed for efficiency and high performance. As a decoder-only model, Mistral 7B generates text based on a given prompt.

- Real-Time Applications: The model is optimized for quick responses, making it suitable for real-time applications.

- Benchmark Performance: Mistral 7B outperforms larger models such as Llama 2 13B on various benchmarks, excelling in mathematics, code generation, and reasoning tasks.

- Context Length: The model supports a context length of 8192 tokens, allowing it to process extended sequences of text.

- Efficient Attention Mechanisms: Mistral 7B uses Grouped-query Attention (GQA) for faster inference and Sliding Window Attention (SWA) for handling longer sequences with reduced computational cost.
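The cost saving from sliding window attention can be seen in the attention mask itself: each token attends only to itself and a fixed number of preceding tokens rather than to the whole prefix. The sketch below builds such a mask in plain Python with toy sizes (6 tokens, window of 3); the function name and sizes are illustrative and not taken from Mistral’s implementation.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j.

    Attention is causal (j <= i) and limited to the most recent
    `window` tokens (i - j < window).
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


mask = sliding_window_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))

full = sum(i + 1 for i in range(6))        # token pairs under full causal attention
windowed = sum(sum(row) for row in mask)   # token pairs under the sliding window
print(full, windowed)
```

With full causal attention the number of attended pairs grows quadratically with sequence length, while with a fixed window it grows linearly. Information from older tokens can still propagate indirectly, because each layer passes along what its own window has seen.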

Where can SLMs be Applied?

Small language models (SLMs) excel in resource-constrained settings due to their computational efficiency and speed. They power edge computing by enabling real-time processing on devices like smartphones and IoT systems. SLMs are ideal for chatbots, virtual assistants, and content generation, offering quick responses and cost-effective solutions. They also support text summarization for concise overviews, text classification for tasks like sentiment analysis, and translation for lightweight language tasks. Additional applications include code generation, mathematical problem-solving, healthcare text processing, and personalized recommendations, making them versatile tools across industries.

Running Small Language Models on Google Colab using Ollama

Ollama is an open-source tool for setting up and running language models locally, in either CPU or GPU mode. We will explore how to run these small language models on Google Colab using Ollama in the following steps.

Step 1: Installing the Required Libraries

```
!sudo apt update
!sudo apt install -y pciutils
!curl -fsSL https://ollama.com/install.sh | sh
!pip install langchain-ollama
```

- !sudo apt update: Updates the package lists so that the latest versions are installed.

- !sudo apt install -y pciutils: Installs the pciutils package, which Ollama requires to detect the GPU type.

- !curl -fsSL https://ollama.com/install.sh | sh: Uses curl to download the Ollama install script and run it.

- !pip install langchain-ollama: Installs the langchain-ollama Python package, which integrates the LangChain framework with Ollama.

Step 2: Importing the Required Libraries

```python
import threading
import subprocess
import time

from langchain_core.prompts import ChatPromptTemplate
from langchain_ollama.llms import OllamaLLM
from IPython.display import Markdown, display
```

Step 3: Running Ollama in Background on Colab

```python
def run_ollama_serve():
    # Start the Ollama server as a separate process; Popen returns immediately.
    subprocess.Popen(["ollama", "serve"])

# Launch the server from a background thread so the notebook stays responsive.
thread = threading.Thread(target=run_ollama_serve)
thread.start()

# Give the server a few seconds to start before sending requests.
time.sleep(5)
```

The run_ollama_serve() function launches an external process (ollama serve) using subprocess.Popen().

The threading package creates a new thread that runs run_ollama_serve(), so the Ollama service starts in the background. The main thread then sleeps for 5 seconds, as set by the time.sleep(5) call, giving the server time to start before any further actions.
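A fixed 5-second pause can be too short on a slow runtime or needlessly long on a fast one. As an alternative, the sketch below polls until the server responds. It assumes Ollama is listening on its default local address, http://localhost:11434; the helper names are made up for this example.

```python
import time
import urllib.error
import urllib.request


def ollama_is_up(url="http://localhost:11434"):
    """Return True if an HTTP server answers at `url` (assumed default Ollama address)."""
    try:
        with urllib.request.urlopen(url, timeout=2) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until_ready(probe, timeout=30.0, interval=0.5):
    """Call `probe()` repeatedly until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if probe():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# In the notebook, this could replace time.sleep(5):
# if not wait_until_ready(ollama_is_up):
#     raise RuntimeError("Ollama server did not start in time")
```

Passing the probe as an argument keeps the waiting logic independent of Ollama, so the same helper can be exercised with a stand-in function when no server is available.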

Step 4: Pulling Llama3.2 from Ollama

```
!ollama pull llama3.2
```

Running !ollama pull llama3.2 downloads the Llama 3.2 model so that it is ready to use. The other small language models can be pulled the same way for experimentation or to compare outputs.

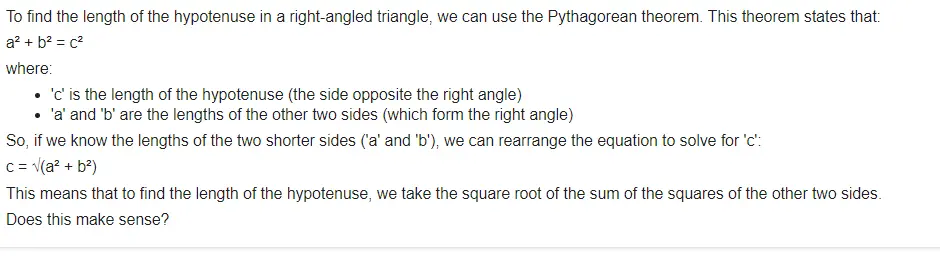
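To pull several models for comparison in one go, the same command can be issued from Python in a loop. The sketch below is one way to do it; the tags in MODEL_TAGS are assumptions about how these models are named in the Ollama library and should be checked against the library listing before use.

```python
import shutil
import subprocess

# Assumed Ollama library tags for the models discussed in this article.
MODEL_TAGS = ["llama3.2", "phi3.5", "qwen2:1.5b", "gemma2:2b", "mistral"]


def pull_models(tags, dry_run=False):
    """Run `ollama pull <tag>` for each tag; return the commands that were built."""
    commands = [["ollama", "pull", tag] for tag in tags]
    if not dry_run:
        if shutil.which("ollama") is None:
            raise RuntimeError("the ollama binary was not found on PATH")
        for command in commands:
            subprocess.run(command, check=True)  # raises if a pull fails
    return commands


# dry_run=True only builds the commands, which is handy for checking the tags first.
print(pull_models(MODEL_TAGS, dry_run=True))
```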

Step 5: Prompting the Llama 3.2 model

```python
# Prompt template that nudges the model toward step-by-step reasoning.
template = """Question: {question}

Answer: Let's think step by step."""

prompt = ChatPromptTemplate.from_template(template)
model = OllamaLLM(model="llama3.2")

# Pipe the formatted prompt into the model.
chain = prompt | model

display(Markdown(chain.invoke({"question": "What's the length of hypotenuse in a right angled triangle"})))
```

The above code creates a prompt template to format a question, feeds the question to the Llama 3.2 model, and displays the response with step-by-step reasoning. Here, the question asks about the length of the hypotenuse in a right-angled triangle. The process involves defining a structured prompt, chaining it with a model, and then invoking the chain to get and display the response.
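To compare models on the same question, the prompt-and-model chain can be rebuilt once per model name. The sketch below wraps that in two hypothetical helper functions. It assumes the models have already been pulled and that the Ollama server is running; the LangChain imports are deferred into the factory so the comparison logic can be tried with a stand-in chain.

```python
def make_ollama_chain(model_name, template):
    """Build a prompt | model chain for one Ollama model (imports are lazy)."""
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_ollama.llms import OllamaLLM

    return ChatPromptTemplate.from_template(template) | OllamaLLM(model=model_name)


def ask_all(question, model_names, template, chain_factory=make_ollama_chain):
    """Send the same question to every model and collect answers by model name."""
    answers = {}
    for name in model_names:
        chain = chain_factory(name, template)
        answers[name] = chain.invoke({"question": question})
    return answers


# In the notebook, after pulling the models (tags are assumptions):
# answers = ask_all(question, ["llama3.2", "gemma2:2b"], template)
# for name, text in answers.items():
#     display(Markdown(f"**{name}**\n\n{text}"))
```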

Performance Evaluation of Small Language Models

Understanding how small language models perform across different tasks is essential to determine their suitability for real-world applications. In this section, we compare outputs from various SLMs to highlight their strengths, limitations, and best use cases.

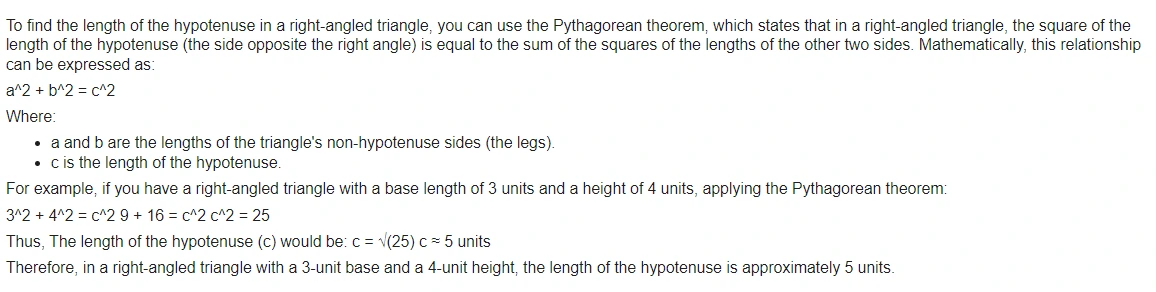

Llama 3.2 Output

Delivers concise responses with strong reasoning but struggles slightly with creative tasks.

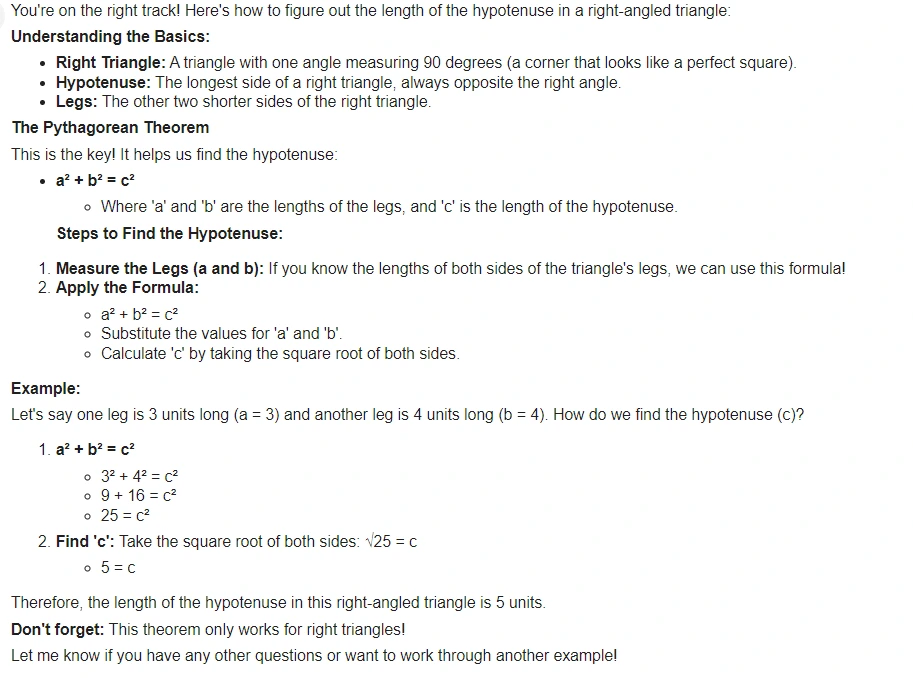

Phi-3.5 Mini Output

Offers fast responses with decent accuracy but lacks depth in explanations.

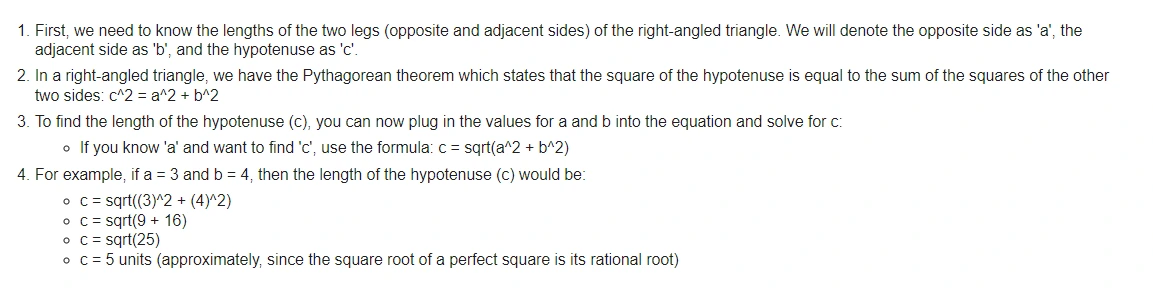

Qwen 2 (1.5 Billion Model) Output

Excels in structured problem-solving but sometimes over-generalizes in open-ended queries.

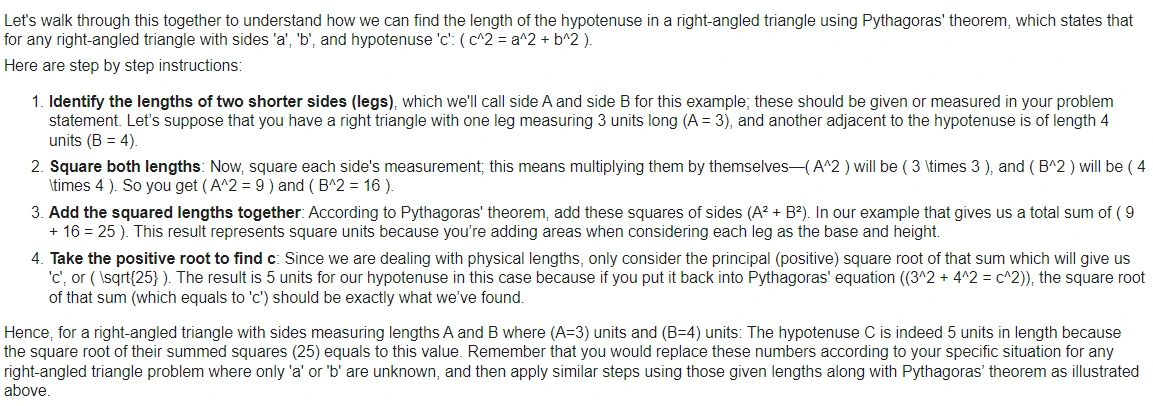

Gemma 2 (2 Billion Model) Output

Provides detailed and contextually rich answers, balancing accuracy and creativity.

Mistral 7B (7 Billion Model) Output

Handles complex queries effectively but requires higher computational resources.

Although all the models answer the question accurately, the Gemma 2 (2 billion) model gives the most comprehensive and easy-to-understand answer, at least for this question.
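A qualitative comparison like the one above can be complemented with simple measurements such as response latency and answer length. The sketch below shows one way to collect them; the helper names are made up, and a stand-in function takes the place of chain.invoke so the example runs without a model server. These numbers are crude proxies and say nothing about whether an answer is correct.

```python
import time


def timed_invoke(invoke, payload):
    """Call `invoke(payload)` and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = invoke(payload)
    return result, time.perf_counter() - start


def summarize(name, text, seconds):
    """One-line summary: model name, latency, and answer length in words."""
    return f"{name}: {seconds:.2f}s, {len(text.split())} words"


# Stand-in for chain.invoke so the sketch runs without a model server.
def fake_invoke(payload):
    return "c = sqrt(a^2 + b^2) for legs a and b"


answer, seconds = timed_invoke(fake_invoke, {"question": "hypotenuse?"})
print(summarize("demo-model", answer, seconds))
```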

Conclusion

Small language models represent a powerful solution for scenarios that require efficiency, speed, and resource optimization without sacrificing performance. By leveraging reduced parameter sizes and efficient architectures, these models are well-suited for applications in resource-constrained environments, real-time processing, and edge computing. While they may not possess the broad capabilities of their larger counterparts, small language models excel in specific tasks such as code generation, question answering, and text summarization.

With advancements in training techniques, like knowledge distillation and pruning, these models are increasingly capable of delivering competitive performance in many practical use cases. Their ability to balance compactness with functionality makes them an invaluable tool for developers and businesses seeking scalable, cost-effective AI solutions.

Key Takeaways

- Small Language Models have fewer parameters (typically under 10 billion), which dramatically reduces the computational costs and energy usage. They focus on specific tasks and are trained on smaller datasets.

- Evaluating small language models on the same task reveals their individual strengths, limitations, and optimal use cases.

- Knowledge distillation, pruning, and quantization are some of the techniques used to create small language models from large language models.

- Small language models are preferable when the task is simple and specific and when available resources are constrained.

- Some of the latest small language models include Meta’s Llama 3.2 models, Microsoft’s Phi-3.5 models, the Qwen 2 (0.5 to 7 billion) models, the Gemma 2 (2 and 9 billion) models, and the Mistral 7B model.

Frequently Asked Questions

A. Small Language Models (SLMs) are language models with fewer parameters, typically under 10 billion, making them more resource-efficient. They are optimized for specific tasks and trained on smaller datasets, balancing performance and computational efficiency. These models are ideal for applications that require fast responses and minimal resource consumption.

A. SLMs are designed to deliver high performance while using significantly less computational power and energy than larger models like GPT-4 or PaLM. Their compact size suits edge devices with limited memory, compute, and energy, enabling scalable, efficient applications.

A. Knowledge distillation involves training smaller models using insights from larger models, enabling compact variants like Llama 3.2 and Gemma 2 to inherit capabilities while remaining resource-efficient.

A. Pruning reduces model size by removing redundant weights or neurons with minimal impact on performance. This directly decreases the model’s complexity.

Quantization, on the other hand, reduces the precision of the model’s parameters, for instance, by using 8-bit integers instead of 32-bit floating-point numbers. This reduces memory usage and increases inference speed without altering the overall structure of the model.
