Artificial intelligence (AI) is evolving rapidly, particularly in multimodal learning. Multimodal models aim to combine visual and textual information so that machines can understand and generate content that requires input from both sources. This capability is vital for tasks such as image captioning, visual question answering, and content creation, where more than a single mode of data is required. While many models have been developed to address these challenges, only a few have effectively aligned the disparate representations of visual and textual data, leading to inefficiencies and suboptimal performance in real-world applications.

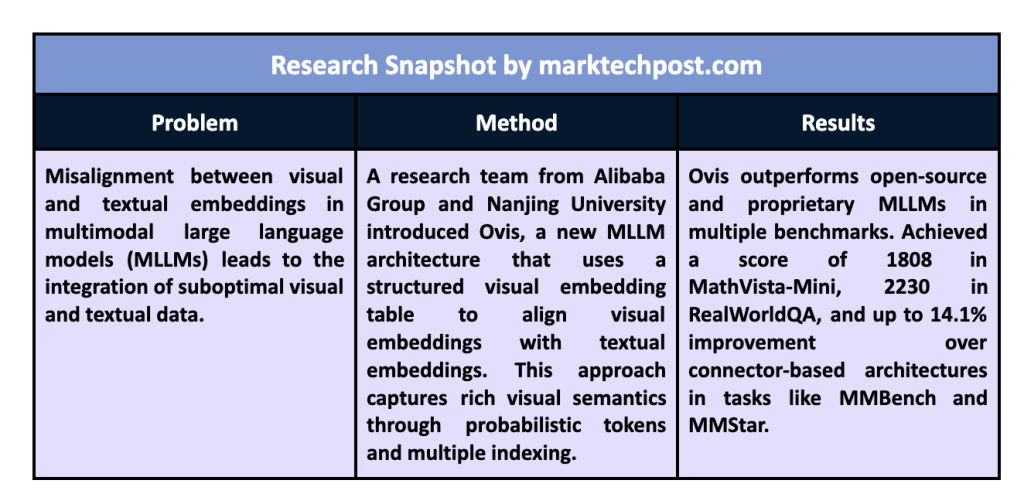

A major challenge in multimodal learning arises from how text and image data are encoded and represented. Textual data is typically represented using embeddings derived from a lookup table, ensuring a structured and consistent format. In contrast, visual data is encoded using vision transformers, which produce unstructured continuous embeddings. This representation discrepancy makes it difficult for existing multimodal models to merge visual and textual data seamlessly. As a result, the models struggle to interpret complex visual and textual relationships, limiting their capabilities in advanced AI applications that require a coherent understanding of multiple data modalities.
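The discrepancy described above can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the three-word vocabulary, the dimensions, and the linear `embed_patch` function, which is only a stand-in for a vision encoder and not a real vision transformer.

```python
import random

random.seed(0)

EMBED_DIM = 4   # toy embedding width (hypothetical)
PATCH_SIZE = 6  # toy number of pixel values per patch (hypothetical)

# Text side: a fixed vocabulary with one embedding row per token id.
vocab = {"a": 0, "cat": 1, "sits": 2}
text_table = [[random.gauss(0, 1) for _ in range(EMBED_DIM)] for _ in vocab]


def embed_text(tokens):
    """Discrete lookup: every occurrence of a token returns the same table row."""
    return [text_table[vocab[token]] for token in tokens]


# Vision side: a linear stand-in for a vision encoder (NOT a real ViT).
# Its output is a continuous function of the pixels, not a row of any table.
encoder_weights = [
    [random.gauss(0, 1) for _ in range(PATCH_SIZE)] for _ in range(EMBED_DIM)
]


def embed_patch(pixels):
    """Continuous encoding: any change in the pixels changes the embedding."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in encoder_weights]


text_vectors = embed_text(["a", "cat", "a"])
patch_a = embed_patch([0.1, 0.2, 0.3, 0.4, 0.5, 0.6])
patch_b = embed_patch([0.1, 0.2, 0.3, 0.4, 0.5, 0.61])  # tiny pixel change
```

In this sketch the two occurrences of `"a"` map to the identical table row, while the two nearly identical patches map to two different vectors. That is the structured-versus-continuous contrast the paragraph describes.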

Traditionally, researchers have attempted to mitigate this problem by using a connector, such as a multilayer perceptron (MLP), to project visual embeddings into a space that can be aligned with textual embeddings. While effective on standard multimodal tasks, this architecture does not resolve the fundamental misalignment between visual and textual embeddings. Leading models such as LLaVA and Mini-Gemini incorporate advanced methods such as cross-attention mechanisms and dual-vision encoders to improve performance. However, they still face limitations due to inherent differences in tokenization and integration strategies, highlighting the need for a novel approach that addresses these issues at a structural level.
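A connector of this kind can be sketched as a small two-layer MLP. This is a minimal illustration, not the implementation used by any particular model: the layer sizes, the GELU activation, the random weights, and the names `mlp_connector`, `W1`, and `W2` are all assumptions.

```python
import math
import random

random.seed(0)

VISION_DIM, HIDDEN_DIM, TEXT_DIM = 6, 8, 4  # toy sizes (hypothetical)


def random_matrix(rows, cols):
    return [[random.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]


def linear(weights, bias, x):
    """Dense layer: one dot product plus bias per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def gelu(x):
    """Tanh approximation of the GELU activation (an assumed choice)."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


# Untrained placeholder weights; a real connector would learn these.
W1, b1 = random_matrix(HIDDEN_DIM, VISION_DIM), [0.0] * HIDDEN_DIM
W2, b2 = random_matrix(TEXT_DIM, HIDDEN_DIM), [0.0] * TEXT_DIM


def mlp_connector(visual_embedding):
    """Project a continuous visual embedding into the text embedding width."""
    hidden = [gelu(h) for h in linear(W1, b1, visual_embedding)]
    return linear(W2, b2, hidden)


patch_embedding = [random.gauss(0, 1) for _ in range(VISION_DIM)]
projected = mlp_connector(patch_embedding)  # now has TEXT_DIM entries
```

The projection only matches the width of the text embeddings. The output is still an arbitrary continuous vector rather than an entry of a table, which is the structural mismatch the paragraph points to.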

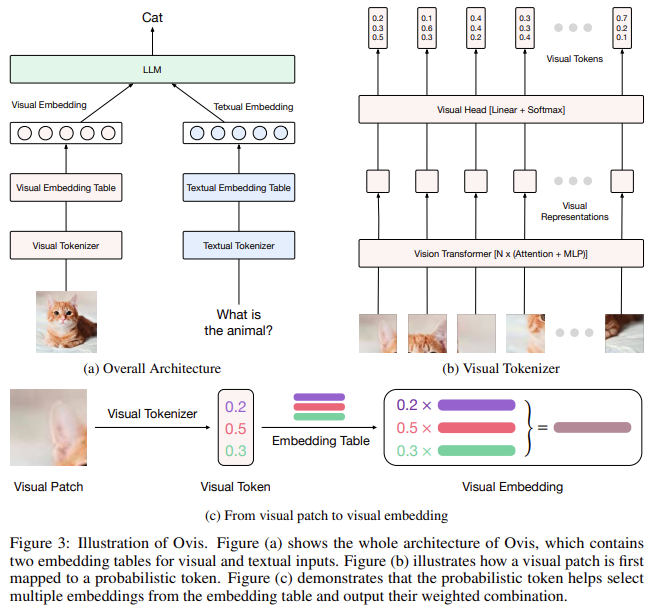

A team of researchers from Alibaba Group and Nanjing University presented a new version of Ovis: Ovis 1.6, a multimodal large language model (MLLM) that structurally aligns visual and textual embeddings to address this challenge. Ovis employs a visual embedding lookup table, similar to the one used for textual embeddings, to create structured visual representations. This table allows the visual encoder to produce embeddings compatible with textual embeddings, resulting in more effective integration of visual and textual information. The model also represents each visual patch as a probabilistic token that indexes the visual embedding table multiple times. This approach mirrors the structured representation used for textual data, facilitating a coherent combination of visual and textual input.

Ovis' main innovation lies in the use of a visual embedding table that aligns visual tokens with their textual counterparts. A probabilistic token represents each image patch and indexes the visual embedding table multiple times to generate a final visual embedding. This process captures the rich semantics of each visual patch and results in embeddings that are structurally similar to textual tokens. Unlike conventional methods, which rely on linear projections to map visual embeddings into a joint space, Ovis takes a probabilistic approach to generate more meaningful visual embeddings. This approach allows Ovis to overcome the limitations of connector-based architectures and achieve better performance on multimodal tasks.
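The mechanism described above can be sketched as follows, under one reading of "indexes the table multiple times": the probabilistic token is a softmax distribution over the rows of the visual embedding table, and the final embedding is the probability-weighted sum of those rows. The sizes, random weights, and names (`head`, `visual_table`, `probabilistic_token`) are hypothetical and are not taken from the Ovis code.

```python
import math
import random

random.seed(0)

FEATURE_DIM, VISUAL_VOCAB, EMBED_DIM = 6, 5, 4  # toy sizes (hypothetical)


def random_matrix(rows, cols):
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


head = random_matrix(VISUAL_VOCAB, FEATURE_DIM)        # patch feature -> logits
visual_table = random_matrix(VISUAL_VOCAB, EMBED_DIM)  # one row per visual word


def probabilistic_token(patch_feature):
    """Turn a patch feature into a distribution over the visual vocabulary."""
    logits = [sum(w * f for w, f in zip(row, patch_feature)) for row in head]
    return softmax(logits)


def visual_embedding(token_probs):
    """Weight every table row by its probability and sum the results."""
    return [
        sum(p * row[d] for p, row in zip(token_probs, visual_table))
        for d in range(EMBED_DIM)
    ]


patch_feature = [random.gauss(0, 1) for _ in range(FEATURE_DIM)]
probs = probabilistic_token(patch_feature)
embedding = visual_embedding(probs)

# A one-hot token reduces to an ordinary lookup of a single table row.
one_hot = [0.0, 0.0, 1.0, 0.0, 0.0]
lookup_like = visual_embedding(one_hot)
```

With a one-hot token the weighted sum returns a single table row, exactly as a textual embedding lookup would. A softer distribution blends several rows, which is how a patch can draw on the table more than once.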

Empirical evaluations of Ovis demonstrate its superiority over other open-source MLLMs of similar sizes. For example, in the MathVista-Mini benchmark, Ovis scored 1,808, significantly higher than its competitors. Similarly, in the RealWorldQA benchmark, Ovis outperformed leading proprietary models such as GPT4V and Qwen-VL-Plus, with a score of 2,230, compared to GPT4V's 2,038. These results highlight Ovis' strength in handling complex multimodal tasks, making it a promising candidate for future advances in the field. The researchers also evaluated Ovis on a number of general multimodal benchmarks, including MMBench and MMStar, where it consistently outperformed models such as Mini-Gemini-HD and Qwen-VL-Chat by a margin of 7.8% to 14.1%, depending on the specific benchmark.

Key research findings:

- Structural alignment: Ovis introduces a novel visual embedding table that structurally aligns visual and textual embeddings, improving the model's ability to process multimodal data.

- Superior performance: Ovis outperforms open source models of similar sizes in several benchmarks, achieving a 14.1% improvement over connector-based architectures.

- High-resolution capabilities: The model excels in tasks that require visual understanding of high-resolution images, such as the RealWorldQA benchmark, where it scored 2,230, beating GPT4V by 192 points.

- Scalability: Ovis demonstrates consistent performance at different parameter levels (7B, 14B), making it adaptable to various model sizes and computational resources.

- Practical applications: With its advanced multimodal capabilities, Ovis can be applied to complex and challenging real-world scenarios, including visual question answering and image captioning, where existing models struggle.

In conclusion, the researchers have addressed the longstanding misalignment between visual and textual embeddings. By introducing a structured visual embedding strategy, Ovis enables more effective multimodal data integration, improving performance across multiple tasks. The model's ability to outperform proprietary and open-source models of similar parameter scales, such as Qwen-VL-Max, underscores its potential as a new standard in multimodal learning. The research team's approach offers an important step forward in the development of MLLMs, providing new avenues for future research and applications.

Check out the Paper, GitHub, and HF model (Ovis1.6-Gemma2-9B). All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.
