Image by Author

As large language models (LLMs) such as GPT-3.5, LLaMA2, and PaLM2 grow ever larger in scale, fine-tuning them on downstream natural language processing (NLP) tasks becomes increasingly computationally expensive and memory intensive.

Parameter-Efficient Fine-Tuning (PEFT) methods address these issues by fine-tuning only a small number of extra parameters while freezing most of the pretrained model. This helps mitigate catastrophic forgetting in large models and enables fine-tuning with limited compute.

PEFT has proven effective for tasks like image classification and text generation while training just a fraction of the parameters. For methods such as LoRA, the small tuned weights can simply be added to the original pretrained weights.

You can even fine-tune LLMs on the free version of Google Colab by combining 4-bit quantization with PEFT, an approach known as QLoRA.

The modular nature of PEFT also allows the same pretrained model to be adapted to multiple tasks by adding small task-specific weights, avoiding the need to store a full copy of the model for each task.
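To make this modularity concrete, here is a toy NumPy sketch (an illustration under assumed sizes, not the PEFT library's implementation) in which two hypothetical tasks share one frozen weight matrix and each keeps only a pair of low-rank factors, the LoRA approach described later in this article. Switching tasks means switching the small factors rather than loading another full model.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r = 64, 64, 2   # toy sizes (assumption), far smaller than a real LLM layer

# One frozen pretrained weight matrix, shared by every task
W_base = rng.standard_normal((d_out, d_in))

# Hypothetical task names; each adapter stores only two small factors (B, A)
adapters = {
    task: (rng.standard_normal((d_out, r)), rng.standard_normal((r, d_in)))
    for task in ["summarize", "translate"]
}

def forward(x, task=None):
    """Shared base weight plus the selected task's low-rank update (if any)."""
    y = W_base @ x
    if task is not None:
        B, A = adapters[task]
        y = y + B @ (A @ x)
    return y

x = rng.standard_normal(d_in)
y_sum = forward(x, "summarize")
y_tr = forward(x, "translate")

# Storage: one base matrix plus small factors vs. one full copy per task
shared = W_base.size + sum(B.size + A.size for B, A in adapters.values())
full_copies = W_base.size * len(adapters)
print(shared, full_copies)
```

Even at this toy scale, the shared layout stores 4,608 numbers instead of 8,192, and the gap widens as layers grow and tasks are added.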

The PEFT library integrates popular PEFT techniques like LoRA, Prefix Tuning, AdaLoRA, Prompt Tuning, MultiTask Prompt Tuning, and LoHa with Transformers and Accelerate. This provides easy access to cutting-edge large language models with efficient and scalable fine-tuning.

In this tutorial, we will use one of the most popular parameter-efficient fine-tuning (PEFT) techniques, LoRA (Low-Rank Adaptation of Large Language Models). LoRA significantly speeds up the fine-tuning of large language models while consuming less memory.

The key idea behind LoRA is to represent the weight update as the product of two smaller matrices, obtained through low-rank decomposition. Only these two matrices are trained to adapt to the new data, which keeps the number of trainable parameters small. The original weight matrix stays frozen and is not modified. The final output is obtained by combining the original weights with the low-rank update.
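The idea can be sketched in a few lines of NumPy. This is a minimal illustration with assumed toy dimensions, not PEFT's code: the frozen weight `W` is left untouched, the trainable factors `A` and `B` produce a low-rank update scaled by `alpha / r` (using the `r=64`, `lora_alpha=16` values from the configuration later in this tutorial), and `B` starts at zero so the adapted layer initially behaves exactly like the original one. The training loop that would update `A` and `B` is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

d, k = 1024, 1024    # toy layer size (assumption); real Llama 2 7B projections are larger
r, alpha = 64, 16    # rank and scaling, matching the LoraConfig used later in this tutorial
scaling = alpha / r  # the update is scaled by alpha / r, here 0.25

W = rng.standard_normal((d, k))          # frozen pretrained weight, never updated
A = rng.standard_normal((r, k)) * 0.01   # trainable down-projection, small random init
B = np.zeros((d, r))                     # trainable up-projection, zero init

def lora_forward(x):
    """h = W x + (alpha / r) * B (A x); the product B @ A is never formed explicitly."""
    return W @ x + scaling * (B @ (A @ x))

x = rng.standard_normal(k)
# Because B starts at zero, the adapted layer initially matches the frozen layer
print(np.allclose(lora_forward(x), W @ x))

full_update = W.size            # parameters a full fine-tune would update in this layer
lora_update = A.size + B.size   # parameters LoRA trains instead
print(f"{full_update:,} vs {lora_update:,} trainable parameters")
```

For this layer, LoRA trains 131,072 parameters instead of 1,048,576.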

There are several advantages to using LoRA. Firstly, it greatly enhances the efficiency of fine-tuning by reducing the number of trainable parameters. Additionally, LoRA is compatible with various other parameter-efficient methods and can be combined with them. Models fine-tuned using LoRA demonstrate performance comparable to fully fine-tuned models. Importantly, LoRA doesn’t introduce any additional inference latency since adapter weights can be seamlessly merged with the base model.
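The "no extra inference latency" point follows from simple linear algebra: once training is done, the scaled product of the two factors can be folded into the frozen matrix, leaving a single matrix of the original shape. The toy NumPy check below (assumed sizes, random stand-ins for trained factors) confirms that the merged and unmerged forms give the same outputs.

```python
import numpy as np

rng = np.random.default_rng(1)
d, k, r, alpha = 256, 256, 8, 16   # toy sizes (assumption)
scaling = alpha / r

W = rng.standard_normal((d, k))   # frozen base weight
A = rng.standard_normal((r, k))   # stand-ins for trained LoRA factors
B = rng.standard_normal((d, r))

X = rng.standard_normal((k, 4))   # a small batch of inputs, one per column

# Unmerged: base path plus a separate low-rank adapter path
y_adapter = W @ X + scaling * (B @ (A @ X))

# Merged: fold the update into one matrix once, then run a single matmul as before
W_merged = W + scaling * (B @ A)
y_merged = W_merged @ X

print(np.allclose(y_adapter, y_merged))
print(W_merged.shape == W.shape)   # same shape, so inference cost is unchanged
```

The merge costs one matrix product up front; afterwards the model runs exactly as fast as the base model.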

There are many use cases of PEFT, from language models to image classifiers. You can find all of the use-case tutorials in the official documentation:

- StackLLaMA: A hands-on guide to train LLaMA with RLHF

- Finetune-opt-bnb-peft

- Efficient flan-t5-xxl training with LoRA and Hugging Face

- DreamBooth fine-tuning with LoRA

- Image classification using LoRA

In this section, we will learn how to load and wrap our transformer model using the `bitsandbytes` and `peft` libraries. We will also cover loading the saved fine-tuned QLoRA model and running inference with it.

Getting Started

First, we will install all the necessary libraries.

```python
%%capture
%pip install accelerate peft transformers datasets bitsandbytes
```

Then, we will import the essential modules and name the base model (Llama-2-7b-chat-hf) that we will fine-tune on the mlabonne/guanaco-llama2-1k dataset.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig
from peft import get_peft_model, LoraConfig
import torch

# Base model and fine-tuning dataset on the Hugging Face Hub
model_name = "NousResearch/Llama-2-7b-chat-hf"
dataset_name = "mlabonne/guanaco-llama2-1k"
```

PEFT Configuration

Create the PEFT configuration that we will use to wrap and train our model.

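```python
peft_config = LoraConfig(
    lora_alpha=16,         # scaling factor; the LoRA update is scaled by lora_alpha / r
    lora_dropout=0.1,      # dropout applied to the inputs of the LoRA layers during training
    r=64,                  # rank of the low-rank update matrices
    bias="none",           # do not train bias terms
    task_type="CAUSAL_LM", # causal language modeling (next-token prediction)
)
```

4-bit Quantization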

Loading LLMs on consumer or Colab GPUs is challenging because their full-precision weights often do not fit in GPU memory. We can overcome this by quantizing the model to 4 bits with the NF4 data type using BitsAndBytes. This lets us load the model while conserving memory and avoiding out-of-memory crashes.
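A back-of-the-envelope estimate shows why 4-bit loading matters. The sketch below assumes the base parameter count implied by the `print_trainable_parameters()` output shown later in this tutorial (all parameters minus the LoRA parameters) and counts weight storage only. Treat the figures as lower bounds: activations, the KV cache, quantization constants, and layers that bitsandbytes keeps in 16-bit (such as the embeddings) all add to the real footprint.

```python
# Rough weight-memory estimate for Llama 2 7B (weights only).
# Assumption: the base parameter count is derived from the print_trainable_parameters()
# output later in this tutorial (all params minus trainable LoRA params).
base_params = 6_771_970_048 - 33_554_432   # 6,738,415,616

def weight_gb(n_params, bits):
    """Storage for n_params at the given bit width, in GB (1e9 bytes)."""
    return n_params * bits / 8 / 1e9

for name, bits in [("float32", 32), ("float16", 16), ("4-bit", 4)]:
    print(f"{name:>8}: {weight_gb(base_params, bits):5.1f} GB")
```

Going from 16-bit to 4-bit weights cuts the estimate from about 13.5 GB to about 3.4 GB, which is what makes a free Colab GPU workable.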

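```python
# Store weights in 4-bit NF4 format, but run computations in float16
compute_dtype = torch.float16

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,                     # quantize the weights to 4 bits when loading
    bnb_4bit_quant_type="nf4",             # NormalFloat4 data type
    bnb_4bit_compute_dtype=compute_dtype,  # dtype used for matrix multiplications
    bnb_4bit_use_double_quant=False,       # do not also quantize the quantization constants
)
```

Wrapping Base Transformers Model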

To make our model parameter efficient, we will wrap the base transformer model using `get_peft_model`.

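```python
# Load the base model with 4-bit quantization; device_map="auto" places it on the available GPU(s)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    quantization_config=bnb_config,
    device_map="auto",
)

# Freeze the base weights and inject trainable LoRA adapters
model = get_peft_model(model, peft_config)
model.print_trainable_parameters()
```

Only about 0.5% of the parameters are trainable, which lets us fine-tune the model faster and with far less memory.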

```
trainable params: 33,554,432 || all params: 6,771,970,048 || trainable%: 0.49548996469513035
```

The next step is to train the model. You can do that by following the 4-bit quantization and QLoRA guide.
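The trainable-parameter count printed above can be reproduced by hand. This sketch rests on assumptions not stated in the tutorial: Llama 2 7B has 32 decoder layers with hidden size 4096, and because our `LoraConfig` sets no `target_modules`, PEFT falls back to its default LoRA targets for Llama, the `q_proj` and `v_proj` attention projections (each 4096 x 4096). A LoRA pair for a `d_out x d_in` layer adds `r * d_in + d_out * r` parameters.

```python
# Reproduce the trainable-parameter count from print_trainable_parameters().
# Assumptions: 32 decoder layers, hidden size 4096, and PEFT's default Llama
# LoRA targets (q_proj and v_proj), each a 4096 x 4096 linear layer.
hidden = 4096
n_layers = 32
r = 64                   # rank from our LoraConfig
targets_per_layer = 2    # q_proj and v_proj

# A is (r x d_in) and B is (d_out x r)
per_module = r * hidden + hidden * r
trainable = per_module * targets_per_layer * n_layers
print(f"{trainable:,}")

all_params = 6_771_970_048   # from the printed output
print(f"{100 * trainable / all_params:.4f}%")
```

Each adapted projection contributes 524,288 parameters, and 2 projections across 32 layers give 33,554,432, matching the printed value.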

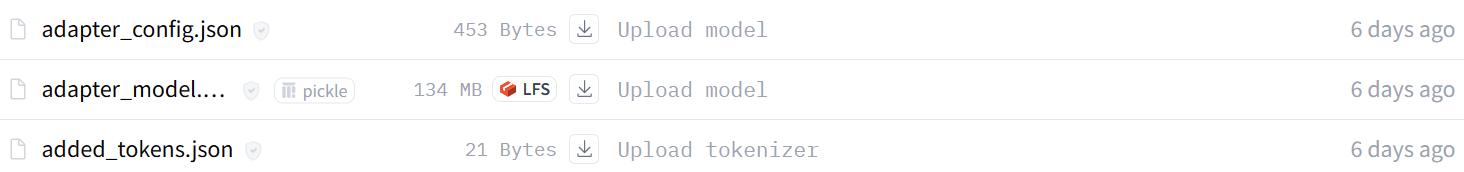

Saving the Model

After training, you can save the model adapter locally.

```python
model.save_pretrained("llama-2-7b-chat-guanaco")
```

Or, push it to the Hugging Face Hub.

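```python
# Authenticate first; $secret_value_0 holds the Hugging Face access token (e.g., a notebook secret)
!huggingface-cli login --token $secret_value_0
model.push_to_hub("llama-2-7b-chat-guanaco")
```

As we can see, the adapter model is just 134 MB, whereas the base Llama 2 7B model is around 13 GB.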
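The two sizes follow from the parameter counts reported by `print_trainable_parameters()`. This sketch assumes the adapter weights are stored in float32 (4 bytes per parameter), which is consistent with the reported 134 MB, and that the base model is stored in float16 (2 bytes per parameter); file-format overhead is ignored.

```python
# Why the adapter is only about 134 MB.
# Assumptions: adapter weights saved in float32, base model weights in float16.
trainable_params = 33_554_432
adapter_bytes = trainable_params * 4
print(f"adapter: {adapter_bytes / 1e6:.0f} MB")

base_params = 6_771_970_048 - trainable_params
print(f"base model in float16: {base_params * 2 / 1e9:.1f} GB")
```

The adapter comes out at about 134 MB and the base model at about 13.5 GB, so each additional task adapter costs roughly 1% of the base model's storage.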

Loading the Model

To run inference, we first load the base Llama 2 model with 4-bit quantization and then load the trained PEFT adapter weights on top of it.

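```python
from transformers import AutoModelForCausalLM, AutoTokenizer
from peft import PeftModel
import torch

# Adapter repository on the Hugging Face Hub (pushed in the previous step)
peft_model = "kingabzpro/llama-2-7b-chat-guanaco"

# Reload the 4-bit base model (model_name and bnb_config are defined above)
base_model = AutoModelForCausalLM.from_pretrained(
    model_name,
    quantization_config=bnb_config,
    device_map="auto",
)

# Attach the trained LoRA adapter to the base model
model = PeftModel.from_pretrained(base_model, peft_model)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# device_map="auto" has already placed the model on the GPU, so no .to("cuda") call is needed
model.eval()
```

Inference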

To run inference, we have to write the prompt in the guanaco-llama2-1k dataset style ("[INST] {prompt} [/INST]"). Otherwise, you may get responses in a different language.
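If you query the model repeatedly, it helps to build the template in one place. The helper below is hypothetical (not part of `transformers` or `peft`) and simply wraps a message in the `<s>[INST] ... [/INST]` format described above.

```python
def build_prompt(user_message: str) -> str:
    """Hypothetical helper: wrap a message in the guanaco-llama2-1k / Llama 2 chat style."""
    return f"<s>[INST] {user_message.strip()} [/INST]"

print(build_prompt("What is Hacktoberfest?"))
```

The returned string can be passed straight to the tokenizer in place of the inline f-string.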

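```python
prompt = "What is Hacktoberfest?"
# Tokenize the templated prompt and move the tensors to the GPU
inputs = tokenizer(f"<s>[INST] {prompt} [/INST]", return_tensors="pt").to("cuda")

# Generate without tracking gradients
with torch.no_grad():
    outputs = model.generate(
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        max_new_tokens=100,
    )

print(
    tokenizer.batch_decode(
        outputs.detach().cpu().numpy(), skip_special_tokens=True
    )[0]
)
```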

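The output follows the expected format and reads fluently, but it is not entirely accurate: Hacktoberfest was started by DigitalOcean in 2014, not by an "Open Source Software Institute" in 2017. The response is also cut off mid-sentence because generation stops at `max_new_tokens=100`.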

```
[INST] What is Hacktoberfest? [/INST] Hacktoberfest is an open-source software development event that takes place in October. It was created by the non-profit organization Open Source Software Institute (OSSI) in 2017. The event aims to encourage people to contribute to open-source projects, with the goal of increasing the number of contributors and improving the quality of open-source software.

During Hacktoberfest, participants are encouraged to contribute to open-source
```

Note: If you are facing difficulties while loading the model in Colab, you can check out my notebook: Overview of PEFT.

Parameter-Efficient Fine-Tuning techniques like LoRA enable efficient fine-tuning of large language models by training only a small fraction of their parameters. This avoids expensive full fine-tuning and enables training with limited compute resources. The modular nature of PEFT allows adapting one model to multiple tasks. Quantization to 4-bit precision can further reduce memory usage. Overall, PEFT opens up large language model capabilities to a much wider audience.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.
