Attaching a custom Docker image to an Amazon SageMaker Studio domain involves several steps. First, you must build the image and push it to Amazon Elastic Container Registry (Amazon ECR). You must also make sure that the SageMaker domain execution role has the permissions it needs to pull the image from Amazon ECR. After you push the image to Amazon ECR, you create a custom SageMaker image in the AWS Management Console. Finally, you update the SageMaker domain settings to specify the Amazon Resource Name (ARN) of the custom image. This multi-step process must be repeated manually every time end users create a new custom Docker image that they want to make available in SageMaker Studio.
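To make the manual process concrete, the following Python sketch shows roughly which SageMaker API calls correspond to the console steps, using boto3 SageMaker client methods (`create_image`, `create_image_version`, `describe_image_version`, `create_app_image_config`, `update_domain`). It is an illustration under stated assumptions, not the code used by the solution: the image is assumed to be already pushed to ECR, the role ARN and names are placeholders, the `-config` naming is hypothetical, and the domain is assumed to use KernelGateway apps (newer JupyterLab spaces use `JupyterLabAppSettings` instead). The client is passed in so the function can be exercised without AWS access.

```python
"""Sketch of the manual steps for attaching a custom image to a SageMaker domain.

Assumptions (hypothetical): the image already exists in ECR, `role_arn` can pull
from ECR, and the domain uses KernelGateway apps. `sm` is a boto3 SageMaker
client, for example boto3.client("sagemaker").
"""
import time


def attach_custom_image(sm, domain_id, image_name, ecr_uri, role_arn,
                        kernel_name="python3", poll_seconds=5):
    # 1. Register a SageMaker image backed by a role that can pull from ECR.
    sm.create_image(ImageName=image_name, RoleArn=role_arn)

    # 2. Create an image version from the ECR image URI (boto3 fills ClientToken).
    sm.create_image_version(BaseImage=ecr_uri, ImageName=image_name)

    # Image version creation is asynchronous; wait until it is usable.
    while True:
        status = sm.describe_image_version(ImageName=image_name)["ImageVersionStatus"]
        if status == "CREATED":
            break
        if status == "CREATE_FAILED":
            raise RuntimeError(f"image version for {image_name} failed to build")
        time.sleep(poll_seconds)

    # 3. Tell Studio which kernel to launch inside the image.
    config_name = f"{image_name}-config"  # hypothetical naming convention
    sm.create_app_image_config(
        AppImageConfigName=config_name,
        KernelGatewayImageConfig={
            "KernelSpecs": [{"Name": kernel_name, "DisplayName": image_name}],
        },
    )

    # 4. Attach the image to the domain's default user settings.
    #    This sets CustomImages to a single entry; in real use, merge it with
    #    any images that are already attached.
    sm.update_domain(
        DomainId=domain_id,
        DefaultUserSettings={
            "KernelGatewayAppSettings": {
                "CustomImages": [
                    {"ImageName": image_name, "AppImageConfigName": config_name}
                ]
            }
        },
    )
    return config_name
```

Repeating these calls, plus the ECR push and IAM setup, for every new image is the toil that the pipeline in this post removes.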

In this post, we explain how to automate this process. This approach lets you update your SageMaker configuration, provision custom images, and attach them to SageMaker domains without writing additional infrastructure code. By adopting this automation, you can deploy consistent, standardized analytics environments across your organization, which increases team productivity and reduces the security risks associated with one-off images.

The solution described in this post is aimed at machine learning (ML) engineers and platform teams, who are often responsible for managing and standardizing custom environments at scale across an organization. For individual data scientists looking for a self-service experience, we recommend the native Docker support in SageMaker Studio, as described in Accelerate machine learning workflows with Amazon SageMaker Studio Local Mode and Docker Support. This feature lets data scientists build, test, and deploy custom Docker containers directly within the SageMaker Studio integrated development environment (IDE), so they can iterate on their analytics environments within the familiar SageMaker Studio interface.

Solution overview

The following diagram illustrates the architecture of the solution.

We implemented a pipeline using AWS CodePipeline that automates building a custom Docker image and attaching it to a SageMaker domain. The pipeline first checks out the code base from the GitHub repository and builds custom Docker images based on the configuration declared in the configuration files. After the Docker images are built and pushed to Amazon ECR, the pipeline scans each image for security vulnerabilities. If no critical or high severity vulnerabilities are found, the pipeline continues to the manual approval stage before deployment. After the manual approval is given, the pipeline deploys the SageMaker domain and attaches the custom images to the domain automatically.
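The scan-and-gate step described above can be sketched as a small function over the response of the Amazon ECR `DescribeImageScanFindings` API (for example, `boto3.client("ecr").describe_image_scan_findings(...)`). This is a minimal sketch assuming ECR basic scanning, where a finished scan reports status `COMPLETE` and per-severity totals in `findingSeverityCounts`; the function names are hypothetical, and the solution's CodeBuild project may implement the check differently.

```python
"""Sketch of a vulnerability gate applied after pushing an image to ECR.

Assumption: `scan_response` is a DescribeImageScanFindings response from ECR
basic scanning. Function names are hypothetical.
"""

BLOCKING_SEVERITIES = ("CRITICAL", "HIGH")


def blocking_findings(scan_response, blocking=BLOCKING_SEVERITIES):
    """Return {severity: count} for the severities that should stop the pipeline."""
    status = scan_response.get("imageScanStatus", {}).get("status")
    if status != "COMPLETE":
        # Treat an unfinished or failed scan as a failure rather than a pass.
        raise RuntimeError(f"image scan not complete: {status}")
    counts = scan_response.get("imageScanFindings", {}).get("findingSeverityCounts", {})
    return {sev: counts[sev] for sev in blocking if counts.get(sev, 0) > 0}


def gate(scan_response):
    """True if the image may proceed to the manual approval stage."""
    return not blocking_findings(scan_response)


if __name__ == "__main__":
    response = {
        "imageScanStatus": {"status": "COMPLETE"},
        "imageScanFindings": {"findingSeverityCounts": {"MEDIUM": 3, "HIGH": 1}},
    }
    print(blocking_findings(response))  # {'HIGH': 1}
    print(gate(response))               # False
```

Failing closed on an incomplete scan keeps an image from reaching approval simply because scanning had not finished.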

Prerequisites

Prerequisites for implementing the solution described in this post include:

Implement the solution

Complete the following steps to deploy the solution:

- Sign in to your AWS account using the AWS CLI in a terminal shell (for more details, see Authenticating with Short-Term Credentials for the AWS CLI).

- Run the following command to ensure you are successfully signed in to your AWS account:

- Fork the GitHub repository to your GitHub account.

- Clone the forked repository to your local workstation using the following command:

- Sign in to the console and create an AWS CodeStar connection to the GitHub repository you forked in the previous step. For instructions, see Create a connection to GitHub (console).

- Copy the ARN of the connection you created.

- In the terminal, run the following command to change into the repository directory:

- Run the following command to install the required npm packages:

- Run the following commands in the terminal to run a shell script. The script takes your AWS account ID and AWS Region as input parameters and deploys an AWS CDK stack, which creates components such as CodePipeline, AWS CodeBuild, and an ECR repository. Set the VPC_ID export variable to the ID of an existing VPC. If you don't have a VPC, create one with at least two subnets and use it.

- Run the following command to deploy the AWS infrastructure using AWS CDK v2, and wait for the deployment to complete successfully:

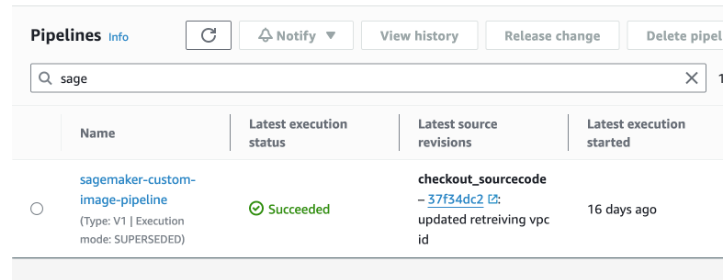
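Before running the deployment script in the steps above, it can help to confirm that you are signed in to the intended account and that the VPC you set as VPC_ID has at least two subnets. The following is a hypothetical pre-flight check, not part of the solution's repository. It assumes boto3-style clients (`boto3.client("sts")` and `boto3.client("ec2")`) and uses the STS `get_caller_identity` and EC2 `describe_subnets` calls; `preflight` and its parameters are illustrative names.

```python
"""Hypothetical pre-flight check before deploying the CDK stack.

Assumes `sts` is boto3.client("sts") and `ec2` is boto3.client("ec2").
"""


def preflight(sts, ec2, vpc_id, expected_account=None, min_subnets=2):
    # get_caller_identity fails if the CLI credentials are missing or expired.
    account = sts.get_caller_identity()["Account"]
    if expected_account and account != expected_account:
        raise RuntimeError(f"signed in to {account}, expected {expected_account}")

    # List the subnets that belong to the VPC passed as VPC_ID.
    subnets = ec2.describe_subnets(
        Filters=[{"Name": "vpc-id", "Values": [vpc_id]}]
    )["Subnets"]
    if len(subnets) < min_subnets:
        raise RuntimeError(
            f"{vpc_id} has {len(subnets)} subnet(s); at least {min_subnets} are required"
        )
    return account, [s["SubnetId"] for s in subnets]
```

In practice you might call it as `preflight(boto3.client("sts"), boto3.client("ec2"), os.environ["VPC_ID"])` right before running the deployment script.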

- In the CodePipeline console, choose Pipelines in the navigation pane.

- Choose the link for the pipeline named sagemaker-custom-image-pipeline.

- You can track the progress of the pipeline in the console and give approval at the manual approval stage to deploy the SageMaker infrastructure. The pipeline takes approximately 5 to 8 minutes to build the image and reach the manual approval stage.

- Wait for the pipeline to complete the deployment stage.
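If you prefer to handle the approval step from a script instead of the console, the following sketch shows one way to do it. It assumes `state` is the response of CodePipeline `GetPipelineState` (for example, `boto3.client("codepipeline").get_pipeline_state(name="sagemaker-custom-image-pipeline")`), in which an open manual approval appears as an in-progress action carrying an approval `token`, and it sends the decision with `PutApprovalResult`. The helper names and the summary text are hypothetical.

```python
"""Sketch: find and approve the manual approval that is waiting in the pipeline.

Assumes `state` is a GetPipelineState response and `codepipeline` is
boto3.client("codepipeline"). Helper names are hypothetical.
"""


def pending_approvals(state):
    """Yield (stage, action, token) for in-progress actions that carry an approval token."""
    for stage in state.get("stageStates", []):
        for action in stage.get("actionStates", []):
            execution = action.get("latestExecution", {})
            if execution.get("status") == "InProgress" and execution.get("token"):
                yield stage["stageName"], action["actionName"], execution["token"]


def approve(codepipeline, pipeline_name, stage, action, token,
            summary="Approved after reviewing scan results"):
    # PutApprovalResult needs the token of the currently open approval request.
    codepipeline.put_approval_result(
        pipelineName=pipeline_name,
        stageName=stage,
        actionName=action,
        result={"summary": summary, "status": "Approved"},
        token=token,
    )
```

Keeping the lookup separate from the approval call lets a reviewer inspect what is pending before anything is approved.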

The pipeline creates infrastructure resources in your AWS account with a SageMaker domain and a custom SageMaker image. It also attaches the custom image to the SageMaker domain.

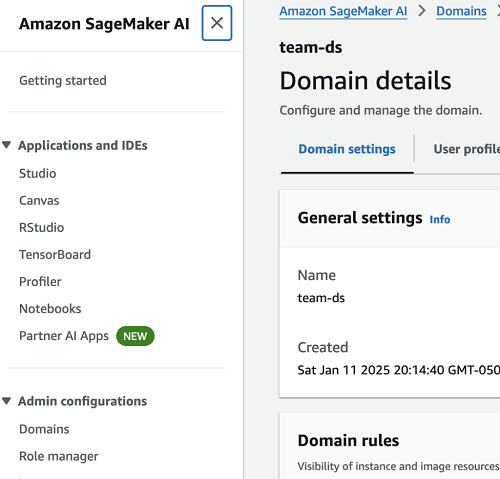

- In the SageMaker console, choose Domains under Admin configurations in the navigation pane.

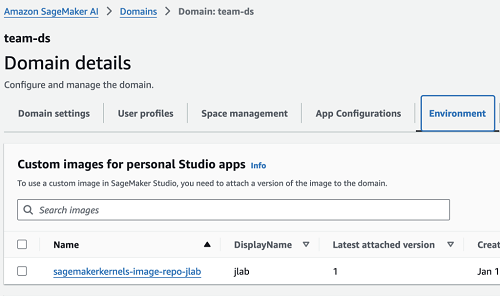

- Open the domain named team-ds and navigate to the Environment tab.

You should see the custom image attached.
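The same check can be done programmatically. The following sketch assumes you pass in the responses of the SageMaker `ListDomains` and `DescribeDomain` APIs from a boto3 client; depending on the Studio experience, custom images may be listed under `KernelGatewayAppSettings` or `JupyterLabAppSettings`, so it reads both. The helper names are hypothetical, and `ListDomains` pagination is ignored for brevity.

```python
"""Sketch: confirm which custom images a SageMaker domain has attached.

Assumes `domains` is a ListDomains response and `domain` is a DescribeDomain
response. Helper names are hypothetical.
"""


def find_domain_id(domains, name="team-ds"):
    for d in domains.get("Domains", []):
        if d.get("DomainName") == name:
            return d["DomainId"]
    raise LookupError(f"domain {name!r} not found")


def attached_images(domain):
    """Return image names attached in the domain's default user settings."""
    settings = domain.get("DefaultUserSettings", {})
    names = []
    for app in ("KernelGatewayAppSettings", "JupyterLabAppSettings"):
        for image in settings.get(app, {}).get("CustomImages", []):
            names.append(image["ImageName"])
    return names
```

With real clients, usage would look like `sm = boto3.client("sagemaker")`, then `attached_images(sm.describe_domain(DomainId=find_domain_id(sm.list_domains())))`.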

How custom images are deployed and attached

CodePipeline has a stage called BuildCustomImages that contains the automated steps to build a custom SageMaker image using the SageMaker Custom Image CLI and push it to the ECR repository created in the AWS account. The AWS CDK stack in the deployment stage contains the steps to create a SageMaker domain and attach the custom image to it. The parameters for creating the SageMaker domain, the custom images, and related resources are configured in JSON format and used by the SageMaker stack in the lib directory. Refer to the sagemakerConfig section in environments/config.json for the declarative parameters.
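To illustrate how declarative parameters can drive the domain settings, the following Python sketch turns a JSON configuration into the `CustomImages` entries that a SageMaker domain expects. The schema is hypothetical: only the `sagemakerConfig` section name, the `customImages` key, and the team-ds domain name come from this post, and the actual stack is an AWS CDK app whose code may look quite different. The `-config` suffix for app image config names is also an assumption.

```python
"""Sketch: mapping declarative JSON parameters to SageMaker domain settings.

Hypothetical schema; the real keys in environments/config.json may differ.
"""
import json

# Hypothetical example of the declarative parameters.
EXAMPLE_CONFIG = """
{
  "sagemakerConfig": {
    "domain": {
      "domainName": "team-ds",
      "customImages": ["custom"]
    }
  }
}
"""


def domain_settings_from_config(config_text, config_suffix="-config"):
    """Return (domain name, CustomImages entries) derived from the JSON config."""
    domain = json.loads(config_text)["sagemakerConfig"]["domain"]
    custom_images = [
        # Assumes one app image config per image, named "<image><suffix>".
        {"ImageName": name, "AppImageConfigName": f"{name}{config_suffix}"}
        for name in domain.get("customImages", [])
    ]
    return domain["domainName"], custom_images


if __name__ == "__main__":
    name, images = domain_settings_from_config(EXAMPLE_CONFIG)
    # Prints: team-ds [{'ImageName': 'custom', 'AppImageConfigName': 'custom-config'}]
    print(name, images)
```

Keeping image names in one declarative file means adding an image is a configuration change rather than a code change, which is what lets the pipeline pick it up on the next push.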

Add more custom images

You can now add your own custom Docker image to attach to the SageMaker domain created by the pipeline. For the requirements that custom images must meet, see Dockerfile specifications.

- In the terminal, change into the images directory of the repository:

- Create a new directory (for example, custom) in the images directory:

- Add your own Dockerfile to this directory. For testing, you can use the following Dockerfile configuration:

- Update the images section of the JSON file in the environments directory to add the name of the new image directory you created:

- Add the same image name to customImages under the settings of the SageMaker domain:

- Commit and push changes to the GitHub repository.

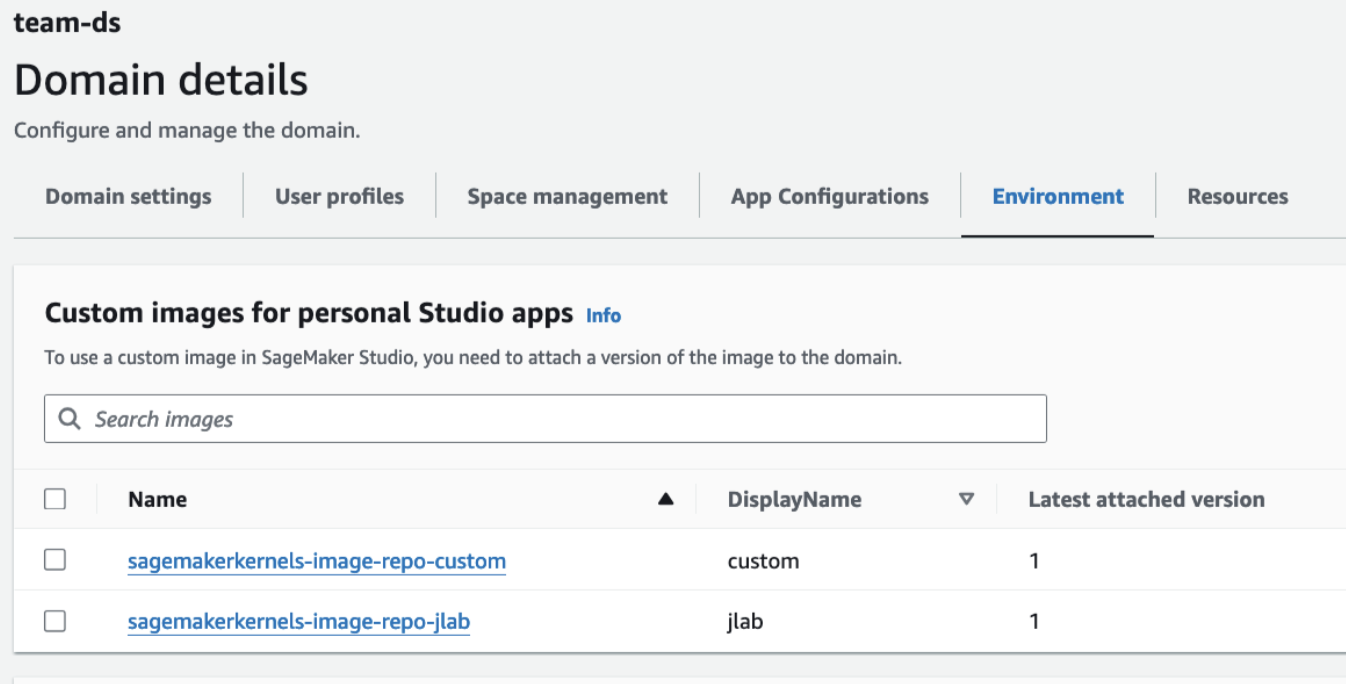

- The push should trigger the pipeline in CodePipeline. Track the pipeline's progress and provide manual approval for deployment.
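Because a missing Dockerfile or a mismatched image name only surfaces once the pipeline runs, a local check before committing can save a pipeline cycle. The following is a hypothetical pre-push check, not part of the solution. It assumes the layout described in the steps above (one subdirectory per image under images/, each containing a Dockerfile) and a config shape where image names appear in a top-level `images` list and in `sagemakerConfig.domain.customImages`; adjust the key paths to match the actual file in the environments directory.

```python
"""Hypothetical pre-push check for adding a custom image.

Assumes images/<name>/Dockerfile per image, and a config with a top-level
"images" list and sagemakerConfig.domain.customImages (hypothetical keys).
"""
import json
from pathlib import Path


def check_images(repo_root, config_path):
    """Return a list of human-readable problems; an empty list means the check passed."""
    root = Path(repo_root)
    config = json.loads(Path(config_path).read_text())
    built = set(config.get("images", []))
    attached = set(config["sagemakerConfig"]["domain"].get("customImages", []))

    problems = []
    # Every image the pipeline should build needs its own Dockerfile.
    for name in sorted(built):
        if not (root / "images" / name / "Dockerfile").is_file():
            problems.append(f"images/{name}/Dockerfile is missing")
    # Every image attached to the domain should also be built by the pipeline.
    for name in sorted(attached - built):
        problems.append(f"{name} is attached to the domain but not listed under images")
    return problems
```

Returning a list of problems instead of raising on the first one lets you fix every mismatch in a single pass before pushing.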

Once the deployment completes successfully, you should be able to see that the custom image you added is attached to the domain configuration (as shown in the screenshot below).

Clean up

To clean up your resources, open the AWS CloudFormation console and delete the stacks SagemakerImageStack and PipelineStack, in that order. If you encounter errors such as “The S3 bucket is not empty” or “The ECR repository has images”, manually delete the S3 bucket and the ECR repository that were created, and then retry deleting the CloudFormation stacks.
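The cleanup order above can also be scripted. The following sketch assumes boto3 clients for CloudFormation and Amazon ECR; it deletes the two stacks in the stated order, waiting for each deletion to finish with the `stack_delete_complete` waiter, and includes an optional helper that empties an ECR repository (the repository name is not given in this post, so it is a parameter you must supply). The helper names are hypothetical, and this is not part of the solution's repository.

```python
"""Sketch of the cleanup order using boto3-style clients.

Assumes `cfn` is boto3.client("cloudformation") and `ecr` is boto3.client("ecr").
Emptying ECR is only needed if stack deletion fails because a repository still
contains images. Helper names are hypothetical.
"""

STACKS_IN_ORDER = ("SagemakerImageStack", "PipelineStack")


def empty_ecr_repository(ecr, repository_name):
    image_ids = []
    for page in ecr.get_paginator("list_images").paginate(repositoryName=repository_name):
        image_ids.extend(page["imageIds"])
    # BatchDeleteImage accepts at most 100 image IDs per call.
    for i in range(0, len(image_ids), 100):
        ecr.batch_delete_image(repositoryName=repository_name, imageIds=image_ids[i:i + 100])


def delete_stacks(cfn, stacks=STACKS_IN_ORDER):
    for name in stacks:
        cfn.delete_stack(StackName=name)
        # Wait for each deletion so PipelineStack is removed only after SagemakerImageStack.
        cfn.get_waiter("stack_delete_complete").wait(StackName=name)
```

The waiter raises an error if a deletion fails, which stops the loop before the second stack is touched.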

Conclusion

In this post, we showed how to create an automated continuous integration and delivery (CI/CD) pipeline that builds, scans, and deploys custom Docker images to SageMaker Studio domains. You can use this solution to promote consistent analytics environments for data science teams across your enterprise. This approach helps you achieve ML governance, scalability, and standardization.

About the authors

Muni Annachi is a Senior DevOps Consultant at AWS with more than a decade of experience in architecting and implementing software systems and cloud platforms. He specializes in guiding nonprofit organizations to adopt DevOps CI/CD architectures, adhering to AWS best practices and the AWS Well-Architected Framework. Outside of work, Muni is passionate about sports and enjoys trying his hand at cooking.

Ajay Raghunathan is a Machine Learning Engineer at AWS. His current work focuses on designing and implementing ML solutions at scale. He is a technology enthusiast and builder with a core area of interest in AI/ML, data analytics, serverless, and DevOps. Outside of work, he enjoys spending time with family, traveling, and playing soccer.

Arun Dyasani is a Senior Cloud Application Architect at AWS. His current work focuses on the design and implementation of innovative software solutions. His role focuses on creating robust architectures for complex applications, leveraging his deep knowledge and experience in developing large-scale systems.

Shweta Singh is a Senior Product Manager on the Amazon SageMaker machine learning platform team at AWS, leading the SageMaker Python SDK. Shweta has worked in various product roles at Amazon for over 5 years and holds a bachelor's degree in Computer Engineering and a Master of Science in Financial Engineering, both from New York University.

Jenna Eun is a Senior Practice Manager for the Healthcare and Advanced Computing team at AWS Professional Services. Jenna's team focuses on designing and delivering data, machine learning, and advanced computing solutions for the public sector, including federal, state, and local governments, academic medical centers, nonprofit healthcare organizations, and research institutions.

Meenakshi Ponn Shankaran is a Principal Domain Architect at AWS in the Data and Machine Learning Professional Services organization. He has extensive experience designing and building large-scale data lakes, handling petabytes of data. Currently, he is focused on providing technical leadership to AWS US public sector customers, guiding them in using innovative AWS services to meet their strategic objectives and unlock the full potential of their data.
